Three conditions for high-performance computing

Decoding the human genome is far from the only task in high-performance computing (HPC). Scientists compute physical fields, engineers model aircraft components, financiers build economic models, and all of them analyze big data, train neural networks, and run many other complex calculations.

The three conditions for HPC are enormous processing power, high-capacity and fast storage, and high network bandwidth. That is why HPC workloads are normally run either in a company's own data center (on-premises) or in a cloud provider's infrastructure.

But not all companies have their own data centers, and those that do often lose out to commercial data centers on resource efficiency: they need capital expenditure to buy and upgrade hardware and software, must pay highly qualified staff, and so on. Cloud providers, by contrast, offer IT resources under a pay-as-you-go operating-expense model, charging rent only for the time the resources are actually used. When the computations are finished, the servers can be deleted from the account, which saves IT budget. However, if laws or corporate policy prohibit transferring data to a provider, HPC in the cloud is not an option.
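The trade-off described above comes down to simple arithmetic. Owned hardware costs the same whether it is busy or idle, while pay-as-you-go billing scales with the hours actually used. The Python sketch below computes the yearly usage at which the two break even. The class and function names and every figure in the example are hypothetical, and the model deliberately ignores data-transfer fees, discounts, and the residual value of hardware.

```python
from dataclasses import dataclass


@dataclass
class OnPremCluster:
    """Hypothetical owned HPC cluster; all amounts in arbitrary currency units."""
    capex: float           # purchase of hardware and software
    lifetime_years: float  # period over which the purchase is amortized
    yearly_opex: float     # staff, power, maintenance, upgrades

    def yearly_cost(self) -> float:
        # Fixed cost: paid whether the cluster is busy or idle.
        return self.capex / self.lifetime_years + self.yearly_opex


def cloud_yearly_cost(hourly_rate: float, hours_used: float) -> float:
    # Pay-as-you-go: rent is charged only for the hours the servers exist.
    return hourly_rate * hours_used


def break_even_hours(cluster: OnPremCluster, hourly_rate: float) -> float:
    # Above this many compute hours per year, owning becomes cheaper than renting.
    return cluster.yearly_cost() / hourly_rate


if __name__ == "__main__":
    # Hypothetical figures, chosen only to illustrate the calculation.
    cluster = OnPremCluster(capex=300_000, lifetime_years=5, yearly_opex=40_000)
    rate = 25.0
    hours = break_even_hours(cluster, rate)
    print(f"on-prem per year: {cluster.yearly_cost():,.0f}")
    print(f"break-even: {hours:,.0f} h/year ({hours / 8760:.0%} utilization)")
    print(f"cloud for 1,000 h: {cloud_yearly_cost(rate, 1_000):,.0f}")
```

With these made-up numbers, renting is cheaper for any workload below 4,000 compute hours a year (roughly 46% utilization). This matches the article's point that short-lived calculations favor the cloud.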

Private MultiCloud Storage

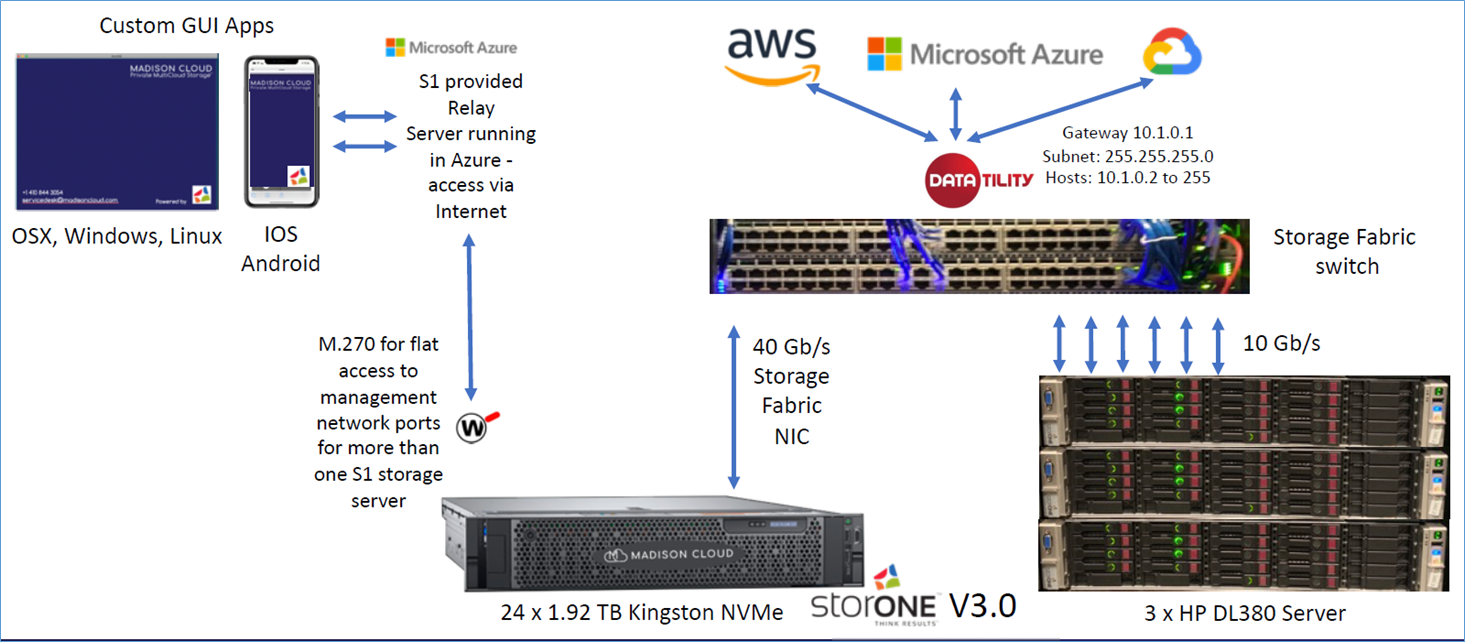

The Private MultiCloud Storage architecture is designed to give access to cloud services while the data itself physically stays on the enterprise's premises or in a separate secure cage in a data center, using a colocation service. In essence, this is a data-centric distributed computing model in which cloud servers work with remote storage systems in a private cloud. As a result, the same local storage can serve the cloud services of the largest providers: AWS, MS Azure, Google Cloud Platform, and others.

To demonstrate a PMCS implementation at Supercomputing 2019, Kingston showed a high-performance storage system built on DC1000M SSDs. It ran StorOne S1, software-defined storage management software from the startup StorOne, and was connected to the major cloud providers over dedicated communication links.

Note that PMCS, as a working model of cloud computing with private storage, targets the North American market, where data centers are well interconnected over AT&T and Equinix infrastructure. For example, the ping between a storage system colocated at any Equinix Cloud Exchange node and the AWS cloud is under 1 millisecond (source: ITProToday).
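Why sub-millisecond latency matters: when compute runs in the cloud and the data stays in colocated storage, every I/O request pays at least one network round trip. By Little's law, the request rate a client can sustain is bounded by the number of requests it keeps in flight divided by the time each request takes. The Python sketch below applies that bound. The device-latency and queue-depth values are hypothetical, and the model ignores bandwidth limits, protocol overhead, and jitter.

```python
def per_request_latency(network_rtt_s: float, device_latency_s: float) -> float:
    """Time for one remote I/O: a network round trip plus the storage service time."""
    return network_rtt_s + device_latency_s


def max_iops(outstanding_requests: int, latency_s: float) -> float:
    """Little's law bound: throughput = requests in flight / time per request."""
    return outstanding_requests / latency_s


if __name__ == "__main__":
    device = 100e-6  # hypothetical NVMe read service time: 100 microseconds
    for rtt_ms in (0.5, 1.0, 10.0):  # hypothetical round-trip times
        latency = per_request_latency(rtt_ms / 1000, device)
        for qd in (1, 32, 256):  # hypothetical number of requests in flight
            print(f"RTT {rtt_ms:>4} ms, {qd:>3} in flight: "
                  f"{max_iops(qd, latency):>10,.0f} IOPS max")
```

With a 1 ms round trip and a single request in flight, the bound is about 909 IOPS. Keeping more requests outstanding or shortening the round trip raises it proportionally, which is why low-latency interconnects between colocation sites and cloud regions are central to this architecture.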

In the PMCS demonstration at the exhibition, the DC1000M NVMe storage system was colocated, and virtual machines in the AWS, MS Azure, and Google Cloud Platform clouds pinged one another. A client-server application worked remotely with the Kingston storage system and HP DL380 servers in the data center and reached the cloud platforms of these major providers over Equinix's interconnection infrastructure.

Slide from the presentation of Private MultiCloud Storage at Supercomputing-2019. Source: Kingston

Several companies offer similar software for managing private multi-cloud storage, and the architecture goes by different names, such as Private MultiCloud Storage or Private Storage for Cloud.

“Today's supercomputers run a host of HPC applications at the forefront of everything from oil and gas exploration to weather forecasting, financial markets, and the development of new technologies,” said Keith Schimmenti, enterprise SSD business manager at Kingston. “These HPC applications require a much closer match between processor performance and I/O speed. We are proud to talk about how Kingston's solutions help enable breakthroughs in computing, delivering the performance needed in the most extreme computing environments and applications in the world.”

The DC1000M drive and an example storage system built on it

Kingston designed the DC1000M U.2 NVMe SSD for data centers, specifically for data-intensive and HPC workloads such as artificial intelligence (AI) and machine learning (ML) applications.

3.84TB DC1000M U.2 NVMe drive. Source: Kingston

The DC1000M U.2 drives use 96-layer Intel 3D NAND flash managed by a Silicon Motion SM2270 controller (PCIe 3.0, NVMe). The SM2270 is a 16-channel enterprise NVMe controller with a PCIe 3.0 x8 interface, a dual 32-bit DRAM data bus, and three dual-core Arm Cortex-R5 processors.
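To put the PCIe 3.0 interface in perspective: each PCIe 3.0 lane signals at 8 GT/s with 128b/130b encoding, so usable bandwidth per direction grows linearly with the lane count. The Python sketch below computes the theoretical ceiling for the controller's x8 interface and, for comparison, for an x4 link. The link width a particular drive actually uses depends on the product and the slot, and real throughput is lower because of protocol overhead.

```python
PCIE3_GT_PER_S = 8e9        # PCIe 3.0 raw rate per lane: 8 gigatransfers/s
PCIE3_ENCODING = 128 / 130  # 128b/130b line encoding efficiency


def pcie3_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s (10**9 bytes/s)."""
    payload_bits_per_s = PCIE3_GT_PER_S * PCIE3_ENCODING * lanes
    return payload_bits_per_s / 8 / 1e9  # bits -> bytes -> gigabytes


if __name__ == "__main__":
    for lanes in (4, 8):
        print(f"PCIe 3.0 x{lanes}: {pcie3_bandwidth_gb_s(lanes):.2f} GB/s")
```

An x8 link tops out at roughly 7.9 GB/s per direction, and an x4 link at roughly 3.9 GB/s.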

The DC1000M is available in capacities from 0.96 to 7.68 TB (3.84 and 7.68 TB are considered the most popular). Drive performance is rated at 800,000 IOPS.

Storage system with 10x DC1000M U.2 NVMe 7.68 TB. Source: Kingston

As an example of a storage system for HPC applications, Kingston showed a rack-mount solution with ten 7.68 TB DC1000M U.2 NVMe drives at Supercomputing 2019. The system is built on the SB122A-PH, a 1U platform from AIC, with two Intel Xeon E5-2660 processors and 128 GB (8 × 16 GB) of Kingston DDR4-2400 DRAM (part number KSM24RS4/16HAI). It runs Ubuntu 18.04.3 LTS with Linux kernel 5.0.0-31. A test with gfio 3.13 (the graphical front end of fio, the Flexible I/O Tester) showed read performance of 5.8 million IOPS at a throughput of 23.8 GB/s.
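The IOPS and throughput figures are linked by the request size: throughput = IOPS × bytes per request. The source does not state the block size used in the fio test. The Python sketch below assumes 4 KiB random reads, a common choice for IOPS tests. Under that assumption, 5.8 million IOPS corresponds to about 23.8 gigabytes (not gigabits) per second. The sketch also compares the result with ten drives at the rated 800,000 IOPS each.

```python
KIB = 1024


def throughput_bytes_per_s(iops: float, block_size_bytes: int) -> float:
    """Throughput implied by an I/O rate at a fixed request size."""
    return iops * block_size_bytes


if __name__ == "__main__":
    measured_iops = 5.8e6         # fio result reported for the 10-drive system
    block_size = 4 * KIB          # ASSUMPTION: 4 KiB requests; not stated in the source
    drives = 10
    rated_iops_per_drive = 800e3  # DC1000M rating quoted in the text

    gb_s = throughput_bytes_per_s(measured_iops, block_size) / 1e9
    print(f"implied throughput: {gb_s:.1f} GB/s ({gb_s * 8:.0f} Gbit/s)")
    print(f"per drive: {measured_iops / drives:,.0f} IOPS")
    share = measured_iops / (drives * rated_iops_per_drive)
    print(f"share of rated aggregate: {share:.1%}")
```

Under the 4 KiB assumption, each drive contributes about 580,000 IOPS, or roughly 72.5% of the combined rated figure. A different block size would scale the implied throughput proportionally.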

The system sustained 5.8 million read IOPS (input/output operations per second). That is roughly two orders of magnitude more than SSDs for mass-market systems deliver, and read rates of this order are what HPC applications running on specialized processors require.

HPC in the cloud with private storage in Russia

Running high-performance computations at a provider while keeping the data physically on-premises is also relevant for Russian companies. Another common case in Russian business is using foreign cloud services while the data must stay in the Russian Federation. We asked Selectel, a cloud provider and longtime Kingston partner, to comment on these scenarios.

“In Russia, you can build a similar architecture, with service in Russian and all the reporting documents needed for the customer's accounting. If a company needs to run high-performance computations on on-premises storage, we at Selectel rent out servers with various compute options, including FPGAs, GPUs, or multi-core CPUs. Through our partners, we can also lay a dedicated fiber-optic link between the customer's office and our data center,” says Alexander Tugov, Director of Service Development at Selectel. “A client can also place its storage in a restricted-access colocation machine room and run applications both on our servers and in the clouds of the global providers AWS, MS Azure, and Google Cloud. Naturally, latency in that case will be higher than if the client's storage were in the USA, but a high-bandwidth multi-cloud connection will be provided.”

In the next article, we will discuss another Kingston solution shown at Supercomputing 2019 (Denver, Colorado, USA), intended for machine learning and big data analytics on graphics processing units (GPUs). This is GPUDirect Storage technology, which transfers data directly between NVMe storage and GPU memory. We will also explain how we achieved a read rate of 5.8 million IOPS in rack-mount NVMe storage.

For more information on Kingston Technology products, visit the company's website.