This material will be useful to JS developers who want a deep understanding of how Node.js and the Event Loop work. With it, you can control the execution flow of a program (for example, a web server) more deliberately and flexibly.

I compiled this article based on my recent report to colleagues.

At the end of the article there are useful materials for independent study.

How Node.js works. Asynchronous capabilities

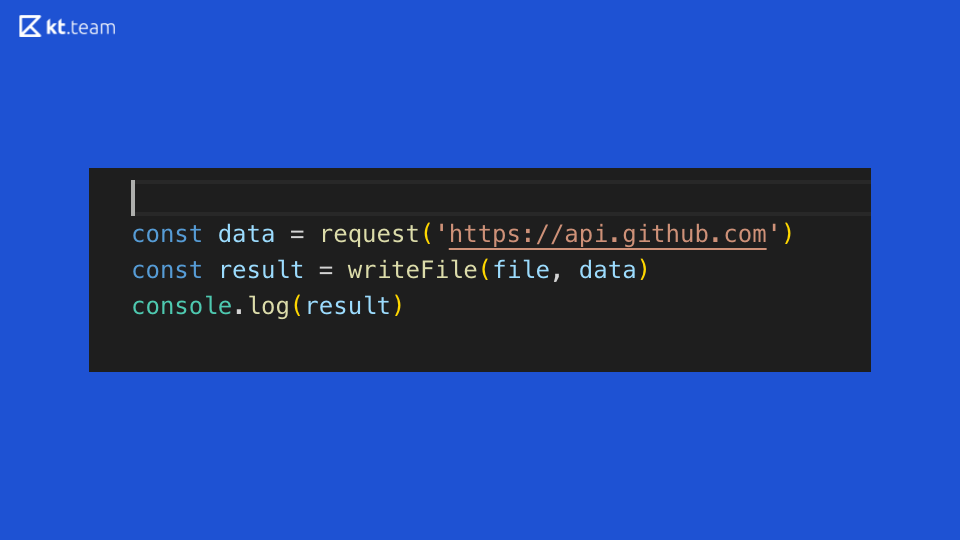

Let's look at this code: it clearly demonstrates synchronous code execution in Node.js. A request is made to GitHub, then a file is read, and the result is printed to the console. What does this synchronous code tell us?

Suppose this is an abstract web server that performs these operations in a route handler. When an incoming request hits this route, we make an outgoing request, read the file, and print the result to the console. For the entire time spent on the request and on reading the file, the server is blocked: it cannot process other incoming requests or perform any other operations.

What are the options for solving this problem?

- Multithreading

- Non-blocking I/O

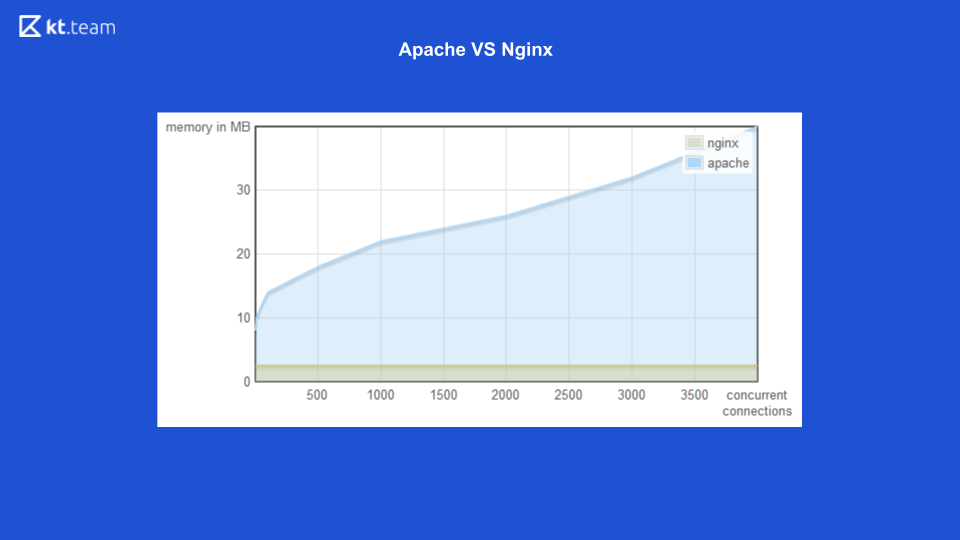

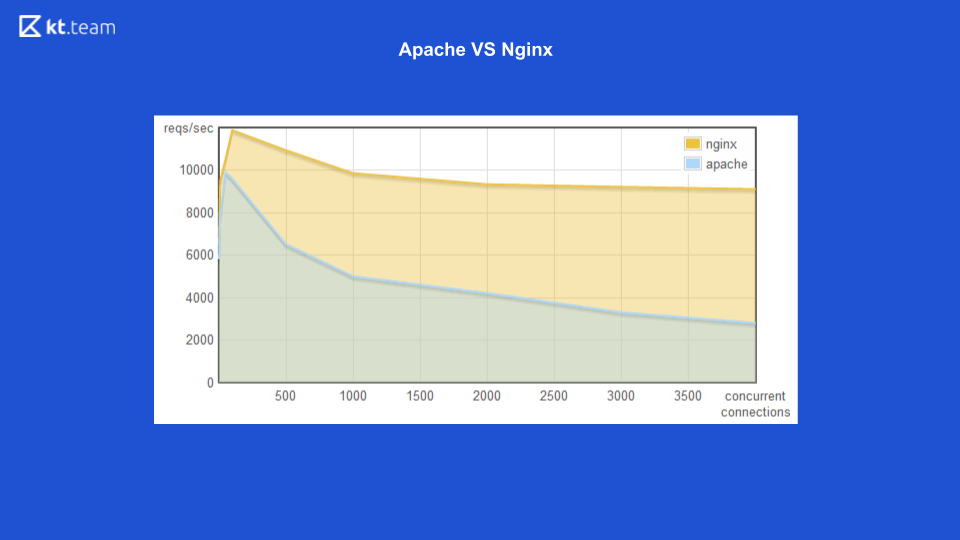

For the first option (multithreading), a good example is the comparison of the Apache and Nginx web servers.

Previously, Apache started a thread for each incoming request: as many threads as there were requests. Nginx had the advantage at that time because it used non-blocking I/O. The first chart shows that as the number of incoming requests grows, the memory consumed by Apache grows too, and the next slide shows that Nginx processes more requests per second at the same number of connections.

This clearly shows that non-blocking I/O is more efficient here.

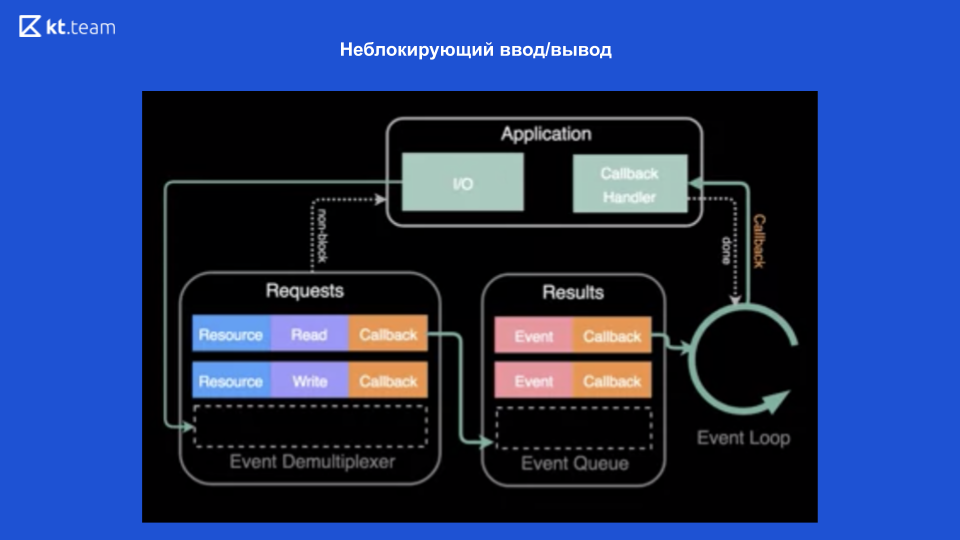

Non-blocking I/O is possible because modern operating systems provide a mechanism for it: the event demultiplexer.

A demultiplexer is a mechanism that receives a request from an application, registers it, and executes it.

The upper part of the diagram shows an application that performs an operation (say, reading a file). To do this, it sends a request to the event demultiplexer, passing the resource (a reference to the file), the desired operation, and a callback. The event demultiplexer registers the request and immediately returns control to the application, so the application is not blocked. The operation on the file is then performed, and once the file has been read, the callback is placed in the execution queue. The Event Loop processes the callbacks from this queue synchronously, one by one, and each callback returns its result to the application. If necessary, the cycle then repeats.

Thus, thanks to non-blocking I/O, Node.js can be asynchronous.

To clarify: in this case non-blocking I/O is provided by the operating system. By I/O operations (non-blocking or otherwise) we mean network requests and work with files.
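The flow described above can be sketched with the built-in fs module. This is a minimal illustration rather than the code from the slides; the scratch file name is made up so that the snippet is self-contained.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file, created only so the sketch is self-contained.
const file = path.join(os.tmpdir(), `nonblocking-demo-${process.pid}.txt`);
fs.writeFileSync(file, 'hello');

const order = [];

// Pass the resource (the path), the operation (read) and a callback.
fs.readFile(file, 'utf8', (err, data) => {
  if (err) throw err;
  order.push(`callback: ${data}`);
  console.log(order); // [ 'after readFile call', 'callback: hello' ]
  fs.unlinkSync(file); // clean up the scratch file
});

// Control returns immediately, before the file has been read.
order.push('after readFile call');
```

The line after the readFile call runs first, which is exactly the "control is returned to the application" step; the callback is picked up by the Event Loop later.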

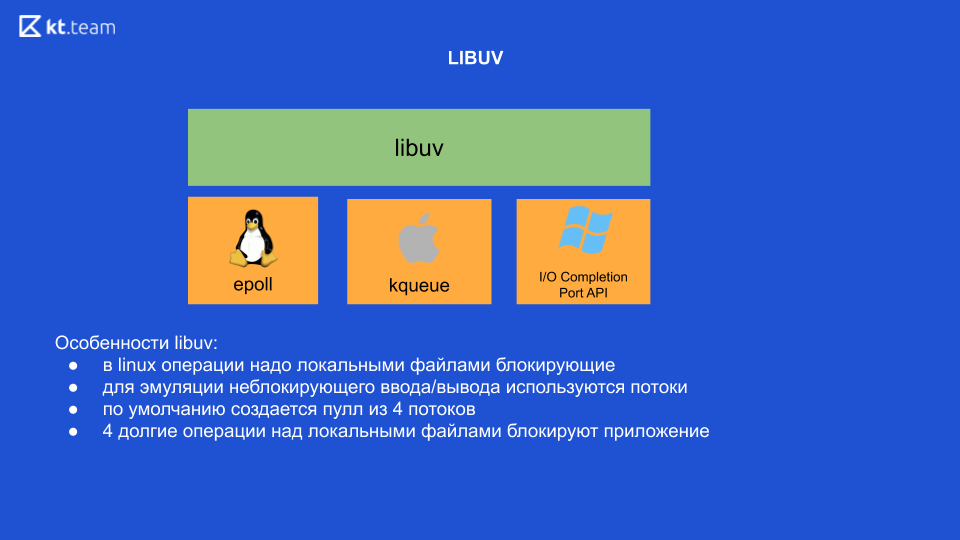

This is the general concept of non-blocking I/O. When the opportunity arose, Ryan Dahl, the creator of Node.js, was inspired by the experience of Nginx, which used non-blocking I/O, and decided to create a platform for developers built around it. The first thing he needed to do was to integrate his platform with the event demultiplexer. The problem was that the demultiplexer is implemented differently in each operating system, so he had to write a wrapper, which later became known as libuv. It is a library written in C that provides a single interface for working with these event demultiplexers.

Libuv library features

On Linux, operations on local files are, at present, essentially always blocking. Non-blocking I/O exists, but not for local files. This is why libuv uses a pool of threads internally to emulate non-blocking file I/O (it takes the same thread-pool approach to file operations on other platforms as well). By default the pool has 4 threads, and its size can be changed with the UV_THREADPOOL_SIZE environment variable. This leads to the most important conclusion: if we start 4 heavy operations on local files, they occupy every thread in the pool, and all other operations that depend on the pool have to wait until one of them finishes.
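A rough way to observe the pool is to start more file reads than there are pool threads and look at when each one completes. This is only a sketch: the scratch file and the timeConcurrentReads helper are made up for the example, and timings on small files are noisy, so no particular output should be expected.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical scratch file (1 MiB of zeros) used only for this demo.
const file = path.join(os.tmpdir(), `threadpool-demo-${process.pid}.bin`);
fs.writeFileSync(file, Buffer.alloc(1024 * 1024));

// Remove the scratch file when the process exits.
process.on('exit', () => {
  try { fs.unlinkSync(file); } catch (err) { /* already removed */ }
});

// Starts `count` concurrent reads and resolves with the time (in ms)
// that passed before each of them completed.
function timeConcurrentReads(count) {
  const started = Date.now();
  const jobs = Array.from({ length: count }, () =>
    fs.promises.readFile(file).then(() => Date.now() - started)
  );
  return Promise.all(jobs);
}

timeConcurrentReads(8).then((durations) => {
  console.log('pool size:', process.env.UV_THREADPOOL_SIZE || '4 (default)');
  console.log('completion times, ms:', durations);
});
```

Running the script with a different pool size, for example UV_THREADPOOL_SIZE=8 node script.js, is one way to see whether the pool is the bottleneck for a given workload.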

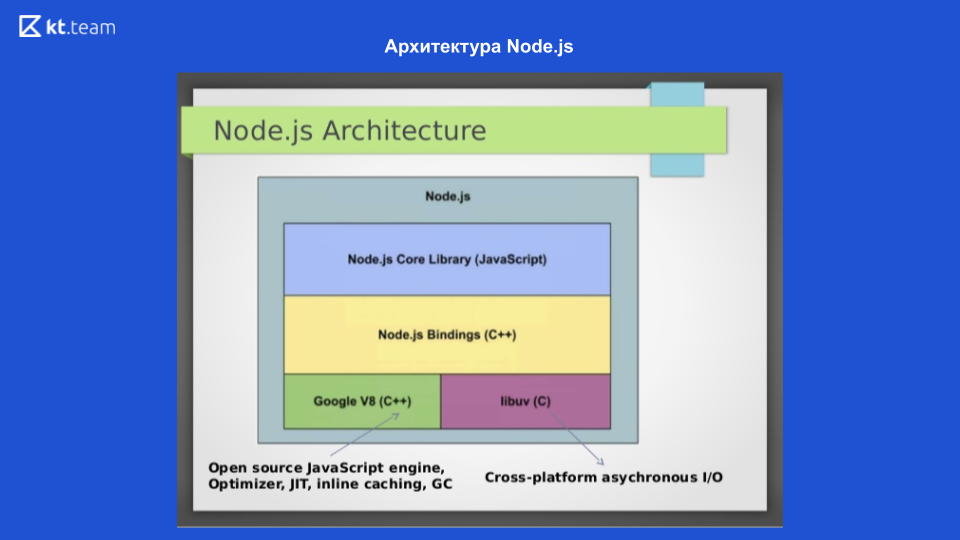

This slide shows the architecture of Node.js. The libuv library, written in C, is used to interact with the operating system. Google's V8 engine compiles JavaScript code into machine code. There is also the Node.js core library, which contains modules for working with network requests, the file system, and logging. Node.js bindings tie all of these together. These 4 components make up the structure of Node.js. The Event Loop mechanism itself lives in libuv.

Event loop

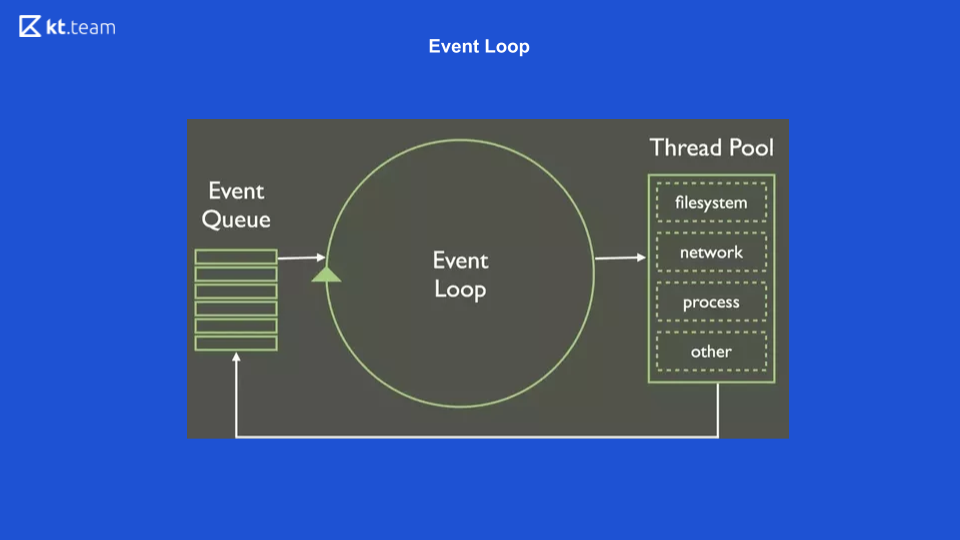

This is the simplest representation of what the Event Loop looks like. There is a queue of events and an endless loop that takes operations from the queue, executes them synchronously, and dispatches them further.
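As a toy model of this picture (not how libuv is actually implemented), the queue and the loop can be sketched in a few lines. The names enqueue and runLoop are invented for the illustration.

```javascript
// Toy model: a queue of callbacks drained one at a time.
const queue = [];
const log = [];

function enqueue(callback) {
  queue.push(callback);
}

// Runs until the queue is empty; each callback is executed synchronously
// and may itself enqueue more work.
function runLoop() {
  while (queue.length > 0) {
    const callback = queue.shift();
    callback();
  }
}

enqueue(() => {
  log.push('first');
  enqueue(() => log.push('third')); // scheduled from inside a callback
});
enqueue(() => log.push('second'));

runLoop();
console.log(log); // [ 'first', 'second', 'third' ]
```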

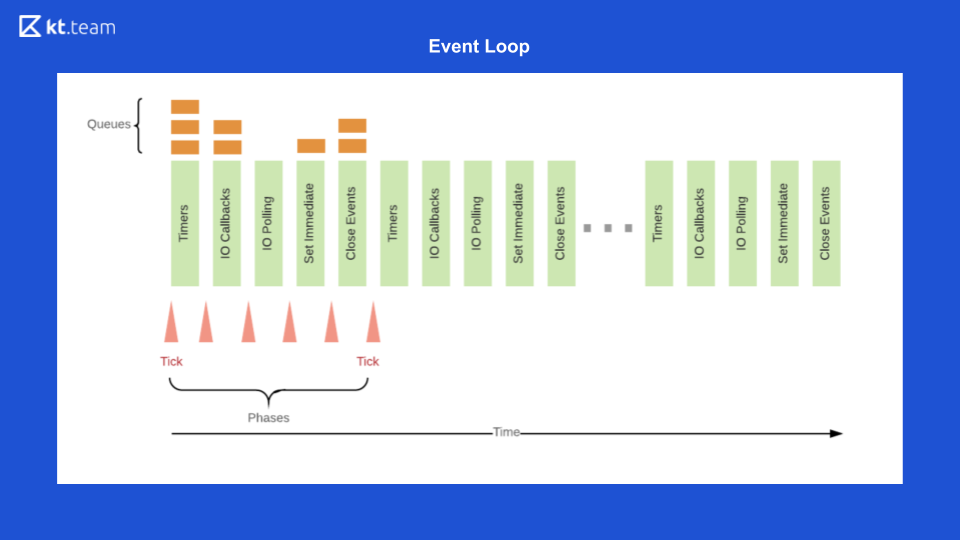

This slide shows what the Event Loop looks like in Node.js itself.

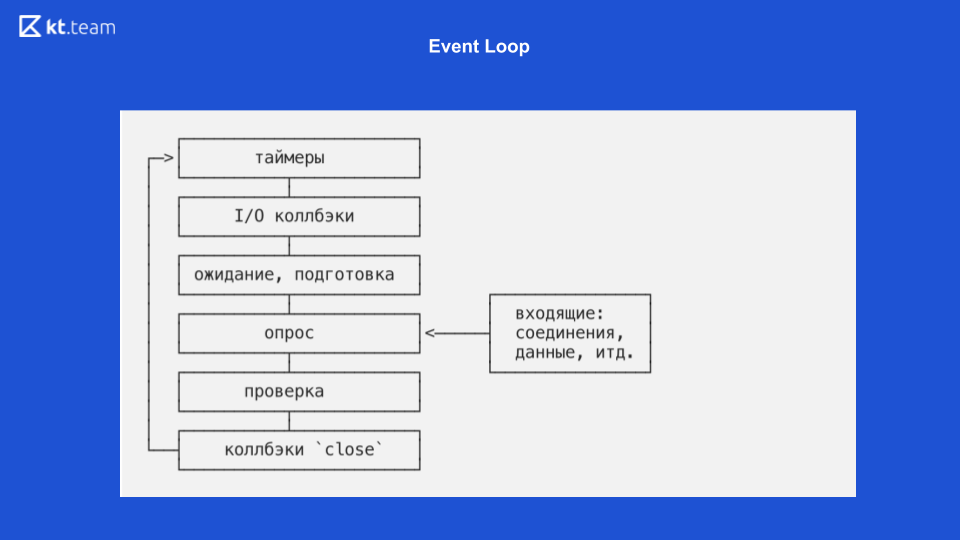

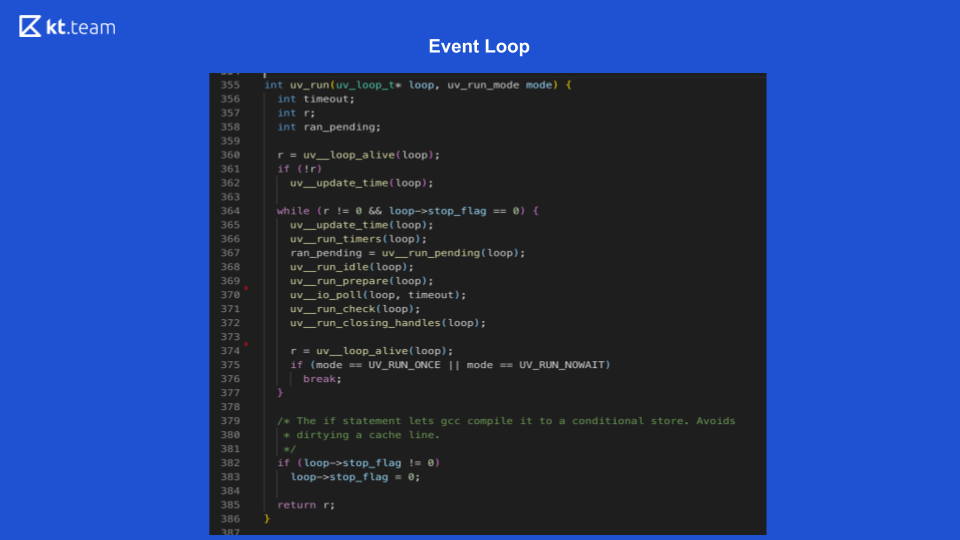

There, the implementation is more interesting and more complicated. Essentially, the Event Loop is a loop that keeps running as long as there is something to do. In Node.js it is divided into several phases. (Compare the phases on slide 8 with the source code on slide 9.)

Phase 1 - Timers

This phase is performed directly by the Event Loop. The uv__update_time call in the code snippet simply updates the time at which the current Event Loop iteration started.

uv__run_timers runs the timers that are due. The timers are kept in a heap (a min-heap), which plays the role of a queue of timers. The timer with the smallest time is taken and compared with the current time of the Event Loop, and if it is due, its callback is executed. Note that Node.js has both setTimeout and setInterval. For libuv they are essentially the same thing, except that setInterval also has the repeat flag.

Accordingly, if a timer has the repeat flag, it is placed back in the timer queue and processed again in the same way.
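From the JavaScript side, the difference between a one-shot and a repeating timer looks like this. It is a small sketch; the 10 ms delay and the limit of three repeats are arbitrary values chosen for the demo.

```javascript
const fired = [];
let repeats = 0;

// One-shot timer: its callback runs once and the timer is gone.
setTimeout(() => fired.push('timeout'), 10);

// Repeating timer: after each run it is scheduled again
// until we cancel it explicitly.
const interval = setInterval(() => {
  repeats += 1;
  fired.push(`interval ${repeats}`);
  if (repeats === 3) {
    clearInterval(interval);
    console.log(fired);
  }
}, 10);
```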

Phase 2 - I/O callbacks

Here we need to go back to the non-blocking I/O diagram.

When the event demultiplexer has read a file and queued the callback, that callback is handled in the I/O callbacks phase. This phase runs the callbacks of non-blocking I/O operations, that is, the functions called after a request to a database or another resource, or after reading or writing a file.

On slide 9, the I/O callbacks are run on line 367: ran_pending = uv__run_pending(loop).

Phase 3 - idle, prepare

These are internal operations, and we cannot influence this phase directly, only indirectly. For example, a process.nextTick callback may happen to run during the idle, prepare phase: process.nextTick callbacks run as part of the current phase, so they can fire in absolutely any phase. Node.js has no ready-made tool for running code specifically in the idle, prepare phase.

On slide 9, lines 368 and 369 correspond to this phase:

uv__run_idle(loop) - idle;

uv__run_prepare(loop) - prepare.
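The point that process.nextTick is not tied to any phase can be illustrated as follows. In this sketch the relative order of the timer and the setImmediate callback is not guaranteed when they are scheduled from the main module, but each nextTick callback should run right after the callback that scheduled it (this assumes Node.js 11 or later).

```javascript
const order = [];

// Scheduled for the timers phase.
setTimeout(() => {
  order.push('timer');
  process.nextTick(() => order.push('nextTick after timer'));
}, 0);

// Scheduled for the check phase.
setImmediate(() => {
  order.push('immediate');
  process.nextTick(() => order.push('nextTick after immediate'));
});

// Print the result once everything above has had time to run.
setTimeout(() => console.log(order), 50);
```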

Phase 4 - poll

This is where all the code we write in JS is executed. All the requests we make initially arrive here, and this is where Node.js can be blocked. If a computationally heavy operation ends up here, the application may simply freeze at this stage and wait until the operation completes.

On slide 9, the poll function is on line 370: uv__io_poll(loop, timeout).
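The freezing effect is easy to reproduce. In this sketch a busy loop stands in for a heavy computation; BUSY_MS is an arbitrary value picked for the demo.

```javascript
const BUSY_MS = 50; // arbitrary duration chosen for the demo
const start = Date.now();
let timerDelay = null;

// Asked to run as soon as possible...
setTimeout(() => {
  timerDelay = Date.now() - start;
  console.log(`timer fired after ${timerDelay} ms`);
}, 0);

// ...but this synchronous loop keeps the thread busy, so the Event Loop
// cannot get to the timer until the loop finishes.
while (Date.now() - start < BUSY_MS) {
  // simulate a heavy computation
}
```

The timer was requested with a delay of 0, yet it cannot fire earlier than BUSY_MS milliseconds after the start, because nothing else runs while the loop is spinning.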

Phase 5 - check

Node.js has the setImmediate timer; its callbacks are executed in this phase.

In the source code, this is line 371: uv__run_check(loop).
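Because the check phase comes right after poll, a setImmediate callback scheduled from inside an I/O callback runs before a 0 ms timer scheduled at the same moment. A small sketch of this, using fs.stat on the current directory simply as a cheap I/O operation:

```javascript
const fs = require('fs');

const order = [];

// Both callbacks are scheduled from inside an I/O callback.
fs.stat('.', () => {
  setTimeout(() => {
    order.push('timeout');
    console.log(order); // [ 'immediate', 'timeout' ]
  }, 0);
  setImmediate(() => order.push('immediate'));
});
```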

Phase 6 (last) - close callbacks

For example, when a web socket needs to close its connection, the callback of that event is called in this phase.

In the source code, this is line 372: uv__run_closing_handles(loop).

To sum up, the Node.js Event Loop works as follows:

First, the timers in the timer queue whose time has come are executed.

Then the I/O callbacks are executed.

Then comes the main code (the poll phase), then the setImmediate callbacks and the close events.

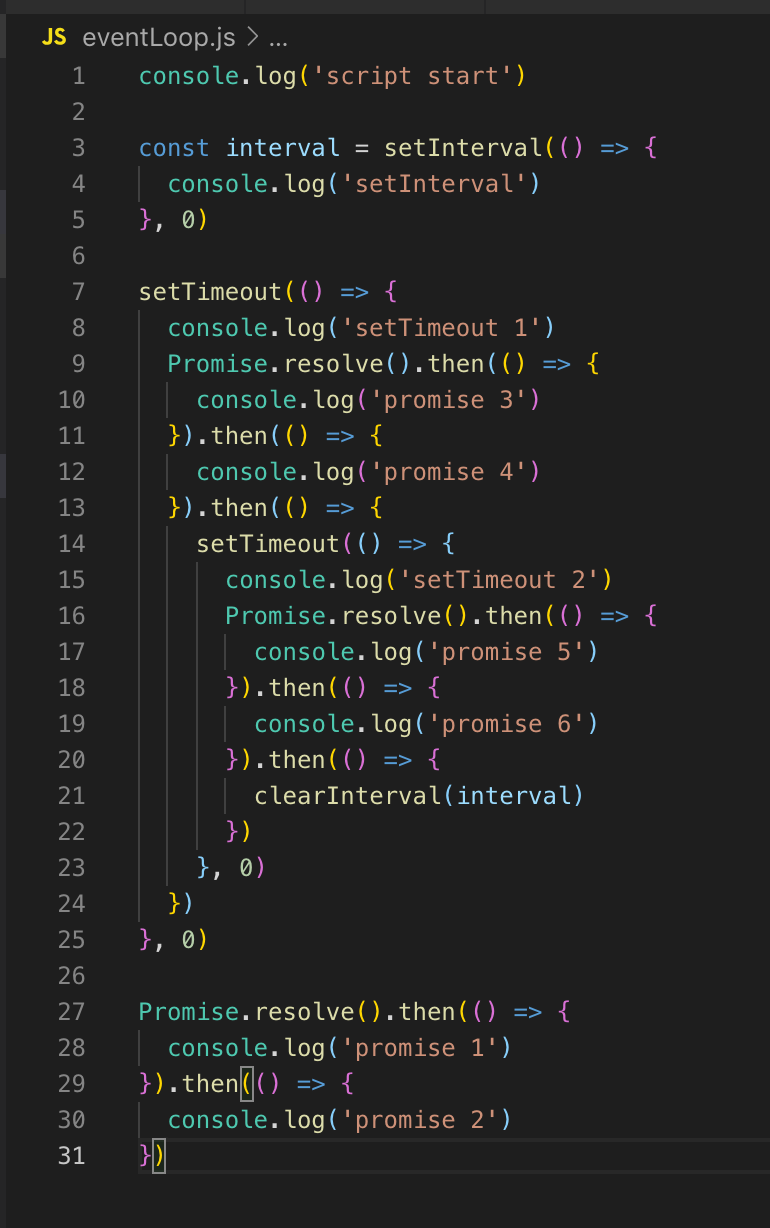

After that, everything repeats in a loop. To demonstrate this, let's open the code. How will it be executed?

There are no timers in the queue yet, so the Event Loop moves on. There are no I/O callbacks either, so we go straight to the poll phase. All the code here is initially executed in the poll phase. So first script_start is printed, then setInterval is placed in the timer queue (not executed, just placed). setTimeout is also placed in the timer queue, and then the promises are executed: first promise 1, then promise 2.

On the next tick of the Event Loop, we return to the timers phase, where the queue already holds 2 timers: setInterval and setTimeout. Both have a delay of 0, so they are ready to run.

setInterval is executed (it prints to the console), then setTimeout 1. There are no non-blocking I/O callbacks, so the poll phase follows, and promise 3 and promise 4 are printed to the console.

Next, the setTimeout 2 timer is registered. This ends the tick, and we move on to the next one. There are timers again: setInterval and setTimeout 2 are printed to the console, followed by promise 5 and promise 6.

We have reviewed the Event Loop and can now talk in more detail about multithreading.
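The code on the slide is not reproduced in the text, so here is a sketch reconstructed from the walkthrough above. The exact code on the slide may differ, and the log helper is added only to keep a record of what was printed.

```javascript
const printed = [];
const log = (message) => {
  printed.push(message);
  console.log(message);
};

log('script_start');

const interval = setInterval(() => log('setInterval'), 0);

setTimeout(() => {
  log('setTimeout 1');
  Promise.resolve()
    .then(() => log('promise 3'))
    .then(() => log('promise 4'))
    .then(() => {
      setTimeout(() => {
        log('setTimeout 2');
        Promise.resolve()
          .then(() => log('promise 5'))
          .then(() => log('promise 6'))
          // Stop the repeating timer so the process can exit.
          .then(() => clearInterval(interval));
      }, 0);
    });
}, 0);

Promise.resolve()
  .then(() => log('promise 1'))
  .then(() => log('promise 2'));
```

On a recent Node.js version the messages should appear in the order described in the walkthrough, with a setInterval line before each of the two setTimeout lines.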

Multithreading - the worker_threads module

Multithreading appeared in Node.js in version 10.5 with the worker_threads module. In version 10 it could only be used with the --experimental-worker flag; starting with version 11 (11.7.0, to be precise) the flag is no longer needed.

Node.js also has the cluster module, but it does not start threads: it starts several additional processes. Its primary goal is application scalability.

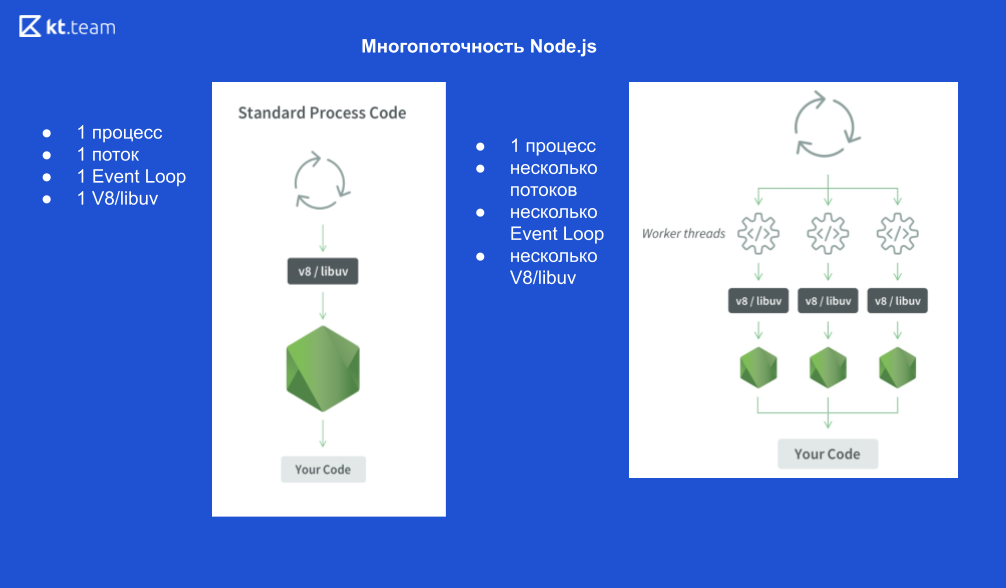

What 1 process looks like:

1 Node.js process, 1 thread, 1 Event Loop, 1 V8 engine, and 1 libuv instance.

If we start X threads, it looks like this:

1 Node.js process, X threads, X Event Loops, X V8 engines, and X libuv instances.

Schematically, it looks like this:

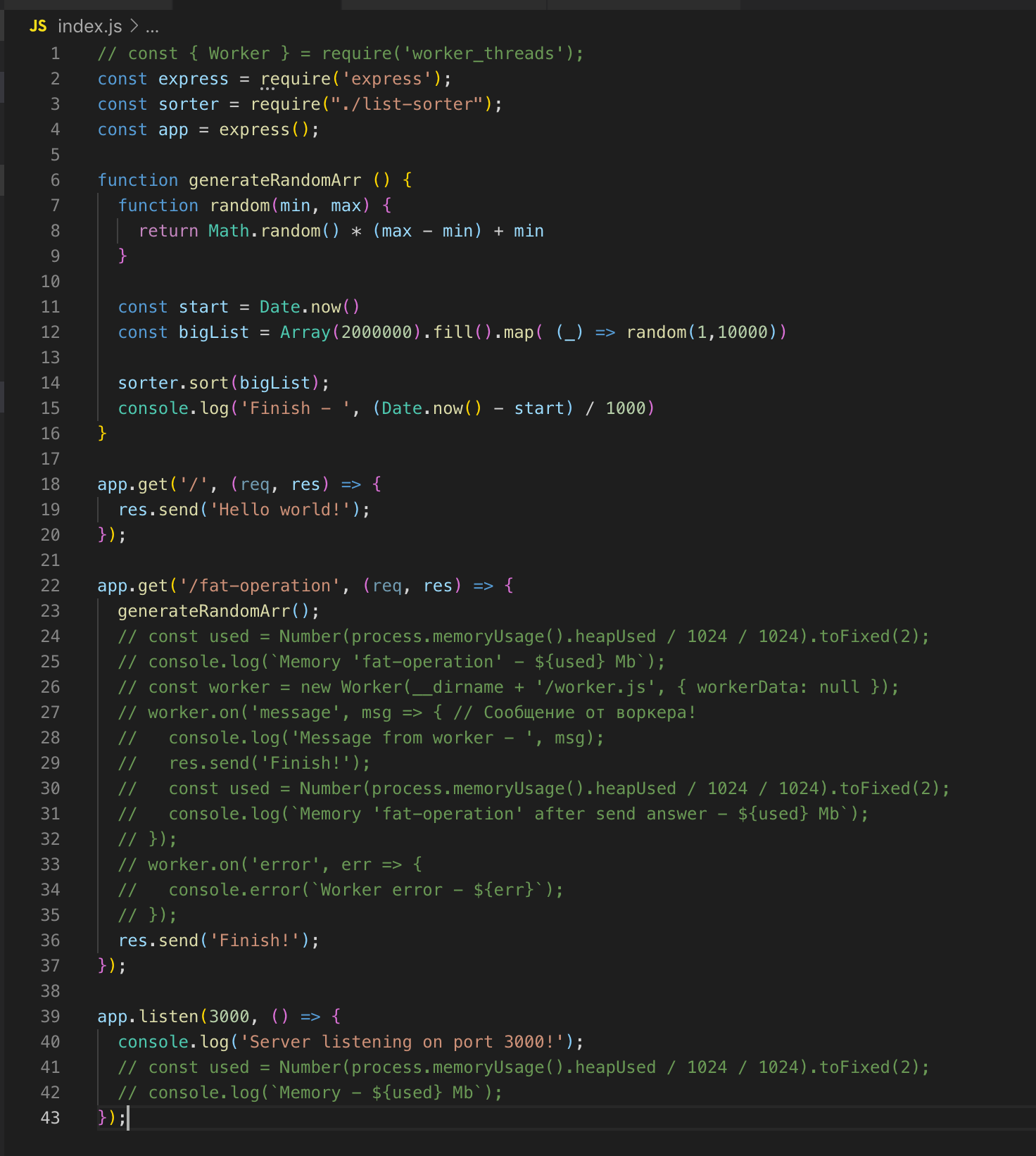

Let's take an example.

The example is a very simple web server built with Express. It has 2 routes: / and /fat-operation.

There is also a function, generateRandomArr(). It fills an array with two million records and sorts it. Let's start the server.

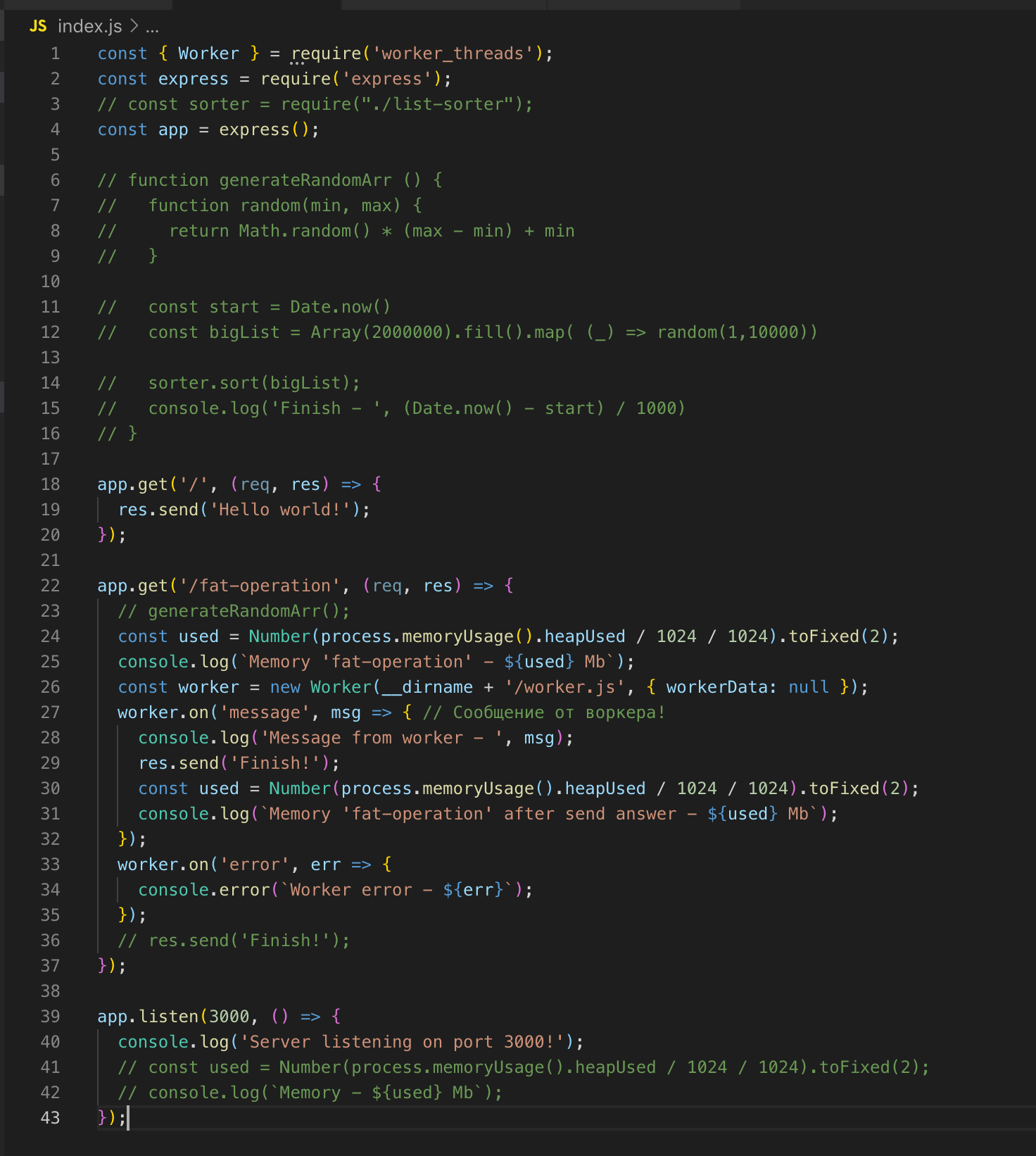

We make a request to /fat-operation. While the array is being sorted, we send another request to the / route, but to get the response we have to wait until the array is sorted. This is a classic single-threaded implementation. Now let's connect the worker_threads module.

We make a request to /fat-operation and then to /, which immediately responds with Hello world!

To sort the array, we started a separate thread that has its own instance of the Event Loop, so it does not affect the execution of code in the main thread.

The thread is destroyed when it has no more operations to perform.

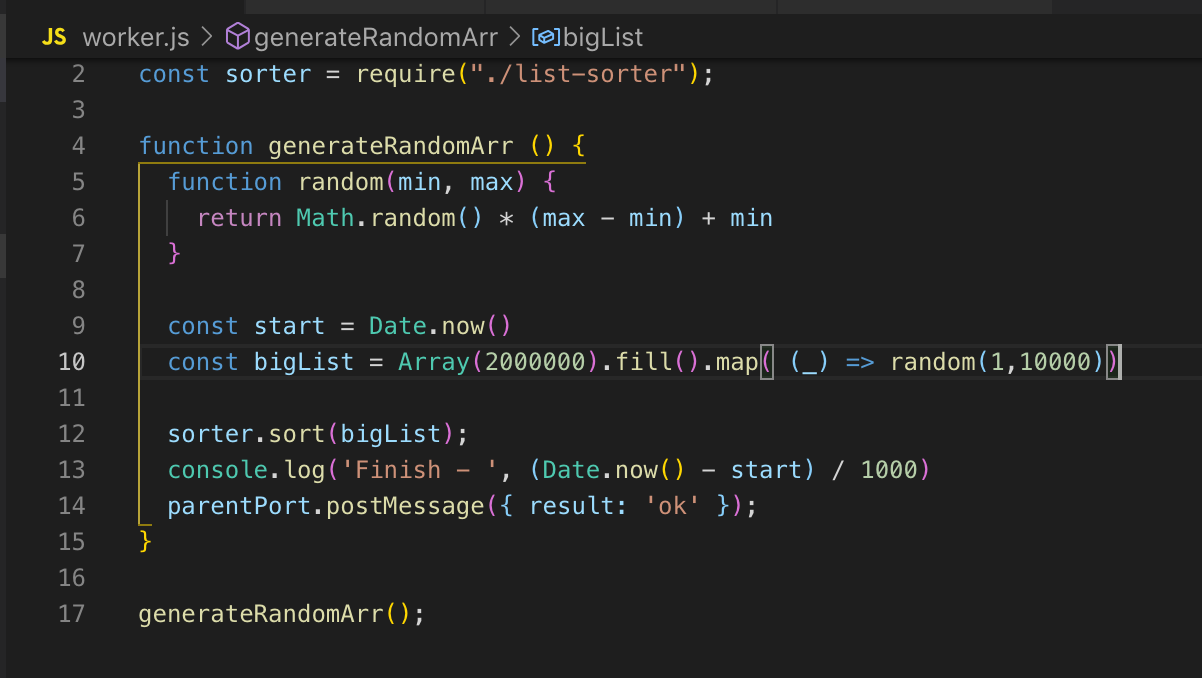

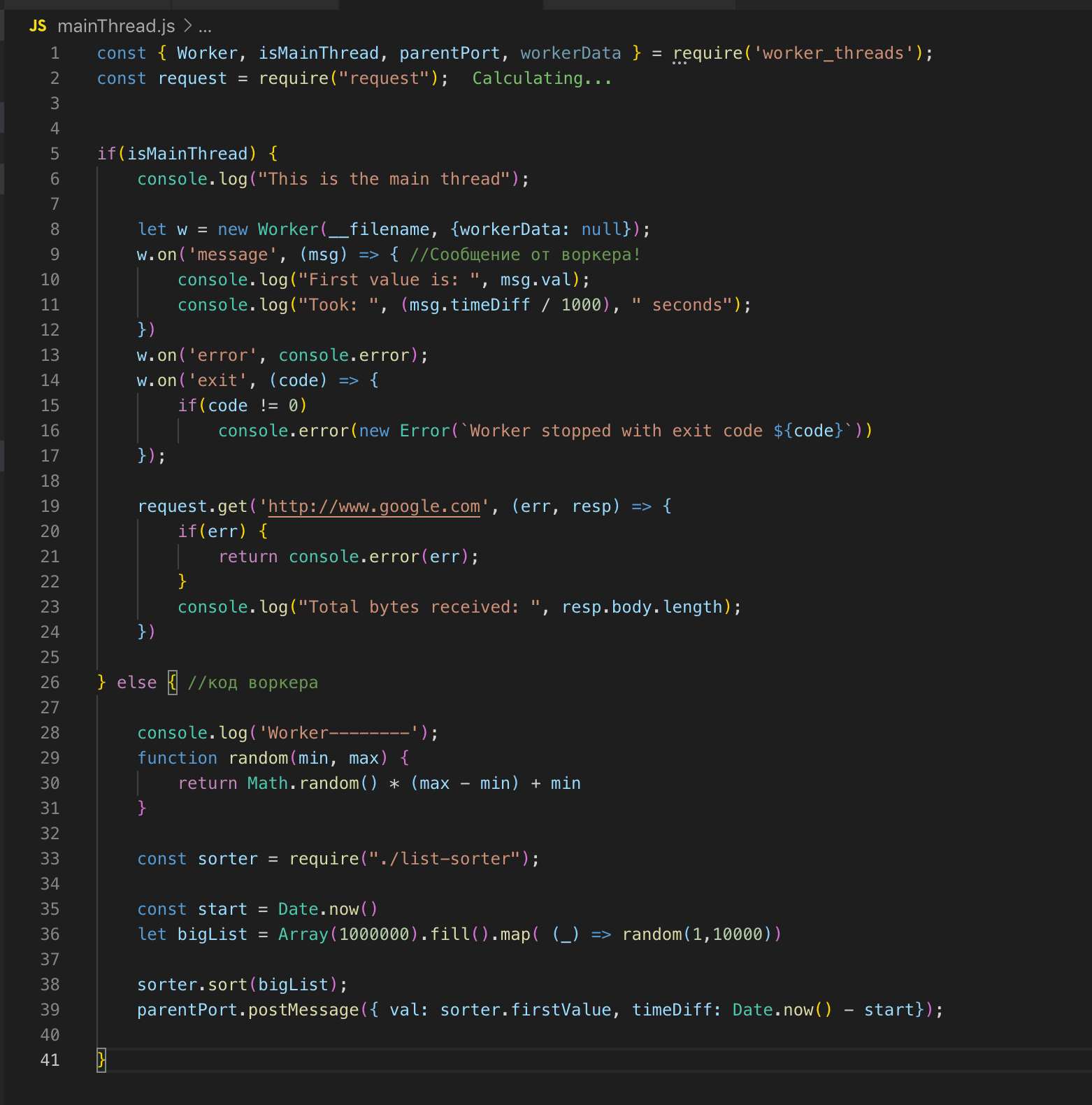

Let's look at the source code. We create the worker on line 26 and, if necessary, pass data to it. In this case, I am not passing anything. Then we subscribe to two events: error and message. The worker itself calls the function, which sorts an array of two million records. As soon as the array is sorted, we send the result ok to the main thread through postMessage.

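In the main thread, we catch this message and finish the request with the result. The worker and the main thread run in the same process, but each worker has its own V8 instance and its own global scope, so ordinary global variables are not shared between them; memory can be shared only explicitly, for example through a SharedArrayBuffer. When we pass data from the main thread to a worker, the worker only gets a copy.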
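The fact that the worker receives a copy can be checked with a short experiment. This is a sketch: incrementInWorker is a hypothetical helper, and the worker code is passed as a string with the eval option only to keep everything in one snippet.

```javascript
const { Worker } = require('worker_threads');

// Code that runs inside the worker thread.
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  workerData.counter += 1; // changes the worker's own copy
  parentPort.postMessage(workerData.counter);
`;

// Hypothetical helper: starts a worker with `data` and resolves with its reply.
function incrementInWorker(data) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: data });
    worker.once('message', resolve);
    worker.once('error', reject);
  });
}

const state = { counter: 0 };

incrementInWorker(state).then((seenByWorker) => {
  // The worker saw 1, but the object in the main thread is untouched.
  console.log(seenByWorker, state.counter); // 1 0
});
```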

We can describe the main thread and the worker thread in one file. The worker_threads module provides an API that tells us in which thread the code is currently executing.
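The whole scheme can be sketched without Express. This is not the code from the slides: sortInWorker is a hypothetical helper, the array size is reduced, and the worker code is passed as a string with the eval option instead of living in a file. The isMainThread flag is the API mentioned above for checking which thread the code is running in.

```javascript
const { Worker, isMainThread } = require('worker_threads');

// Code that runs inside the worker thread: build and sort a random array.
const workerSource = `
  const { parentPort, workerData, isMainThread } = require('worker_threads');
  const arr = Array.from({ length: workerData.size }, () => Math.random());
  arr.sort((a, b) => a - b);
  parentPort.postMessage({ status: 'ok', length: arr.length, isMainThread });
`;

// Hypothetical helper: runs the sort in a separate thread.
function sortInWorker(size) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { size } });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`Worker stopped with exit code ${code}`));
    });
  });
}

// The main thread keeps ticking while the worker is sorting.
let mainThreadTicks = 0;
const ticker = setInterval(() => { mainThreadTicks += 1; }, 1);

sortInWorker(200000).then((result) => {
  clearInterval(ticker);
  console.log('main thread?', isMainThread, 'worker reply:', result);
  console.log('main thread ticks while sorting:', mainThreadTicks);
});
```

In an Express handler, the same promise could be awaited inside the /fat-operation route, which leaves the / route free to respond in the meantime.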

Additional Information

Below are links to useful resources, including Ryan Dahl's presentation in which he introduced the Event Loop (it is interesting to watch).

Event loop

- Translation of an article from Node.js documentation

- https://blog.risingstack.com/node-js-at-scale-understanding-node-js-event-loop/

- https://habr.com/en/post/336498/

Worker_threads