Background

There are vending machines of our own design. Inside the Raspberry Pi and a bit of strapping on a separate board. A coin acceptor, bill acceptor, bank terminal are connected ... A self-written program manages everything. The entire history of the work is written to the journal on a flash drive (MicroSD), which is then transmitted via the Internet (using a USB modem) to the server, where it is added to the database. Sales information is loaded in 1s, there is also a simple web interface for monitoring, etc.

That is, the magazine is vital - for accounting (there revenue, sales, etc.), monitoring (all kinds of failures and other force majeure circumstances); this, you can say, all the information that we have about this machine.

Problem

Flash drives show themselves as very unreliable devices. They fail with enviable regularity. This leads to both machine downtime and (if for some reason the magazine could not be transferred online) to data loss.

This is not the first experience using flash drives, before that there was another project with more than a hundred devices where the magazine was stored on USB flash drives, there were also problems with reliability, at times the number of failures per month was in the tens. We tried various flash drives, including branded ones on SLC memory, and some models are more reliable than others, but replacing flash drives did not solve the problem radically.

Attention! Longrid! If you are not interested in “why,” but are interested only in “how,” you can immediately go to the end of the article.

Decision

The first thing that comes to mind: abandon MicroSD, put, for example, SSD, and boot from it. It is theoretically possible, probably, but relatively expensive, and not so reliable (a USB-SATA adapter is added; on budget SSDs, failure statistics are not happy either).

USB HDD also does not look particularly attractive solution.

Therefore, we came to this option: leave the download from MicroSD, but use them in read-only mode, and store the operation log (and other information unique to a specific piece of hardware - serial number, sensor calibrations, etc) somewhere else.

The topic of read-only FS for raspberries has already been studied along and across, I will not dwell on the implementation details in this article (but if there is interest, maybe I will write a mini-article on this topic) . The only point that I want to note: both from personal experience and from reviews that have already implemented a gain in reliability, is. Yes, it is impossible to completely get rid of breakdowns, but it is quite possible to significantly reduce their frequency. Yes, and the cards become unified, which greatly simplifies the replacement for maintenance personnel.

Hardware

There was no doubt about the choice of memory type - NOR Flash.

Arguments:

- simple connection (most often the SPI bus, the experience of using which is already there, so there are no "iron" problems expected);

- ridiculous price;

- standard operating protocol (the implementation is already in the Linux kernel, if you wish, you can take a third-party, which are also present, or even write your own, the benefit is simple)

- reliability and resource:

from a typical datasheet: data is stored for 20 years, 100,000 erase cycles for each block;

from third-party sources: extremely low BER, it is postulated that there is no need for error correction codes (in some papers ECC for NOR is considered, but usually MLC NOR is meant there, it happens) .

Let us estimate the requirements for volume and resource.

I would like to be guaranteed to save data for several days. This is necessary so that in case of any problems with the connection the sales history is not lost. We will focus on 5 days, during this period (even taking into account weekends and holidays), we can solve the problem.

We are now typing about 100kb of magazine per day (3-4 thousand records), but gradually this figure is growing - detailing is increasing, new events are being added. Plus, sometimes there are bursts (some sensor starts spamming with false positives, for example). We will calculate 10 thousand records of 100 bytes - megabytes per day.

A total of 5MB of clean (well-compressible) data comes out. They also (rough estimate) 1MB of service data.

That is, we need an 8MB microcircuit if you do not use compression, or 4MB if you use it. Quite real numbers for this type of memory.

As for the resource: if we plan that the entire memory will be rewritten no more than once every 5 days, then in 10 years of service we get less than a thousand rewrite cycles.

I recall, the manufacturer promises one hundred thousand.

Today, of course, NAND memory is much more popular, but for this project I would not use it: NAND, unlike NOR, necessarily requires the use of error correction codes, a table of bad blocks, etc., and the legs of NAND chips usually much more.

The disadvantages of NOR include:

- small volume (and, accordingly, high price per megabyte);

- low exchange rate (largely due to the fact that a serial interface is used, usually SPI or I2C);

- slow erase (depending on the size of the block, it takes from fractions of a second to several seconds).

It seems to be nothing critical for us, so continue.

If the details are interesting, the at25df321a chip was chosen (however, this is insignificant, there are a lot of analogs on the market that are compatible with the pinout and the command system; even if we want to put the chip from another manufacturer and / or another volume, everything will work without changing the code) .

I use the driver built into the Linux kernel, on Raspberry, thanks to device tree overlay support, everything is very simple - you need to put the compiled overlay in / boot / overlays and modify /boot/config.txt a bit.

Honestly, I’m not sure what is written without errors, but it works.

/* * Device tree overlay for at25 at spi0.1 */ /dts-v1/; /plugin/; / { compatible = "brcm,bcm2835", "brcm,bcm2836", "brcm,bcm2708", "brcm,bcm2709"; /* disable spi-dev for spi0.1 */ fragment@0 { target = <&spi0>; __overlay__ { status = "okay"; spidev@1{ status = "disabled"; }; }; }; /* the spi config of the at25 */ fragment@1 { target = <&spi0>; __overlay__ { #address-cells = <1>; #size-cells = <0>; flash: m25p80@1 { compatible = "atmel,at25df321a"; reg = <1>; spi-max-frequency = <50000000>; /* default to false: m25p,fast-read ; */ }; }; }; __overrides__ { spimaxfrequency = <&flash>,"spi-max-frequency:0"; fastread = <&flash>,"m25p,fast-read?"; }; };

dtoverlay=at25:spimaxfrequency=50000000

I will omit the description of connecting the chip to the Raspberry Pi. On the one hand, I am not an expert in electronics, on the other hand, everything is trivial even for me: the microcircuit has only 8 legs, of which we need ground, power, SPI (CS, SI, SO, SCK); the levels are the same as those of the Raspberry Pi, no additional strapping is required - just connect the specified 6 contacts.

Formulation of the problem

As usual, the formulation of the problem goes through several iterations, it seems to me that the time has come for the next. So let's stop, put together what has already been written, and clarify the details remaining in the shadows.

So, we decided that the log will be stored in SPI NOR Flash.

This is non-volatile memory with which you can do three operations:

- Reading:

The most common reading: we pass the address and read as many bytes as we need; - Record:

Writing to NOR flash looks like an ordinary one, but it has one peculiarity: you can only change 1 to 0, but not vice versa. For example, if we had 0x55 in the memory cell, then after writing 0x0f to it, 0x05 will already be stored there (see the table below) ; - Erase:

Of course, we need to be able to do the inverse operation - change 0 to 1, which is why the erase operation exists. Unlike the first two, it operates not in bytes, but in blocks (the minimum erase block in the selected microcircuit is 4kb). Erase destroys the entire block and this is the only way to change 0 to 1. Therefore, when working with flash memory, you often have to align data structures to the erase block border.

Record in NOR Flash:

| Binary data | |

|---|---|

| It was | 01010101

|

| Recorded | 00001111

|

| Has become | 00000101

|

The journal itself represents a sequence of variable-length records. A typical recording length is about 30 bytes (although recordings of several kilobytes in length sometimes happen). In this case, we work with them just like a set of bytes, but, if you're interested, CBOR is used inside the records.

In addition to the journal, we need to store some “tuning” information, whether updated or not: a certain device ID, sensor calibration, the “device is temporarily disabled” flag, etc.

This information is a set of key-value records, also stored in CBOR. We do not have much of this information (a few kilobytes maximum), it is updated infrequently.

In the future, we will call it context.

If you recall where this article began, it is very important to ensure the reliability of data storage and, if possible, continuous operation even in the event of hardware failures / data corruption.

What sources of problems can be considered?

- Power off during write / erase operations. This is from the category of "against scrap no reception."

Information from the discussion on stackexchange: when you turn off the power while working with flash, that erase (setting to 1), that write (setting to 0) lead to undefined behavior: data can be written, partially written (say, we transferred 10 bytes / 80 bits , and only 45 bits managed to be recorded), it is also possible that some of the bits will be in the "intermediate" state (reading can produce both 0 and 1); - Errors of the flash memory itself.

BER, although very low, cannot be zero; - Bus errors

Data transmitted via SPI is not protected in any way, it may well happen as single bit errors or synchronization errors - loss or insertion of bits (which leads to massive data corruption); - Other errors / failures

Errors in the code, Raspberry "glitches", alien intervention ...

I formulated requirements, the fulfillment of which, in my opinion, is necessary to ensure reliability:

- Records should be written to the flash memory immediately, pending recording is not considered; - if an error occurs, it should be detected and processed as soon as possible; - the system should, if possible, recover from errors.

(an example from life "as it shouldn't be," which, I think, everyone met: after an emergency restart, the file system "broke" and the operating system does not boot)

Ideas, Approaches, Thoughts

When I started thinking about this task, a bunch of ideas flashed through my head, for example:

- Use data compression

- Use tricky data structures, for example, to store record headers separately from the records themselves, so that if an error occurs in any record, you can read the rest without any problems;

- use bit fields to control the completeness of recording when the power is turned off;

- store checksums for everything and everything;

- use some kind of error-correcting coding.

Some of these ideas were used, some were decided to refuse. Let's go in order.

Data compression

The events that we record in the journal themselves are quite the same and repeatable (“they threw a coin of 5 rubles,” “clicked on the button for issuing change”, ...). Therefore, compression should be quite effective.

The overhead for compression is insignificant (the processor we have is quite powerful, even on the first Pi there was one core with a frequency of 700 MHz, on current models there were several cores with a frequency of more than a gigahertz), the speed of exchange with the storage is low (several megabytes per second), the record size is small. In general, if compression will affect performance, then only positive (absolutely uncritical, just stating) . Plus, we don’t have a real embedded, but ordinary Linux - so the implementation should not require much effort (just link the library and use several functions from it).

A piece of the log was taken from the working device (1.7MB, 70 thousand records) and for a start it was checked for compressibility using the gzip, lz4, lzop, bzip2, xz, zstd available on the computer.

- gzip, xz, zstd showed similar results (40Kb).

I was surprised that the fashionable xz showed itself here at the gzip or zstd level; - lzip with default settings gave a slightly worse result;

- lz4 and lzop showed not very good results (150Kb);

- bzip2 showed surprisingly good results (18Kb).

So, the data is compressed very well.

So (if we do not find fatal flaws) there should be compression! Just because more data will fit on the same flash drive.

Let's think about the flaws.

The first problem: we have already agreed that each record should immediately get on a flash. Usually, the archiver collects data from the input stream until it decides that it is time to write to the output. We need to immediately get a compressed data block and save it in non-volatile memory.

I see three ways:

- Compress each entry using dictionary compression instead of the algorithms discussed above.

It is a working option, but I do not like it. To ensure a more or less decent level of compression, the dictionary should be “sharpened” for specific data, any change will lead to the fact that the compression level catastrophically drops. Yes, the problem is solved by creating a new version of the dictionary, but this is a headache - we will need to store all versions of the dictionary; in each entry we will need to indicate with which version of the dictionary it was compressed ... - Compress each record with "classical" algorithms, but independently of the others.

The compression algorithms under consideration are not designed to work with records of this size (tens of bytes), the compression coefficient will be clearly less than 1 (that is, an increase in the amount of data instead of compression); - Do FLUSH after each recording.

Many compression libraries have support for FLUSH. This is a command (or parameter to the compression procedure), having received which the archiver forms a compressed stream so that on its basis it is possible to recover all uncompressed data that has already been received. Such an analogue ofsync

in file systems orcommit

in sql.

Importantly, subsequent compression operations will be able to use the accumulated dictionary and the compression ratio will not suffer as much as in the previous version.

I think it’s obvious that I chose the third option, let’s dwell on it in more detail.

There was a great article about FLUSH in zlib.

I did a test based on the article, took 70 thousand journal entries from a real device, with a page size of 60Kb (we will return to page size) :

| Initial data | Gzip -9 compression (without FLUSH) | zlib with Z_PARTIAL_FLUSH | zlib with Z_SYNC_FLUSH | |

|---|---|---|---|---|

| Volume, Kb | 1692 | 40 | 352 | 604 |

At first glance, the price introduced by FLUSH is excessively high, but in reality we have a poor choice - either not to compress at all, or to compress (and very efficiently) with FLUSH. Do not forget that we have 70 thousand records, the redundancy introduced by Z_PARTIAL_FLUSH is only 4-5 bytes per record. And the compression ratio turned out to be almost 5: 1, which is more than an excellent result.

In the case of using Z_SYNC_FLUSH, the last 4 bytes of each record will always be 0x00, 0x00, 0xff, 0xff. And if we know them, then we can not store them, so the total size is only 324Kb.

The article I am referring to has an explanation:

A new type 0 block with empty contents is appended.

A type 0 block with empty contents consists of:

- the three-bit block header;

- 0 to 7 bits equal to zero, to achieve byte alignment;

- the four-byte sequence 00 00 FF FF.

As you can see, in the last block before these 4 bytes comes from 3 to 10 zero bits. However, practice has shown that zero bits are actually at least 10.

It turns out that such short data blocks are usually (always?) Encoded using a block of type 1 (fixed block), which necessarily ends with 7 zero bits, so we get 10-17 guaranteed zero bits (and the rest will be zero with a probability of about 50%).

So, on test data, in 100% of cases, before 0x00, 0x00, 0xff, 0xff there is one zero byte, and more than in the third case there are two zero bytes (maybe the fact is that I use binary CBOR, and when using text CBOR JSON would be more likely to meet blocks of type 2 - dynamic block, respectively, blocks would occur without additional zero bytes before 0x00, 0x00, 0xff, 0xff) .

Total on the available test data can fit in less than 250Kb of compressed data.

You can save some more by juggling bits: now we ignore the presence of several zero bits at the end of the block, several bits at the beginning of the block also do not change ...

But then I made a strong-willed decision to stop, otherwise at such a pace you can reach the development of your archiver.

In total, I got 3-4 bytes per record from my test data, the compression ratio was more than 6: 1. Honestly, I didn’t count on such a result, in my opinion everything that is better than 2: 1 is already a result that justifies the use of compression.

Everything is fine, but zlib (deflate) is still archaic well-deserved and slightly old-fashioned compression algorithm. The mere fact that the last 32Kb from the uncompressed data stream is used as a dictionary looks strange today (that is, if some data block is very similar to what was in the input stream 40Kb back, it will start to be archived again, but will not refer to the past entry). IN fashionable modern archivers size dictionary is often measured in megabytes rather than kilobytes.

So we continue our mini-study of archivers.

Next bzip2 was tested (recall, without FLUSH it showed a fantastic compression ratio, almost 100: 1). Alas, with FLUSH it showed itself very poorly, the size of the compressed data was greater than the uncompressed.

Libbz2 offers only one flush option, which seems to clear the dictionary (similar to Z_FULL_FLUSH in zlib), there’s no reason to talk about some kind of efficient compression.

And zstd was the last to be tested. Depending on the parameters, it compresses either at the gzip level, but much faster, or gzip is better.

Alas, with FLUSH, it also showed itself to be "not very": the size of the compressed data was about 700Kb.

I asked a question on the project page in github, I got an answer that it is worth counting on up to 10 bytes of service data for each block of compressed data, which is close to the results, catching deflate does not work.

I decided to stop on this in experiments with archivers (I remind you that xz, lzip, lzo, lz4 did not show themselves even at the testing stage without FLUSH, but I did not consider more exotic compression algorithms).

We return to the problems of archiving.

The second (as they say in order, but not in value) problem - the compressed data is a single stream in which there are constantly sending to previous sections. Thus, if a section of compressed data is damaged, we lose not only the block of uncompressed data associated with it, but also all subsequent ones.

There is an approach to solving this problem:

- Prevent the occurrence of a problem - add redundancy to the compressed data, which will allow to identify and correct errors; we will talk about this later;

- Minimize the consequences in case of a problem

We already said earlier that it is possible to compress each data block independently, and the problem will disappear by itself (data corruption of one block will lead to the loss of data of only this block). However, this is an extreme case in which data compression will be inefficient. The opposite extreme: use all 4MB of our microcircuit as a single archive, which will give us excellent compression, but catastrophic consequences in case of data corruption.

Yes, a compromise is needed in terms of reliability. But we must remember that we are developing a data storage format for non-volatile memory with an extremely low BER and a declared data storage period of 20 years.

In the course of the experiments, I found that more or less noticeable losses in the compression level begin on blocks of compressed data with a size of less than 10Kb.

It was mentioned earlier that the memory used has a page organization, I see no reason why you should not use the “one page - one block of compressed data” correspondence.

That is, the minimum reasonable page size is 16Kb (with a margin for service information). However, such a small page size imposes significant restrictions on the maximum recording size.

Although I still do not expect records of more units of kilobytes in compressed form, I decided to use 32KB pages (a total of 128 pages per chip).

Summary:

- We store data compressed using zlib (deflate);

- For each record, set Z_SYNC_FLUSH;

- For each compressed record, we trim the final bytes (for example, 0x00, 0x00, 0xff, 0xff) ; in the header indicate how many bytes we cut;

- We store data in 32Kb pages; inside the page there is a single stream of compressed data; on each page we begin compression again.

And, before finishing with the compression, I would like to draw attention to the fact that we get only a few bytes of write data, so it is extremely important not to inflate service information, each byte is counted.

Storing Data Headers

Since we have records of variable length, we need to somehow determine the location / boundaries of the records.

I know three approaches:

- All records are stored in a continuous stream, first comes the record header containing the length, and then the record itself.

In this embodiment, both the headers and the data may have a variable length.

In fact, we get a singly linked list that is used all the time; - Headers and records themselves are stored in separate streams.

Using headers of constant length, we ensure that damage to one header does not affect the rest.

A similar approach is used, for example, in many file systems; - Records are stored in a continuous stream, the boundary of the record is determined by some marker (symbol / sequence of characters, which is / which is prohibited inside data blocks). If a marker is found inside the record, then we replace it with a certain sequence (escape it).

A similar approach is used, for example, in the PPP protocol.

I will illustrate.

Option 1:

Everything is very simple here: knowing the length of the record, we can calculate the address of the next header. So we move through the headers until we meet a region filled with 0xff (free region) or the end of the page.

Option 2:

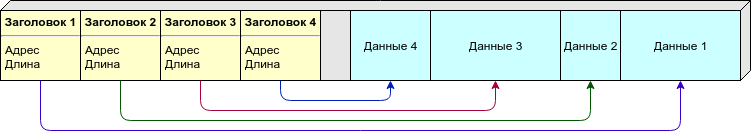

Due to the variable length of the record, we cannot say in advance how many records (and therefore the headers) per page we need. You can spread the headers and the data itself into different pages, but I prefer a different approach: we place the headers and the data on the same page, however, the headers (of constant size) come from the beginning of the page, and the data (of variable length) from the end. As soon as they "meet" (there is not enough free space for a new record) - we consider this page to be full.

Option 3:

There is no need to store in the header the length or other information about the location of the data, there are enough markers that indicate the boundaries of the records. However, the data has to be processed while writing / reading.

As a marker, I would use 0xff (which the page is filled after erase), so the free area will definitely not be treated as data.

Comparison table:

| Option 1 | Option 2 | Option 3 | |

|---|---|---|---|

| Error tolerance | - | + | + |

| Compactness | + | - | + |

| Implementation complexity | * | ** | ** |

Option 1 has a fatal flaw: if any of the headers is damaged, our entire subsequent chain is destroyed. Other options allow you to recover part of the data even with massive damage.

But here it is appropriate to recall that we decided to store the data in a compressed form, and so we lose all the data on the page after the "broken" record, so even though there is a minus in the table, we do not take it into account.

Compactness:

- in the first version, we need to store only the length in the header, if integers of variable length are used, then in most cases we can do with one byte;

- in the second option, we need to store the starting address and length; the record should be a constant size, I estimate 4 bytes per record (two bytes per offset, and two bytes per length);

- In the third option, just one character is enough to indicate the beginning of the recording, plus the recording itself will grow by 1-2% due to screening. In general, approximate parity with the first option.

( ). , .

, - - . , , — , , ...

: , , .. , , , — , .

: " — " - .

, , :

.

, erase 1, 1 0, . " " 1, " " — 0.

flash:

- “ ”;

- ;

- “ ”;

- ;

- “ ”.

, “ ”, 4 .

“1111” — “1000” — ; , .

, , , , , ( ) .

: .

( ) , . , , .

, , ( , , — ) .

, , , — .

— CRC. , 100% , — . , , : , . — .

, CRC — . , .

, .

:

, :

| , | , | |||

|---|---|---|---|---|

| one | 0 | 1000 | 0 | 1000 |

| one | one | 4 | 999 | 1003 |

| one | 2 | ≈0 | 1997 | 1997 |

| one | 4 | ≈0 | 3990 | 3990 |

| 10 | 0 | 9955 | 0 | 9955 |

| 10 | one | 39 | 990 | 1029 |

| 10 | 2 | ≈0 | 1979 | 1979 |

| 10 | 4 | ≈0 | 3954 | 3954 |

| 1000 | 0 | 632305 | 0 | 632305 |

| 1000 | one | 2470 | 368 | 2838 |

| 1000 | 2 | 10 | 735 | 745 |

| 1000 | 4 | ≈0 | 1469 | 1469 |

, — — .

, , 32 ( 64 -) .

, , , - 32- (16 , 0.01%; 24 , , ).

: , 4 ? ? , , .

, CRC-32C.

6 22 (, c), 4 655 ( ), 2 .

, , : ?

"" :

- — ( /, , ..);

- deflate zlib "" , , , ( , zlib ).

"" :

.

: CRC-32C, , flash ( ).

, , , , ( ) .

, .

, - , RAID-6 .

, , , .

, . ?

- ( - , Raspberry, ...)

, ; - ( - flash- , )

, ; - ;

-

.

( ) . , - .

: , , , ( , ).

Other

, ( ) , , .

- ""

- , .., , .

, , ; - .

— !

Magic Number (), ( , ) ; - ( ) , 1 ;

- .

- . .

Byte order

, , big-endian (network byte order), 0x1234 0x12, 0x34.

- .

32, , 1/4 ( 4 128 ).

( ).

( ), 0 ( 0, — 32, — 64 ..)

(ring buffer), 0, 1, ..., , .

4- , (CRC-32C), ", , ".

( -) :

- Magic Number ( — )

0xed00 ⊕

; - " " ( ).

( deflate). ( ), . ( ).

Z_SYNC_FLUSH, 4 0x00, 0x00, 0xff, 0xff, , , .

( 4, 5 6 ) -.

1, 2 3 , :

- (T), : 0 — , 1 — ;

- (S) 1 7 , "", ;

- (L).

S:

| S | , | , |

|---|---|---|

0

| one | 5 ( 00 00 00 ff ff

) |

10

| one | 6 ( 00 00 00 00 ff ff

) |

110

| 2 | 4 ( 00 00 ff ff

) |

1110

| 2 | 5 ( 00 00 00 ff ff

) |

11110

| 2 | 6 ( 00 00 00 00 ff ff

) |

1111100

| 3 | 4 ( 00 00 ff ff

) |

1111101

| 3 | 5 ( 00 00 00 ff ff

) |

1111110

| 3 | 6 ( 00 00 00 00 ff ff

) |

, , :

T, — S, L ( ), — , — , -.

, ( 63+5 ) .

CRC-32C, (init) .

CRC "", (- ) : .

CRC .

.

, 0x00 0xff ( 0xff, ; 0x00 ).

-

.

— - .

( , Linux NOR Flash, )

-

.

.

— .

( ) 1.

( UUID ).

, - .

8 ( + CRC), Magic Number CRC .

"" , , .

, CRC, "". — . — , "" .

, , "" .

zlib ( ).

, , , .

, Z_SYNC_FLUSH., .

( CRC) — (. ).

CRC. — .

New page

( ). — , .

erase. 0xff. - — , ..

, , — ( ).

, - ( , JSON, MessagePack, CBOR, , protobuf) NOR Flash.

, "" SLC NOR Flash.

BER, NAND MLC NOR ( ? ) .

, , FTL: USB flash, SD, MicroSD, etc ( 512 , — "" ) .

128 (16) 1 (128). , , , ( , NOR Flash ) .

- , — , , github.

Conclusion

, .

, : - , , . , () - .

, ? Oh sure. , , . - .

? , , . .

, , " ".

, () , , "" (, , ; ). ( — ) .

, .

Literature

, .

, , , :

- The infgen utility by zlib. Able to understand the contents of archives deflate / zlib / gzip in an understandable way. If you have to deal with the internal deflate (or gzip) format device, I highly recommend it.