The world around us is constantly changing, whether we want it to or not. Technology plays a big role in this, and its development and spread become more active every year. We no longer regard the car as a rarity or mobile communication as magic. Technology has become part of our daily lives, our work and ourselves. One of the most popular topics among futurists and science fiction writers has always been robots - autonomous machines that can make life easier for a person or, if the Terminator films are to be believed, wipe out all life on the planet. In any case, a number of intriguing questions remain: how will a person behave with a machine, will he trust it, and how effective will their cooperation be? Scientists from the U.S. Army Research Laboratory decided to conduct a series of experiments to establish which aspects of the behavior and “appearance” of an autonomous machine improve collaboration with humans. What exactly they managed to find out we will learn from their report. Let's go.

Study basis

To better understand the relationship between man and machine, we should first look at the relationship between man and man. Given that the planet’s population is divided into many groups (by religion, nationality, ethnicity, etc.), a person will always divide people into “us” and “them”. This process of separation, according to the researchers, happens at a subconscious level and makes a person more likely to choose someone from the same group to cooperate with. The scientists also note that these preferences have nothing to do with discrimination against people of other nationalities, religions and so on.

In the case of autonomous machines, it remains unclear whether such social categorization takes place when man and machine cooperate. If it does, how does it manifest itself, how is it regulated, and how can it be changed?

To test this, the scientists conducted a series of experiments whose participants were people from two distant cultures (USA and Japan) and machines with virtual faces that corresponded to the same cultures and expressed certain emotions.

There were 468 participants from the USA and 477 from Japan.

Demographic characteristics for a group from the USA were as follows:

- gender: 63.2% - men and 36.8% - women;

- age: 0.9% - 18-21; 58.5% - 22-34; 21.6% - 35-44; 10.7% - 45-54; 6.2% - 55-64; 2.1% - over 64 years old;

- ethnicity: 77.3% - Indo-European; 10.3% - African American; 1.3% - East Indian; 9.2% - Hispanic; 6.0% - Asian (southeastern).

Demographic characteristics for the group from Japan were as follows:

- gender: 67.4% - men and 32.6% - women;

- age: 0.6% - 18-21; 15.7% - 22-34; 36.4% - 35-44; 34.7% - 45-54; 10.4% - 55-64; 2.1% - over 64 years old;

- ethnicity: 0.6% - East Indian; 99.4% - Asian (southeastern).

People may relate to each other differently depending on personal categorization, but it is worth remembering that an autonomous machine is not a person. It therefore already belongs to another social group by default, so to speak. Of course, a person can perceive a machine as a social actor (a participant in some kind of interaction), but he will perceive it differently from a living person of flesh and blood.

A striking example of this is an earlier study of the human brain during a game of “rock-paper-scissors”. When a person played against another person, the medial prefrontal cortex was activated, which plays an important role in mentalization*.

Mentalization* - the ability to represent the mental state of oneself and other people.

But when playing against a machine, this part of the brain was not activated.

Similar results were shown by experiments with the game of ultimatum.

The ultimatum game* is an economic game in which player No. 1 receives a certain amount of money and must share it with player No. 2, whom he sees for the first time. The offer can be any size, but if player No. 2 refuses it, neither player receives any money at all.

When player No. 2 received an unfair offer from player No. 1 (a human), sections of the central lobe of the brain responsible for the formation of negative emotions were activated. If player No. 1 was a machine, no such reaction was observed.
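The rules of the ultimatum game can be written down as a minimal Python sketch. The function name `ultimatum_round` and the amounts used are hypothetical and serve only as an illustration; they are not taken from the studies mentioned.

```python
def ultimatum_round(total, offer, accepted):
    """Return (player_1_payoff, player_2_payoff) for one round.

    total    - amount of money given to player No. 1
    offer    - the part of it that player No. 1 offers to player No. 2
    accepted - whether player No. 2 accepts the offer
    """
    if not 0 <= offer <= total:
        raise ValueError("offer must be between 0 and total")
    if accepted:
        # The money is split as proposed.
        return total - offer, offer
    # A refusal leaves both players with nothing.
    return 0, 0


# Illustrative amounts only: a fair offer that is accepted,
# and an unfair offer that is refused.
print(ultimatum_round(10, 5, accepted=True))
print(ultimatum_round(10, 1, accepted=False))
```

The sketch makes the tension of the game visible: refusing an unfair offer costs player No. 2 money as well, so a refusal is an emotional reaction rather than a profitable one.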

Such experiments once again confirm that a person perceives a machine differently from another person. Some researchers believe that, as far as our instincts are concerned, a machine is not a competitor to us, which is why the activity of certain parts of the brain is reduced (or absent altogether).

Everything is quite logical: man is a wolf to man, but even among wolves there is a certain degree of trust and a desire to cooperate under certain conditions. The machine, however, acts as a fox, with which the wolves do not want to cooperate at all. Still, such preferences do not change the fact that there are more and more robots in our world, and so we need to find ways to improve the relationship between us and them.

Why did the researchers choose cultural identity as the type of social categorization to study? Firstly, it had already been established that a person reacts positively to machines that display certain speech characteristics (an accent, for example), social norms and race.

Secondly, when representatives of different social groups take part in experiments, a distinct mix of cooperation and competition can be seen in the first stages. In the later stages, when the participants get to know each other better, a clear line of behavior emerges that matches the task of the experiment. Simply put, participants from different social groups did not trust each other at first, even when the task of the experiment involved cooperation. But after some time, trust and the desire to complete the task outweighed the subconscious distrust.

Thirdly, previous experiments have shown that initial expectations of cooperation based on social categorization can be overridden by more situationally significant cues (emotions).

That is, the researchers endowed the machine with visual features of those social groups to which the experiment participants (people) belonged, and a number of emotional expressions, and then checked different combinations to identify the connection.

From all this, two main hypotheses were formulated. The first is that association with positive cues of cultural identity can mitigate the unfavorable prejudices that people have about machines by default. The second is that expressed emotions can override expectations of cooperation / competition based on cultural identity. In other words, if you give the machine the visual features of a certain cultural group, people from the same group may subconsciously trust it more. However, if the machine displays emotions that signal its unwillingness to cooperate, all cultural prejudices instantly cease to matter.

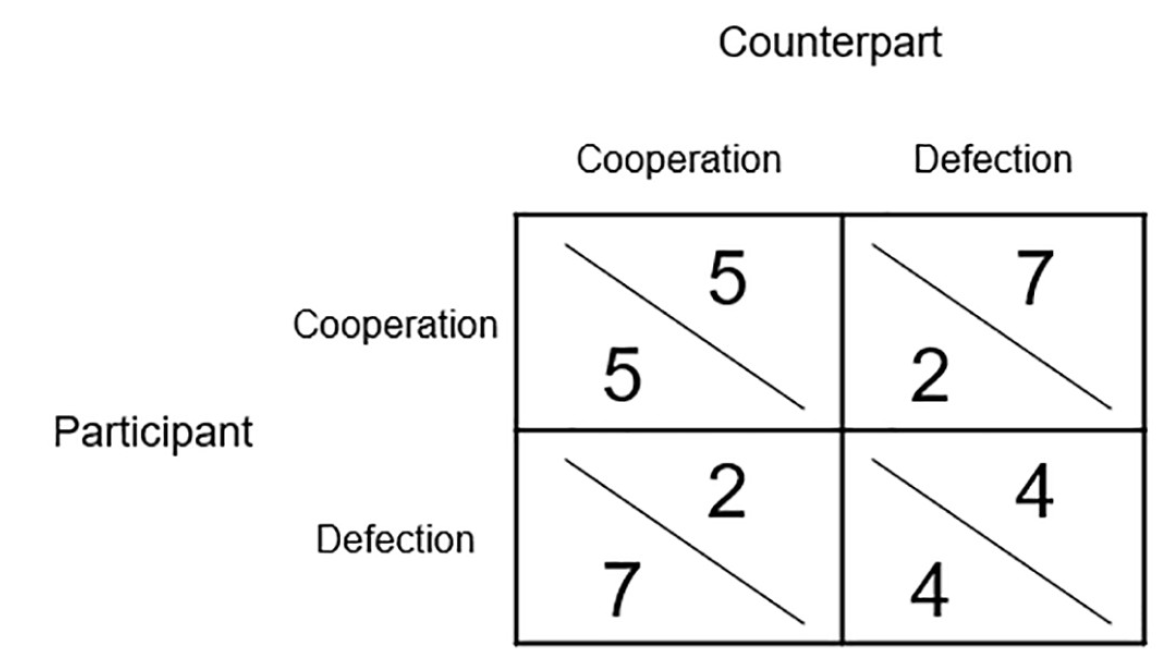

Curiously, the experiment was based on the prisoner's dilemma - a game in which cooperation is not the individually winning tactic. According to game theory, a rational prisoner (player) will always maximize his own benefit without thinking about the benefit of the other players. Rationality should therefore push players towards betrayal and refusal to cooperate, but in reality the results of such experiments are often far from rational, which explains the word “dilemma” in the name of this game. There is a catch: if one player believes that the other will not cooperate, he should not cooperate either; however, if both players follow this tactic, both end up worse off than if they had cooperated.
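The catch described above can be illustrated with a small Python sketch. The payoff values here are the textbook-style numbers commonly used to explain the prisoner's dilemma; they are an assumption made for illustration and are not the payoff matrix used in the study.

```python
# Hypothetical payoffs (NOT the study's matrix):
# (my_move, partner_move) -> (my_points, partner_points)
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}


def best_response(partner_move):
    """Return the move that maximizes my own points against partner_move."""
    return max(
        ("cooperate", "defect"),
        key=lambda my_move: PAYOFFS[(my_move, partner_move)][0],
    )


# Whatever the partner does, defecting earns me more points...
for partner_move in ("cooperate", "defect"):
    print(f"partner plays {partner_move}: best response is {best_response(partner_move)}")

# ...yet mutual defection pays each player less than mutual cooperation.
print("both defect:", PAYOFFS[("defect", "defect")])
print("both cooperate:", PAYOFFS[("cooperate", "cooperate")])
```

With these assumed numbers, betrayal is the best individual answer to any move of the partner, while two players who both reason this way each receive less than two players who cooperate.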

Experiment Results

Image No. 1

In total, each participant in the experiment played 20 rounds with the machine. The image above is the payoff matrix. The points gained during the game had real financial value, as they were subsequently converted into lottery tickets with a prize of $30.

The main objective of the experiment was to identify how participants will collaborate / compete with people or machines, and how cultural identity and emotions will influence these processes.

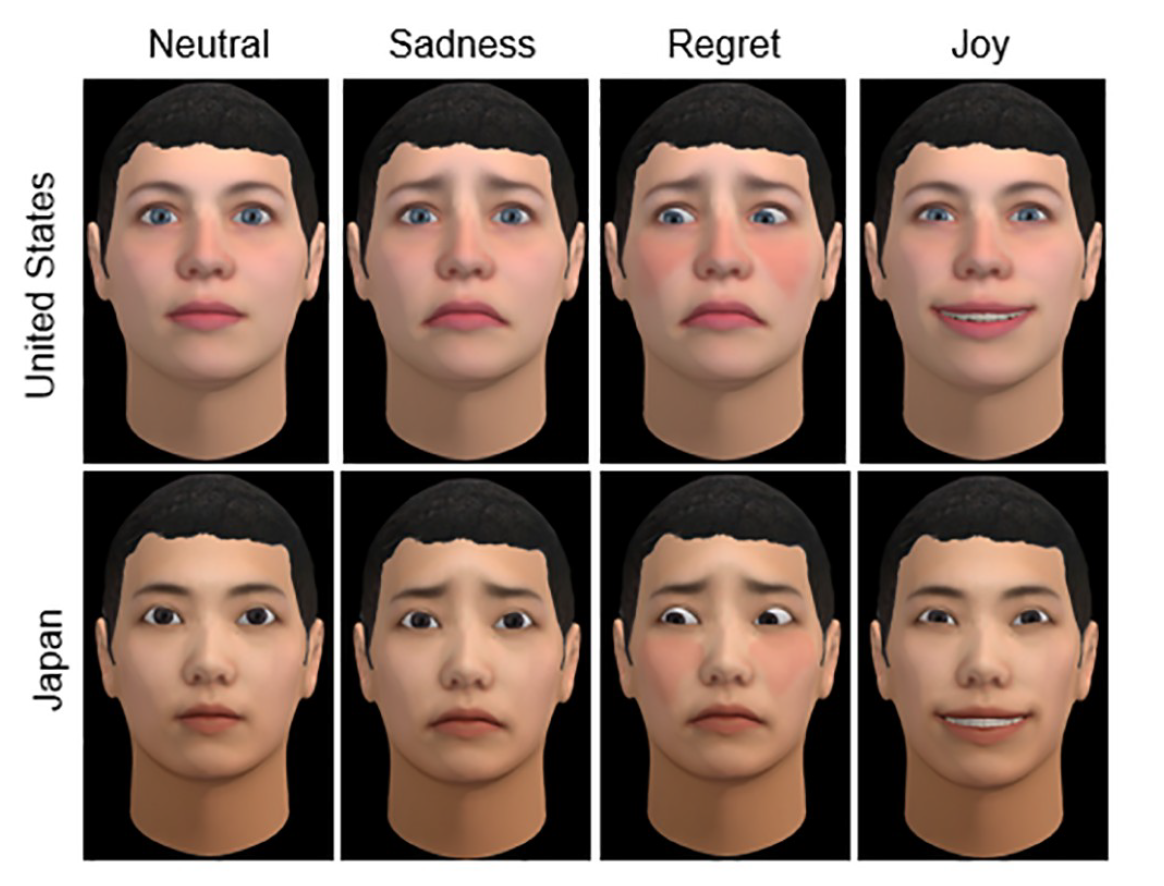

Image No. 2

Shown above are the two cultural identities (USA and Japan) of the virtual faces, expressing different emotions (neutral, sadness, regret and joy). It is worth noting that virtual faces were used for all partners in the experiments, i.e. both people and machines.

The set of emotions was not chosen by chance. Earlier experiments with the prisoner's dilemma showed that some emotions can encourage cooperation while others encourage competition, depending on when they are expressed.

In this model, the emotions of the machine, and the actions that cause them, were divided into two main categories.

Pushing a person to competition:

- regret after mutual cooperation (the machine missed the opportunity to exploit the human participant for its own benefit);

- joy after exploitation (the participant cooperates but receives nothing in return from the machine);

- sadness after mutual competition / neutral emotion after mutual cooperation.

Encouraging people to cooperate:

- joy after mutual cooperation;

- regret after exploitation;

- sadness after mutual competition.

Thus, in the experiment there were several variables at the same time: cultural identity (USA or Japan), the declared type of partner (person or machine) and expressed emotions (competitive or collaborative).
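As a rough illustration of how these variables combine, the sketch below enumerates the resulting conditions in Python. It uses only the levels named in the paragraph above; the variable names are hypothetical, and the real study design may contain further levels or factors.

```python
from itertools import product

# Levels as named in the text; the identifiers themselves are illustrative.
FACE_CULTURES = ("USA", "Japan")               # cultural identity of the virtual face
PARTNER_TYPES = ("human", "machine")           # declared type of partner
EMOTION_SETS = ("competitive", "cooperative")  # expressed emotions

# Every combination of the three variables is one experimental condition.
conditions = list(product(FACE_CULTURES, PARTNER_TYPES, EMOTION_SETS))

for face, partner, emotions in conditions:
    print(f"face: {face:<6} partner: {partner:<8} emotions: {emotions}")

print(len(conditions), "conditions in total")
```

Crossing the variables in this way is what makes it possible to separate the effect of the face's culture from the effect of the partner type and of the emotions.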

The results of the experiments were divided into two categories according to the type of pairs of participants: participants from one cultural group and participants from different cultural groups.

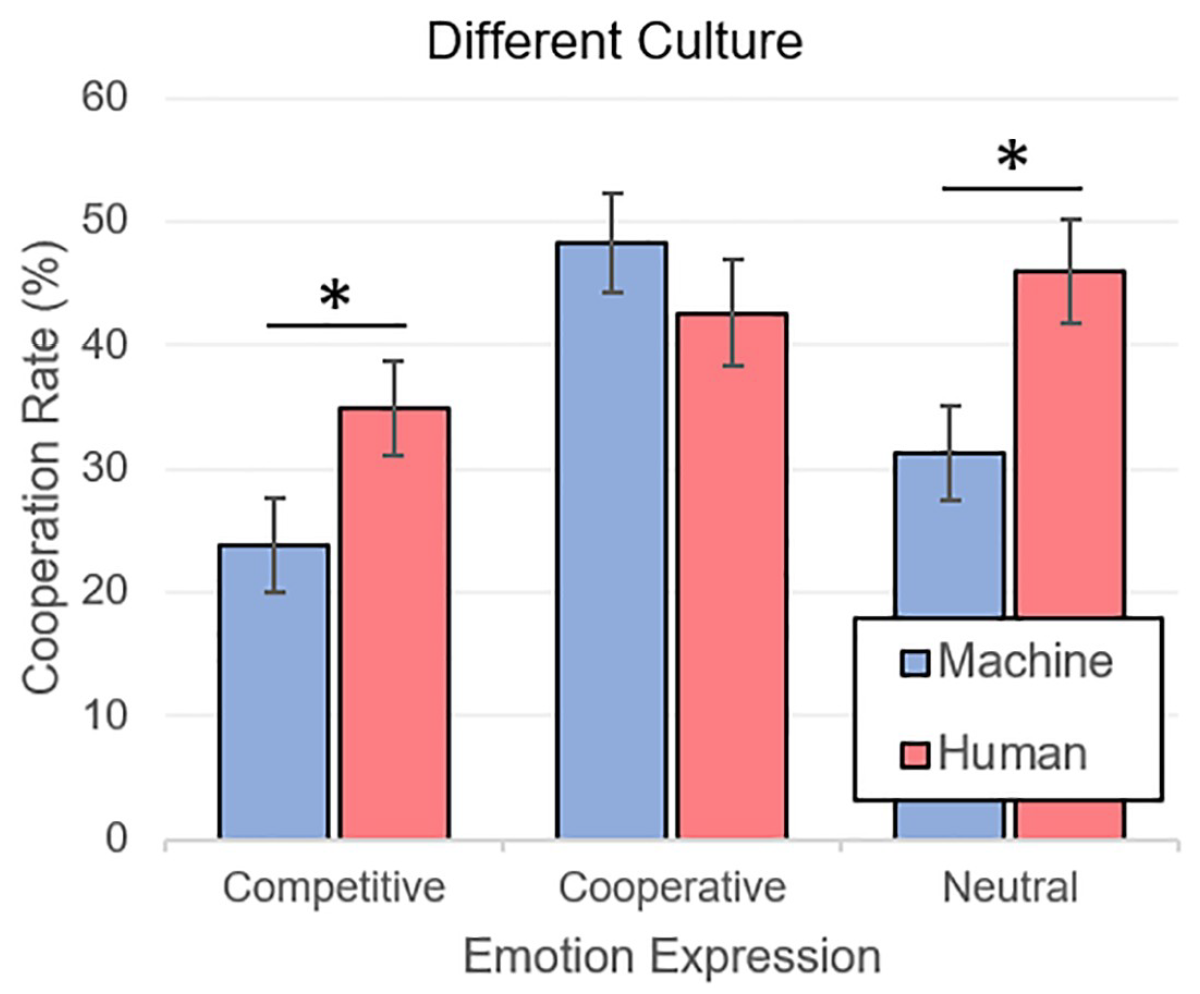

Image No. 3

Above is a graph of the results (analysis of variance, i.e. ANOVA) of the experiments in which partners from different cultural groups were paired in the game. As expected, cooperation with people (M = 41.15, SE = 2.39) was more pronounced than cooperation with machines (M = 34.45, SE = 2.25). As for emotions, everything was no less predictable: players collaborated more often with partners who expressed cooperative emotions (M = 45.42, SE = 2.97) than competitive ones (M = 29.34, SE = 2.70). Neutral emotions (M = 38.63, SE = 2.85) also outperformed competitive ones.

Curiously, emotions that inspire trust completely neutralized the fact that the participants were playing with a machine, thereby reducing the degree of mistrust.
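To put the reported means side by side, here is a small Python sketch that computes the gaps between them. It uses only the M values quoted above and is plain arithmetic, not a re-run of the ANOVA; the variable names are illustrative.

```python
# Mean values (M) as quoted in the text for mixed-culture pairs.
reported_means = {
    "human": 41.15,
    "machine": 34.45,
    "cooperative": 45.42,
    "neutral": 38.63,
    "competitive": 29.34,
}

# Gap between partner types and gap between emotion sets.
partner_gap = round(reported_means["human"] - reported_means["machine"], 2)
emotion_gap = round(reported_means["cooperative"] - reported_means["competitive"], 2)

print("human vs machine:", partner_gap)
print("cooperative vs competitive emotions:", emotion_gap)
print("emotion gap is larger:", emotion_gap > partner_gap)
```

In these figures the difference between cooperative and competitive emotions is larger than the difference between a human and a machine partner.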

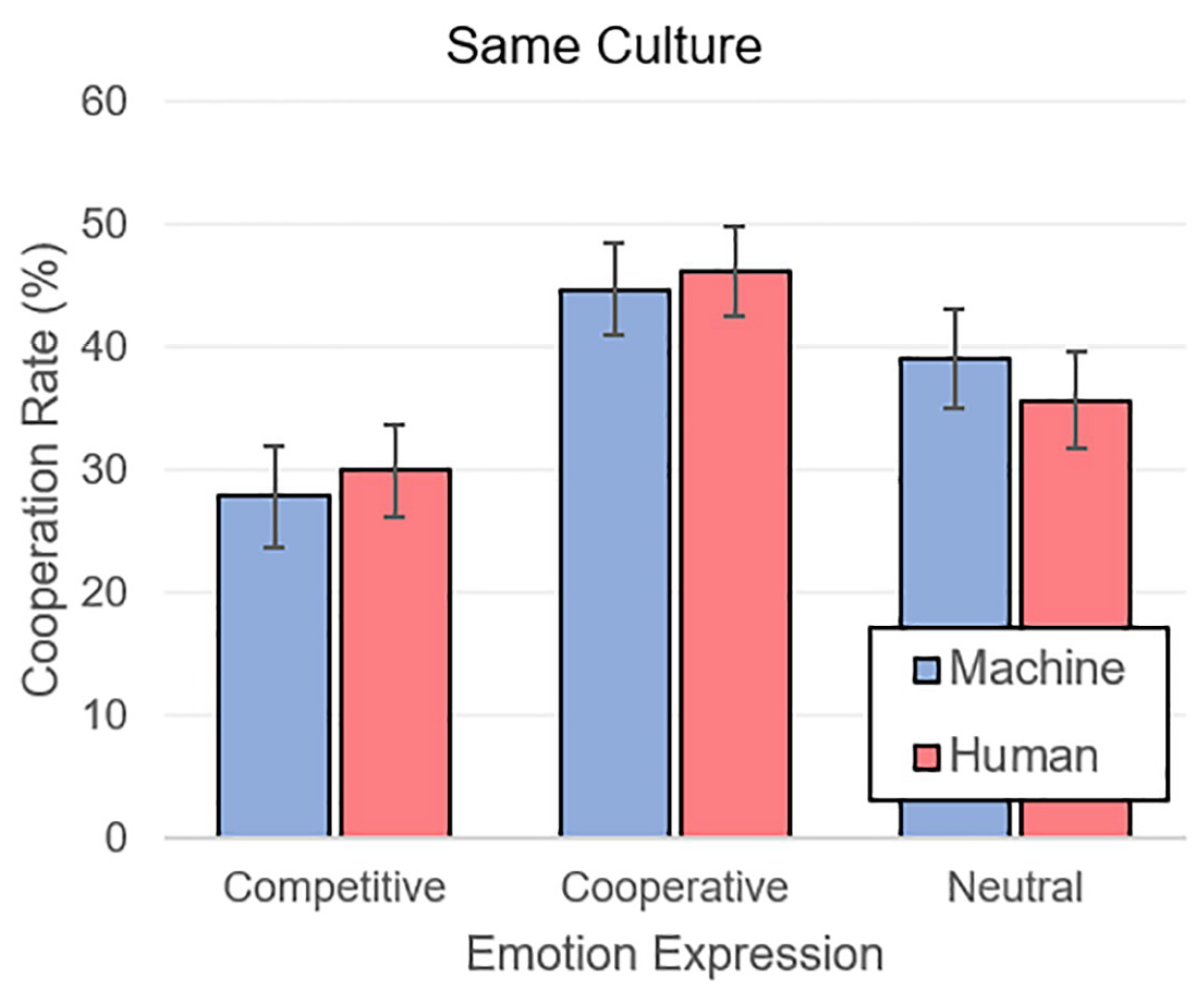

Image No. 4

When participants came across partners from the same cultural group, the situation was similar. Cooperative (M = 45.55, SE = 2.61) emotions outweighed neutral (M = 37.42, SE = 2.81) and competitive (M = 28.99, SE = 2.78).

Taken together, the results make it clear that a person applies the same psychological criteria of trust and mistrust when dealing with a machine as when dealing with another person. Autonomous machines are initially perceived as members of a social group alien to the person. However, a virtual face with the traits of a cultural identity that matches the human partner's can mitigate the distrust of the machine.

The most important observation is that both cultural identity and the fact that the partner is a machine are completely overridden by the right emotions, so to speak.

For a more detailed look at the nuances of the study, I recommend reading the scientists' report.

Epilogue

This study is rather ambiguous, but it shows us an important feature of human psychology. We unconsciously sort the people around us into social groups and place greater trust in those who belong to our own group. This is our primary reaction. But it is not the only one, because when communicating with a person we begin to receive a number of signals (visual and auditory) expressed through emotions, manner of speech, voice, etc. These signals complete our picture of the person, and it is this picture that we rely on when deciding whether to trust or not. In other words, the social categorization that occurs in our heads stops mattering once live communication begins. This is what the old sayings tell us: “you are greeted by your clothes, but seen off by your mind” and “do not judge a book by its cover”.

People have always treated machines with apprehension and distrust, often without any significant reason. Such is the nature of man - to initially reject that which is foreign. However, just as knowledge conquers ignorance, so does actual interaction with autonomous machines capable of expressing emotions conquer human prejudices.

Does this mean that all robots in the future should have emotions? Yes and no. The researchers whose work we examined today believe that, at first, emotions will be an excellent feature for robots, one that can speed up the acceptance of autonomous machines by human society. What can I say: if you live with wolves, howl like a wolf, no matter how rude it may sound.

In addition, we already endow robots with our own traits: we give them eyes like ours and faces like ours, and we endow them with voices and emotions. A person will always try to humanize the machine and, as this study shows, not in vain.

Thank you for your attention, stay curious and have a great weekend everyone, guys! :)
