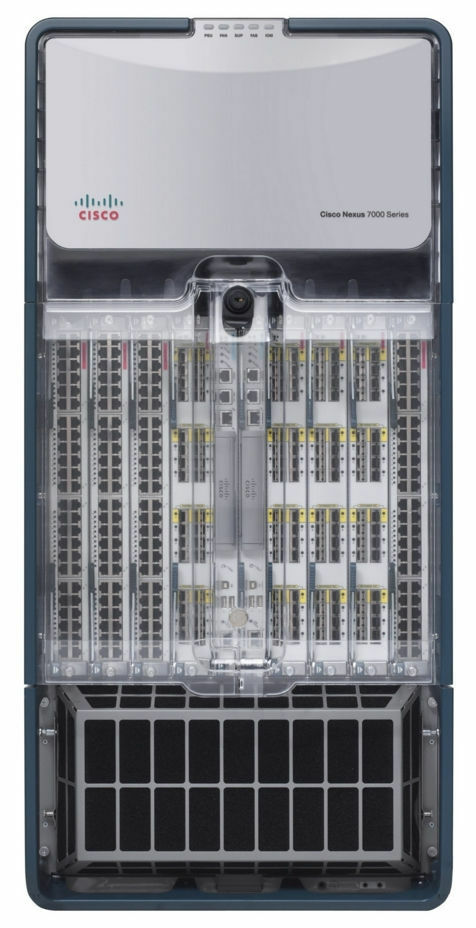

Hello! My name is Dmitry Samsonov, and I work as a lead system administrator at Odnoklassniki. The photo shows one of the four data centers that house the equipment serving our project. Behind these walls are about 4,000 pieces of equipment: servers, storage systems, network equipment, and so on, almost a third of all our hardware.

Most of our servers run Linux. There are a few dozen Windows servers (MS SQL), a legacy we have been systematically phasing out for many years.

So, on June 5, 2019, at 14:35, engineers at one of our data centers reported a fire alarm.

Denial

14:45. Minor incidents involving smoke in data centers happen more often than you might think. The readings inside the halls were normal, so our first reaction was relatively calm: we froze all work on production, that is, any configuration changes, rollouts of new versions, and so on, except for work related to fixing something.

Anger

Have you ever tried to find out from firefighters exactly where on the roof the fire is, or climbed onto a burning roof yourself to assess the situation? How much can you trust information that has passed through five people?

14:50. We receive word that the fire is approaching the cooling system. But will it reach it? The on-duty system administrator takes the external traffic off this data center's fronts.

Currently, the fronts of all our services are duplicated across three data centers, with balancing done at the DNS level. This lets us remove one data center's addresses from DNS and so protect users from potential problems accessing our services. If problems have already occurred in a data center, it leaves the rotation automatically. More details can be found here: Load balancing and fault tolerance in Odnoklassniki.
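To make the mechanism concrete, here is a minimal Python sketch of DNS-level balancing as described above. It assumes a simple model in which the published A records are the front addresses of every data center that is both in rotation (a manual switch) and passing health checks (an automatic one). The class names, data center names, and IP addresses (taken from the RFC 5737 documentation ranges) are hypothetical; the article does not describe Odnoklassniki's actual DNS tooling.

```python
# Hypothetical model of DNS-level balancing across data centers.
# Names and addresses are illustrative (RFC 5737 documentation ranges).
from dataclasses import dataclass


@dataclass
class DataCenter:
    name: str
    front_ips: list[str]
    in_rotation: bool = True   # toggled manually by the on-duty admin
    healthy: bool = True       # toggled automatically by health checks


class DnsRotation:
    def __init__(self, dcs: list[DataCenter]) -> None:
        self.dcs = {dc.name: dc for dc in dcs}

    def remove(self, name: str) -> None:
        """Take a data center's fronts out of DNS (e.g. on a fire alarm)."""
        self.dcs[name].in_rotation = False

    def restore(self, name: str) -> None:
        """Put a data center's fronts back into DNS."""
        self.dcs[name].in_rotation = True

    def a_records(self) -> list[str]:
        """Addresses to publish: only DCs that are in rotation and healthy."""
        return [
            ip
            for dc in self.dcs.values()
            if dc.in_rotation and dc.healthy
            for ip in dc.front_ips
        ]


rotation = DnsRotation([
    DataCenter("dc1", ["192.0.2.10", "192.0.2.11"]),
    DataCenter("dc2", ["198.51.100.10"]),
    DataCenter("dc3", ["203.0.113.10"]),
])
rotation.remove("dc2")               # manual: fire alarm in dc2
rotation.dcs["dc3"].healthy = False  # automatic: dc3 fails its health checks
print(rotation.a_records())          # ['192.0.2.10', '192.0.2.11']
```

Both manual removal and automatic exclusion simply shrink the published record set. Clients that still hold a cached answer keep using the old addresses until its TTL expires, which is one reason to pull a threatened data center early rather than wait for it to fail.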

So far, the fire has not affected us in any way: neither users nor equipment have been hit. Is this an accident? The first section of our “Accident Action Plan” defines what counts as an “accident”, and it ends as follows:

“If in doubt whether it is an accident or not, it is an accident!”

14:53. An accident coordinator is appointed.

The coordinator is the person who keeps communication among all participants under control, assesses the scale of the accident, works from the “Accident Action Plan”, brings in the necessary staff, tracks the progress of repairs, and, most importantly, delegates all tasks. In other words, this is the person who manages the entire process of dealing with the accident.

Bargaining

15:01. We start shutting down servers that are not involved in production.

15:03. We gracefully shut down all redundant services.

This includes not only the fronts (which users no longer reach at this point) and their auxiliary services (business logic, caches, etc.), but also various databases with a replication factor of 2 or more (Cassandra, binary data storage, cold storage, NewSQL, etc.).

15:06. We receive word that the fire threatens one of the data center's halls. We have no equipment in this hall, but the fact that fire can spread from the roof into the halls changes the picture dramatically.

(Later it turned out that there was no physical threat to the hall, since it was hermetically sealed off from the roof. Only this hall's cooling system was at risk.)

15:07. We allow commands to be executed on servers in accelerated mode, without additional checks (without our favorite calculator).

15:08. The temperature in the halls is within normal limits.

15:12. An increase in temperature in the halls was recorded.

15:13. More than half of the servers in the data center are turned off. We continue.

15:16. It was decided to turn off all equipment.

15:21. We begin cutting power to stateless servers without gracefully shutting down the applications and the OS.

15:23. A separate group is assigned to the MS SQL servers (there are few of them and services depend on them only slightly, but recovering them takes longer and is more complicated than, for example, Cassandra).

Depression

15:25. We receive word that power has been cut in four of the 16 halls (Nos. 6, 7, 8, 9). Halls 7 and 8 contain our equipment. There is no information yet about our other two halls (Nos. 1 and 3).

Usually, power is cut immediately during a fire, but in this case, thanks to the coordinated work of the firefighters and the data center's technical staff, it was cut not everywhere and not all at once, but only where necessary.

(Later it turned out that the power in halls 8 and 9 did not turn off.)

15:28. We begin restoring the MS SQL databases from backups in other data centers.

How long will it take? Is there enough network bandwidth for the entire route?

15:37. Some parts of the network go down.

The management and production networks are physically isolated from each other. If the production network is available, you can log in to a server, stop the application, and shut down the OS. If it is not, you can go through IPMI, stop the application, and shut down the OS. If neither network is available, you can't do anything. “Thanks, Captain Obvious!” you might think.

“And anyway, what's all the fuss about?” you might also think.

The thing is, servers generate a huge amount of heat even without a fire. More precisely, when there is cooling they generate heat, and when there is none they create an inferno that at best melts part of the equipment and shuts down the rest, and at worst... starts a fire inside the hall that will almost certainly destroy everything.
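The escalation described above (production network first, then IPMI over the separate management network, otherwise nothing) can be sketched as a per-server plan. This is a hypothetical Python illustration: the host names, the `app` systemd unit, and the `admin` BMC user are made up, and ipmitool's `chassis power soft` (an ACPI shutdown request to the OS) stands in for the IPMI path, since the article does not say which IPMI commands were actually used. The ipmitool options shown (`-I lanplus`, `-H`, `-U`, and `-E` to read the password from the `IPMI_PASSWORD` environment variable) are standard.

```python
# Hypothetical sketch: choose a shutdown path per server. Nothing is executed;
# the function only returns the command(s) an admin (or a script) would run.
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    bmc_host: str         # IPMI controller, reachable only via the management network
    production_up: bool   # is the host reachable over the production network?
    management_up: bool   # is the BMC reachable over the management network?


def plan_shutdown(server: Server) -> list[str]:
    """Return the commands for a graceful shutdown; [] means no path exists."""
    if server.production_up:
        # Log in, stop the application ('app' is a made-up unit name), power off the OS.
        return [f"ssh {server.name} 'systemctl stop app && shutdown -h now'"]
    if server.management_up:
        # Fall back to IPMI: ask the OS to shut down via ACPI.
        return [f"ipmitool -I lanplus -H {server.bmc_host} -U admin -E chassis power soft"]
    # Neither network is reachable: nothing can be done remotely.
    return []


fleet = [
    Server("web01", "web01-bmc", production_up=True, management_up=True),
    Server("db07", "db07-bmc", production_up=False, management_up=True),
    Server("cache3", "cache3-bmc", production_up=False, management_up=False),
]
for s in fleet:
    print(s.name, plan_shutdown(s) or "unreachable")
```

The last case is exactly why the heat matters: a server with no reachable network keeps running, and keeps heating a hall that may have lost its cooling.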

15:39. We detect problems with the conf database.

The conf database is the backend for the service of the same name, which all production applications use to change settings quickly. Without this database we cannot manage the portal, but the portal itself keeps working.

15:41. Temperature sensors on the Core network equipment show readings close to the maximum permissible. This is a box that takes up an entire rack and runs all the networks inside the data center.

15:42. The issue tracker and wiki become unavailable; we switch to standby.

This is not production, but in an accident, the availability of any knowledge base can be critical.

15:50. One of the monitoring systems goes down.

There are several of them, and they are responsible for various aspects of the services. Some of them are configured to work autonomously inside each data center (that is, they monitor only their data center), while others consist of distributed components that transparently survive the loss of any data center.

In this case, the anomaly detection system for business logic metrics, which runs in master-standby mode, stopped working. We switch to standby.

Acceptance

15:51. All servers except MS SQL have been powered off through IPMI without a graceful shutdown.

Are you ready to manage servers en masse through IPMI if you have to?

This is the moment when rescuing the equipment in the data center is complete, at least for this stage. Everything that could be done has been done. Some colleagues can take a breather.

16:13. We receive word that the freon pipes of the air conditioners on the roof have burst; this will delay restarting the data center once the fire is out.

16:19. According to the data center's technical staff, the temperature in the halls has stopped rising.

17:10. The conf database is back in operation. We can change application settings again.

Why is this so important if everything is fault-tolerant and works even without one data center?

First, not everything is fault-tolerant. There are various secondary services that do not yet survive a data center failure well, and there are databases in master-standby mode. Being able to manage settings lets us do whatever is necessary to minimize the impact of the accident on users, even in difficult conditions.

Second, it became clear that the data center would not fully recover within the next few hours, so we had to take measures to keep the prolonged unavailability of replicas from causing additional trouble, such as disks filling up in the remaining data centers.

17:29. Pizza time! Our team is made of people, not robots.

Rehabilitation

18:02. The temperature has stabilized in halls Nos. 8 (ours), 9, 10, and 11. One of the halls that remain powered down (No. 7) contains our equipment, and the temperature there continues to rise.

18:31. We get the green light to start the equipment in halls Nos. 1 and 3; the fire did not affect these halls.

Servers are now being started in halls Nos. 1, 3, and 8, beginning with the most critical ones. We check that every started service works correctly. There are still problems with hall No. 7.

18:44. The data center's technical staff find that many servers in hall No. 7 (which contains only our equipment) are still running. According to our data, 26 servers are still powered on there. On re-checking, we find 58.

20:18. The data center's technical staff blow air into the hall, which has no air conditioning, through mobile ducts run along the corridors.

23:08. The first admin is sent home. Someone has to sleep tonight to carry on tomorrow. Next, we release more of the admins and developers.

02:56. Everything that could be started has been started. We run a large-scale check of all services with autotests.

03:02. Air conditioning in the last hall, No. 7, is restored.

03:36. The data center's fronts are put back into DNS rotation. From this moment, user traffic begins to arrive.

We send most of the admin team home, but keep a few people on.

A short FAQ:

Q: What happened from 18:31 to 02:56?

A: Following the “Accident Action Plan”, we start all services, beginning with the most important ones. The coordinator assigns each service in the chat to a free administrator, who checks that the OS and the application have come up, that there are no errors, and that the metrics look normal. Once the startup is done, the administrator reports in the chat that they are free and gets the next service from the coordinator.

The process is further slowed by failed hardware. Even when the OS and server were shut down correctly, some servers do not come back because of suddenly failed drives, memory, or chassis. After a power loss, the failure rate is even higher.

Q: Why can't you just start everything at once, and then fix whatever shows up in monitoring?

A: Everything has to be done gradually, because services depend on each other. And everything should be checked immediately, without waiting for monitoring, because it is better to deal with problems right away than to wait for them to get worse.
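The coordinator's routine from the answer above behaves like a simple work queue: services wait in priority order, and each administrator who reports as free gets the next one. Here is a minimal Python sketch, assuming the priority order is already known; the service and admin names are hypothetical, and in reality this ran as a conversation in a chat, not as code.

```python
# Hypothetical sketch of the coordinator handing services to free admins.
from collections import deque
from typing import Optional


class Coordinator:
    def __init__(self, services_by_priority: list[str]) -> None:
        self.pending = deque(services_by_priority)  # most important first
        self.assigned: dict[str, str] = {}          # admin -> service being checked
        self.done: list[str] = []

    def report_free(self, admin: str) -> Optional[str]:
        """Admin reports in the chat that they are free; hand out the next service."""
        finished = self.assigned.pop(admin, None)
        if finished is not None:
            self.done.append(finished)              # OS, app, errors and metrics checked
        if not self.pending:
            return None                             # nothing left to start
        service = self.pending.popleft()
        self.assigned[admin] = service
        return service


coord = Coordinator(["conf", "auth", "messages", "music"])
print(coord.report_free("alice"))  # conf
print(coord.report_free("bob"))    # auth
print(coord.report_free("alice"))  # messages (alice has finished conf)
```

Pull-based assignment keeps every administrator busy without the coordinator having to guess how long each check will take, which matters when failed hardware makes some services much slower to bring back than others.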
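Starting services gradually, with dependencies first and, among the services that are ready, the most important first, amounts to a topological sort driven by a priority queue (Kahn's algorithm with a heap). Below is a minimal Python sketch; the service names, the dependency graph, the default priority of 100, and the convention that a lower number means higher priority are all hypothetical.

```python
# Hypothetical sketch: dependency-aware, priority-ordered startup (Kahn's algorithm + heap).
import heapq


def startup_order(deps: dict[str, set[str]], priority: dict[str, int]) -> list[str]:
    """deps maps a service to the services it needs; lower priority number starts first."""
    services = set(deps) | {d for ds in deps.values() for d in ds}
    waiting_on = {s: set(deps.get(s, ())) for s in services}
    dependents: dict[str, list[str]] = {s: [] for s in services}
    for s, ds in waiting_on.items():
        for d in ds:
            dependents[d].append(s)

    # Services with no unmet dependencies, most important first (ties broken by name).
    ready = [(priority.get(s, 100), s) for s, ds in waiting_on.items() if not ds]
    heapq.heapify(ready)
    order = []
    while ready:
        _, s = heapq.heappop(ready)
        order.append(s)                      # start s (and check it!) before moving on
        for t in dependents[s]:
            waiting_on[t].discard(s)
            if not waiting_on[t]:            # the last dependency of t is now up
                heapq.heappush(ready, (priority.get(t, 100), t))

    if len(order) != len(services):
        raise ValueError("dependency cycle: some services can never start")
    return order


deps = {
    "conf": set(),
    "cassandra": set(),
    "auth": {"conf"},
    "messages": {"auth", "cassandra"},
    "music": {"conf"},
}
priority = {"conf": 0, "cassandra": 0, "auth": 1, "messages": 1, "music": 5}
order = startup_order(deps, priority)
print(order)  # ['cassandra', 'conf', 'auth', 'messages', 'music']
```

A cycle in the graph means some services can never start, so the sketch raises an error instead of silently leaving them out.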

07:40. The last admin (the coordinator) goes to bed. The first day's work is done.

08:09. The first developers, data center engineers, and administrators (including a new coordinator) start restoration work.

09:37. We start bringing up hall No. 7 (the last one).

At the same time, we continue finishing the restoration work in the other halls: replacing disks, memory, and servers, fixing everything that is lit up red in monitoring, switching roles back in master-standby setups, and other small things, of which there are nonetheless quite a lot.

17:08. We lift the freeze on regular work with production.

21:45. The second day's work is done.

09:45. It is Friday. There are still quite a few minor issues in monitoring. The weekend is coming, and everyone wants to rest. We keep fixing everything we can, en masse. Routine admin tasks that can wait are postponed. There is a new coordinator again.

15:40. Suddenly, half of the Core network equipment stack in ANOTHER data center reboots. We take that data center's fronts out of rotation to minimize risk. Users are not affected. It later turns out to have been a faulty chassis. The coordinator is now handling two accidents at once.

17:17. The network in the other data center is restored and everything has been checked. The data center is back in rotation.

18:29. The third day's work, and the recovery from the accident as a whole, is complete.

Afterword

On 04/04/2013, the day of error 404, Odnoklassniki suffered its largest accident ever: for three days the portal was completely or partially unavailable. Throughout that time, more than 100 people from different cities and different companies (thanks again to all of you!) repaired thousands of servers, remotely and on site in the data centers, manually and automatically.

We drew our conclusions. To keep it from happening again, we have done extensive work and continue it to this day.

What are the main differences between this accident and 404?

- We now have an “Accident Action Plan”. Once a quarter we run drills: we simulate an emergency that a group of administrators (everyone, in turn) must resolve using the “Accident Action Plan”. Lead system administrators take turns practicing the coordinator role.

- Every quarter, in test mode, we isolate data centers (each in turn) from the LAN and WAN, which lets us find bottlenecks early.

- Fewer bad drives, because we have tightened our standards: a lower limit on running hours and stricter SMART thresholds.

- We have completely abandoned BerkeleyDB, an old and unstable database that took a long time to recover after a server restart.

- We have reduced the number of MS SQL servers and our dependence on the remaining ones.

- We have our own cloud, one-cloud, to which we have been actively migrating all services for two years now. The cloud greatly simplifies the entire application lifecycle, and in an accident it provides unique tools such as:

- gracefully stopping all applications with one click;

- easy migration of applications off failed servers;

- automatic startup of an entire data center, ranked in order of service priority.

The accident described in this article was the largest since day 404. Of course, not everything went smoothly. For example, while the burning data center was unavailable, a disk failed on a server in another data center, so only one of the three replicas in a Cassandra cluster was available; as a result, 4.2% of mobile app users could not log in. Users who were already logged in kept working. In total, the post-accident review identified more than 30 problems, from trivial bugs to flaws in service architecture.
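The arithmetic behind that login failure is worth spelling out. In Cassandra, a QUORUM request needs floor(RF / 2) + 1 replicas to respond, so with three replicas it needs two. The sketch below assumes that the login path read at QUORUM and that the three replicas sat one per data center; the article states neither, so treat this as an illustration rather than a diagnosis.

```python
# Illustrative consistency-level check; assumes RF = 3 and that the login path
# reads at QUORUM (the article does not say which level is used).
def quorum(replication_factor: int) -> int:
    """Replicas that must answer a Cassandra QUORUM request: floor(RF / 2) + 1."""
    return replication_factor // 2 + 1


def available(live_replicas: int, required: int) -> bool:
    return live_replicas >= required


RF = 3
live = RF - 1 - 1  # one replica in the burning data center, one lost to the failed disk
required = {"ONE": 1, "QUORUM": quorum(RF), "ALL": RF}
for level, needed in required.items():
    status = "ok" if available(live, needed) else "fails"
    print(f"{level}: needs {needed}, live {live} -> {status}")
```

Losing the second replica is what turns a tolerable single-data-center outage into failed requests at QUORUM: with two live replicas, quorum(3) = 2 would still have been met.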

But the main difference between this accident and 404 is that while we were dealing with the aftermath of the fire, users kept chatting and making video calls in TamTam, playing games, listening to music, giving each other gifts, watching videos, TV shows, and TV channels on OK, and streaming on OK Live.

How do your accidents usually go?