I am a system administrator at FirstVDS, and this is the text of the first, introductory lecture from my short course for novice colleagues. People who have recently started doing system administration tend to run into the same set of problems, and I wrote this series of lectures to offer solutions. Some of the material is specific to hosting technical support, but on the whole it should be useful, if not to everyone, then to many. So I adapted the text of the lecture to share it here.

It does not matter what your position is called - what matters is that you actually do administration. So let's start with what a system administrator is supposed to do. The main task is to bring order, maintain order and prepare for future growth. Without a system administrator, a server descends into a mess: logs are not written, or the wrong things are written to them, resources are allocated suboptimally, the disk fills up with all kinds of garbage, and the system slowly buckles under so much chaos. Stay calm! System administrators, in your person, start solving problems and eliminating the mess!

System Administration Pillars

However, before starting to solve problems, it is worth getting acquainted with the four main pillars of administration:

- Documentation

- Templating

- Optimization

- Automation

These are the basics of the basics. If you do not build your workflow on these principles, it will be inefficient, unproductive, and will bear little resemblance to real administration. Let's look at each one separately.

Documentation

Documentation means not only reading documentation (although you cannot get anywhere without that), but also maintaining it.

How to keep records:

- Faced with a new problem that you have never seen before? Write down the main symptoms, methods of diagnosis and principles of elimination.

- Have you come up with a new elegant solution to a typical problem? Write it down so you don't have to reinvent it in a month.

- Have you been helped to deal with a question in which you did not understand anything? Write down the main points and concepts, draw a diagram for yourself.

The main idea: do not rely entirely on your own memory when learning and applying something new.

The format is entirely up to you: a note-taking system, a personal blog, a text file, a physical notebook. The main thing is that your records meet the following requirements:

- Do not make them too long. Highlight key ideas, methods, and tools. If understanding the problem requires diving into the low-level mechanics of Linux memory allocation, do not rewrite the article you learned it from - link to it.

- The entries should be understandable to you. If the line

race cond.lockup

does not immediately tell you what you meant by it - explain. Good documentation should not take half an hour to decipher.

- Search is a very good feature. If you keep a blog, add tags; if you use a physical notebook, stick on small post-its with descriptions. Documentation has little value if finding an answer in it takes as long as solving the problem from scratch.

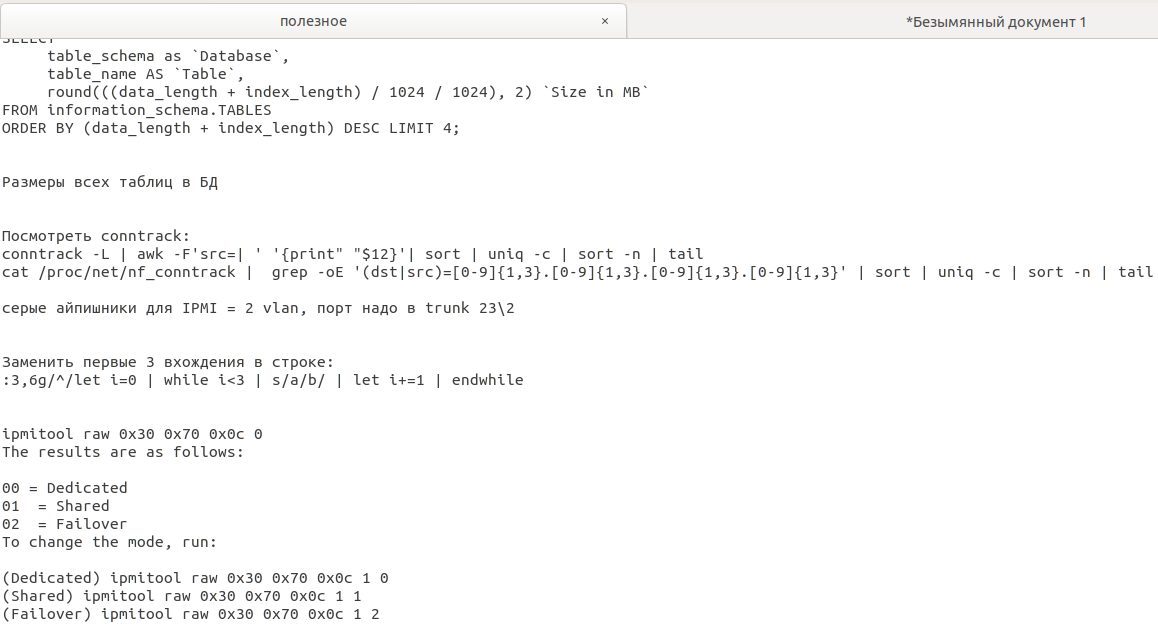

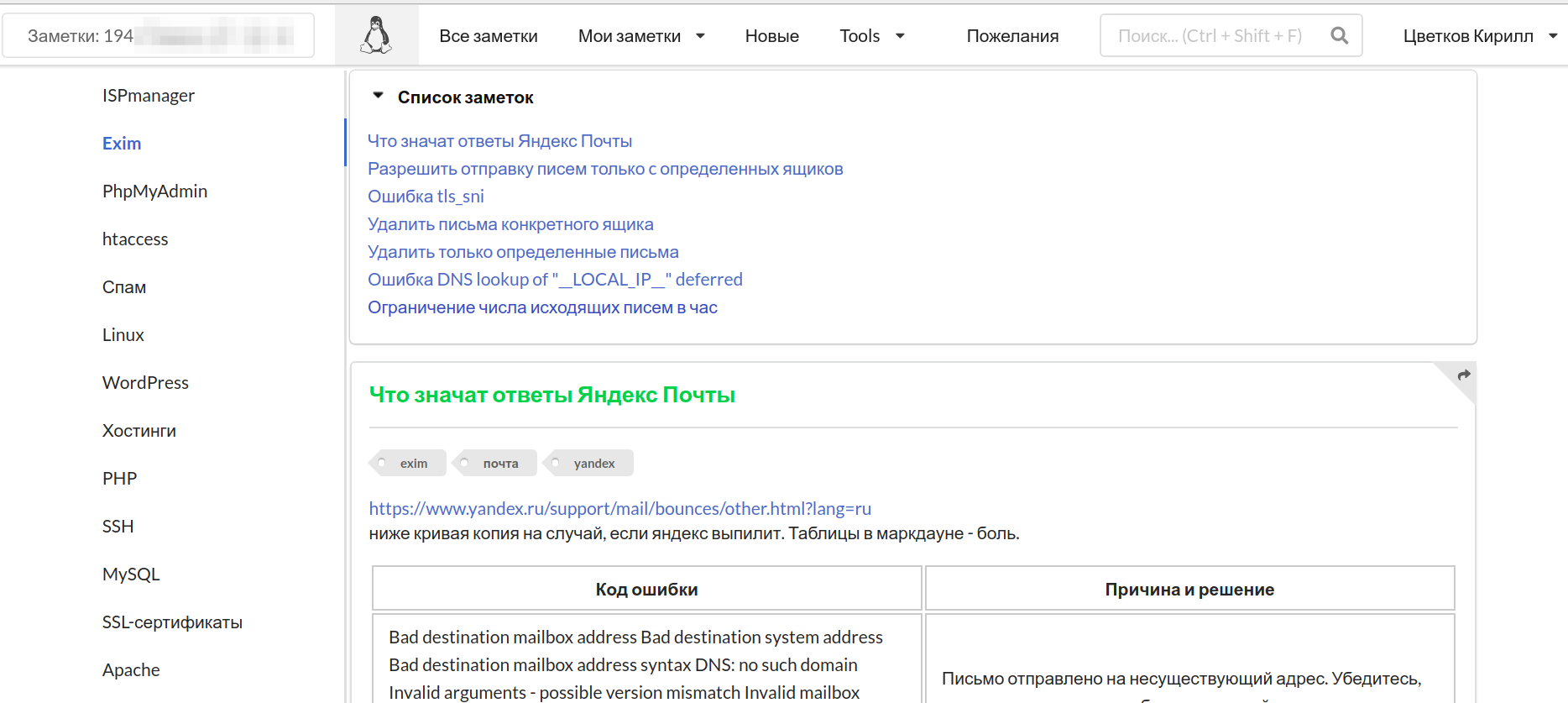

This is what documentation can look like: from primitive notepad entries (top picture) to a full-fledged multi-user knowledge base with tags, search and every possible convenience (bottom picture).

Not only will you never have to look for the same answer twice: documentation is a great help in learning new topics (take notes!), it sharpens your spider sense (the ability to diagnose a difficult problem at a glance) and adds organization to your actions. If the documentation is available to your colleagues, it lets them figure out what you set up and how when you are not around.
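As one possible low-tech setup (an illustration, not the author's own tooling), notes can be kept as plain text files with a `tags:` line, so that grep doubles as the search feature. The directory, file names, tags and note contents below are all hypothetical.

```shell
#!/bin/sh
# Hypothetical layout: one plain-text file per note, each with a "tags:" line.
notes_dir=$(mktemp -d)   # stand-in for something like ~/notes

cat > "$notes_dir/mysql-lockup.txt" <<'EOF'
tags: mysql, race-condition, lockup
Symptoms: queries hang after a deploy, load average keeps growing.
Diagnosis: two sessions are waiting on each other.
See also: link to the article that explains the mechanism.
EOF

cat > "$notes_dir/disk-full.txt" <<'EOF'
tags: disk, logs
Symptoms: services fail to start, "No space left on device" in the log.
Diagnosis: df -h, then du -sh on the suspicious directories.
EOF

# Find notes by tag: -l prints only file names, -i ignores case.
found=$(grep -l -i '^tags:.*lockup' "$notes_dir"/*.txt)
echo "$found"
```

Even a cryptic entry like "race cond.lockup" becomes findable once the note carries a tag and a couple of explanatory lines.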

Templating

Templating is the creation and use of templates. For most typical questions it is worth creating a specific action template. A standardized sequence of steps should be used to diagnose most problems. When you have repaired, installed or optimized something, check that it works against a standardized checklist.

Templating is the best way to organize your workflow. Using standard procedures for the most common problems gives you a lot of benefits. For example, checklists let you test every function that matters for operation and skip diagnostics of unimportant functionality. And standardized procedures minimize needless flailing and reduce the likelihood of error.

The first important point is that the procedures and checklists also need to be documented. If you just rely on memory, you can skip some really important test or operation and ruin everything. The second important point is that all template practices can and should be modified if the situation requires it. There are no perfect and absolutely universal templates. If there is a problem, but a template check did not reveal it, this does not mean that there is no problem. However, before undertaking the verification of some unlikely hypothetical problems, you should always do a quick template check first.
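A documented checklist can also be made executable. The following is a minimal sketch of a checklist runner; the `check` helper and the three checks are placeholders invented for illustration, and the last one fails on purpose to show how a failure is reported.

```shell
#!/bin/sh
# A tiny checklist runner: each check is a description plus a command.
# The checks themselves are placeholders; replace them with real ones.
failed=0

check() {
    desc=$1
    shift
    if "$@" >/dev/null 2>&1; then
        echo "OK    $desc"
    else
        echo "FAIL  $desc"
        failed=$((failed + 1))
    fi
}

check "root directory exists"             test -d /
check "a POSIX shell is available"        command -v sh
check "deliberately failing sample check" test -e /nonexistent/marker

echo "failed checks: $failed"
```

Because the template is just a list of `check` lines, modifying it when the situation requires is a one-line change.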

Optimization

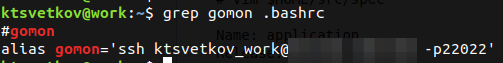

Optimization speaks for itself. The workflow should be optimized as much as possible in terms of time and effort. There are countless options: learn keyboard shortcuts, abbreviations, regular expressions and the tools available to you. Look for more practical ways to use those tools. If you run a command 100 times a day, bind it to a keyboard shortcut. If you regularly connect to the same servers, write a one-word alias that connects you there.
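A one-word alias for connecting to a server might look like the sketch below. The host names, user name and port are hypothetical; lines like these would normally go into a shell startup file such as ~/.bashrc.

```shell
# Hypothetical host and user names; put lines like these in ~/.bashrc.
alias web1='ssh admin@web1.example.com'
alias db1='ssh -p 2222 admin@db1.example.com'

# Show what is defined. In an interactive shell, typing "web1" opens the connection.
alias web1 db1
```

A `Host` entry in ~/.ssh/config gives a similar shortcut and has the advantage that scp and rsync can use it too.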

Check out the alternatives among the available tools - perhaps there is a more convenient terminal client, desktop environment, clipboard manager, browser, email client or operating system. Find out what tools your colleagues and acquaintances use - maybe they chose them for a reason. After you have picked your tools, learn how to use them: learn the keys, abbreviations, tips and tricks.

Make the best use of standard tools - coreutils, vim, regular expressions, bash. There are tons of great manuals and documentation for the last three. With their help you can quickly move from "I feel like a monkey cracking nuts with a laptop" to "I'm a monkey who uses a laptop to order a nutcracker."

Automation

Automation transfers heavy operations from our tired hands to the tireless hands of automation. If some standard procedure consists of five or so similar commands, why not wrap all of them in one file and run a single command that downloads and executes that file?

Automation itself is 80% writing and optimizing your own tools (and another 20% trying to get them to work as they should). It can be just an advanced one-liner or a huge all-powerful tool with a web interface and an API. The main criterion is that creating a tool should take no more time and effort than the tool will save you. If you spend five hours writing a script you will never need again, for a task that would take an hour or two by hand, that is very poor workflow optimization. You can spend five hours on a tool only if the number and type of tasks, and the time available, allow it, which does not happen often.

Automation does not necessarily mean writing complete scripts. For example, to create a batch of similar objects from a list, a deft one-liner is enough to do automatically what you would otherwise do by hand, switching between windows with heaps of copy-paste.
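Such a one-liner could look like the sketch below, which creates one directory with an empty placeholder config per entry in a list. The list file, site names and directory layout are made up for the example, and everything happens inside a temporary directory.

```shell
#!/bin/sh
# Hypothetical input: a file with one site name per line.
workdir=$(mktemp -d)
printf '%s\n' shop.example.com blog.example.com wiki.example.com > "$workdir/sites.txt"

# The one-liner itself: one directory with an empty placeholder config per list entry.
while read -r site; do mkdir -p "$workdir/www/$site" && : > "$workdir/www/$site/site.conf"; done < "$workdir/sites.txt"

ls "$workdir/www"
```

The same `while read` skeleton works for any "do X for every line of a list" task; only the body between `do` and `done` changes.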

Actually, if you build the administration process on these four pillars, you can quickly increase your efficiency, productivity and qualifications. However, this list needs to be supplemented with one more item, without which work in IT is almost impossible - self-education.

Sysadmin self-education

To be even a little competent in this field, you need to keep studying and learning new things all the time. If you have not the slightest desire to run into the unknown and figure it out, you will go stale very quickly. New solutions, technologies and methods appear in IT constantly, and if you do not study them at least superficially, you are on the road to losing. Many areas of information technology rest on a very complex and extensive foundation. Take networking, for example. Networks and the Internet are everywhere, you encounter them every day, but if you dig into the technologies behind them, you will find a huge and very complex discipline, the study of which is never a walk in the park.

I did not include this item in the list because it is key for IT in general, not just for system administration. Naturally, you cannot learn absolutely everything at once - you simply do not have the time. So in self-education you should keep the necessary levels of abstraction in mind.

You do not need to learn right away how the internal memory management of every individual utility works and how it interacts with Linux memory management, but it helps to know, schematically, what RAM is and why it is needed. You do not need to know how TCP and UDP headers differ structurally, but it is good to understand the main differences between the protocols. You do not need to study signal attenuation in optics, but it is good to know why real losses are always inherited by the nodes further along the route. There is nothing wrong with knowing how things work at a certain level of abstraction, and you do not have to take apart every level down to where no abstraction is left (you would simply go crazy).

However, reasoning about your own field at the level of “well, it's this thing that lets you display websites” is not great either. The following lectures will give an overview of the main areas a system administrator has to deal with, at lower levels of abstraction. I will try to limit the material covered to the minimum level of abstraction needed.

The 10 Commandments of System Administration

So, we have covered the four main pillars and the foundation. Can we start solving problems now? Not yet. Before that, it is advisable to get familiar with the so-called "best practices" and the rules of good form. Without them, you are likely to do more harm than good. So, let's begin:

- Some of my colleagues believe that the very first rule is “do no harm”. I tend to disagree. If you try never to do harm, you cannot do anything - too many actions are potentially destructive. I consider the most important rule to be “make a backup”. Even if you do break something, you can always roll back, and things will not be so bad.

Back up whenever time and space allow. Back up whatever you are going to change and whatever you risk losing through a potentially destructive action. It is advisable to check the backup for integrity and for the presence of all the necessary data. Do not delete a backup right after you have checked everything unless you need to free up disk space. If space is tight, move the backup to your personal server and delete it in a week.

- The second most important rule (which I myself often break) is “don't hide it”. If you made a backup, write down where, so that your colleagues do not have to look for it. If you did something non-obvious or complex, write it down: you will go home, but the problem may recur or hit someone else, and your solution will be found by keywords. Even if you are doing something you know well, your colleagues may not know it.

- The third rule needs no explanation: "never do anything whose consequences you do not know, cannot imagine or do not understand". Do not copy commands from the Internet if you do not know what they do; call man and work through them first. Do not use ready-made solutions if you cannot understand what they do. Keep the execution of obfuscated code to a minimum. If there is no time to figure it out, you are doing something wrong and should read the next item.

- "Test it". New scripts, tools, one-liners and commands should be checked in a controlled environment, not on the client's machine, if there is even minimal potential for destructive effects. Even if you backed everything up (and you did), downtime is not the coolest thing. Set up a separate server / virtual machine / chroot for this and test there. Nothing broke? Then you can run it in production.

- "Control". Minimize all operations that you do not control. One broken dependency in a package can drag half the system along with it, and the -y flag on yum remove gives you a chance to practice rebuilding a system from scratch. If an action has no controllable alternative, see the next item and keep a backup ready.

- "Check it". Check the consequences of your actions and whether you need to roll back to the backup. Check whether the problem is really resolved. Check whether the error still reproduces and under what conditions. Check what your actions could have broken. In our work trusting is superfluous, but verifying never is.

- "Communicate". If you cannot solve a problem, ask your colleagues whether they have encountered it. Want to apply a controversial solution? Find out what your colleagues think. Perhaps they will offer a better one. Not confident in your actions? Discuss them with colleagues. Even if this is your area of expertise, a fresh look at the situation can clarify a lot. Do not be ashamed of your own ignorance. It is better to ask a stupid question, look like a fool and get an answer, than not to ask, not get an answer and be left out in the cold.

- “Do not refuse help without a reason”. This item is the flip side of the previous one. If you are asked a stupid question, clarify and explain. If you are asked for the impossible, explain that it is impossible and why, and offer alternatives. If you have no time (really no time, not no desire), say that you have an urgent issue / a lot of work but will look into it later. If your colleagues have no urgent tasks, suggest asking them and delegate the question.

- "Give feedback". Has a colleague started using a new technique or a new script, and you are running into negative consequences of that decision? Report it. Perhaps the problem can be solved with three lines of code or five minutes of refining the method. Stumbled upon a bug in software? Report it. If it can be reproduced, or does not need to be reproduced, it will most likely be fixed. Voice wishes, suggestions and constructive criticism, and bring questions up for discussion if they seem relevant.

- “Ask for feedback”. We are all imperfect, as are our decisions, and the best way to verify a decision is to bring it up for discussion. Optimized something for a client? Ask them to keep an eye on how it runs; maybe the system's “bottleneck” is not where you were looking. Wrote a helper script? Show it to your colleagues; maybe they will find a way to improve it.
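The first two commandments, "make a backup" and "don't hide it", can be sketched roughly as follows. This is only an illustration: the config file, its contents and the CHANGES.log name are invented, and everything happens inside a temporary directory rather than on a real system.

```shell
#!/bin/sh
# Sketch: back up a file before editing it, verify the copy, and record where it is.
# All paths are stand-ins created in a temporary directory.
workdir=$(mktemp -d)
conf="$workdir/app.conf"
printf 'max_connections = 100\n' > "$conf"

backup="$conf.bak.$(date +%Y%m%d-%H%M%S)"
cp -p "$conf" "$backup"              # -p keeps mode and timestamps

# Verify the backup is byte-identical before touching the original.
if cmp -s "$conf" "$backup"; then
    echo "backup verified: $backup"
else
    echo "backup differs from original, aborting" >&2
    exit 1
fi

# "Don't hide it": leave a note where colleagues can find the backup.
echo "$(date) backup of app.conf stored at $backup" >> "$workdir/CHANGES.log"

# The potentially destructive change happens only now.
sed 's/100/200/' "$conf" > "$conf.new" && mv "$conf.new" "$conf"
```

If the change turns out to be wrong, rolling back is a single `cp` from the path recorded in the log.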

If you apply these practices consistently, most problems stop being problems: you will not only reduce the number of your own mistakes and screw-ups to a minimum, but also have the means to correct mistakes (in the form of backups and colleagues who will advise you to make a backup). From here on it is only technical details, which, as you know, is where the devil lies.

The main tools, which you will work with more than 50% of the time, are grep and vim. What could be simpler? Text search and text editing. However, grep and vim are powerful multitools that let you search and edit text efficiently. While Windows Notepad simply lets you write or delete a line, in vim you can do almost anything with text. Do not believe it? Run the vimtutor command in a terminal and start learning. As for grep, its main strength is regular expressions. Yes, the tool itself lets you set search conditions and format output quite flexibly, but without regular expressions it does not make much sense. And you need to know regular expressions! At least at a basic level. To begin with, I would advise watching this video, which covers the very basics of regular expressions and their use together with grep. Oh yes, and when you combine them with vim, you get

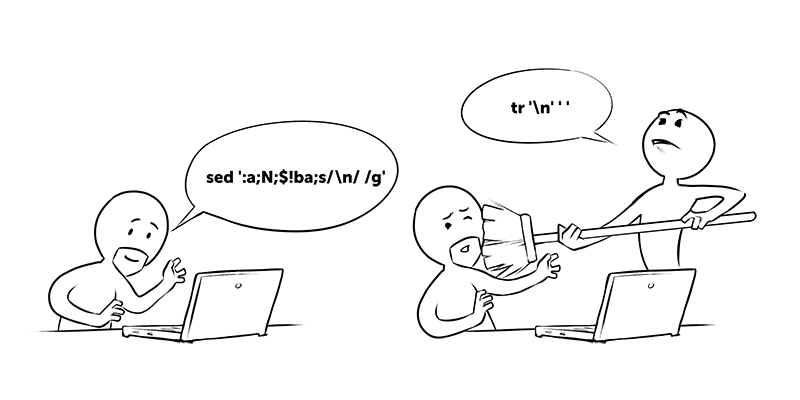
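As a small taste of grep with regular expressions, here is a sketch that counts failed logins and extracts IP addresses from a log. The log lines are made up for the example, and the `-o` option is an extension found in GNU and BSD grep rather than part of POSIX.

```shell
#!/bin/sh
# Made-up log lines for illustration.
log=$(mktemp)
cat > "$log" <<'EOF'
2024-01-10 12:00:01 sshd: Failed password for root from 203.0.113.5
2024-01-10 12:00:07 sshd: Accepted password for admin from 198.51.100.7
2024-01-10 12:01:13 sshd: Failed password for invalid user test from 203.0.113.5
2024-01-10 12:02:40 cron: job finished
EOF

# -E enables extended regular expressions, -c counts matching lines.
failed=$(grep -E -c 'Failed password for (invalid user )?[a-z]+ from' "$log")
echo "failed logins: $failed"

# -o prints only the matched part: here, anything shaped like an IPv4 address.
ips=$(grep -E -o '([0-9]{1,3}\.){3}[0-9]{1,3}' "$log" | sort -u)
echo "$ips"
```

The optional group `(invalid user )?` is what lets one pattern cover both forms of the "Failed password" line.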

Of the remaining 50%, 40% is coreutils. You can find the list of coreutils on Wikipedia and the manual for the whole list on the GNU website. What this set does not cover is in the POSIX utilities. You do not have to memorize all of it with every option, but it is useful to know at least roughly what the basic tools can do. There is no need to reinvent the wheel out of crutches. Once I had to replace line breaks with spaces in the output of some utility, and my sick brain gave birth to a construction of the form

sed ':a;N;$!ba;s/\n/ /g'

A colleague who came over chased me away from the console with a broom and then solved the problem by writing

tr '\n' ' '
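To see the two commands side by side, the sketch below feeds both the same three-line input. The sample input is arbitrary, and the sed form assumes GNU sed.

```shell
#!/bin/sh
# Both commands join lines with spaces; the sed form assumes GNU sed.
sed_out=$(printf 'a\nb\nc\n' | sed ':a;N;$!ba;s/\n/ /g')
tr_out=$(printf 'a\nb\nc\n' | tr '\n' ' ')

# Brackets make the trailing space visible.
echo "sed: [$sed_out]"
echo "tr:  [$tr_out]"
```

One small difference is worth knowing: tr also turns the final newline into a space, so its result ends with a trailing space, while the sed version leaves the last newline alone.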

I would advise remembering roughly what each tool does and the options of the most frequently used commands; for everything else there is man. Feel free to call man whenever you are in doubt. And be sure to read the man page for man itself - it contains important information about what you will find there.

Knowing these tools, you can effectively solve a significant share of the problems you will meet in practice. In the following lectures we will look at when to apply these tools and at the structure of the main services and applications they are applied to.

This was Kirill Tsvetkov, system administrator at FirstVDS.