BGL and what it is eaten with

The BGL template library is probably known to any developer who has encountered graph tasks. Appearing in Boost 1.18.1 in 2000, she immediately earned approving reviews from such classics of the genre as Alexander Stepanov. The library guide, compiled by Jeremy Sik, Lai-Kwan Lee, and Andrew Lamsdane, was published in Russian in 2006 by Peter (original - Jeremy G. Siek, Lie-Quan Lee and Andrew Lumsdaine, “The Boost Graph Library”, 2001 , Addison-Wesley). The library was intensively updated and developed almost until the end of 2013 (Boost 1.55.0). In particular, in 2005, the announcement of its distributed version (PBGL) appeared, which was included in Boost from version 1.40 in 2009 and to this day remains a kind of de facto standard for graph computing on high-performance clusters, in any case, in the academic world. As far as the history of commits can be judged, until 2005, the main developer of the library was Jeremy Sick, after 2005 - Douglas Gregor, and in general at various times a considerable amount of diverse people worked on the library. The publications devoted to it have repeatedly appeared on habr.com: first of all, a series of articles by Vadim Androsov should be noted: [ 1 , 2 , 3 ]. Thus, in principle, a good and diverse literature is devoted to the library, but its own documentation, also, generally speaking, quite extensive, suffers somewhat due to the fact that:

- Its table of contents and root sections, which claim to provide an exhaustive list of key entities, have not changed since 2001. For example, the author of these lines, who naively believed that:

The BGL currently provides two graph classes and an edge list adapter:

, after some time I was surprised to find the compressed_sparse_row_graph (sparse matrix) representation implemented in 2005. A similar story took place with the Bron-Kerbosch algorithm. Do not believe the table of contents, use a direct search in the header files;

adjacency_list

adjacency_matrix

edge_list

- There is no single commented list of the library’s internal categories (container_category, parallel_edge_traits, iterator_stability, etc., etc.) needed to implement your own views. Problems with understanding what is happening overtake, apparently, all users of the library who want to dig deeper, which leads to the appearance of a “kind of working code”, bringing it to a completely complete state takes a lot of time and effort: see, for example, a typical discussion .

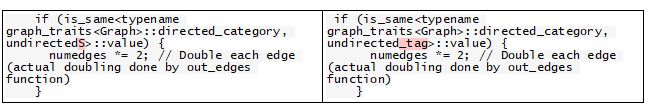

The number of categories and various selectors, including those that are confusingly similar, is so great that the authors themselves sometimes get confused in them. For example, in the constructors of the compressed_sparse_row_graph already mentioned above, in the current version there is a systematic error leading to crashes when trying to copy an undirected adjacency list:

It may be noted here on occasion that the full testing of such a flexible mechanism is a separate problem, since it is accompanied by a combinatorial explosion of the number of possible substitutions.

It should be noted with regret that at present the main developers have apparently lost interest in further work on the library, and for the last six years it, by no means having exhausted its development potential and not even completely freeing itself from internal inconsistencies and direct errors, is in free flight. The plans voiced in the region of 2011 to significantly expand the set of methods and cover new areas of graph theory (including by adding internal graph partitioning support to the ability to read METIS format) remained unfulfilled. It also seems that the library could benefit a lot (at least in terms of readability) from the widespread use of new products that became standard after 2011.

Thus, when looking from 2019, the issues of choosing a reference library for graph applications do not look as unambiguous as we would like, and over the past 5 years, the uncertainty has increased rather than decreased.

This situation causes some sadness, because the creation of a universal mechanism, like BGL, is in itself a kind of intellectual feat, both in terms of the power of the approach and the richness of the arsenal of implemented universal methods (a good one and a half hundred single-threaded and a couple dozen distributed) the library, as far as the author of these lines is known, still has no equal.

At the moment, only this library fundamentally allows, without loss of performance, imposing strict agreements on the presentation of data and loss of control over the internal mechanisms of the library itself, to completely separate graph algorithms and graph representations, additionally making the latter completely independent of the presentation of metadata associated with edges and vertices ( which, in principle, is obviously the most correct way of doing things).

The word “fundamentally” is used here for a reason. Considering the specific situation on the example of the long-suffering compressed_sparse_row_graph class already mentioned above, we can note, for example, the following deviations from high standards:

- The [] operator for the adjacency list and the sparse matrix handle Internal and Bundled Properties differently: the first returns only the external properties (the internal ones are accessible only using property_map), the second returns the framing property structure containing the general list of properties.

- The get function to get the index of the edge using boost :: property_map <compressed_sparse_row_graph, boost :: edge_index_t> :: type fell into boost :: detail, and not into boost, as in all other cases.

Finally, in the compressed_sparse_row_graph template, the specialization for the undirected graph (boost :: undirectedS) remained unfulfilled.

In this regard, when using the edge_index property (edge serial number), additional difficulties arise due to the fact that for the adjacency list this property must be explicitly set as internal and as such can be changed arbitrarily, but for an undirected graph its value does not depend on the direction where the rib passes. For a sparse matrix (always directional), it is a built-in constant property_map of a special form (calculated as an index in an array of edges). Accordingly, the values for oncoming edges (representing an undirected graph) cannot change and will always be different.

All these discrepancies lead to the impossibility of "simply replacing the graph representation with an equivalent one" when calling algorithmic functions, which significantly undermines the main advantage of the library. In practice, in such cases, either an excessive specialization of the code is required, or its processing to exclude elements with different behavior, or such adjustment of the graph templates so that they “behave identically” with different definitions of attributes, or, finally, removal of individual files from the library and creation "Personal boost version."

Additionally, the following, not so significant, inconveniences can be noted:

- The dimensions of the internal descriptors of graph representations have a significant impact on the memory consumption needed to store the graph, and sometimes affect the performance of the algorithms.

Some views (the same compressed_sparse_row_graph) allow you to control these dimensions. Others (adjacency_list) do not have such parameters and always use 64-bit integers (usually redundant), which cannot be replaced without modifying the code;

- Despite the fact that the authors of the library provided for very, very much, some obviously necessary primitives were not included in the library. For example, there is no function like reverse_edge that performs edge inversion.

The implementation of such functions, of course, depends on the graph representation: in this case, it can be implemented by a trivial exchange of elements of a pair, more or less efficient search by container, or not at all. It is difficult for the end user to understand all this variety of options, especially since, according to the ideology of the library, the internal members of descriptors should not be of interest to him.

- Likewise, some far from worthless scripts dropped out of the library. For example, you can define edge predicates that use filtered_graph to turn an undirected graph into a directed graph, but there is no way to bring this transformation to the attention of the library. Accordingly, regular algorithms for directed graphs will not compile with such an object, and algorithms for undirected graphs will not work correctly with it.

Somewhere in the neighborhood is the topic of support for technically non-directional graphs that have a service direction marker on the edges. However, increased attention to such a representation may be due to the particular nature of the tasks solved by the author, and the presence of wide interest in supporting such objects is not obvious.

- As for the reverse_edge function, taken as an example above, there is not at all an incredible option that the desired function is present somewhere in the bowels of the library, but for some reason received an unobvious name. This leads to the following problem, which at first glance is not serious, but significantly slows down work with complex template libraries (not only BGL, although it is clearly among the leaders by this criterion): work with extensive systems of implicitly interconnected functions without explicit parameter typing and with the non-obvious semantics of use (often the less transparent the more well-thought-out) are physically difficult, and existing development environments do not provide any support for this in the developer:

In fact, automatic assistants:

- Designed primarily for OOP support, when a set of functions is bound to the object on the right according to its type. With global functions that can be to the left of a type (much less a set of types) they help much worse even if all types are known.

- They are ridiculously not even able to work with simple templates. The version of the visual assistant used by the author, having in front of him the definition of a template class with default parameters, suggests specifying a “test substitution” in order to be able to generate a hint for the class. If you meet her, absolutely nothing happens.

- Moreover, they are less able to understand metaprogram qualifiers, even the simplest ones, such as enable_if.

- About the typical scenario: “we are inside a template function that is called from an indefinite amount of an indefinite length of chains of other functions, including template ones”, it is impossible to speak without tears. In this case, vim really remains the programmer's best friend.

Another aspect of the same situation can be illustrated using the first line of the code fragment shown in the previous figure. The reader is invited to complete the “boost current time” vs “CRT current time” queries and compare the results. Yes, boost :: date_time (now partially moved to std) makes it possible to do a lot of complex things correctly, while CRT allows you to do several trivial operations incorrectly, but in ubiquitous simple everyday situations, CRT is more convenient from all points of view, and polynomial constructions of the form posix_time :: second_clock :: local_time (a gentle example) tend to turn into hieroglyphs wandering in the program. Deprive the developer of access to the personal library of such hieroglyphs and the development speed will go to zero .

Boost :: string_algo makes it possible to do anything with strings, but, honestly, each not quite trivial operation is accompanied by a session of re-reading documentation to refresh the general logic of the library, the names of predicates, as well as a separate exercise to find out the compatibility of parameters. A similar situation occurs with tokenization operations in boost :: regexp, with flawless internal logic of the latter.

If this situation occurs with the most commonly used libraries, it is not surprising that BGL, as a more specialized library, in which, for example, there are make_property_map_function and make_function_property_map functions that are not related to each other, as well as a sacramental get function, reloaded to any the number of arguments of any type gives rise to the same problems, but in a hypertrophied form. Yes, any task can be solved by the get call chain, but, alas, not every get chain solves this problem.

Reading such a code can be easy and pleasant, it may even look like a synopsis of a formally written algorithm in a natural language, but when writing it, the impossibility of replacing words with synonyms, etc. manifestations of rigidity, is uncharacteristic for a real one.

- In general order, one cannot but repeat the banal, but not becoming less true, observation that metaprogramming in C ++ is still literally based on side effects of language tools whose original purpose was different, and even the simplest ideas on the basis of As a result, metalanguage is difficult to express and read, and linking the template code to the archaic system of included files does not facilitate the life of the developer and does not reduce the amount of code processed by the compiler.

(On the other hand, regularly occurring updates of boost and std bring a lot of not quite trivial and often extremely useful constructs and unexpected solutions, which really allow writing more clear and compact code at lower costs. However, the stream of new products is so wide, unequal and poorly structured that the most important additions to the standard library, even such obvious ones as those mentioned below options / apply_visitor or any, if the conceptual advantages of their application in the context of a specific project are not relevant For self-evident, without the help of a happy event, they can fall out of focus for a long time, if you do not spend a significant portion of working time directly thoughtfully tracking new products, studying non-trivial examples of their use and mental attempts to apply them to an existing code. cope with this problem - to keep for every five practicing C ++ programmers one C ++ - a theoretician who is only concerned with priority issues of new products, their implementation tions in the project and the selective education practitioners. Conclusion:do not start C ++ - projects with fewer developers).

- Finally, objectively the most serious problem that occurs when working with BGL boilerplate code . Suppose you are using a template algorithm that traverses a graph and takes a representation of graph G as an argument. In a typical case, this representation depends on the filters superimposed on the vertices and edges , and weight scheme . To work with filtered graphs, BGL offers the filter_graph template class mentioned above, the way of attaching the weight scheme to it is at the user's discretion. Functors representing , and may include at least the following views:

- Directly a wrapper for a function representing a weighting scheme and predicates representing filters (slowly, without initialization loss);

- Caches over these wrappers, mapping edge / node descriptors to edge / node indices, addressing the bitmap and array of values (without initialization loss, with a gradual increase in speed as used);

- Direct mapping of node / edge descriptors to filled value arrays (requires initialization, but can be built on the basis of the previous representation; the speed reaches its maximum).

Thus, if this algorithm were written in the traditional style, three selectors with at least three branches in each would appear in its body (and the need to adjust the body when new representations appear). Since each branching in the body of the algorithm that executes when passing through the graph a huge number of times results in noticeable loss of time, the desire to avoid these losses while maintaining the code of the same traditional style can lead to 27+ implementations of the algorithm for various combinations of representations.

The metaprogram style should save us from these troubles, allowing us to support one metafunction describing the algorithm that implicitly generates all the necessary implementations (as well as, perhaps, some, and possibly quite a lot of unnecessary ones, if the runtime structures of the code do not de facto generate some type combinations, which with a combinatorial explosion of options it can become a problem ) that do not contain encumbrances in the form of additional internal branches.

The operability of this system, as is known, strongly depends on the compiler’s ability to perform deep substitution of inline functions and to eliminate redundant variables, which are fully disclosed only when compiled with the –O2 switch. Without the use of full optimization, the metacode loses the traditional code in the strongest way due to the dramatic increase in the number of function calls (the characteristic speed ratio between optimized and non-optimized assemblies varies in such cases between 1: 3 and 1: 5, which often leads to the need to debug directly on the optimized assembly - and this is pleasure, of course, not for everybody ).

When performing the algorithm, the use of each version of the functors has a certain price, which is easily measurable. At the same time, using the fastest version should essentially be equivalent to directly accessing the array. Suppose we compare a traditional (hard-typed) implementation of an algorithm with a metaprogram implementation that receives an appropriate set of “fast” and “slow” versions of functors. Based on the foregoing, the operating time should not experience significant changes. In practice, the system demonstrates a strange elasticity: if the runtime of the "traditional" implementation is really well estimated by adding up the "costs" of the functors used, then the execution time of the metacode does not change much when one or even two functors of three of them are "fast" versions, and only with full replacing all components, the time changes abruptly and really turns out to be comparable with the time of the “traditional” implementation.

It will not be superfluous to note that the profiler in this situation is more than useless: it is misleading, stimulating 100% useless optimization of random user functions from the number of often called, although delays obviously do not occur in them, but in the "invisible" connection code. (The solution, based on the foregoing, should rather be some kind of special decomposition of the original template function, to please the compiler, which, regardless of the success of such manipulations, is wildness).

- A template code of this kind is characterized by the presence of an input layer preceding the actual calculations, in which the types that best fit the call parameters are selected. At this point in C ++, apparently, there is still a gap, which would not be harmful to fill up with several kilograms of syntactic sugar.

Uncomplicatedly written code corresponding to this case looks something like this:

void type_selector_fun(type_a a, type_b b, ...) { if (condition_1(a, b, ...)) { auto arg = get_type_1_obj(a, b, ...); run_calc(arg, a, b, ...); } else if (condition_1(a, b, ...)) { auto arg = get_type_2_obj(a, b, ...); run_calc(arg, a, b, ...); } else ... }

It can be rewritten somewhat more compactly using variant <...> in approximately the following form:

void type_selector_fun(type_a a, type_b b, ...) { variant<type_1, type_2, ...> arg; if (condition_1(a, b, ...)) { arg = get_type_1_obj(a, b, ...); } else if ... ... apply_visitor([&](auto arg_){run_calc(arg_, a, b, ...); }, arg); }

The disadvantage of this form of writing is the need for explicit enumeration of types type_1, type_2, ... in the variant declaration. These types can be cumbersome, recording using declval / result_of_t can be no less cumbersome.

When using any, there is no need to list types, but there is no way to get an analog apply_visitor.

It begs the use of some template function make_variant, which allows you to write code similar to the following:

auto arg = make_variant ( bind(condition_1, a, b, ...), bind(get_type_1_obj, a, b, ...), bind(condition_2, a, b, ...), bind(get_type_2_obj, a, b, ...), ... );

but the cure doesn’t look better than the disease.

In general, there is a typical situation for metaprogramming in C ++, when to express a very simple idea you have to use a whole arsenal of auxiliary tools with a result that is not very satisfactory in terms of readability and ease of recording. Essentially, I would like to be able to write something like the following:

// variant<...> // , : type_1, type_2 etc. variant<auto...> get_type_obj(typa_a a, type_b b, ...) { if (condition_1(a, b, ...)) { return get_type_1_obj(a, b, ...); } else if (condition_2(a, b, ...)) { return get_type_2_obj(a, b, ...); } else ... }

or even:

select_value_type(arg) { if (condition_1(a, b, ...)) { arg = get_type_1_obj(a, b, ...); } else ... ... } run_calc(arg, a, b, …);

The latter option, although it is completely knocked out of the C ++ style, looks the most practical, since there can be more than one arg variable for which the type is selected, and there is no reason to anticipate the logic of their construction .

- The flip side of this situation is the use of auxiliary structures (for example, caching) that implement a script that deserves the name of a "template variable", but differs from the C ++ 14 standard extension of the same name.

The corresponding code may look something like this:

struct CacheHolder { boost::variant< container<T1>, container<T2>, // ... container<TN>> ct; template<typename T> struct result_type_selector { typedef typename if_c<is_compatible<T, T1>::value, T1, if_c<is_compatible<T, T2>::value, T2, // ... if_c<is_compatible<T, TN>::value, TN, std::decay_t<T>>>>::type type; }; template<typename T> auto get() const -> const container<typename result_type_selector<T>::type>& { return boost::get<container<typename result_type_selector<T>::type>>(ct); } };

Here, as above, long constructions express the simple idea of accessing a variable representing the cache by a specific name, regardless of the dimension of the cached value (transparently passing through the calling code).

For brevity, the code is given for the case when only one type can be active, but in practice the situation is more common when several containers can exist simultaneously (it can be easily implemented in the same style using tuple and optional).

The implementation of the get <...> function assumes that the calling code has some idea of what kind of cached value it wants to access (for example, integer or floating point).

No less common is the situation where the exact type value is not important to the caller. In this case, the select_value_type / apply_visitor script from the previous paragraph is reproduced (adjusted for the possible multiplicity of values, which implies viewing the types in descending order of priority).

- Until now, there has been virtually no mention of PBGL in this text. This is explained by the author’s disappearingly small experience of working with this part of the library (in connection with which the author himself treats with a certain skepticism everything that is written below in this paragraph, and calls on others to the same). In fact, such an experiment boils down to several experiments, on the same type of search problems, demonstrating on practical data the loss of a distributed version to a local solution 3-5 times in memory and 15-20 times in overall performance (the origin of this frightening figure is explained here and additionally commented on in the following paragraphs) . Given the greater complexity of working with distributed structures, the choice in favor of the local version was self-evident in such a situation.

Let us explain the mechanics of PBGL operation using a typical example of the delta-walking algorithm. In this parallel version of Dijkstra’s algorithm, the priority queue is replaced with an array of “buckets”. Elements that fall into one "bucket" are processed in parallel. In its original form, delta pacing is a typical algorithm for shared memory systems.

In the distributed version, the following happens: in PBGL, when loading, the graph is scattered between the processes, and each process has a continuous range of vertex numbers. Thus, by the global vertex number, it is easy to know which process it belongs to. Accordingly, each process at each turn of the algorithm stores a part of the “bucket” containing the vertices belonging to this process. All processes simultaneously select and process the vertices from their parts of the "buckets" one at a time, while sending out messages about the need to update the following "buckets" to processes that own neighboring vertices. It is easy to see that, ceteris paribus, an increase in the number of processes leads to an increase in the number of messages they send. As a result, the execution time of the algorithm can not only not decrease, but even increase. In particular, the launch of several MPI processes to solve this problem on the same physical machine with a certain probability will only lead to an increase in the total processor load without any time gain.

It should be noted that delta pacing is the fastest distributed search algorithm (out of three supported by the library).

Thus, if the graph is not previously prepared, it should be divided into blocks of maximum size, one block per physical machine. By preliminary preparation, we mean here renumbering the vertices of the graph so that the continuous ranges of numbers that PBGL uses, if possible, correspond to loosely connected subgraphs. Packages such as METIS, paraMETIS and Zoltan are used for these purposes. Working with dynamic graphs in this mode is difficult.

In general, according to the results of the experiments described, the author had the impression that normal operation of the PBGL cluster is possible only with special communication equipment, and it makes sense to use machines with a minimum number of cores and maximum thread performance as nodes of such a cluster. The authors of Trinity in their article argue that their distributed storage works much more efficiently - the author finds it difficult to comment on this statement, but, given the above circumstances, finds it quite possible: the PBGL architecture bears a clear seal of the time when multi-core machines have not yet received wide distribution.

PBGL also shares the problems of the single-threaded version: some code synchronization, documentation and examples, aggravated by the greater complexity of the system and fewer users willing to share useful experience.

BGL and other animals

Taking into account a rather long list of specific complaints, it would not be inappropriate to ask: can the author recommend BGL for new projects in 2019. The answer will be this: the author believes that libraries of this style and applications based on them must have a future . As for the choice of a reference library for a particular project, we should seriously consider the instrumentation, not losing sight of the problems listed above. The answer obviously depends on many circumstances, including but not limited to those listed in the following paragraphs:

- Whether working with graphs in a project is the basis of functionality or an optional task;

- Can a project get an advantage through the use of multiple representations, or is work with hard-typed algorithms quite sufficient for it;

- The most beneficial type of concurrency for the project;

- Organizational nuances: the desire for metaprogramming in C ++ among employees (especially mathematics programmers), etc.

Probably, ceteris paribus, the use of BGL can be justified either in the case of a very small one-time use (to extrude or copy a working piece of code and forget), or for a large system for which increased flexibility will pay for heavy entry and other costs over time. In other cases, it makes sense to carefully study other options.

As for the possible alternatives, their list includes at least the following items :

| Title | Lemon |

| Type of library | C ++ Template Header |

| URL | lemon.cs.elte.hu |

| Distributed | no |

| Multithreaded | no |

| OS | any |

| Latest version | 2014

Distributed by the archive |

| Stackoverflow mentions | ~ 100 (36 in the [lemon-graph-library] section) |

| Comment | According to some reports, in single-threaded mode significantly exceeds BGL in speed .

The authors' attitude toward multithreading is evident from the following dialogue . In view of the above in the section on PBGL, this position is doubtful. |

| Title | SNAP |

| Type of library | C ++ |

| URL | github.com/snap-stanford/snap.git |

| Distributed | no |

| Multithreaded | yes (part of the methods) |

| OS | Linux, Mac, Cygwin |

| Latest version | 2018

The repository is being actively updated. |

| Stackoverflow mentions | <50 |

| Comment | One of the largest (over 10 MB of code) network analysis libraries (Network Ananlysis), which has been actively developing for many years. In a strange way, it is comparatively ignored by public attention.

See the description of the ideology of the system . The attitude to the implementation of parallel methods expressed on page 12 is close to the author of this article. Under the operating conditions of a typical modern machine park, it is the most natural. The paradigm shift took place in conditional 2011, to which the LEMON declaration above refers. |

| Title | MTGL |

| Type of library | C ++ Template Header |

| URL | software.sandia.gov/svn/public/mtgl/trunk |

| Distributed | ? |

| Multithreaded | Yes |

| OS | any |

| Latest version | ? |

| Stackoverflow mentions | 3 |

| Comment | Mysterious member of the meeting. The library was actively developing between 2005 and 2012. Sources were uploaded in 2017. Status unclear, mention of the project from the Sandia website removed. Ideologically inspired by the same BGL, but the code is completely independent. The total volume of source codes (including numerous tests and examples) reaches 17 Mb. The code looks well designed. See description . |

| Title | igraph |

| Type of library | C |

| URL | github.com/igraph/igraph.git |

| Distributed | no |

| Multithreaded | no |

| OS | any? |

| Latest version | 2014

The repository is being actively updated. |

| Stackoverflow mentions | About 100 in the [igraph] [c ++] and [igraph] [c] sections, and more than 500 in total (for all languages)

|

| Comment | Another library of network analysis, apparently, is very popular (mainly among pythonists, etc.). Description here . |

| Title | graph-tool |

| Type of library | C ++ python lib |

| URL | git.skewed.de/count0/graph-tool.git |

| Distributed | no |

| Multithreaded | Yes |

| OS | Judging by the use of autoconf - * nix only, but a simple adaptation to other systems is likely |

| Latest version | 2019 |

| Stackoverflow mentions | <20 |

| Comment | Another actively developing network analysis library with a long history of commits that directly uses BGL (in the local patched version).

See performance comparison table. |

| Title | LEDA |

| Type of library | C ++ |

| URL | www.algorithmic-solutions.com/index.php/products/leda-for-c |

| Distributed | no |

| Multithreaded | ? |

| OS | any |

| Latest version | ? |

| Stackoverflow mentions | ~ 10 |

| Comment | Commercial license. A large (and, one might say, old) library for scientific and technological computing, including a graph section. Apparently, it relies on its own infrastructure, and not on stl / boost, and in this sense is archaic. |

Of particular general interest is the question of the classification of various software products oriented to work with graphs. Their diversity, not to mention the number, is very large. Without pretending to complete (and even formally correct) classification, you can try, nevertheless, to highlight the following important areas in the development of graph applications:

- Graph DBMS (neo4j, etc.).

Systems of this kind are focused on performing transactional operations on large (distributed disk) graphs. Although the API of such a system can be highly developed, the execution speed of graph algorithms as far as one can judge is not the first priority. The system may not even try to load the entire graph into memory. For graph modification and traversal, declarative languages (SPARQL, Cypher, Gremlin) are supported. Great importance is attached to ensuring continuity with traditional SQL systems. - Graph extensions of big data processing systems working in the map / reduce paradigm (GraphX in Spark, Pegasus and Giraph for Hadoop) and independent cluster systems ( MS Trinity / MS Graph Engine , GraphLab). The first to perform operations on the graph implement the Google Pregel model (but not only it) and can be configured for use including massive parallel computing nodes. Both of them can be used, among other things, as a basis for corporate software projects.

Although the API of such systems can be quite developed (among other things, GraphX supports SPARQL and Cypher), the main focus when working with them is on solving infrastructure problems. GraphX is characterized by data immutability and a bias in the pipelining of all operations. MS Trinity currently does not include high-level methods and provides only a set of primitives for working with nodes and edges. Systems running on top of Hadoop are, in principle, of little use for solving arbitrary graph problems.

- Actually universal tool libraries that implement more or less wide sets of methods (BGL / PBGL, LEMON etc.), including massively parallel ones (nvGraph, Gunrock).

Based on them, application systems can be created that adapt graph algorithms to specific subject areas. - Systems and libraries specializing in certain complex tasks of universal importance (METIS, paraMETIS, Zoltran: graph partitioning, GraphViz, Gephi: visualization, GraphBLAS: algebraic algorithms for working with graphs, etc.).

Many independent graph applications can be conditionally assigned to this category, a detailed analysis of which would require too much time. The latter contains applications of all conceivable varieties: academic and commercial, single-user and multi-user, recently appeared and existing for more than a decade, etc.

An obscure but significant part of graph applications is focused on the tasks of Network Analysis and, already, Social Network Analysis (Community Detection). Oddly enough, Link Analysis systems (used, as a rule, by various "crime fighters"), which have some similarities with the system we are developing, are much less common. In all cases, without a special check, it is difficult to determine the nature of the data models used by various systems and the associated performance limitations, supported volumes, sets of operations, etc.

Notes

- BGL is not a pure header library, but at the moment the only functionality requiring linking is (rather optional) work with GraphViz format DOT files. Therefore, in the vast majority of cases, there is no need for linking and automatic linking with the proper version of libbost-graph to include BGL headers in the Boost configuration is not provided. Thus, for consistency with the libboost-regex library used by non-header BGL functions, it is convenient to simply plug in the boost \ regex.hpp header from the project code, even if the latter does not use regular expressions.

- Additional chaos is introduced by the presence of entities whose apparent equivalence encourages the hunt for (possibly absent) black cats in dark rooms .

- Before proceeding to its description (using a specific example, where it manifested itself especially strongly and unpleasantly), we note that the author is among the relatively few lucky people working with a loaded project in a powerful Windows operating system and the God-saved line of MSVC compilers. It is possible that the troubles described below are artifacts of this line of compilers: a variety of particular circumstances make it difficult to conduct a comparative experiment with gcc / clang in the * nix environment. If so, you can only congratulate users of other compilers.

- To soften which, in some cases, the recently appeared constexpr if will probably help.

- In our case, this led to special attention to the state-saving function, which allows debugging with convenience, first bringing the system to the desired initial state in an optimized assembly.

- In my practice, for various reasons, there was a need to convert runtime parameters into template arguments, and quite often I had to resort to, very accurately, very elaborate methods (inspired by the now outdated implementations of boost typeof and boost lambda for C ++ 98 that directly hit treat the programming technique in C ++ as a solution to the rebus), among which the star shines the selection of the argument by dividing in half , but, in general, the main problems with such operations were always associated with the inability to export selected type outside the scope, which gave rise to exotic patterns.

- The twenty-fold speed loss indicated above (in absolute figures - about 80 seconds versus 4 on a test directional graph with 50 million vertices and 200 million edges represented as an adjacency list) was obtained on an unprepared (randomly broken) graph when compared with the local version delta walks on an eight-core machine. , . , 6-8 — , .

- , . ( , - , . , , , , , , , ).

- , , – , , «» (-- ..) . ( , ), , «» , — ( ). , , , - . , , : «» ( ) , «» ( ), , . . , - , « », . , « » ? , , , : – , , , , .

- . , , .

- «LIBNAME C++ graph» , stackoverflow. , BGL 500 [boost-graph].