Can a machine come up with something new, or is it limited by what it already knows? So far, no one knows the answer to this question. But artificial intelligence already does an excellent job of analyzing large, non-standard data.

Dodo Pizza once decided to run an experiment: to systematize and formally describe taste, something the whole world considers chaotic and subjective. Artificial intelligence helped find the craziest ingredient combinations, which, despite being unusual, turned out to be tasty for most people.

My colleague and I joined this unusual project as neural network specialists from MIPT and Skoltech. We developed and trained a neural network that generates cooking recipes. Along the way, we analyzed more than 300,000 recipes, as well as the results of scientific research on the molecular compatibility of ingredients. Based on this, the AI learned to find non-obvious connections between ingredients: how they combine with each other and how the presence of each one affects the compatibility of all the others.

How we got into the Dodo AI-pizza project

As usually happens, it all started suddenly. We had a short break before our summer internship: we had just finished a Deep Learning course, defended our project, and were trying to settle into a calmer rhythm of study and life. We didn't manage to. We happened to come across reposts of a BBDO request looking for people who could write a neural network to generate new recipes, more specifically, new pizza recipes for Dodo. Without hesitation, we decided to give it a try.

When the project was just starting, we did not fully know whether it would go anywhere or lead to a practical implementation; we were simply interested in the task. Plenty of Red Bull and fast internet kept us moving forward. Looking back, we can see that some things could have been done differently, but that is normal.

In any case, after a few weeks we had a working neural network model, and the stage of putting it into production began. We were lucky that the project could not be called industrial or technical in the strict sense of those words; "experiment" describes it better.

Using our model, we generated various pizza recipes and handed them over to Dodo's excellent chefs for product testing. The pizza tasting at the Dodo R&D Lab was a turning point in how the value of our work was recognized. It was very exciting to see the product go on sale. After all, developments and solutions are often rather ephemeral and intangible, but here the result could not only be touched but also tasted.

Collecting the dataset, and the chili problem

Any model needs data. So, to train our AI, we collected 300,000 recipes from every available source. It was important to collect not only pizza recipes but to make the selection as diverse as possible, while staying within reason (for example, we ignored cocktail recipes, since their semantics would hardly affect the semantics of pizza recipes).

After collecting the data, we had more than 100,000 unique ingredients, and bringing them into a single form was a big problem. Where did so many items come from? It is simple: chili peppers, for example, appear in recipes as chili, chilli, chiles, and chillis. To you it is obvious that this is one and the same pepper, but a neural network treats different spellings as separate entities. We fixed this: after we cleaned and normalized the data, only 1,000 ingredients remained.
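The post does not describe the exact cleaning rules. Below is a minimal sketch of this kind of normalization, assuming lowercase canonical tokens joined with underscores (the form used in the recipe lists at the end of this article) and a hand-built alias table; `ALIASES` and `normalize_ingredient` are hypothetical names for illustration.

```python
import re

# Hypothetical alias table: known spelling variants -> canonical token.
ALIASES = {
    "chilli": "chili",
    "chile": "chili",
}


def normalize_ingredient(raw: str) -> str:
    """Map a raw ingredient string to a canonical token (illustrative rules only)."""
    name = raw.strip().lower()
    name = re.sub(r"[^a-z]+", " ", name)   # replace digits/punctuation with spaces
    name = "_".join(name.split())          # "black olives" -> "black_olives"
    # Naive singularization: "tomatoes" -> "tomato", "chillis" -> "chilli"
    if name.endswith("oes"):
        name = name[:-2]
    elif name.endswith("s") and not name.endswith(("ss", "us")):
        name = name[:-1]
    return ALIASES.get(name, name)
```

For example, `chili`, `chilli`, `chiles`, and `chillis` all map to `chili`. Real-world cleaning would also need to strip quantities, units, and descriptors; these rules only show the idea.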

Analyzing the world's tastes

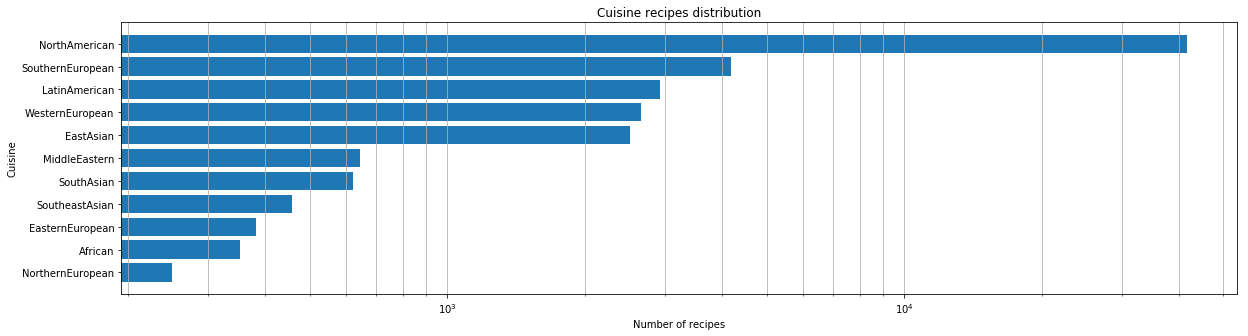

Once the dataset was ready, we carried out an initial analysis. First, we looked at which of the world's cuisines are represented in the dataset and in what proportions.
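Each cuisine's share of the dataset is a simple count. A sketch, assuming every recipe carries exactly one cuisine label (the label values in the usage example are illustrative):

```python
from collections import Counter


def cuisine_shares(cuisines):
    """Return (cuisine, share_of_recipes) pairs, most frequent cuisine first."""
    counts = Counter(cuisines)
    total = sum(counts.values())
    return [(cuisine, n / total) for cuisine, n in counts.most_common()]
```

For example, `cuisine_shares(["italian", "italian", "mexican", "indian"])` gives Italian a share of 0.5 and the other two 0.25 each.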

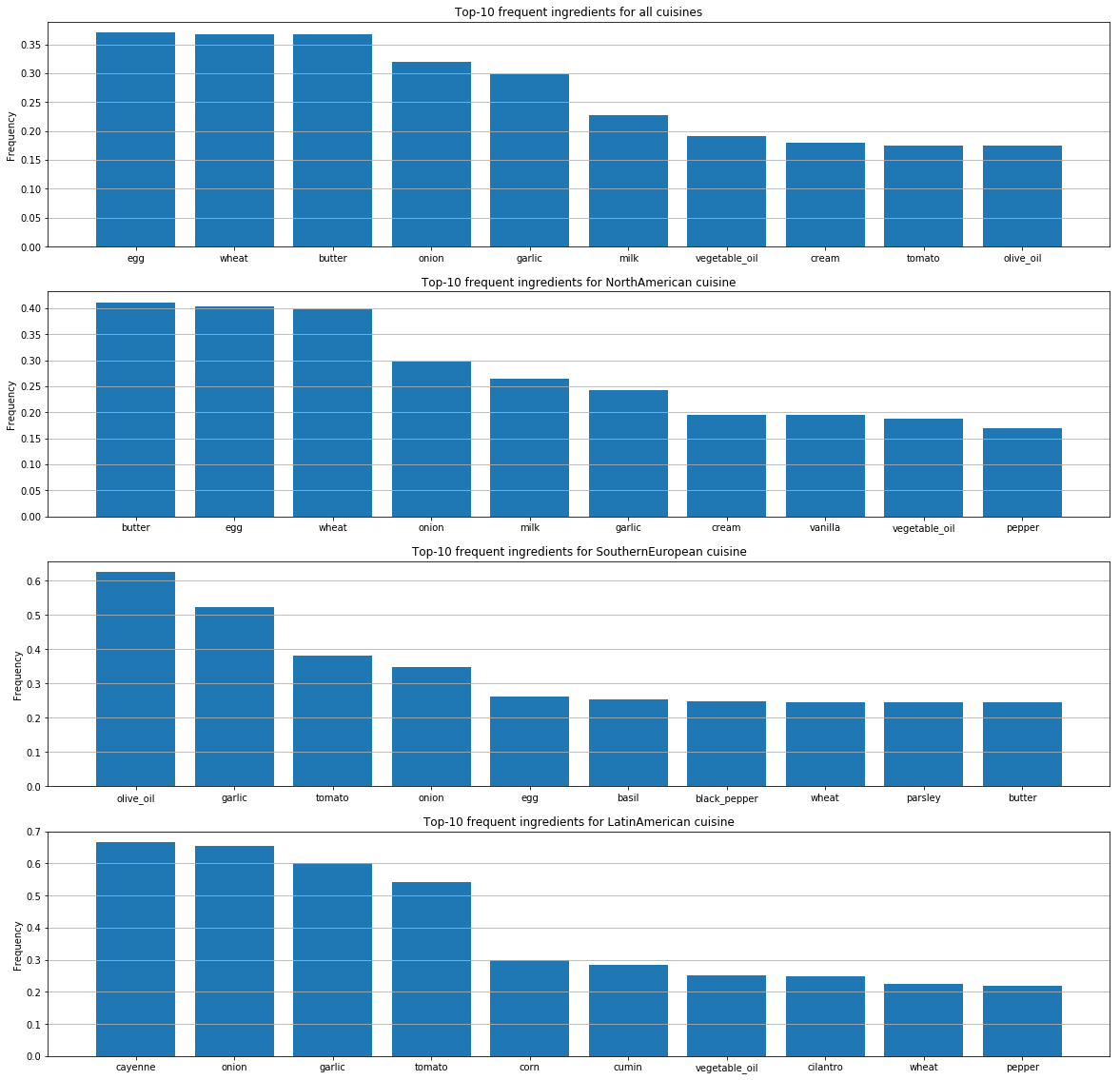

For each cuisine, we identified the most popular ingredients.

These graphs show noticeable differences in taste preferences between countries. They also reveal how people in different countries combine ingredients with each other.
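The per-cuisine rankings behind such graphs can be sketched as follows, assuming each recipe is a `(cuisine, ingredients)` pair. Counting an ingredient at most once per recipe is our choice for the sketch, not necessarily the authors'.

```python
from collections import Counter, defaultdict


def top_ingredients(recipes, k=3):
    """recipes: iterable of (cuisine, ingredient_list) pairs.

    Returns cuisine -> list of its k most frequent ingredients.
    """
    per_cuisine = defaultdict(Counter)
    for cuisine, ingredients in recipes:
        # set(): count each ingredient once per recipe, even if listed twice
        per_cuisine[cuisine].update(set(ingredients))
    return {c: [ing for ing, _ in cnt.most_common(k)] for c, cnt in per_cuisine.items()}
```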

Two pizza findings

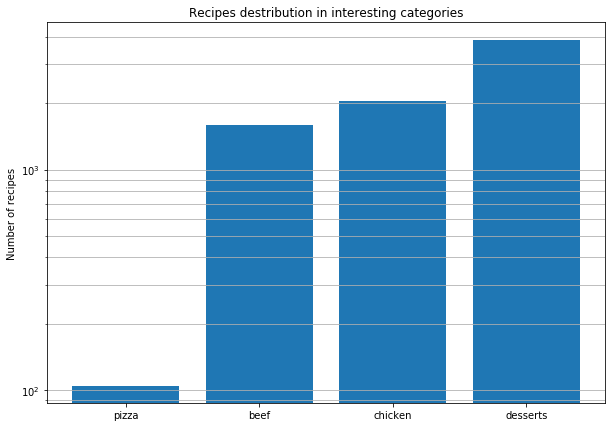

After this global analysis, we decided to study pizza recipes from around the world in more detail to find patterns in their composition. Here are our conclusions:

- There are an order of magnitude fewer pizza recipes than recipes for meat/chicken dishes and desserts.

- The set of ingredients found in pizza recipes is limited: ingredient variety is much lower than in other dishes.
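Both observations come down to simple counts per dish class. A minimal sketch, assuming each recipe carries a dish-class label (the labels in the test data are hypothetical):

```python
from collections import defaultdict


def class_stats(recipes):
    """recipes: iterable of (dish_class, ingredient_list) pairs.

    Returns dish_class -> (number of recipes, number of distinct ingredients).
    """
    n_recipes = defaultdict(int)
    vocab = defaultdict(set)
    for dish_class, ingredients in recipes:
        n_recipes[dish_class] += 1
        vocab[dish_class].update(ingredients)
    return {c: (n_recipes[c], len(vocab[c])) for c in n_recipes}
```

Distinct-ingredient counts grow with the number of recipes, so when classes differ in size by an order of magnitude, a fair variety comparison would subsample them to equal sizes first.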

How we tested the model

Finding real flavor combinations is not the same as revealing molecular compatibility. All cheeses have a similar molecular composition, but this does not mean that successful combinations are found only among the nearest ingredients.

However, when we translate ingredients into mathematics, we should still see a similarity structure that matches the molecular level: similar objects (cheeses, for example) must remain similar no matter how we describe them. This lets us check that we have described these objects correctly.
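This check can be expressed numerically: members of a group of similar ingredients should, on average, be closer to each other than to everything else. A minimal sketch with cosine similarity; the two-dimensional vectors in the test are invented for illustration, and `group_is_coherent` is a hypothetical helper (it needs at least two group members and at least one outside item).

```python
import numpy as np


def _unit_rows(m):
    """Normalize each row to unit length so dot products become cosines."""
    m = np.asarray(m, dtype=float)
    return m / np.linalg.norm(m, axis=1, keepdims=True)


def group_is_coherent(vectors, group):
    """True if members of `group` are, on average, more similar to each other
    (cosine, excluding self-pairs) than to the ingredients outside the group."""
    inside = _unit_rows([vectors[w] for w in group])
    outside = _unit_rows([v for w, v in vectors.items() if w not in group])
    sims_in = inside @ inside.T
    n = len(inside)
    within = (sims_in.sum() - np.trace(sims_in)) / (n * (n - 1))
    across = (inside @ outside.T).mean()
    return bool(within > across)
```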

Converting recipes into math

To represent recipes in a form a neural network can work with, we used Skip-Gram with Negative Sampling (SGNS), a word2vec algorithm based on how words co-occur in context. We decided against pre-trained word2vec models, because recipes clearly differ in semantic structure from ordinary text, and using such models could lose important information.
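A compact NumPy sketch of SGNS trained on ingredient lists instead of sentences. The assumption (not stated in the post) is that every other ingredient of the same recipe counts as context, since ingredient lists are unordered; the hyperparameters are illustrative. Libraries such as gensim provide production implementations of the same algorithm.

```python
import numpy as np


def train_sgns(recipes, dim=16, negatives=5, lr=0.05, epochs=100, seed=0):
    """Skip-Gram with Negative Sampling over ingredient lists.

    Assumption: all other ingredients of a recipe are its context (no window).
    Returns the sorted vocabulary and the learned target ("input") vectors.
    """
    rng = np.random.default_rng(seed)
    vocab = sorted({ing for recipe in recipes for ing in recipe})
    index = {w: i for i, w in enumerate(vocab)}
    n = len(vocab)
    w_in = rng.normal(scale=0.1, size=(n, dim))  # target embeddings
    w_out = np.zeros((n, dim))                   # context embeddings

    # Noise distribution for negatives: unigram counts ** 0.75, as in word2vec
    counts = np.zeros(n)
    for recipe in recipes:
        for ing in recipe:
            counts[index[ing]] += 1
    noise = counts ** 0.75
    noise /= noise.sum()

    # Every (target, context) pair of ingredients that share a recipe
    pairs = np.array([(index[a], index[b])
                      for recipe in recipes for a in recipe for b in recipe if a != b])

    for _ in range(epochs):
        rng.shuffle(pairs)
        for t, c in pairs:
            negs = rng.choice(n, size=negatives, p=noise)
            ctx = np.concatenate(([c], negs))
            labels = np.zeros(negatives + 1)
            labels[0] = 1.0                        # true context = 1, negatives = 0
            v = w_in[t].copy()
            scores = 1.0 / (1.0 + np.exp(-(w_out[ctx] @ v)))
            g = scores - labels                    # gradient of logistic loss w.r.t. scores
            w_in[t] -= lr * (g @ w_out[ctx])
            np.subtract.at(w_out, ctx, lr * np.outer(g, v))  # handles repeated ids
    return vocab, w_in
```

Ingredients that appear with the same partners (for example, two cheeses used on the same kinds of pizza) end up with similar vectors even if they never occur in one recipe together.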

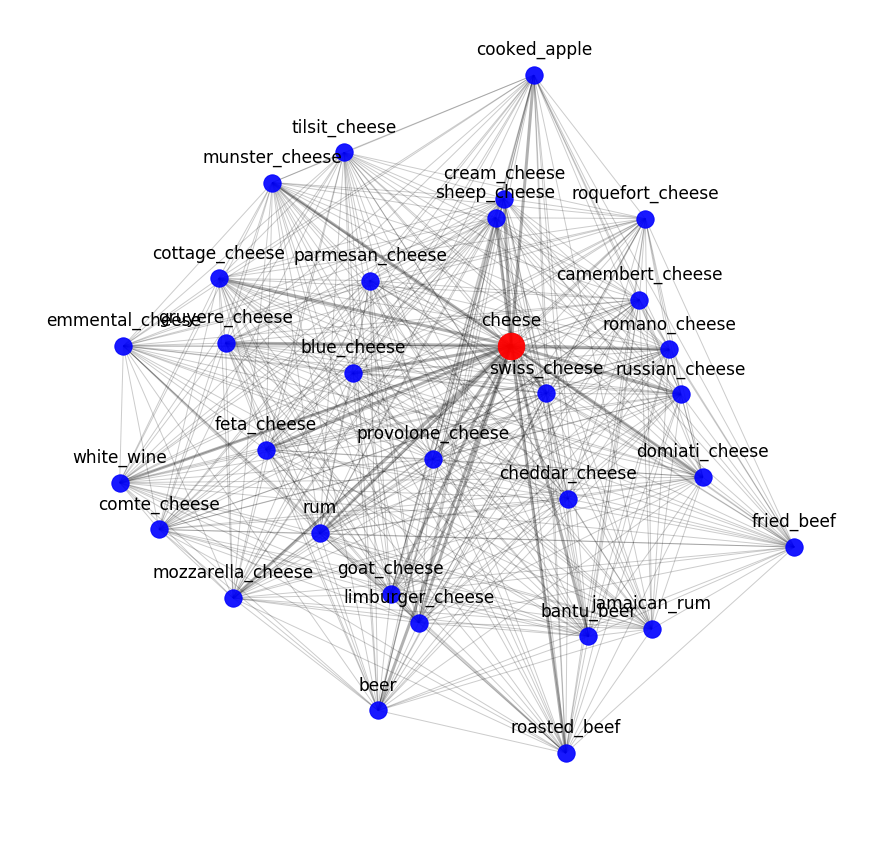

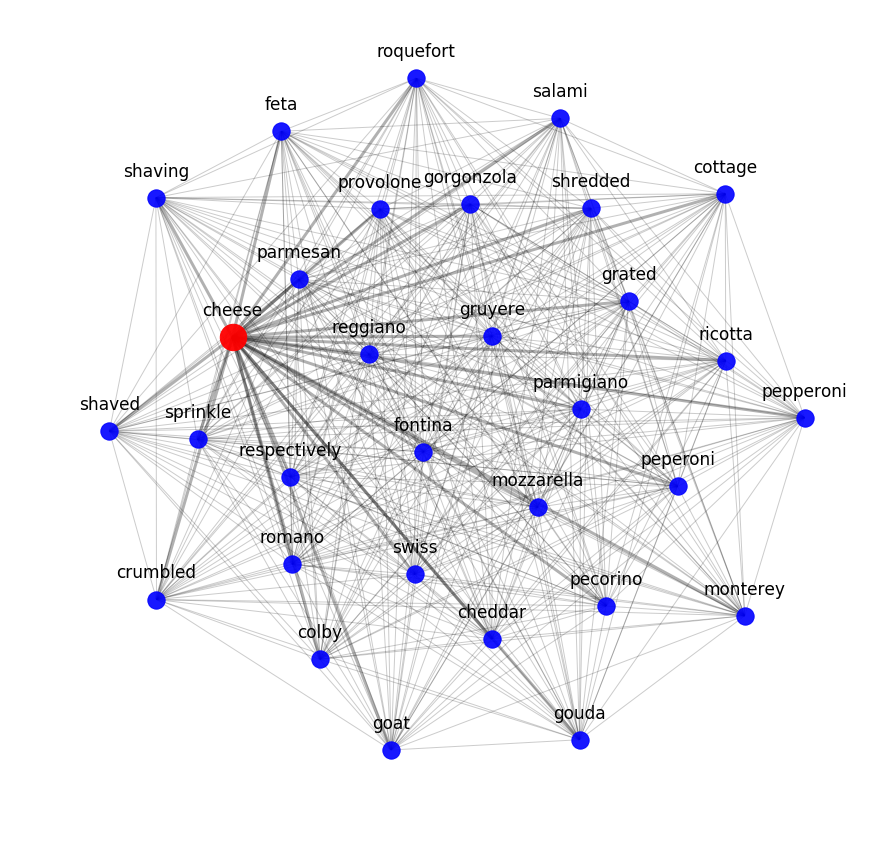

One way to evaluate the word2vec result is to look at the nearest semantic neighbors. For example, here is what our model knows about cheese:

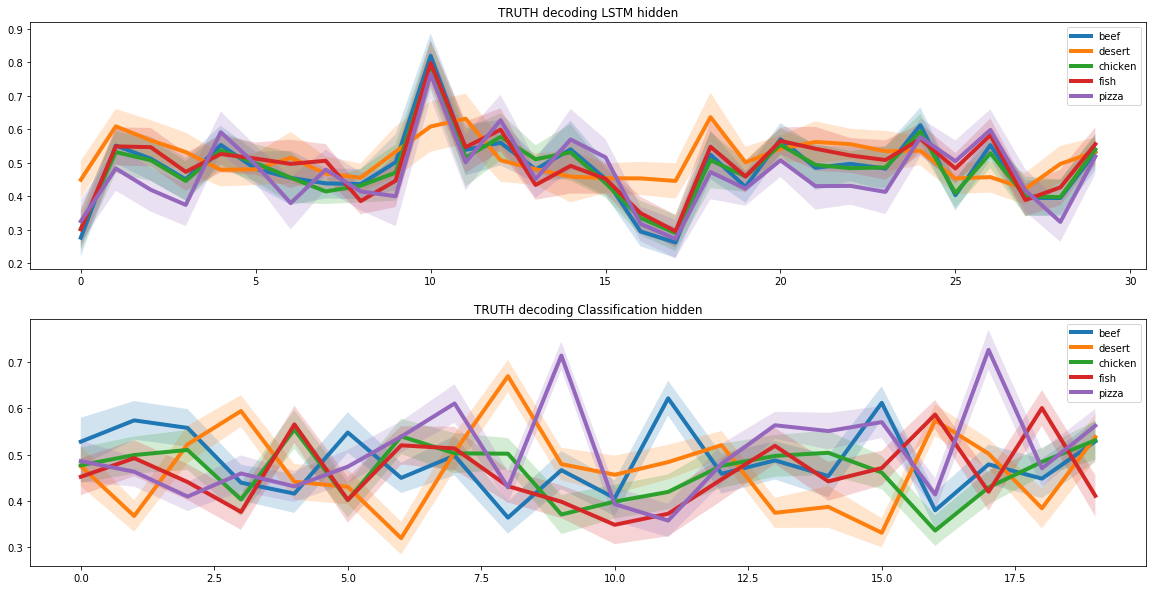

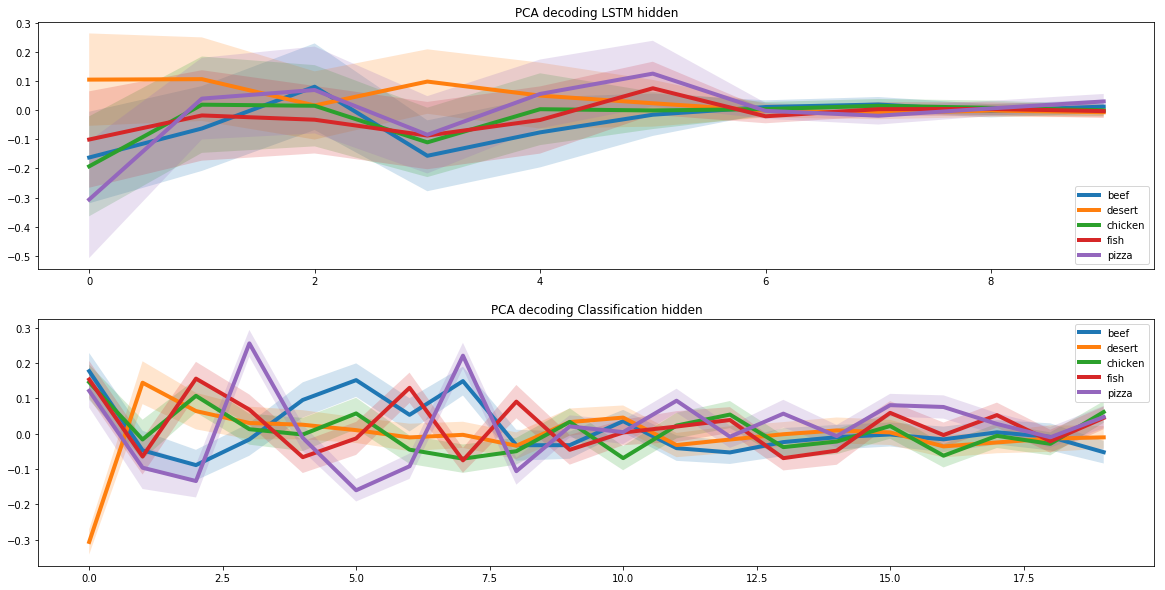
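Nearest neighbors like these are usually ranked by cosine similarity. A sketch of that lookup over a plain dict of vectors; the toy values in the test are invented, not taken from the trained model. gensim's `KeyedVectors.most_similar` performs the same kind of cosine ranking.

```python
import numpy as np


def most_similar(vectors, word, topn=3):
    """Return the topn (name, cosine similarity) pairs closest to `word`."""
    names = [w for w in vectors if w != word]
    m = np.array([vectors[w] for w in names], dtype=float)
    q = np.asarray(vectors[word], dtype=float)
    sims = m @ q / (np.linalg.norm(m, axis=1) * np.linalg.norm(q))
    order = np.argsort(-sims)[:topn]
    return [(names[i], float(sims[i])) for i in order]
```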

To test how well the semantic models capture the interactions between ingredients in recipes, we applied topic modeling to all recipes in the sample. That is, we tried to split the recipe dataset into clusters based on mathematically identified patterns.
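The post does not name the topic model it used. As a stand-in, this sketch applies non-negative matrix factorization (NMF, a common topic-modeling technique) with Lee-Seung multiplicative updates to a binary recipe-by-ingredient matrix; each recipe is then assigned to its highest-weight topic. `nmf_topics` is a hypothetical helper.

```python
import numpy as np


def nmf_topics(x, k, iters=500, seed=0, eps=1e-9):
    """Factor a non-negative recipes x ingredients matrix as X ~ W @ H.

    W holds recipe-topic weights, H holds topic-ingredient weights.
    """
    rng = np.random.default_rng(seed)
    n, m = x.shape
    w = rng.random((n, k)) + eps
    h = rng.random((k, m)) + eps
    for _ in range(iters):
        # Multiplicative updates keep both factors non-negative
        h *= (w.T @ x) / (w.T @ w @ h + eps)
        w *= (x @ h.T) / (w @ h @ h.T + eps)
    return w, h
```

Usage: build `x` with one row per recipe and one column per ingredient, then `topics = w.argmax(axis=1)` gives each recipe's topic.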

Since we knew in advance which real classes (types of dishes) a subset of recipes belonged to, we computed, for each real class, how its recipes were distributed across the generated topics.
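This class-versus-topic distribution is a normalized cross-tabulation. A sketch, assuming parallel lists of known class labels and assigned topic ids (the values in the test are illustrative):

```python
from collections import Counter, defaultdict


def class_topic_distribution(true_classes, topics):
    """For each known class, the share of its recipes assigned to each topic."""
    counts = defaultdict(Counter)
    for cls, topic in zip(true_classes, topics):
        counts[cls][topic] += 1
    return {
        cls: {t: n / sum(c.values()) for t, n in c.items()}
        for cls, c in counts.items()
    }
```

A class concentrated in one or two topics that few other classes share, as desserts were, is a sign that the topics separate that class well.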

The clearest case was desserts, which made up topics 0 and 1 of the topic model. These topics contain almost no other classes, which suggests that desserts separate easily from other types of dishes. In addition, each topic has one class that describes it best. This suggests that our models did a good job of mathematically describing the non-obvious meaning of "taste".

Recipe Generation

To create new recipes, we used two recurrent neural networks. We assumed that the overall space of recipes contains a subspace corresponding to pizza recipes. For the neural network to learn to come up with new pizza recipes, we had to find this subspace.

This task is similar to autoencoding images, where an image is represented as a low-dimensional vector. Even so, such vectors can carry a lot of specific information about the image.

For example, for photos of faces, such a vector can store a person's hair color in a separate component. We chose this approach precisely because of these properties of the hidden (latent) subspace.

To identify the pizza subspace, we ran recipes through two recurrent neural networks. The first took a pizza recipe as input and found its representation as a hidden vector. The second took this hidden vector from the first network and had to produce a recipe from it. The recipe at the input of the first network and the recipe at the output of the second were supposed to match.

In this encoder-decoder setup, the two networks learned to correctly translate a recipe into a hidden (latent) vector and back. This allowed us to discover the hidden subspace that covers the whole variety of pizza recipes.
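A minimal NumPy sketch of the data flow in such a recurrent encoder-decoder. Assumptions not taken from the post: plain tanh RNN cells (the post only says "recurrent"), ingredient ids as tokens with id 0 reserved as the start/end marker, and random untrained weights. Training, which would minimize `reconstruction_loss` with backpropagation through time in an autodiff framework, is omitted; the class and method names are hypothetical.

```python
import numpy as np


class RecipeAutoencoder:
    """Toy RNN encoder-decoder over ingredient-id sequences (forward pass only)."""

    def __init__(self, vocab_size, emb_dim=8, latent_dim=4, seed=0):
        rng = np.random.default_rng(seed)
        s = 0.1
        self.emb = rng.normal(scale=s, size=(vocab_size, emb_dim))
        # Encoder RNN: h_t = tanh(W_x x_t + W_h h_{t-1} + b)
        self.enc_wx = rng.normal(scale=s, size=(latent_dim, emb_dim))
        self.enc_wh = rng.normal(scale=s, size=(latent_dim, latent_dim))
        self.enc_b = np.zeros(latent_dim)
        # Decoder RNN: starts from the latent vector, predicts the next ingredient
        self.dec_wx = rng.normal(scale=s, size=(latent_dim, emb_dim))
        self.dec_wh = rng.normal(scale=s, size=(latent_dim, latent_dim))
        self.dec_b = np.zeros(latent_dim)
        self.out_w = rng.normal(scale=s, size=(vocab_size, latent_dim))
        self.out_b = np.zeros(vocab_size)

    def encode(self, ids):
        """Read the recipe; the final hidden state is the latent vector z."""
        h = np.zeros(self.enc_b.shape)
        for i in ids:
            h = np.tanh(self.enc_wx @ self.emb[i] + self.enc_wh @ h + self.enc_b)
        return h

    def _step(self, token, h):
        """One decoder step: new hidden state and softmax over ingredients."""
        h = np.tanh(self.dec_wx @ self.emb[token] + self.dec_wh @ h + self.dec_b)
        logits = self.out_w @ h + self.out_b
        probs = np.exp(logits - logits.max())
        return h, probs / probs.sum()

    def reconstruction_loss(self, ids, bos=0):
        """Mean cross-entropy of reproducing `ids` from z (teacher forcing)."""
        h = self.encode(ids)
        prev, loss = bos, 0.0
        for target in list(ids) + [bos]:  # id 0 also marks the end of the recipe
            h, probs = self._step(prev, h)
            loss -= np.log(probs[target])
            prev = target
        return loss / (len(ids) + 1)

    def decode(self, z, max_len=10, eos=0):
        """Greedy decoding: turn a latent vector back into ingredient ids."""
        h, prev, out = z, eos, []
        for _ in range(max_len):
            h, probs = self._step(prev, h)
            prev = int(probs.argmax())
            if prev == eos:
                break
            out.append(prev)
        return out
```

Once such a model is trained, `decode` can be applied to latent vectors other than those of known recipes, which is what makes the latent subspace useful for proposing new ones.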

Molecular compatibility

To generate pizza recipes, we also had to add a molecular compatibility criterion to the model. For this, we used the results of a joint study by scientists from Cambridge and several US universities.

The study found that ingredients combine best when they share the largest number of flavor molecules. Therefore, when generating a recipe, the neural network favored ingredients with similar molecular profiles.
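The pairing criterion can be illustrated by scoring a recipe as the mean number of flavor compounds shared by its ingredient pairs, then greedily picking the candidate that raises this score the most. This is a minimal sketch: the compound sets in the test are made up, the helper names are hypothetical, and the post does not say exactly how the criterion was combined with the network.

```python
from itertools import combinations


def shared_compounds(a, b, compounds):
    """Number of flavor compounds two ingredients have in common."""
    return len(compounds[a] & compounds[b])


def recipe_score(recipe, compounds):
    """Mean number of shared compounds over all ingredient pairs in the recipe."""
    pairs = list(combinations(recipe, 2))
    if not pairs:
        return 0.0
    return sum(shared_compounds(a, b, compounds) for a, b in pairs) / len(pairs)


def best_addition(recipe, candidates, compounds):
    """Pick the candidate ingredient that gives the highest recipe score."""
    return max(candidates, key=lambda c: recipe_score(recipe + [c], compounds))
```

With the toy compound sets in the test, strawberry beats mozzarella as an addition to tomato and basil, which shows how a pure pairing criterion can lead to unexpected combinations.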

Result and AI Pizza

As a result, our neural network learned to create pizza recipes. By adjusting the coefficients, the AI can produce both classic recipes (such as Margherita or Pepperoni) and crazy ones. One such crazy recipe became the basis for the world's first molecularly perfect pizza, with ten ingredients: tomato sauce, melon, pear, chicken, cherry tomatoes, tuna, mint, broccoli, Mozzarella cheese, and granola. A limited edition was even sold at one of the Dodo pizzerias. Here are a few more interesting recipes that you can try cooking at home:

- spinach, cheese, tomato, black_olive, olive, garlic, pepper, basil, citrus, melon, sprout, buttermilk, lemon, bass, nut, rutabaga;

- onion, tomato, olive, black_pepper, bread, dough;

- chicken, onion, black_olive, cheese, sauce, tomato, olive_oil, mozzarella_cheese;

- tomato, butter, cream_cheese, pepper, olive_oil, cheese, black_pepper, mozzarella_cheese.

All of this would mean little without a link to the most interesting part: