The parrot has landed: announcing the book "Generative Deep Learning"

Hello, colleagues!

We are pleased to announce that our publishing plans for early next year include an excellent new book, Generative Deep Learning, by David Foster.

The author, who compares his work to nothing less than the Apollo Moon landing, has published a detailed overview of the book on Medium; we offer it here as a teaser that is close to the real thing.

Enjoy the read, and stay tuned!

My 459-day journey from blog to book and back

20:17 UTC, July 20, 2019

Fifty years earlier, to the minute, Neil Armstrong and Buzz Aldrin landed the lunar module Eagle on the Moon. It was a supreme display of engineering, courage, and sheer determination.

Fast forward 50 years, and the computing power of the Apollo Guidance Computer (AGC), which carried these men to the Moon, fits in your pocket many times over. In fact, an iPhone 6 has enough computing power to guide 120 million Apollo 11 spacecraft to the Moon simultaneously.

This in no way detracts from the greatness of the AGC. Given Moore’s law, you could pick any computing device and safely predict that, 50 years later, there will be a machine 2²⁵ times faster.
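The 2²⁵ figure is simple arithmetic under a common rule-of-thumb reading of Moore's law. The doubling period used below (two years) is our assumption; it is often quoted anywhere from 18 to 24 months, which would change the exponent:

```latex
\text{doublings in 50 years} = \frac{50\ \text{years}}{2\ \text{years per doubling}} = 25,
\qquad
\text{speed-up} = 2^{25} = 33\,554\,432 \approx 3.4 \times 10^{7}.
```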

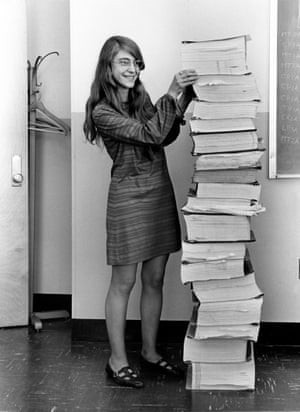

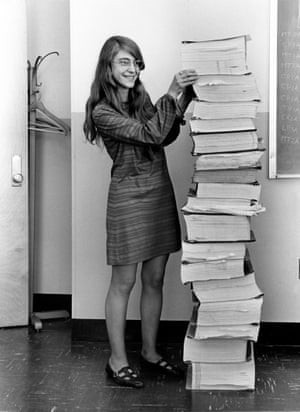

Margaret Hamilton, who led the team of programmers that wrote the software for the AGC, saw the hardware limitations of the time not as an obstacle but as a challenge. She used every resource at her disposal to accomplish the unthinkable.

Margaret Hamilton and the code for the AGC (source: Science History Images)

And now, I want to tell you about...

The book "Generative Deep Learning"

459 days ago, I received an email from O'Reilly Media asking whether I would be interested in writing a book. I liked the idea, so I agreed and set out to write the most up-to-date guide to generative modeling: a practical book that shows you how to build state-of-the-art deep learning models that can draw, write, compose music, and play games.

Most importantly, I wanted readers to understand generative deep learning in depth and to build models that do truly amazing things, without requiring huge, expensive computing resources that would still be slow to train on.

I firmly believe that the way to learn any technical subject is to start by solving small problems, but to understand them in such detail that you can explain the rationale behind every single line of code.

If you start with huge datasets and with models that each take a whole day rather than an hour to train, you won't learn anything more; you will just spend 24 times longer waiting for training to finish.

The most important lesson of the Apollo Moon landing is that you can achieve truly amazing results with very modest computing resources. You will come away from my book with exactly the same impression of generative modeling.

What’s with the parrot?

One of the perks of writing for O'Reilly is that they choose an animal to illustrate the cover of your book. I got a blue-headed, red-tailed parrot, which, in a fit of enthusiasm, I christened Neil Wingstrong.

Neil Wingstrong from the Parrot family.

So, the parrot has landed. What can you expect from the book?

What is this book about?

This book is a hands-on guide to generative modeling.

It covers all the fundamentals you need to build the simplest generative models, then works up, step by step, to more advanced ones. Every section comes with practical examples, architecture diagrams, and code.

This book is for anyone who wants to better understand what lies behind the current hype around generative modeling. No prior knowledge of deep learning is required; all code examples are in Python.

What does the book cover?

I have tried to cover all the key developments in generative modeling over the past 5 years, which makes for a broad scope.

The book is divided into two parts; a brief overview of each chapter follows.

Part 1: Introduction to Generative Deep Learning

The first four chapters introduce the key techniques you will need to start building generative deep learning models.

1. Generative modeling

We take a broad look at the discipline of generative modeling and the kinds of problems we try to solve with a probabilistic approach. We then work through our first, very simple, probabilistic generative model and analyze why we may need to turn to deep learning methods as the generative task becomes more complex.
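As a rough illustration of what a very simple probabilistic generative model can look like (this sketch is ours, not code from the book), you can fit a categorical distribution to observed data by counting, which is the maximum-likelihood estimate, and then sample new observations from it. The dataset and the function names `fit_categorical` and `sample` are hypothetical:

```python
import random
from collections import Counter


def fit_categorical(observations):
    """Maximum-likelihood fit: each category's probability is its relative frequency."""
    counts = Counter(observations)
    total = len(observations)
    return {category: count / total for category, count in counts.items()}


def sample(model, n, seed=None):
    """Generate n new observations by drawing from the fitted distribution."""
    rng = random.Random(seed)
    categories = list(model)
    weights = [model[c] for c in categories]
    return rng.choices(categories, weights=weights, k=n)


# Hypothetical training data: hair colours observed in a small dataset.
data = ["black", "brown", "brown", "blonde", "black", "brown", "red", "brown"]
model = fit_categorical(data)
print(model)  # {'black': 0.25, 'brown': 0.5, 'blonde': 0.125, 'red': 0.125}
print(sample(model, 5, seed=0))
```

Such a model can only ever generate categories it has already seen, and it ignores any internal structure of an observation (the pixels of an image, say). Limitations of this kind are one reason more complex generative tasks call for deep learning.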

2. Deep learning

This chapter guides you through the deep learning tools and techniques you need to start building more complex generative models. Here you will meet Keras, a framework for building neural networks that can be used to design and train some of the state-of-the-art deep neural network architectures described in the literature.

3. Variational autoencoders

In this chapter, we look at our first generative deep learning model: the variational autoencoder (VAE). This powerful technique lets us generate realistic faces from scratch and modify existing images, for example by adding a smile to a face or changing its hair color.
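One widely used way to "add a smile" with a trained VAE is latent-space arithmetic. We assume an approach along these lines; the book's exact method may differ. Average the latent codes of images that have the attribute, subtract the average of those that don't, and add a scaled copy of that difference to another image's latent code before decoding it. The sketch below uses hypothetical 3-dimensional latent codes instead of a real encoder, and the helper names are ours:

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(components) / n for components in zip(*vectors)]


def attribute_vector(with_attr, without_attr):
    """Latent direction pointing from 'without the attribute' towards 'with the attribute'."""
    mean_with = mean_vector(with_attr)
    mean_without = mean_vector(without_attr)
    return [a - b for a, b in zip(mean_with, mean_without)]


def add_attribute(z, direction, strength=1.0):
    """Move latent code z along the attribute direction; strength scales the effect."""
    return [zi + strength * di for zi, di in zip(z, direction)]


# Hypothetical latent codes; a real VAE would produce these with its encoder,
# usually with far more than 3 dimensions.
smiling = [[1.0, 0.5, 0.0], [1.2, 0.3, 0.1]]
not_smiling = [[0.0, 0.4, 0.0], [0.2, 0.4, 0.1]]

smile = attribute_vector(smiling, not_smiling)
z_new = add_attribute([0.1, 0.2, 0.3], smile, strength=0.5)
# z_new would then be passed through the VAE decoder to produce the edited image.
print(smile, z_new)
```

The `strength` parameter lets you dial the effect up or down; the same recipe works for other attributes, such as hair color, given labelled examples.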

4. Generative adversarial networks (GANs)

This chapter covers one of the most successful kinds of generative model to emerge in recent years: the generative adversarial network. Many of the most advanced generative models are built on this elegant framework for structuring the generative modeling problem. We will look at how it can be fine-tuned and why it keeps pushing the boundaries of what generative modeling can achieve.
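For reference, the GAN framework in its original formulation frames training as a two-player game: a generator $G$ maps random noise $z$ to samples, and a discriminator $D$ outputs the probability that its input is real rather than generated. $D$ is trained to maximize the value below while $G$ is trained to minimize it (the book may use different notation or a variant of this loss):

```latex
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```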

Part 2: Teaching Machines to Draw, Write, Compose Music, and Play

Part 2 presents case studies showing how generative modeling techniques can be applied to specific problems.

5. Drawing

This chapter examines two techniques related to machine drawing. First, we discuss CycleGAN, which, as the name suggests, is an adaptation of the GAN architecture that lets a model learn to convert a photo into a painting in a particular style (or vice versa). We also cover neural style transfer, the technique built into many mobile photo-editing apps, which transfers the style of a painting onto a photo so that the photo looks like just another work by the same artist.

6. Writing

In this chapter, we turn to machine-generated text, a domain that poses different challenges from image generation. Here you will learn about recurrent neural networks (RNNs), an architecture well suited to problems involving sequential data. We also discuss how the encoder-decoder architecture works and build our own question-answer generator.

7. Composing music

This chapter is dedicated to music generation, which is also a sequential data generation task. Unlike text, however, music brings additional difficulties, such as modeling musical timbre and rhythm. We will see that many of the techniques used for text generation also apply here, and we will explore a deep learning architecture called MuseGAN, which applies the ideas from Chapter 4 (on GANs) to music data.

8. Playing games

This chapter shows how generative models can be combined with other areas of machine learning, including reinforcement learning. We discuss one of the most exciting recent papers, 'World Models'. Its authors show how a generative model can serve as the environment in which an agent is trained, effectively allowing the agent to “dream up” possible future scenarios and imagine what would happen if it took certain actions, entirely within its own conceptual model of the environment.

9. The future of generative modeling

Here we summarize the current landscape of generative modeling and look back at some of the techniques presented in the book. We also discuss how today's most advanced architectures, such as GPT-2 and BigGAN, may change the way we think about creativity, and ask whether it will ever be possible to build an artificial system that can produce works indistinguishable from human art, literature, and music.

10. Conclusion

Final thoughts on why generative deep learning may prove to be one of the most important areas of deep learning over the next 5–10 years.

Summary

In a world where it is becoming ever harder to tell reality from fiction, engineers who understand in detail how generative models work, and who are not daunted by technological limitations, are becoming vitally important.

I hope my book helps you take your first steps towards understanding these cutting-edge technologies, and that you find it an interesting and enjoyable read.