Media files, especially images, are what these days are the main component of the size of a typical web page. Over time, the situation only worsens . In order to improve page performance, we try to cache as much data as possible. But is it worth it? In most cases - not worth it. Even considering that we now have all these newfangled technologies at our disposal, in order to achieve high performance of web pages, we still need to adhere to one simple rule. It consists in the fact that you need to request from the server only what is needed, while striving to ensure that as little as possible data is received in response to each request.

We strive to ensure that our projects would cause visitors only positive feelings. At the same time, we would not want to overload network connections and user hard drives. This means that the time has come to give some classic practical tricks, experiment with media caching strategies and learn the tricks of the Cache API that are hidden in the sleeve of service workers.

Good intentions

All that we learned a very long time ago, optimizing web pages for slow modem connections, has become extremely relevant these days, with the development of mobile access to the web. All this does not lose value in the work on modern projects designed for a global audience. Unreliable networks, or networks with large delays, are, in many parts of the planet, still the norm. This should help us not to forget that we cannot confidently rely on assumptions about the level of quality of networks made on the basis of monitoring those places where advanced technologies are introduced. And this applies to the recommended approaches to optimizing productivity: history has confirmed that approaches that help improve productivity today will not lose their value in the future.

Before service workers appeared, we could give browsers some guidance as to how long they should store certain resources in caches, but this was probably the only effect on caching. Documents and resources downloaded to the user's computer were placed in some directory on the hard drive. When the browser prepared a request for a document or resource, it first looked in the cache to find out if it already had what it needed. And if what the browser needed was already in the cache, the browser might not load the network again.

Today we can control network requests and cache much better. But this does not relieve us of responsibility for exactly which resources are part of the web pages.

Request only what you need from the server

As I said, the modern web is full of media. Images and video files have become a leading means of communication. They, if we are talking about commercial sites, can increase sales, but if we talk about the speed of downloading materials and the speed of output pages, then the more of them - the worse. With this in mind, we should strive to ensure that every image (and every video, and the like) before proving a place on the page can prove its need for this place.

A few years ago, my culinary recipe was included in a newspaper article about cooking. I am not subscribed to the print version of that newspaper, so when the article came out, I went to the site to take a look at the article. The creators of the site recently redesigned it. They then decided to load all the articles into a modal window, which was displayed almost on the whole screen and located on top of their main page. This meant that to download the article, you had to download everything that was needed to display the page of the article, plus everything that was needed to form the home page of the resource. And on the home page there was video advertising. And not one. And of course, it started automatically.

When I visited that site, I opened the developer’s tools and found out that the page size exceeds 15 MB. Then the project What Does My Site Cost? . It allows you to find out how much it costs to use the site in the mobile networks of different countries. I decided to check the newspaper website with this project. It turned out that the real cost of viewing this site for an average user from the United States exceeds the cost of one issue of a paper version of the newspaper. In a word - a mess.

Of course, I can criticize the creators of the site for the fact that they render their readers a disservice, but the reality is this: none of us goes to work in order to worsen the user experience of working with our resources. Something similar can happen with any website developer. You can spend long days optimizing page performance, and then management decides that this neatly made page will be displayed on top of another page full of video ads. Imagine how much worse things would be if two very poorly optimized pages were stacked on top of each other?

Images and videos can come in handy for attracting visitors to certain materials in conditions of a high level of rivalry of different materials with each other (on the newspaper’s home page, for example). But if you want the visitor to calmly and intently do one thing (say, read the selected article), the value of media materials can fall from the level of "very important" to the level of "does not hurt." Yes, studies have shown that the images are very good at attracting attention, but after the visitor got to a certain page, they already turn out to be not so important . Pictures only lengthen page loading time and increase access to the site. And when more and more media materials are added to pages, the situation only worsens.

We must do everything in our power in order to reduce the page sizes of our projects. That is - we need to refuse to download everything that does not add value to the pages. For example, if you are writing an article about data leaks, resist the temptation to include an interesting photo in it, in which someone, dressed in a jacket with a hood, is sitting at a computer in a very dark room.

Request from the server the smallest files that suit you

Suppose we pick up the image, which is absolutely necessary in the article. Now we need to ask one very important question: "What is the fastest way to deliver this image to the user?" The answer to this question can be either very simple or very complex. The simple answer is to choose the most suitable graphic format (and carefully optimize what we ultimately send to the user). The difficult answer is to completely recreate the image in a new format (for example, if changing the raster format to vector is the most effective solution).

▍ Offer browsers alternative file formats

When it comes to image formats, we no longer have to find a compromise between page performance and image availability for various browsers. We can prepare the image in different versions, tell the browser about them, and let it decide what to use. Such a decision will be based on the capabilities of the browser.

You can do this by using several

source

elements in the

picture

or

video

tag. Such work begins with the creation of various media resource options. For example, the same image is saved in WebP and JPG formats. It is very likely that the WebP-image will be smaller than the JPG-image (although you probably can’t say this, it’s worth checking such things yourself). Having prepared alternative resource options, the paths to them can be placed in the

picture

element:

<picture> <source srcset="my.webp" type="image/webp"> <img align="center" src="my.jpg" alt="Descriptive text about the picture."> </picture>

Browsers that recognize the

picture

element will check the

source

element before deciding which image to request. If the browser supports the MIME type

"image/webp"

, a request will be made to receive a WebP image. If not (or if the browser does not know about the

picture

elements), a regular JPG image will be requested.

This approach is good in that it allows users to give optimized images of a relatively small size to users and without resorting to JavaScript.

The same thing can be done with video files:

<video controls> <source src="my.webm" type="video/webm"> <source src="my.mp4" type="video/mp4"> <p>Your browser doesn't support native video playback, but you can <a href="my.mp4" download>download</a> this video instead.</p> </video>

Browsers that support the WebM format will load what is in the first

source

element. Browsers that WebM does not support but understand the MP4 format will request a video from the second such element. And browsers that do not support the

video

tag will simply show a line of text telling the user that he can download the corresponding file.

The order in which the

source

elements go is relevant. Browsers choose the first of these elements that they can use. Therefore, if you place an optimized alternative version of the image link after an option that has a higher level of compatibility, this may result in no browser ever loading this optimized version.

Depending on the specifics of your project, this layout-based approach may not suit you, and you may decide that processing things like this on the server is better for you. For example, if a JPG file is requested, but the browser supports the WebP format (as indicated in the

Accept

header), nothing prevents you from responding to this request with a WebP version of the image. In fact, some CDN services, such as Cloudinary , support such capabilities, as they say, out of the box.

▍ Offer browsers images of different sizes

In addition to using various graphic formats for storing images, the developer can provide for the use of images of various sizes, optimized based on the size of the browser window. In the end, it makes no sense to upload an image whose height or width is 3-4 times larger than the browser window visible to the user that displays this image. This is a waste of bandwidth. And here responsive images come in handy.

Consider an example:

<img align="center" src="medium.jpg" srcset="small.jpg 256w, medium.jpg 512w, large.jpg 1024w" sizes="(min-width: 30em) 30em, 100vw" alt="Descriptive text about the picture.">

There is a lot of interesting things going on in this “charged”

img

element. Let's look at some details about it:

- This

img

element offers the browser three options for the size of the jpg file: 256 pixels wide (small.jpg

), 512 pixels wide (medium.jpg

) and 1024 pixels wide (large.jpg

). File name information is in thesrcset

attribute. They are equipped with width descriptors . - The

src

attribute contains the name of the default file. This attribute acts as asrcset

for browsers that do not supportsrcset

. The choice of the default image is likely to depend on the features of the page and on the conditions under which it is usually viewed. I would recommend to indicate here, in most cases, the name of the smallest image, but if the main volume of traffic for such a page falls on old desktop browsers, then it might be worth using an image of medium size. - The

sizes

attribute is a presentation hint that tells the browser how the image will be displayed in various usage scenarios (i.e., the external size of the image ) after applying CSS. This example indicates that the image will occupy the entire width of the viewport (100vw

) until it reaches30 em

in width (min-width: 30em

), after which the image will be30 em

wide. Thesizes

can be very simple, it can be very complex - it all depends on the needs of the project. If you do not specify it, this will lead to the use of its standard value equal to100vw

.

You can even combine this approach with a choice of different image formats and different crop options in one

picture

element.

The main point of this story is that you have a lot of tools at your disposal to organize the quick delivery to users of exactly those media files that they need. I recommend using these tools.

Postpone query execution (if possible)

Once upon a time, Internet Explorer introduced support for a new attribute that allowed developers to depriorize specific

img

elements in order to speed up page output. This is the

lazyload

attribute. This attribute did not become a generally accepted standard, but it was a worthy attempt to organize, without the use of JavaScript, a delay in loading images until they were in the visible area of the page (or close to such an area).

Since then, countless JavaScript implementations of lazy image loading systems have appeared, but Google recently attempted to implement this using a more declarative approach by introducing the loading attribute.

The

loading

attribute supports three values (

auto

,

lazy

and

eager

) that determine how to handle the corresponding resource. For us, the

lazy

value looks most interesting, since it allows you to delay the loading of the resource until it reaches a certain distance from the viewing area.

Add this attribute to what we already have:

<img align="center" src="medium.jpg" srcset="small.jpg 256w, medium.jpg 512w, large.jpg 1024w" sizes="(min-width: 30em) 30em, 100vw" loading="lazy" alt="Descriptive text about the picture.">

Using this attribute contributes to some increase in page performance in Chromium-based browsers. Hopefully, it will go down to web standards and that it will appear in other browsers. But, until this happens, there will be no harm from its use, since browsers that do not understand a certain attribute simply ignore it.

This approach perfectly complements the strategy for prioritizing media downloads, but before we talk about this, I suggest taking a closer look at service workers.

Request management in service workers.

Service workers are a special type of web worker . They use the Fetch API and have the ability to intercept and modify all network requests, as well as respond to requests. They also have access to the Cache API and other asynchronous client data stores, such as IndexedDB . IndexedDB can be used, for example, as a resource store.

When the service worker is installed, you can intercept this event and populate the cache in advance with resources that you may need later. Many use this opportunity to stock up with copies of global resources, such as styles, scripts, logos, and the like. But you can preload images in the cache in order to use them in case of network request failures.

▍ Just in case, keep backup images in the cache

Based on the assumption that certain backup versions of images used in special cases are used in various network scenarios, you can create a named function that will return the corresponding resource:

function respondWithFallbackImage() { return caches.match( "/i/fallbacks/offline.svg" ); }

Then, in the fetch event handler , you can use this function to issue a spare image if requests for regular images are not working:

self.addEventListener( "fetch", event => { const request = event.request; if ( request.headers.get("Accept").includes("image") ) { event.respondWith( return fetch( request, { mode: 'no-cors' } ) .then( response => { return response; }) .catch( respondWithFallbackImage ); ); } });

When the network is available, everything works as expected:

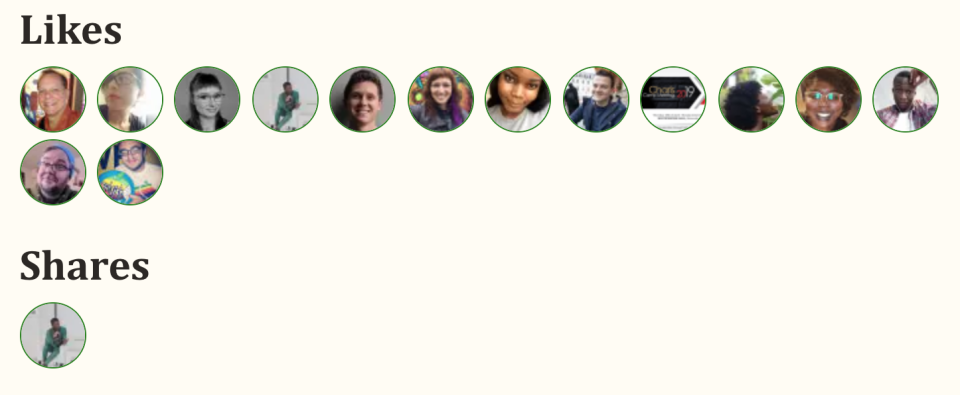

When the network is available - avatars are displayed as expected

But if the network connection is interrupted, the images will be automatically replaced with a spare image. However, the page still looks more or less acceptable.

Universal fallback image displayed instead of avatars if the network is unavailable

At first glance, in terms of performance, this approach may not seem so useful, since, in addition to ordinary images, the browser also has to download a spare one. But, when everything is arranged just like that, some very interesting possibilities open up before the developer.

▍ Respect user desire for traffic savings

Some users, trying to reduce traffic consumption, use the “light” browser mode or enable a setting that may be called “Data Saving” or “Traffic Saving”. When this happens, the browser often sends a Save-Data header in requests.

In the service worker, you can check requests for the presence of this header and adjust the responses to requests accordingly. So, first check the header:

let save_data = false; if ( 'connection' in navigator ) { save_data = navigator.connection.saveData; }

Then, in the

fetch

handler responsible for working with images, you can decide that you do not need to send a corresponding request to the network. Instead, you can respond to it by passing the backup image from the cache to the browser:

self.addEventListener( "fetch", event => { const request = event.request; if ( request.headers.get("Accept").includes("image") ) { event.respondWith( if ( save_data ) { return respondWithFallbackImage(); } // , ); } });

Here you can go even further by setting up the

respondWithFallbackImage()

function so that it

respondWithFallbackImage()

different images depending on what the original request was. In order to do this, you can define several backup images at the global level of the service worker:

const fallback_avatar = "/i/fallbacks/avatar.svg", fallback_image = "/i/fallbacks/image.svg";

Then, when processing the service worker installation event, both of these files must be cached:

return cache.addAll( [ fallback_avatar, fallback_image ]);

Finally, in

respondWithFallbackImage()

you can

respondWithFallbackImage()

suitable image by analyzing the URL used to load the resource. On my site, for example, avatars are downloaded from webmention.io . As a result, the following check is performed in the function:

function respondWithFallbackImage( url ) { const image = avatars.test( /webmention\.io/ ) ? fallback_avatar : fallback_image; return caches.match( image ); }

When this change is made to the code, you will need to update the

fetch

handler so that when you call the

respondWithFallbackImage()

function, the

request.url

argument is passed to it. After this is done, when you interrupt the request to download images, you will see something similar to the following figure.

The avatar and image loaded under normal conditions from the webmention resource, if the request has a Save-Data header, are replaced by two different images

Next - we need to develop some general rules regarding working with media resources. The application of these rules, of course, depends on the situation.

▍ Caching strategy: prioritization of certain media resources

Experience tells me that media resources on the web, especially images, tend to fall into one of three categories. These categories are distinguished by the fact that their images have different values in ensuring the correct operation of the project. At one end of the spectrum are elements that do not add value to the project. At the other end are critical resources that are certainly valuable. For example, these are graphs, without which it will be impossible to understand a certain text. Somewhere in the middle are resources that, so to speak, "will not hurt." They add value to a project, but they cannot be called vital for understanding its contents.

If you consider your graphic resources, taking into account this classification, then you will be able to form some kind of general guide for working with each of these types of resources in different situations. In other words, here is an example of a caching strategy.

The strategy of loading media resources, divided into classes, reflecting their importance for understanding the contents of the project

one

- Resource category: “critical”.

- Fast connection, the presence of the Save-Data header, slow connection: loading the resource.

- Lack of connection: placeholder replacement.

2

- Resource category: “it won't hurt”.

- Quick connection: loading a resource.

- Presence of Save-Data header, slow connection, no connection: replacement with a placeholder.

3

- Resource Category: “Unimportant”

- Fast connection, the presence of the Save-Data header, slow connection, no connection: are completely excluded from the content of the page.

▍About dividing resources into categories

When it comes to a clear separation of the “critical” resources and the “does not hurt” category resources, it is useful to organize their storage in different folders (or do something like that). With this approach, you can add some logic to the service worker to help him figure out what is what. For example, on my personal website, I either store critical images in my possession or take them from the site of my book . Knowing this, I can, for the selection of these images, use regular expressions that analyze the domain to which the request is directed:

const high_priority = [ /aaron\-gustafson\.com/, /adaptivewebdesign\.info/ ];

Having the

high_priority

variable, I can create a function that can find out, for example, whether a certain request to download an image is high priority:

function isHighPriority( url ) { // ? let i = high_priority.length; // while ( i-- ) { // URL ? if ( high_priority[i].test( url ) ) { // , return true; } } // , return false; }

Equipping a project with support for prioritizing requests for downloading media files requires only adding a new condition check to the

fetch

event handler. This is implemented according to the same scheme that was used to work with the

Save-Data

header. How exactly this will be implemented with you is very likely to differ from what I got, but I still want to share with you my version of the solution to this problem:

// // - // - // ? if ( isHighPriority( url ) ) { // // - // - "" // } else { // ? if ( save_data ) { // " " // } else { // // - // - "" } }

This approach can be applied not only to images, but also to resources of other types. It can even be used to control which pages are issued to the browser with the advantage of their cached versions, and which are with the advantage of their versions stored on the server.

Keep your cache clean.

The fact that the developer can control what is cached and stored in the user's system gives him great opportunities. But the developer has a serious responsibility. He must try to implement these opportunities correctly and without harm to the user.

All caching strategies are likely to be different from each other. At least in the details. If we, for example, publish a book on a site, then it may make sense to cache all of its materials. This will help the user to read the book without being connected to the network. The book has a certain fixed size, and, based on the assumption that it does not include gigabytes of images and videos, the user will only get better that he does not have to download each chapter individually.

However, if you cache every article and every photo on a news site, it will very soon lead to an overflow of hard drives of users of such a site. If the site has an unknown number of pages and other resources in advance, then it is very important to have a caching strategy, which provides for setting strict limits on the number of cached resources.

One approach to introducing such restrictions is to create several different blocks related to caching various contents. The faster one or another resource becomes obsolete, the stricter the restrictions on the number of elements that are stored in the cache for such resources. , , , : « , 2 ?».

, , :

const sw_caches = { static: { name: `${version}static` }, images: { name: `${version}images`, limit: 75 }, pages: { name: `${version}pages`, limit: 5 }, other: { name: `${version}other`, limit: 50 } }

. ,

name

, API Cache. ,

version

. -. , , .

static

, ,

limit

, , . , , 5 . , . - ( — ; ).

, . :

function trimCache(cacheName, maxItems) { // caches.open(cacheName) .then( cache => { // cache.keys() .then(keys => { // , ? if (keys.length > maxItems) { // cache.delete(keys[0]) .then( () => { trimCache(cacheName, maxItems) }); } }); }); }

. -, . , (

postMessage()

) - JavaScript:

// - if ( navigator.serviceWorker.controller ) { // window.addEventListener( "load", function(){ // - , navigator.serviceWorker.controller.postMessage( "clean up" ); }); }

- :

addEventListener("message", messageEvent => { if (messageEvent.data == "clean up") { // for ( let key in sw_caches ) { // if ( sw_caches[key].limit !== undefined ) { // trimCache( sw_caches[key].name, sw_caches[key].limit ); } } } });

-

clean up

,

trimCache()

,

limit

.

, . , , , , . ( , , , .) , , . , , API Cache.

: —

, -, . , PWA, . , -. , , - , , .

- , , « », , . , . , . , , . — . , . , , — , -.

«». , JioPhone , , , 10- .

! - -?