In the PHP ecosystem, there are currently two connectors for working with the Tarantool server: the official PECL extension tarantool/tarantool-php, written in C, and tarantool-php/client, written in PHP. I am the author of the latter.

In this article, I would like to share the results of performance testing of both libraries and show how, with minimal changes to the code, you can achieve a 3-5x performance gain (on synthetic tests!).

What will we test?

We will test the above-mentioned synchronous connectors running asynchronously, in parallel, and asynchronously in parallel. :) We also do not want to touch the code of the connectors themselves. At the moment, several extensions are available to achieve this:

- Swoole is a high-performance asynchronous framework for PHP, used by Internet giants such as Alibaba and Baidu. Version 4.1.0 introduced the magic Swoole\Runtime::enableCoroutine() method, which allows "converting PHP synchronous network libraries to asynchronous with one line of code."

- Async was, until recently, a very promising extension for asynchronous programming in PHP. Why until recently? Unfortunately, for a reason unknown to me, the author deleted the repository, and the fate of the project is unclear. We will have to use one of the forks. Like Swoole, this extension makes it easy to enable asynchrony by replacing the standard TCP and TLS streams with their asynchronous versions. This is done through the option "async.tcp = 1".

- Parallel is a fairly new extension from the well-known Joe Watkins, the author of such libraries as phpdbg, apcu, pthreads, pcov and uopz. The extension provides an API for multi-threaded work in PHP and is positioned as a replacement for pthreads. A significant limitation of the library is that it only works with the ZTS (Zend Thread Safety) build of PHP.

How will we test?

We run the Tarantool instance with the write-ahead log disabled (wal_mode = none) and an increased network buffer (readahead = 1 * 1024 * 1024). The first option excludes work with the disk; the second makes it possible to read more requests from the operating system buffer and thereby minimize the number of system calls.

For benchmarks that work with data (insert, delete, read, etc.), a memtx space is (re)created before the benchmark starts. Its primary index values are produced by a sequence, that is, a generator of ordered integer values.

Space DDL looks like this:

    space = box.schema.space.create(config.space_name, {id = config.space_id, temporary = true})
    space:create_index('primary', {type = 'tree', parts = {1, 'unsigned'}, sequence = true})
    space:format({{name = 'id', type = 'unsigned'}, {name = 'name', type = 'string', is_nullable = false}})

If necessary, before launching the benchmark, the space is filled with 10,000 tuples of the form

{id, "tupl_<id>"}

Tuples are accessed by a random key value.

The benchmark itself is a single request to the server that is executed 10,000 times (revolutions); these runs, in turn, are grouped into iterations. Iterations are repeated until all timing deviations between 5 iterations are within the permissible error of 3% *. After that, the average result is taken. There is a 1 second pause between iterations to keep the processor from throttling. The Lua garbage collector is turned off before each iteration and forced to run after it completes. The PHP process starts with only the extensions necessary for the benchmark, with output buffering turned on and the garbage collector turned off.

* The number of revolutions, iterations and the error threshold can be changed in the benchmark settings.
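
The stopping rule described above can be sketched in a few lines of plain PHP. This is only an illustration, not the code of the actual benchmark suite: the function names isStable and benchmark are hypothetical, and "deviation" is assumed here to mean the relative distance of each of the last 5 iteration timings from their mean.

```php
<?php

/**
 * Returns true when each of the last $window timings differs from their
 * mean by no more than $threshold (relative, e.g. 0.03 for 3%).
 * Assumption: this is one possible reading of "deviation within 3%".
 */
function isStable(array $timings, int $window = 5, float $threshold = 0.03): bool
{
    if (count($timings) < $window) {
        return false; // not enough iterations yet
    }
    $recent = array_slice($timings, -$window);
    $mean = array_sum($recent) / $window;
    if ($mean == 0.0) {
        return true; // all timings are zero, nothing deviates
    }
    foreach ($recent as $time) {
        if (abs($time - $mean) / $mean > $threshold) {
            return false;
        }
    }
    return true;
}

/**
 * Executes $operation $revs times per iteration, pausing between iterations,
 * until the timings stabilise. Returns the mean duration (in seconds) of the
 * last $window iterations.
 */
function benchmark(
    callable $operation,
    int $revs = 10000,
    int $window = 5,
    float $threshold = 0.03,
    int $pauseSeconds = 1
): float {
    $timings = [];
    do {
        $start = hrtime(true); // monotonic clock, nanoseconds
        for ($i = 0; $i < $revs; ++$i) {
            $operation();
        }
        $timings[] = (hrtime(true) - $start) / 1e9;
        sleep($pauseSeconds); // let the CPU cool down between iterations
    } while (!isStable($timings, $window, $threshold));

    return array_sum(array_slice($timings, -$window)) / $window;
}
```

A real harness would probably also cap the number of iterations so that a noisy environment cannot keep the loop running forever.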

Test environment

The results published below were obtained on a MacBook Pro (2015) running Fedora 30 (kernel version 5.3.8-200.fc30.x86_64). Tarantool was run in Docker with the "--network host" parameter.

Package Versions:

Tarantool: 2.3.0-115-g5ba5ed37e

Docker: 19.03.3, build a872fc2f86

PHP: 7.3.11 (cli) (built: Oct 22 2019 08:11:04)

tarantool/client: 0.6.0

rybakit/msgpack: 0.6.1

ext-tarantool: 0.3.2 (+ patch for 7.3) *

ext-msgpack: 2.0.3

ext-async: 0.3.0-8c1da46

ext-swoole: 4.4.12

ext-parallel: 1.1.3

* Unfortunately, the official connector does not work with PHP versions above 7.2. To compile and run the extension on PHP 7.3, I had to use a patch.

Results

Synchronous mode

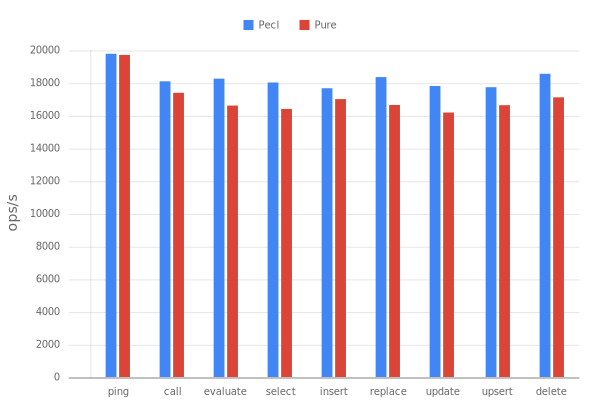

Tarantool's protocol uses the MessagePack binary format to serialize messages. In the PECL connector, serialization is hidden deep inside the library, and it is not possible to influence the encoding process from userland code. The pure PHP connector, by contrast, lets you customize the encoding process by extending the standard encoder or by using your own implementation. Two encoders are available out of the box: one based on msgpack/msgpack-php (the official MessagePack PECL extension), the other based on rybakit/msgpack (pure PHP).

Before comparing the connectors, let's measure the performance of the MessagePack encoders for the PHP connector, so that later tests can use the one that shows the best result:

Although the PHP version (Pure) is slower than the PECL extension, I would still recommend using rybakit/msgpack in real projects. The official MessagePack extension implements the format specification only partially (for example, it has no support for custom data types, without which you will not be able to use Decimal, a new data type introduced in Tarantool 2.3) and has a number of other problems (including compatibility issues with PHP 7.4). In general, the project looks abandoned.

So, let's measure the performance of connectors in synchronous mode:

As you can see from the graph, the PECL connector (Tarantool) performs better than the PHP connector (Client). This is not surprising, given that the latter, in addition to being implemented in a slower language, actually does more work: each call creates new Request and Response objects (plus Criteria in the case of Select, and Operations in the case of Update/Upsert), and the separate Connection, Packer and Handler entities add overhead as well. Obviously, you have to pay for flexibility. Overall, however, the PHP interpreter performs well: there is a difference, but it is insignificant, and it may become even smaller with preloading in PHP 7.4, not to mention JIT in PHP 8.

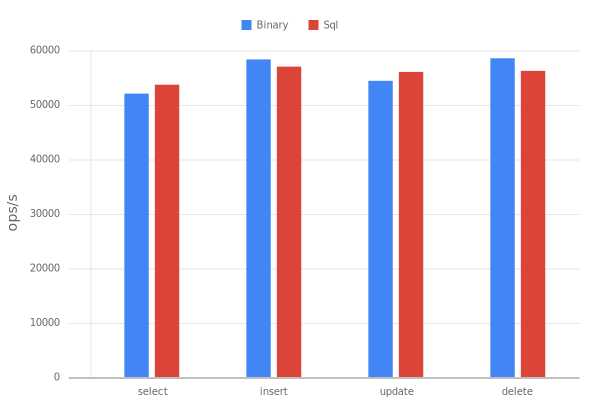

Let's move on. Tarantool 2.0 introduced SQL support. Let's try to perform Select, Insert, Update and Delete operations using the SQL protocol and compare the results with their NoSQL (binary) equivalents:

The SQL results are not very impressive (remember that we are still testing synchronous mode). However, I would not be upset about this just yet: SQL support is still in active development (support for prepared statements, for example, was added relatively recently) and, judging by the list of issues, a number of optimizations await the SQL engine in the future.

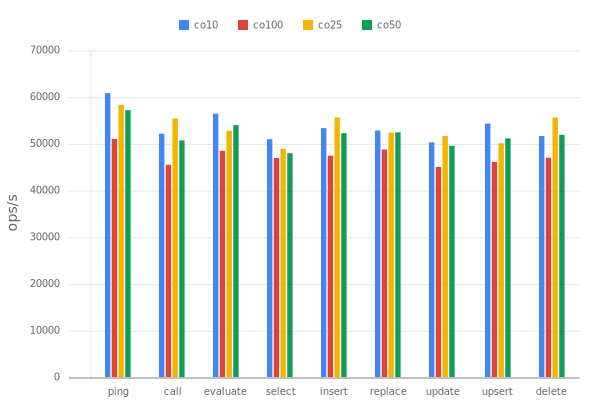

Async

Now let's see how the Async extension can help us improve the results above. For writing asynchronous programs, the extension provides an API based on coroutines, and that is what we will use. Empirically, we find that the optimal number of coroutines for our environment is 25:

We spread 10,000 operations over 25 coroutines and see what happens:

The number of operations per second increased by more than 3 times for tarantool-php/client!

Sadly, the PECL connector did not start with ext-async.
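
The arithmetic behind spreading 10,000 operations over 25 coroutines can be sketched as follows. The helper name splitEvenly is hypothetical and is not part of any of the extensions discussed here; each resulting count would then be handed to one coroutine as its share of the work.

```php
<?php

/**
 * Splits $total operations between $workers as evenly as possible.
 * Returns one operation count per worker; any remainder is given
 * to the first workers, one extra operation each.
 */
function splitEvenly(int $total, int $workers): array
{
    if ($workers < 1) {
        throw new InvalidArgumentException('At least one worker is required.');
    }
    $base = intdiv($total, $workers);
    $remainder = $total % $workers;

    $counts = [];
    for ($w = 0; $w < $workers; ++$w) {
        $counts[] = $base + ($w < $remainder ? 1 : 0);
    }

    return $counts;
}

$counts = splitEvenly(10000, 25);
echo count($counts), ' coroutines, ', $counts[0], " operations each\n";
// prints "25 coroutines, 400 operations each"
```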

What about SQL?

As you can see, in asynchronous mode the difference between the binary protocol and SQL is within the margin of error.

Swoole

Again, we find the optimal number of coroutines, this time for Swoole:

Let's settle on 25. We repeat the same trick as with the Async extension and distribute 10,000 operations between 25 coroutines. In addition, we add another test in which we divide all the work between 2 processes (that is, each process will perform 5,000 operations in 25 coroutines). Processes will be created using Swoole\Process.
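
A minimal sketch of the coroutine part might look like the code below. It is not the actual benchmark code: the function names opsPerCoroutine and runInCoroutines are hypothetical, the sketch assumes that Swoole\Coroutine\run is available in the installed Swoole version, and it assumes that every coroutine works with its own connection, created by the hypothetical $makeOperation factory.

```php
<?php

/**
 * Number of operations one coroutine performs when $total operations are
 * divided first between processes and then between coroutines.
 */
function opsPerCoroutine(int $total, int $processes, int $coroutines): int
{
    return intdiv(intdiv($total, $processes), $coroutines);
}

/**
 * Runs $coroutines Swoole coroutines; each one obtains its own operation from
 * $makeOperation and calls it $opsEach times. Requires ext-swoole when called.
 */
function runInCoroutines(int $coroutines, int $opsEach, callable $makeOperation): void
{
    // Hook PHP's blocking stream functions so that network I/O yields
    // to other coroutines instead of blocking the whole process.
    Swoole\Runtime::enableCoroutine();

    Swoole\Coroutine\run(static function () use ($coroutines, $opsEach, $makeOperation): void {
        for ($c = 0; $c < $coroutines; ++$c) {
            Swoole\Coroutine::create(static function () use ($opsEach, $makeOperation): void {
                $operation = $makeOperation(); // e.g. opens a dedicated connection
                for ($i = 0; $i < $opsEach; ++$i) {
                    $operation();
                }
            });
        }
    });
}

// Usage sketch (assumes tarantool/client is installed and a server listens on
// 127.0.0.1:3301; the space name "bench" is hypothetical):
//
// runInCoroutines(25, opsPerCoroutine(10000, 1, 25), static function (): callable {
//     $space = Tarantool\Client\Client::fromDsn('tcp://127.0.0.1:3301')->getSpace('bench');
//     return static function () use ($space): void {
//         $space->select(Tarantool\Client\Schema\Criteria::key([random_int(1, 10000)]));
//     };
// });
```

With one process, each of the 25 coroutines performs 400 operations; with 2 processes, each coroutine performs 200.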

Results:

Swoole shows a slightly lower result than Async when running in one process, but with 2 processes the picture changes dramatically (the number 2 was not chosen at random: on my machine, 2 processes showed the best result).

By the way, the Async extension also has an API for working with processes, but there I did not notice any difference between running the benchmarks in one process and in several (it is possible that I made a mistake somewhere).

SQL vs binary protocol:

As with Async, the difference between binary and SQL operations is leveled out in asynchronous mode.

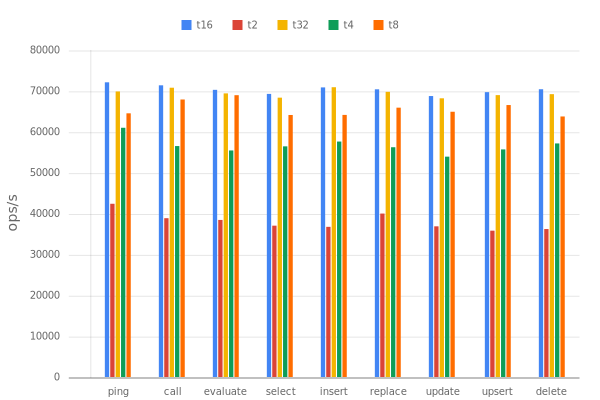

Parallel

Since the Parallel extension is not about coroutines, but about threads, we measure the optimal number of parallel threads:

It is 16 on my machine. Let's run the connector benchmarks on 16 parallel threads:

As you can see, the result is even better than with the asynchronous extensions (not counting Swoole running on 2 processes). Note that the Update and Upsert results for the PECL connector are missing. These operations crashed with an error, and I do not know whether the fault lies with ext-parallel, ext-tarantool, or both.

Now compare the performance of SQL:

Notice the similarity with the graph for connectors running synchronously?

Together

And finally, let's bring all the results together in one graph to see the big picture for the tested extensions. We add only one new test to the chart, one we have not done yet: running Async coroutines in parallel using Parallel *. The idea of integrating these two extensions has already been discussed by their authors, but no consensus was reached, so we will have to do it ourselves.

* Launching Swoole's coroutines with Parallel did not work, it seems these extensions are incompatible.

So, the final results:

Instead of a conclusion

In my opinion, the results turned out to be very decent, and for some reason I am sure that this is not the limit! Whether you need this in a real project is for you alone to decide. I will just say that for me it was an interesting experiment that let me estimate how much can be "squeezed" out of a synchronous TCP connector with minimal effort. If you have ideas for improving the benchmarks, I will gladly consider your pull request. All code with launch instructions and results is published in a separate repository.