Intel NCS2 neurostices, Myriad X chips, third-party solutions - Intel promotes solutions based on Myriad X in a wide variety of options.

Why are these accelerators so good? Firstly, the cost of one FPS. Secondly, full compatibility with OpenVINO, where you can transfer existing solutions from the CPU / GPU to a stick or MyriadX without further development or additional adaptation. Of course, adaptation is not a feature of VPU, but rather a feature of OpenVINO, where each trained network can work on any selected hardware platform, be it CPU, GPU, FPGA, VPU, and the choice can be made not before development, but after.

Consider the main indicators of the sticks and chips installed in them in the inference of neural networks based on the UNET 576x384 and Darknet19 MMR topologies (recognition of measurements and models of vehicles):

Key indicators of Intel NCS2 in the inference of neural networks UNET, Darknet19

Accordingly, at low cost, we have a high efficiency of using these devices. But what if we are talking about the inference of incoming video streams, for example, RTSP Ful HD 15 FPS H.264 / H.265 within the server infrastructure? You won’t put any sticks in the server, after all, these are solutions for home or experimentation:

Intel Neural Compute Stick

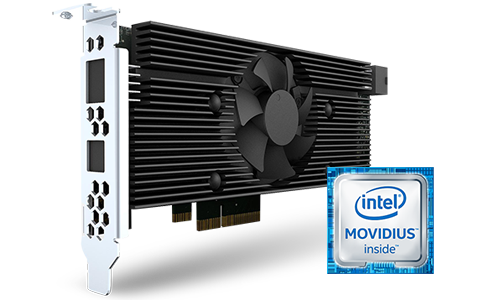

Server-side inference now often uses the nVidia Tesla 4, Tesla V100 GPUs. But there is an option from Intel with Myriad X on board - this is Intel Vision Accelerator, a specialized narrow-profile solution for PCIe or M.2, depending on the modification and the number of chips on the board. The PCIe modification of the board is presented below:

Intel Vision Accelerator

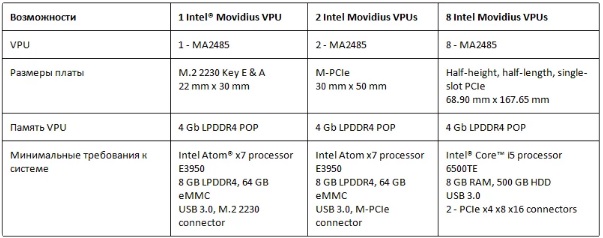

There are three main modifications here - boards with 1, 2, 8 Myriad X on board the M.2, M-PCIe or full-sized PCIe formats. Here are the main features and requirements:

Modifications of Intel Vision Accelerator with 1, 2, 8 Myriad X on board

Let's consider board modifications from UP Board, for example, UP Core AI. Such devices are often used in inference in the immediate vicinity of the camera or other autonomous small-sized devices, which can significantly save the precious resources of existing devices and do not require their complete replacement when supplementing the existing functionality with artificial intelligence skills.

Up core

But what if a higher density is required?

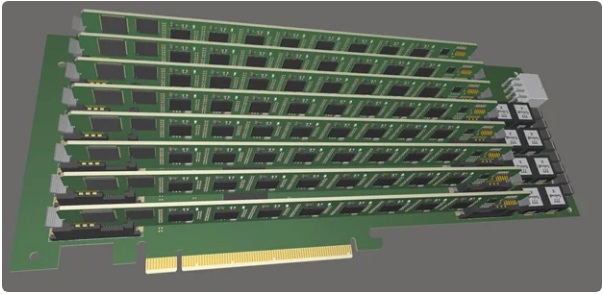

An interesting solution was proposed by ComBox Technology - a full-sized PCIe x4 carrier board and 8 blades, each of which has 8 Myriad X MA2485 chips installed. In fact, this is a PCIe bus blade system where from 1 to 8 slots with 8 inference accelerators on each can be installed within the carrier board. The bottom line is an industrial scalable, high density VPU solution for the Enterprise segment.

All blades in the system are displayed as multiple HDDL boards with 8 accelerators each. This allows you to use many as the inference of one task with many incoming streams, and for many different tasks.

ComBox Myriad X Blade Board

The solution uses PLX for 12 PCIe lanes, 8 of which go to 8 blades, one line for each, and 4 to the motherboard. Next, each line goes to the PCIe-USB switch, where 1 port is used for connection, and 8 are forwarded to each connected Myriad X.

The total power consumption of a board with 64 chips does not exceed 100 W, but serial connection of the required number of blades is also allowed, which proportionally affects power consumption.

In total, within one full-sized PCIe board, we have 64 Myriad X, and in the framework of a server solution on the platform, for example, from Supermicro 1029GQ-TRT, 4 boards in the 1U form factor, i.e. 256 Myriad X chips for 1U inference.

Supermicro 1029GQ-TRT

If we compare the solution based on Myriad X with nVidia Tesla T4, then it is reasonable to consider the ResNet50 topology, in which the VPU gives 35 FPS. In total, we have 35 FPS / Myriad X * 64 pieces = 2240 FPS / board and 8960 FPS / 1U server, which is comparable to the batch = 1 nVidia Tesla V100, with the cost of the accelerator on Myriad X much less. Not only the cost shows the feasibility of using Myriad X in inference, but also the possibility of parallel inference of various neural networks, as well as efficiency in terms of heat and energy consumption.