Last month we wrote about what's new in the hardware and software of the iPhone 11 and 11 Pro cameras. You may have noticed that the hardware changes were quite modest; most of the attention went to software image processing.

That is accurate: the impressive jump in image quality on the new iPhones comes mainly from software. I spent some time studying how the iPhone 11 produces its images, and the processing pipeline appears to be one of the most significant camera improvements in the iPhone 11 line.

What is a photograph?

This may sound like we're about to get philosophical, or write the script for an iPad photography ad. But to appreciate what is unique about the iPhone 11 camera, we first need to understand what we should expect from a photograph.

For quite some time now, you haven't been the one taking your photos. This is not a dig at you, dear reader: when you press the shutter button, the iPhone 11 hands you a photo it captured before your finger even touched the screen, which reduces the perceived shutter lag.

This works through a kind of ring buffer of frames that starts filling as soon as you open the Camera app. When you press the shutter, the iPhone 11 picks the sharpest frame from this buffer and saves it as the photo that you, unaware of the sleight of hand, believe you took yourself. In reality you only gave a hint, helping the camera choose one shot out of the many it was already taking.

Of course, you could argue that pressing the shutter was still a deliberate act, and that without it there would be no photo.
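A ring buffer that keeps only the last few frames and returns the sharpest one when the shutter is pressed can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Apple's implementation: the class and function names are hypothetical, frames are tiny grayscale grids, and sharpness is scored with simple gradient energy (a common focus metric; Apple has not documented what it uses).

```python
from collections import deque


def sharpness(image):
    """Gradient energy: sum of squared differences between neighboring pixels.

    Blurry frames have small differences between neighbors, so they score lower.
    """
    score = 0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            if x + 1 < len(row):
                score += (row[x + 1] - value) ** 2
            if y + 1 < len(image):
                score += (image[y + 1][x] - value) ** 2
    return score


class FrameRingBuffer:
    """Hypothetical zero-shutter-lag buffer holding (timestamp, image) pairs."""

    def __init__(self, capacity):
        # A deque with maxlen silently drops the oldest frame when it is full
        self.frames = deque(maxlen=capacity)

    def push(self, timestamp, image):
        self.frames.append((timestamp, image))

    def on_shutter_pressed(self):
        # Return the sharpest buffered frame, not necessarily the newest one
        return max(self.frames, key=lambda frame: sharpness(frame[1]))


buffer = FrameRingBuffer(capacity=3)
blurry = [[5, 5, 5], [5, 5, 5], [5, 5, 5]]
crisp = [[0, 9, 0], [9, 0, 9], [0, 9, 0]]
buffer.push(0.00, blurry)
buffer.push(0.03, crisp)
buffer.push(0.06, blurry)
timestamp, chosen = buffer.on_shutter_pressed()
print("picked the frame captured at", timestamp)
```

The frame handed back is the one from 0.03 s, even though a newer frame exists: the user's tap only selects from what the camera was already recording.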

Still, the final photo is not the product of a single exposure. The iPhone 11 takes many shots ("a whole lot of them," to paraphrase Apple's presentation) and intelligently combines them.

Last year the iPhone XS introduced Smart HDR, which combines overexposed and underexposed frames to retain more detail in parts of the image that are in shadow or blown out. HDR stands for "high dynamic range", and for many decades it was an unattainable dream of photographic technology.

Smart HDR gets smarter

Ever since people first coated plates with silver halide to take photographs, we have been frustrated by how poorly a photograph captures detail in both the bright and the dark areas of a scene.

It is hard to pick a single exposure that works for both the background and the building itself. The image on the right is closer to what the eye sees.

Our eyes are actually quite good at this: they cover roughly 20 stops of dynamic range. By comparison, one of the newest and best cameras, the Sony A7R4, manages about 15 stops. The iPhone 11 has about 10.

Long before the digital era, film photographers faced a similar problem going from the negative (about 13 stops) to paper (about 8 stops). They had to dodge and burn in the darkroom to produce a printable photo.
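Each stop doubles the amount of light, so n stops of dynamic range correspond to a brightness ratio of 2ⁿ:1. The short sketch below turns the approximate figures quoted above into contrast ratios and shows how much the darkroom had to compress when printing a negative on paper.

```python
import math


def stops_to_contrast_ratio(stops):
    """Each stop doubles the light, so n stops span a 2**n : 1 brightness ratio."""
    return 2 ** stops


def contrast_ratio_to_stops(ratio):
    return math.log2(ratio)


# Approximate figures quoted in the text above
dynamic_range_stops = {
    "human eye": 20,
    "Sony A7R4": 15,
    "film negative": 13,
    "iPhone 11": 10,
    "photo paper": 8,
}

for medium, stops in dynamic_range_stops.items():
    print(f"{medium:>13}: {stops:2} stops = {stops_to_contrast_ratio(stops):>9,}:1")

# Printing a negative on paper means squeezing 13 stops into 8
squeeze = stops_to_contrast_ratio(13) / stops_to_contrast_ratio(8)
print(f"negative -> paper: the negative's range is {squeeze:.0f}x what the paper can reproduce")
```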

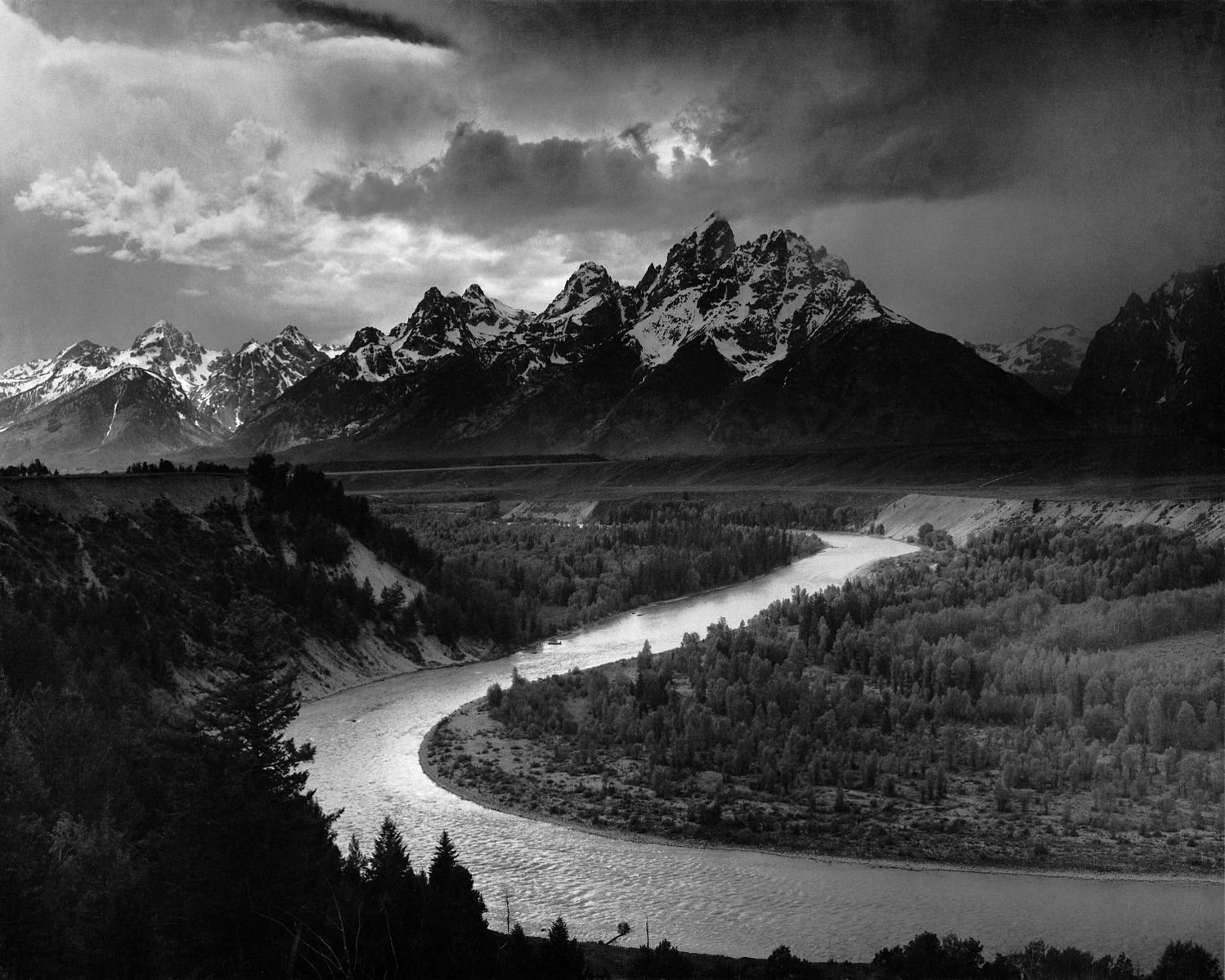

The Tetons and the Snake River, Grand Teton National Park, 1942, Ansel Adams. This photo required old-school darkroom work to achieve the look we now associate with HDR.

Today an experienced photographer can recover a wide dynamic range from a single RAW image by editing it. This is a manual process, and in difficult situations you can run into the limits of the sensor.

Recovering a usable image from a tricky iPhone 11 Pro exposure by editing. iPhone 11 RAW files have impressive dynamic range.

Smart HDR takes a completely different approach and "cheats" to extend the dynamic range. You don't need a 15-stop sensor that captures detail in light and dark areas at the same time. You need a sensor that can take pictures fast enough, and smart software that can combine them. The "editing" happens in real time, without the photographer's involvement.
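The general idea of merging bracketed frames can be illustrated with a simplified exposure fusion in the spirit of Mertens et al.: each output pixel is a weighted average across frames, with weights favoring values that are neither crushed nor clipped. This is a sketch, not Smart HDR. It assumes the frames are already aligned, uses only a "well-exposedness" weight (sigma = 0.2), and skips the multi-scale blending a real implementation needs to avoid halos; the pixel values are made up.

```python
import math


def well_exposedness(value, sigma=0.2):
    """High weight near mid-gray (0.5), low weight for near-black or clipped values."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(frames):
    """Per-pixel weighted average of aligned frames with values in [0, 1]."""
    fused = []
    for pixel_values in zip(*frames):
        weights = [well_exposedness(v) for v in pixel_values]
        total = sum(weights)  # always > 0 because exp() is positive
        fused.append(sum(w * v for w, v in zip(weights, pixel_values)) / total)
    return fused


dark = [0.02, 0.10, 0.40]    # short exposure: shadows crushed, highlight intact
bright = [0.30, 0.65, 1.00]  # long exposure: shadows visible, highlight clipped
fused = fuse_exposures([dark, bright])
print([round(v, 2) for v in fused])
```

The shadow pixel is taken mostly from the bright frame and the clipped highlight mostly from the dark frame, which is the "trick" in miniature.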

As we wrote last year, this approach has trade-offs. If you set up a sensor to shoot quickly with short exposures, you get noisy images, as RAW files from the iPhone XS and XR confirm. So the noise has to be reduced, and a side effect of noise reduction is a loss of detail.

Original vs. heavy noise reduction in Lightroom. The bricks in the wall turned into a watercolor.
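Why noise reduction costs detail is easy to see on a one-dimensional "image": a box blur averages neighboring pixels, which shrinks random noise in flat areas but also smears a sharp edge across several pixels. A minimal sketch with a made-up signal and noise level; real denoisers are far more sophisticated, but they face the same trade-off.

```python
import random
import statistics


def box_blur(signal, radius=2):
    """Replace each sample with the mean of its neighborhood (shorter window at the ends)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


rng = random.Random(42)                  # fixed seed so the run is repeatable
clean = [0.2] * 50 + [0.8] * 50          # a flat dark area, then a sharp edge
noisy = [v + rng.gauss(0, 0.05) for v in clean]
smoothed = box_blur(noisy)

print(f"noise in flat area: {statistics.stdev(noisy[:45]):.3f} -> {statistics.stdev(smoothed[:45]):.3f}")
print(f"jump across edge:   {noisy[50] - noisy[49]:.3f} -> {smoothed[50] - smoothed[49]:.3f}")
```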

Interestingly, this noise reduction led to accusations that Apple was applying a skin-smoothing filter to every shot. In fact, all textures were being smoothed. Most of the time this made photos look nicer, but the loss of detail was noticeable, and photos looked blurry:

iPhone XS RAW vs Smart HDR HEIC

This made shooting RAW an attractive option for experienced iPhone photographers. Detail was preserved, and with tools like Halide's Smart RAW or manual editing, noise could be almost eliminated. In a RAW editor it was often possible to recover nearly as much dynamic range as Smart HDR did. I preferred this to the "processed" look of iPhone XS / XR photos.

Smart HDR

Edited iPhone XS RAW image

But with the iPhone 11 I immediately noticed a tremendous improvement in quality over its predecessor. Smart HDR has become smarter.

On the iPhone XS and XR, Smart HDR sometimes went too far, smoothing textures or flattening highlights unnecessarily. Sometimes it failed outright, refusing to leave a photo that should have been overexposed simply overexposed.

An example of Smart HDR getting it wrong on the iPhone XS

This is a classic HDR problem: sometimes you recover the dynamic range but end up with a flat picture without contrast. In the worst cases the pictures were simply ruined. Smart HDR was so strongly biased against backlighting that photos taken with the iPhone XS and XR were easy to recognize.

A frame that is not too flat: iPhone XS RAW photos have enough dynamic range, but there is no escaping the grain.

The iPhone 11 camera knows that sometimes you want a bright, overexposed look. That is what makes this Smart HDR smarter.

The devil is in the details

Photos from the iPhone 11 show remarkable detail:

Normal photo from a cell phone

(In this article I evaluate ordinary photos taken on the iPhone 11. Starting with iOS 13.2, Apple sometimes applies Deep Fusion processing, which we will cover in a future article.)

Detail is what photos always seem to lack. There is an almost irrational pursuit of sharpness, endless demands for more megapixels, and constant debate about how large a sensor needs to be for acceptable shots.

As iFixit's teardown (and our own breakdown) shows, the iPhone 11's sensor has the same size and megapixel count as the XS's. So how does Apple achieve such a jump in detail?

Two things have changed.

Hardware

The hardware changes are small, but under the hood (under the lens?) there are a few clear improvements. In particular, the ISO range has been extended, by 33% on the main camera and 42% on the telephoto lens.

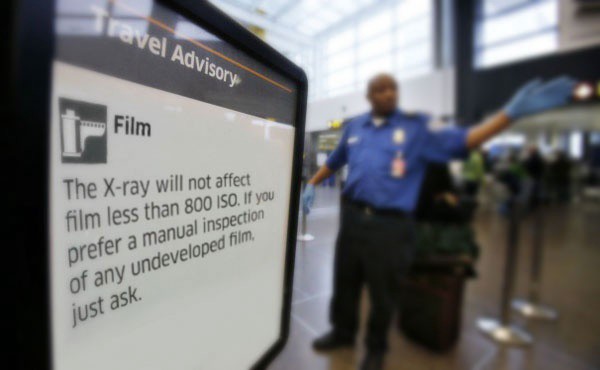

What is ISO? It is a measure of sensitivity to light. In the last century it described the sensitivity of film: films rated above ISO 800, for example, are so sensitive that airport X-ray scanners can fog them.

Today ISO is a sensor setting. In low light you can raise the ISO, which lets you use a faster shutter speed and get sharper pictures. The trade-off is that raising the ISO increases noise, which calls for stronger noise reduction, and that in turn reduces detail.
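The ISO trade-off can be put into numbers with an idealized model that assumes shot noise is the only noise source: at a fixed scene brightness, each doubling of ISO halves the exposure time, so the pixel collects half as many photons; because photon arrivals follow a Poisson distribution, the signal-to-noise ratio is the square root of the photon count. The base settings and photon rate below are hypothetical numbers chosen for illustration; real sensors add read noise and other effects on top.

```python
import math


def shutter_time_for_iso(iso, base_iso=100, base_time=1 / 15):
    """Same image brightness: doubling ISO halves the required exposure time."""
    return base_time * base_iso / iso


def shot_noise_snr(photons):
    """Poisson noise has standard deviation sqrt(N), so SNR = N / sqrt(N) = sqrt(N)."""
    return math.sqrt(photons)


PHOTONS_PER_SECOND = 30_000  # hypothetical photon rate for one pixel in dim light

for iso in (100, 200, 400, 800):
    t = shutter_time_for_iso(iso)
    photons = PHOTONS_PER_SECOND * t
    print(f"ISO {iso}: 1/{round(1 / t)} s, ~{photons:.0f} photons, SNR ~{shot_noise_snr(photons):.1f}")
```

Each step up in ISO buys a shutter speed twice as fast but costs a factor of √2 in signal-to-noise ratio, which is the noise that the denoiser then has to fight.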

Many assumed the higher maximum ISO on the iPhone 11 was only needed for Night mode, but the extended range also noticeably reduces noise in everyday shots. You don't need to push the ISO to its new maximum to see the difference; it shows up in most pictures.

There is one exception: the minimum ISO on the iPhone 11 has gone up, so at base ISO it is slightly noisier than the iPhone XS and XR. But:

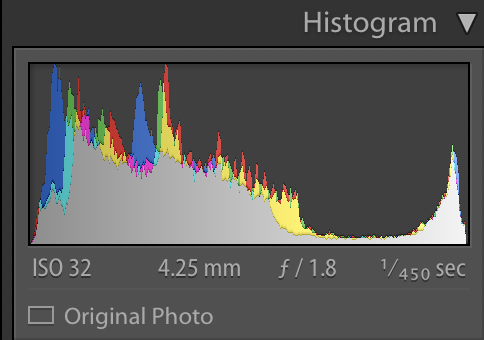

iPhone XS RAW vs. iPhone 11 Pro RAW at base ISO (20 on the XS, 32 on the iPhone 11). The improvement in detail is easy to see on the billboard atop the dark building and on the solar panels. Shot on a tripod.

Apparently the sensor delivers more detail at the cost of a little extra noise.

Still, hardware has its limits. People have compared iPhone 11 shots with DSLRs and other large cameras, but here is how the iPhone 11 sensor compares in size with the Sony A7R4's:

So yes, it takes magic to get good shots out of it. And this year the magic has become more sophisticated. Or, more precisely, more cunning.

Major improvement: selective post-processing

When editing a photo, you might apply an adjustment to the entire image, for example brightening the scene or increasing sharpness. But you can also apply effects selectively to parts of the image, the way magazines often smooth a model's skin.

Apple has built similar retouching into the capture process. The iPhone 11 automatically splits the image into regions and applies noise reduction of different strengths to each. You may not notice the noise reduction in this image:

But if you crank up the contrast, "watercolor" artifacts appear in the clouds:

Processed HEIC vs. RAW

Noise in the sky is removed more aggressively. Because the sky is usually a uniform surface, iOS decides it can apply stronger noise reduction, leaving the sky smooth without a single grain.

Other areas get other levels of noise reduction. The cacti and other objects the camera judged worth keeping detailed were left almost unprocessed.

However, the technique isn't perfect. The bushes, which weren't very sharp in the original, lost detail. iOS doesn't actually know where the cactus is and where the sky is; it apparently chooses the noise reduction strength based on how much detail a region had to begin with.
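Noise reduction whose strength follows how much detail a region already has, rather than what the object is, can be sketched as a detail-adaptive filter: measure local variance, then blend toward a blurred version more strongly where the variance is low. This is our illustration of the general idea, not Apple's algorithm; the threshold and the sample "sky" and "cactus" values are made up. Faint texture (like the soft bushes) also has low variance, so it would be smoothed along with the sky.

```python
import statistics


def box_blur(signal, radius=2):
    """Mean of each sample's neighborhood (shorter window at the ends)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


def adaptive_denoise(signal, radius=2, detail_threshold=0.01):
    """Smooth hard where local variance is low, lightly where it is high.

    strength = t / (t + variance) is close to 1 in flat regions and falls
    toward 0 in busy ones; t (detail_threshold) is an arbitrary choice here.
    """
    blurred = box_blur(signal, radius)
    out = []
    for i, (original, smooth) in enumerate(zip(signal, blurred)):
        window = signal[max(0, i - radius): i + radius + 1]
        local_var = statistics.pvariance(window)
        strength = detail_threshold / (detail_threshold + local_var)
        out.append(strength * smooth + (1 - strength) * original)
    return out


sky = [0.70, 0.72, 0.69, 0.71, 0.70, 0.68, 0.71, 0.70]  # nearly uniform, faint noise
cactus = [0.2, 0.8, 0.2, 0.8, 0.2, 0.8, 0.2, 0.8]       # strong texture
result = adaptive_denoise(sky + cactus)

print("sky:   ", [round(v, 3) for v in result[:8]])
print("cactus:", [round(v, 3) for v in result[8:]])
```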

One way the computer sees details

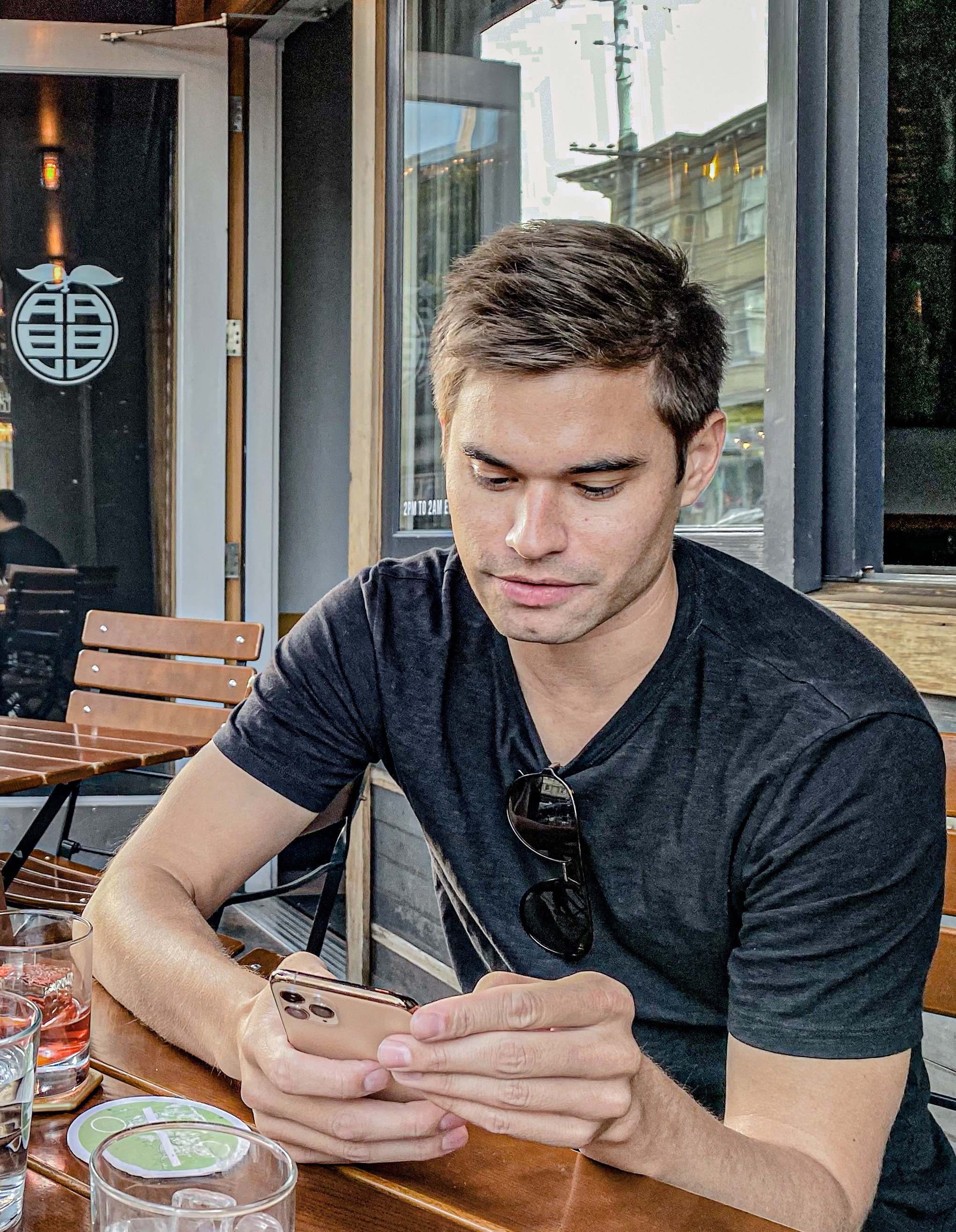

When it recognizes a face, iOS behaves completely differently. It can specifically preserve the texture of skin, hair, and clothing.

Edited to highlight the areas where semantic segmentation retained texture. The background decorations are rather generously blurred, but the camera took special care to keep the texture of my friend Chris's skin and hair. The gray window frame behind him, which had rich texture, turned completely to "watercolor".

Apple calls this "photo segmentation": it uses machine learning to break the photo into regions and process each one separately. It's impressive that all this happens instantly.

iOS 13 can specifically pick out hair, teeth, eyes, skin, and so on.
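Segmentation-driven processing adds one step to the filter idea: a mask assigns each pixel a label, and the label, rather than local detail alone, decides how hard to smooth it. A minimal sketch assuming a hypothetical label set and hand-picked strengths; Apple has not published how its segmentation feeds into noise reduction.

```python
def box_blur(signal, radius=1):
    """Mean of each sample's neighborhood (shorter window at the ends)."""
    out = []
    for i in range(len(signal)):
        window = signal[max(0, i - radius): i + radius + 1]
        out.append(sum(window) / len(window))
    return out


# Hypothetical per-label strengths: 1.0 = replace with blurred value, 0.0 = untouched
STRENGTH_BY_LABEL = {"sky": 0.9, "background": 0.6, "skin": 0.15, "hair": 0.1}
DEFAULT_STRENGTH = 0.5


def semantic_denoise(signal, labels, strengths=STRENGTH_BY_LABEL, radius=1):
    """Blend each pixel toward its blurred value by an amount chosen from its label."""
    blurred = box_blur(signal, radius)
    out = []
    for original, smooth, label in zip(signal, blurred, labels):
        s = strengths.get(label, DEFAULT_STRENGTH)
        out.append(s * smooth + (1 - s) * original)
    return out


signal = [0.3, 0.7, 0.3, 0.7, 0.3, 0.7, 0.3, 0.7]  # identical texture everywhere
labels = ["sky"] * 4 + ["hair"] * 4               # stand-in for a segmentation mask
denoised = semantic_denoise(signal, labels)
print([round(v, 3) for v in denoised])
```

The same texture is flattened where the mask says "sky" and kept where it says "hair", which matches the behavior seen around Chris's face versus the window frame.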

This targeted post-processing makes the iPhone 11 a completely new camera. Last year's Smart HDR was not this selective. The new processing pipeline edits every picture you take, preserving detail in some areas and smoothing textures in others.

In the iPhone 11's Night mode you can also see detail vanish in some areas. However, this usually happens in parts of the image you don't care much about. Night mode is somehow, surprisingly and even mysteriously, able to produce sharper images than the regular mode at times, preserving detail in the parts of the image the camera selects during processing.

It's ironic that last year's Beautygate scandal turned out to be unfounded, because this year the iPhone 11 really does smooth some parts of the image more than others. But since the camera is now smart enough to do it selectively, and where you won't notice, no scandal has arisen this year. Images just look sharper and smoother.

Improving noise reduction

It is quite difficult to compare objectively how the iPhone 11 and iPhone XS handle noise (semantic segmentation cannot be turned off), but the iPhone 11 seems to reduce noise while preserving more detail.

One might suspect that the new noise reduction uses machine learning to fill in detail that would otherwise be lost, as many of today's technologies do. Either way, the improvement is welcome.

Post-Processing and Ultra Wide Angle Lenses

We've already mentioned our disappointment that RAW files can't be captured from the ultra wide lens. Perhaps a software update will fix this. In the next photo you can see how most of the detail is blurred away:

The ultra wide lens is the slowest of the three rear lenses, so it has to use a higher ISO. To see the effect in real time, spin the Camera app's fancy new zoom wheel just after sunset. As soon as you go below 0.9x, you will notice texture, say of your carpet, disappearing.

As the light fades, the ultra wide lens produces very blurry results with many noise reduction artifacts
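How much slower is the ultra wide lens? The light a lens passes scales with 1/N², where N is the f-number, so the gap in stops between two lenses is 2·log2(N_slow / N_fast). The sketch uses the apertures commonly listed for the iPhone 11 Pro (f/1.8 wide, f/2.0 telephoto, f/2.4 ultra wide) and ignores differences in sensor size between the three cameras, so treat it as a rough estimate.

```python
import math


def stops_slower(f_fast, f_slow):
    """Light gathered scales with 1 / N**2, so the gap in stops is 2 * log2(N_slow / N_fast)."""
    return 2 * math.log2(f_slow / f_fast)


# Apertures as commonly listed for the iPhone 11 Pro rear cameras (an assumption here)
lenses = {"wide": 1.8, "telephoto": 2.0, "ultra wide": 2.4}

for name, f in lenses.items():
    gap = stops_slower(lenses["wide"], f)
    iso_factor = 2 ** gap  # ISO multiplier needed to keep the same shutter speed
    print(f"{name:10} f/{f}: {gap:.2f} stops slower than wide, needs ~{iso_factor:.2f}x the ISO")
```

Under these assumptions the ultra wide needs roughly 1.8 times the ISO of the main camera for the same shutter speed, which is where the extra noise, and the extra noise reduction, comes from.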

The ultra wide lens has one distinctive difficulty: chromatic aberration, or, in simple terms, purple fringing. The telephoto lens suffers from it less, but you can still see it in its RAW files. Note the faint pink-purple fringes on the leaves in this shot, taken against the sun in the Hong Kong Botanical Garden:

Photographers who shoot with "fast" lenses (lenses with a wide aperture, roughly f/2.0 to f/0.95; the smaller the number, the larger the aperture) are used to this. The more light a lens lets in, the more likely it is to suffer from this effect. Fortunately, apps like Lightroom can remove such fringes, and now Apple's automatic post-processing removes this chromatic aberration too.

After a quick dose of Apple magic, the result looks much better.
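A naive way to suppress purple fringing is to find pixels whose red and blue channels both stand well above green (the magenta-purple tint typical of fringes) and pull those channels back toward green. This sketch is our own simplification, not how Lightroom or Apple do it; the threshold is arbitrary, and a practical tool would at least restrict the fix to pixels next to high-contrast edges so that genuinely purple objects are left alone.

```python
def remove_purple_fringe(pixel, threshold=30):
    """Pull red and blue toward green when both exceed it by more than `threshold`.

    Moving R and B halfway to G halves the purple tint; green is left untouched.
    """
    r, g, b = pixel
    if r - g > threshold and b - g > threshold:
        r = (r + g) // 2
        b = (b + g) // 2
    return (r, g, b)


for pixel in [(200, 100, 220), (120, 120, 120), (60, 160, 50)]:
    print(pixel, "->", remove_purple_fringe(pixel))
```

Only the purple pixel changes; the neutral gray and the green leaf pass through untouched.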

If anyone has an uncorrected RAW from the ultra wide lens, I'd love to see it. From fisheye distortion to edge artifacts, I want to see the (ugly) truth.

A serious rival to DSLRs?

This year's changes are very impressive. Thanks to hardware improvements, per-frame semantic adjustments, an updated Smart HDR, and a retuned noise reduction algorithm, the iPhone 11 and 11 Pro have become real contenders for the smartphone camera throne.

In the past, the iPhone took great photos for social media, but viewed on a large screen they couldn't compete. That's why I often bring a "big" camera on trips.

But these major improvements in frame processing make it fair to say that the iPhone 11 is the first iPhone that can truly compete with a dedicated camera.

And this one? It's just a shot from the iPhone 11 Pro's main lens, scaled down to 50%. Yep.

Thanks to clever processing, sharpness and detail have reached a level suitable for printing and for viewing on high-resolution screens.

One of the shots below was taken with the $4,000 Sony A7R4, the newest flagship full-frame camera, released in September, using a $1,400 G Master lens at a focal length close to the iPhone's wide lens (24 mm on the camera, 26 mm on the iPhone). The other was taken on the iPhone 11 Pro with its 52 mm telephoto lens.

You can probably tell which shot came from which camera, but the point isn't whether the iPhone has reached parity; it's when "good enough" quality arrives. Camera makers had better step up their game.

Of course, the original Sony frame was much larger, since it comes from a 61-megapixel sensor versus the iPhone 11's 12 megapixels. The iPhone 11 shot had to be scaled down slightly to match the Sony frame.
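Matching a 61-megapixel frame to a 12-megapixel one is a matter of area: pixel count scales with the square of the linear size, so the per-side scale factor is the square root of the megapixel ratio. A quick check using the nominal megapixel counts from the text:

```python
import math


def linear_scale(megapixels_from, megapixels_to):
    """Pixel count grows with area, so each side scales by the square root of the ratio."""
    return math.sqrt(megapixels_to / megapixels_from)


factor = linear_scale(61, 12)
print(f"Scale the 61 MP frame to {factor:.1%} of its width and height to match 12 MP")

# Conversely, if "50%" refers to each side, a 12 MP shot keeps a quarter of its pixels
print(f"12 MP at 50% per side: {12 * 0.5 ** 2:.0f} MP")
```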

Does this mean that RAW is no longer needed?

No.

With all this detail, it might seem pointless to shoot RAW. After all, if we can get all the detail without the noise, why bother?

However, shooting RAW on the iPhone 11 still makes sense if you want to squeeze even more out of this tiny but capable camera array.

RAW still hides more details

This may surprise you, but yes: RAW still holds more detail. This is especially noticeable in fine details that semantic segmentation and the noise reduction algorithm don't consider important, and when Smart HDR goes too far in merging frames:

Left: Smart HDR; right: edited RAW

Yes, RAW still preserves detail better. Look at this crop at 100%: the sweater, the hair, the grass, and even the trees in the background:

Smart HDR vs RAW

Yes, there is grain. But in my opinion, the slight grain you get in almost any shooting conditions only improves the picture.

Does the grain in this image annoy you, or does it add charm?

Of course, you can always remove the noise later in your favorite app. But that remains a choice, unlike when all you have is an image from which the noise, along with some of the detail and texture, has been irretrievably removed.

Editing flexibility

When saving a picture as JPEG or HEIC, the pipeline throws away a lot of data that is useful for editing. Pushing highlights and shadows sometimes reveals strange patches and edges around objects:

Sometimes, when recovering highlights, the sky looks odd; the extra data in the RAW file lets you produce natural-looking gradients:
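A rough way to see why the extra data matters: recovering a sky typically stretches a narrow band of bright tones across a wider range, and the number of distinct levels available in that band depends on bit depth. The sketch assumes an 8-bit processed image versus 12-bit RAW data and a simple linear stretch; real files are gamma-encoded and editors use curves rather than straight lines, so the numbers only show the direction of the effect: fewer levels mean larger jumps between neighboring tones, which show up as banding.

```python
def quantize(value, bits):
    """Round a value in [0, 1] to the nearest level available at `bits` bits."""
    levels = 2 ** bits - 1
    return round(value * levels) / levels


def stretch_highlights(bits, samples=2000):
    """Quantize a smooth bright gradient (0.9 to 1.0), then stretch it to 0.4 to 1.0.

    Returns (number of distinct tones, largest jump between neighboring tones).
    """
    gradient = [0.9 + 0.1 * i / (samples - 1) for i in range(samples)]
    stretched = sorted({0.4 + (quantize(v, bits) - 0.9) * 6 for v in gradient})
    largest_step = max(b - a for a, b in zip(stretched, stretched[1:]))
    return len(stretched), largest_step


for bits in (8, 12):
    tones, step = stretch_highlights(bits)
    print(f"{bits}-bit: {tones} distinct tones, largest step {step:.4f}")
```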

RAW files on the iPhone 11 now lean toward a bright, flat picture. Yet despite this seemingly overexposed look, aggressively pulling the exposure down shows that no highlight detail has actually been lost:

For the pros, here are the original levels:

No HDR needed. Beautiful, smooth highlight recovery.

A similarly exposed JPEG or HEIC processed with Smart HDR may look similar, but it is less flexible to edit.
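Why a bright but unclipped RAW file can be pulled down cleanly comes down to saturation: in linear sensor data, lowering the exposure by n stops divides every value by 2ⁿ, so tones recorded below the sensor's clipping point stay distinct, while anything that hit the clip level collapses into one flat value. A minimal sketch with normalized, made-up scene values:

```python
def capture(scene, clip=1.0):
    """Sensor values saturate at `clip`; anything brighter is recorded as `clip`."""
    return [min(v, clip) for v in scene]


def pull_exposure(values, stops):
    """In linear data, lowering exposure by n stops divides every value by 2**n."""
    return [v / 2 ** stops for v in values]


scene = [0.70, 0.85, 0.97, 1.30, 1.60]   # the last two exceed saturation
pulled = pull_exposure(capture(scene), stops=2)
print(pulled)  # the first three stay distinct; the clipped pair becomes one flat tone
```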

White balance editing is a superpower

Cameras face many kinds of lighting and have to guess what in the picture is white. You can photograph a beautiful snowy scene at Christmas and find the snow rendered blue or yellow, because the camera was confused by the blue sky reflecting off the snow or by the orange glow of sunset.

If you take a photo with the Camera app, the white balance is baked into the picture and you can't change it. You can shift all the colors by adjusting tint or hue, but you can't simply reassign the white balance after the fact. With RAW files you can, which lets you create expressive shots with a completely different mood:

Or you can take a very creative approach to color and color balance, creating frames that would fit right in on /r/outrun (a subreddit dedicated to the retro-futuristic 1980s aesthetic of neon lights and chrome):
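At its simplest, reassigning white balance in a RAW converter means multiplying each channel of the linear sensor data by a gain. The sketch derives gains from a patch that should be neutral (here, blue-tinted snow) so that it comes out gray. The values are hypothetical, and real converters also apply camera color matrices and express the result as temperature and tint; the point is that these gains act on unprocessed data, whereas in a JPEG the tone curve and clipping are already baked in.

```python
def gains_from_neutral(patch_rgb):
    """Per-channel multipliers that turn a known-neutral patch gray, with green fixed at 1."""
    r, g, b = patch_rgb
    return (g / r, 1.0, g / b)


def apply_white_balance(pixel, gains):
    """Multiply each linear channel by its gain."""
    return tuple(channel * gain for channel, gain in zip(pixel, gains))


snow_as_captured = (0.50, 0.60, 0.80)  # hypothetical blue-tinted snow, linear RGB
gains = gains_from_neutral(snow_as_captured)
balanced = apply_white_balance(snow_as_captured, gains)
print("gains:", [round(x, 3) for x in gains])
print("snow after balancing:", [round(c, 3) for c in balanced])
```

Picking gains that deliberately do not neutralize the patch is the same operation used creatively: push blue and magenta instead of removing them, and you are halfway to an /r/outrun frame.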

The more our cameras edit pictures on their own, the more often you want to reject the aesthetic they impose and take matters into your own hands. Photography is, after all, an art!

No need to choose

If all this sounds a bit confusing, we decided the best way to let you enjoy both worlds, the magic of processing and the limitless flexibility of RAW, is to give you access to both at once. Apps like Halide can shoot RAW and a processed JPEG at the same time, so you can decide later which you like better. The exception is the ultra wide lens.

Halide 1.15: smarter and smarter

Halide 1.15 has a new setting: "enable smart processing." It lets the camera process the image for as long as necessary to squeeze out the full dynamic range.

The only downside is that you have to turn off RAW capture for this, since it is impossible to shoot RAW and use this processing at the same time (a restriction beyond our control).

The new setting is available for devices running iOS 13, and its results are best seen on the iPhone XS, XR, 11, and 11 Pro. Deep Fusion is only available on the iPhone 11 and 11 Pro, starting with iOS 13.2.

We're excited to let you play with both RAW and fully processed images and find out which you like best.