
In the first half of the 1970s, the ecology of computer networks moved beyond its original ancestor, ARPANET, and branched out in several different directions. ARPANET users discovered a new application, email, which became the main activity on the network. Entrepreneurs launched their own variants of ARPANET to serve commercial customers. Researchers around the world, from Hawaii to Europe, developed new kinds of networks to meet needs or fix shortcomings that ARPANET had not taken into account.

Almost all participants in this process moved away from ARPANET's originally stated goal: providing shared access to computers and programs for a diverse range of research centers, each with its own special resources. Computer networks became primarily a means of connecting people with each other, or with remote systems that served as sources or sinks of human-readable information, such as information databases or printers.

Licklider and Robert Taylor had foreseen this possibility, although it was not the goal they set out to achieve when they began the first network experiments. Their 1968 article, “The Computer as a Communication Device,” lacks the energy and timeless quality of the prophetic milestones in the history of computing, such as Vannevar Bush's “As We May Think” or Turing's “Computing Machinery and Intelligence”. However, it contains a prophetic passage about a fabric of social interaction woven together with computer systems. Licklider and Taylor described a near future in which:

You will not send letters or telegrams; you will simply identify the people whose files should be linked to yours, and which parts of them, and perhaps specify a coefficient of urgency. You will seldom make telephone calls; you will ask the network to link your consoles together.

You will have available functions and services to which you subscribe on a regular basis, and others that you call on as needed. The first group will include investment guidance, tax counseling, selection of information in your field of specialization, announcements of cultural, sporting and entertainment events that fit your interests, etc.

(Admittedly, their article also predicted that unemployment would disappear from the planet, because in the end everyone would become a programmer serving the needs of the network, busy with the interactive debugging of programs.)

The first and most important component of this computer-mediated future, email, spread across ARPANET like a virus in the 1970s, on its way to taking over the world.

To understand how email developed on ARPANET, you first need to understand an important change that swept through the computer systems across the network in the early 1970s. When ARPANET was first conceived in the mid-1960s, the hardware and operating software at each site had virtually nothing in common. Many sites were built around special one-of-a-kind systems, for example Multics at MIT, the TX-2 at Lincoln Laboratory, and the ILLIAC IV built at the University of Illinois.

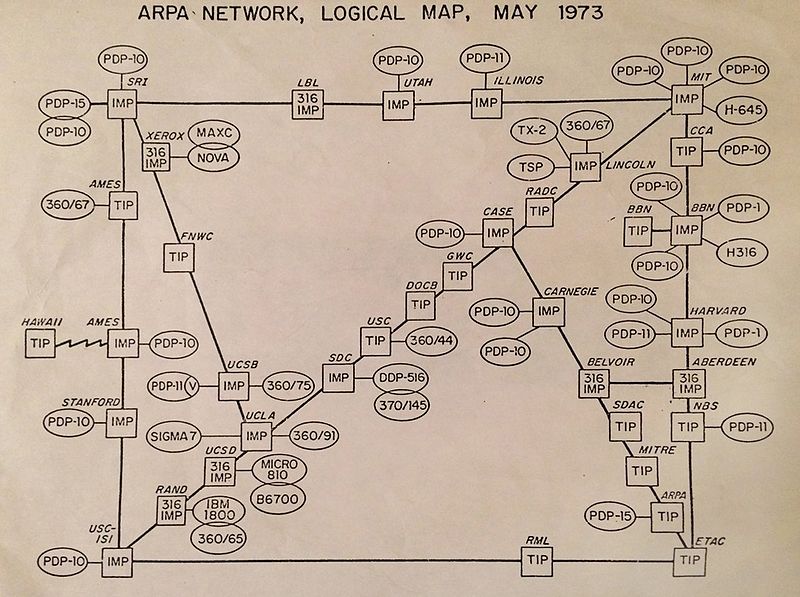

But by 1973 the landscape of computer systems connected to the network had become considerably more uniform, thanks to the wild success of Digital Equipment Corporation (DEC) and its penetration of the scientific computing market (DEC was the brainchild of Ken Olsen and Harlan Anderson, based on their experience with the TX-2 at Lincoln Laboratory). DEC's PDP-10 mainframe, released in 1968, provided reliable time-sharing for small organizations, along with a whole set of built-in tools and programming languages that made it easy to adapt the system to specific needs. This was exactly what the scientific centers and research laboratories of the time needed.

See how many PDPs there are!

BBN, which was responsible for ARPANET support, made this package even more attractive by creating the Tenex operating system, which added paged virtual memory to the PDP-10. This greatly simplified managing and using the system, since it was no longer necessary to fit the set of running programs into the available memory. BBN distributed Tenex for free to other ARPA sites, and the OS soon became dominant on the network.

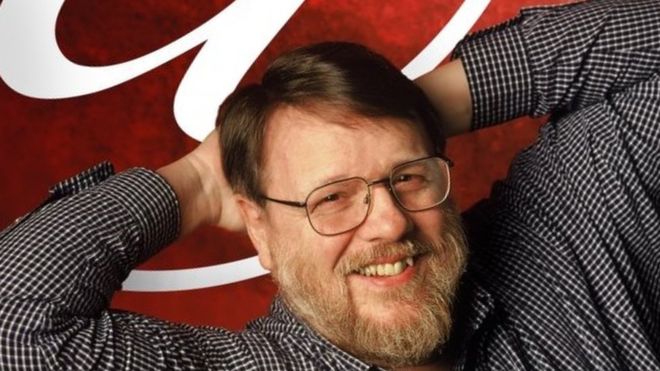

But how does all this relate to email? Users of time-sharing systems were already familiar with electronic messaging, since by the end of the 1960s most of these systems provided mailboxes of one kind or another. But this was purely internal mail: only users of the same system could exchange letters. The first person to take advantage of a network to transfer mail from one machine to another was Ray Tomlinson, an engineer at BBN and one of the authors of Tenex. He had already written SNDMSG, a program for sending mail to another user of the same Tenex system, and CPYNET, a program for sending files over the network. It took only a small leap of imagination to see how to combine the two into network mail. The earlier programs needed only a user name to identify the recipient, so Tomlinson came up with the idea of joining the local user name and the host name (local or remote) with the @ symbol, producing an email address unique across the entire network (until then the @ symbol had rarely been used, mainly to denote prices: 4 cakes @ $2 apiece).
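The addressing idea can be illustrated with a minimal Python sketch: the part after the @ decides whether a message stays on this machine (the job SNDMSG already did) or travels over the network (the job CPYNET did). This is not Tenex code; the function names and host names are hypothetical, chosen only for illustration.

```python
# Hypothetical sketch, not Tenex code: the host part of "user@host" picks
# between local delivery (SNDMSG's original job) and a network transfer
# (a CPYNET-style file copy). Host and function names are illustrative.

LOCAL_HOST = "BBN-TENEXA"  # hypothetical name of the machine we run on


def parse_address(address: str) -> tuple[str, str]:
    """Split 'user@host' into (user, host); a bare name means this host."""
    user, sep, host = address.partition("@")
    if not user:
        raise ValueError(f"missing user name in {address!r}")
    if sep and not host:
        raise ValueError(f"missing host name in {address!r}")
    return user, (host if sep else LOCAL_HOST)


def route(address: str) -> str:
    """Describe how a message to `address` would be delivered."""
    user, host = parse_address(address)
    if host.upper() == LOCAL_HOST:
        return f"append to local mailbox of {user}"
    return f"send over network to {host} for {user}"


if __name__ == "__main__":
    print(route("tomlinson"))        # no @: old-style local address
    print(route("roberts@SRI-ARC"))  # remote host: goes over the network
```

A bare user name still works exactly as it did before the network existed, which is part of what made the scheme easy to adopt.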

Ray Tomlinson in later years, with his signature @ behind him

Tomlinson began testing his new program locally in 1971, and in 1972 his networked version of SNDMSG was included in a new Tenex release, allowing Tenex mail to break out of a single host and spread across the network. The abundance of machines running Tenex immediately put Tomlinson's hybrid program within reach of most ARPANET users, and email was an instant success. ARPA's leadership quickly made email part of their daily routine. Stephen Lukasik, director of ARPA, was one of its first users, as was Larry Roberts, then still head of the agency's computer science office. The habit inevitably passed on to their subordinates, and email soon became one of the basic facts of life and culture on ARPANET.

Tomlinson's email program spawned many imitations and new developments, as users looked for ways to improve its rudimentary functionality. Most of the early innovations focused on fixing the shortcomings of the mail reader. Once mail crossed the boundaries of a single computer, the volume of messages received by active users grew along with the network, and the traditional approach of treating incoming mail as plain text no longer worked. Larry Roberts himself, unable to cope with the flood of incoming messages, wrote his own inbox program, called RD. But by the mid-1970s, MSG, written by John Vittal of the University of Southern California, led in popularity by a wide margin. Today we take for granted the ability to automatically fill in the subject and recipient fields of an outgoing message from an incoming one at the press of a button. But it was Vittal's MSG that first offered this amazing ability to “reply” to a letter, in 1975; it too was part of the Tenex software suite.
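What “reply” automates can be shown with a small Python sketch. It assumes a message header is modeled as a dictionary with fields named From, To and Subject, and it uses the familiar “Re:” prefix as an illustrative convention; MSG's actual internal format and behavior are not described here, so treat this as a hypothetical model rather than Vittal's code.

```python
# Hypothetical sketch, not MSG's code: a header is modeled as a dict, and
# the field names and "Re:" prefix are illustrative assumptions.

def make_reply(incoming: dict[str, str], me: str) -> dict[str, str]:
    """Pre-fill the header of a reply from the header of an incoming message."""
    subject = incoming.get("Subject", "")
    # Prefix "Re:" only once, so replying to a reply does not stack prefixes.
    if not subject.lower().startswith("re:"):
        subject = f"Re: {subject}".rstrip()
    return {
        "From": me,                # the person writing the reply
        "To": incoming["From"],    # the answer goes back to the original sender
        "Subject": subject,
    }


if __name__ == "__main__":
    letter = {"From": "roberts@SRI-ARC", "To": "vittal@ISI", "Subject": "Budget"}
    print(make_reply(letter, "vittal@ISI"))
```

The point is simply that every field the user would otherwise retype is already present in the incoming message.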

This variety of programs called for standards. And this was the first, but by no means the last, case in which the networked computer community had to develop standards after the fact. Unlike the basic ARPANET protocols, email already existed in the wild in many variants before any standard appeared. Inevitably, disagreements and political tensions arose, centering on the main documents describing the email standard, RFC 680 and RFC 720. In particular, users of operating systems other than Tenex were irritated that the assumptions built into the proposals were tied to the peculiarities of Tenex. The conflict never flared up too much: all ARPANET users in the 1970s were still part of one relatively small scientific community, and the disagreements were not that large. Still, it was a foretaste of battles to come.

The unexpected success of email was the most important event in the development of the network's software layer in the 1970s, the layer furthest removed from the physical details of the network. At the same time, other people set out to redefine the fundamental “communication” layer, through which bits flowed from one machine to another.

Aloha

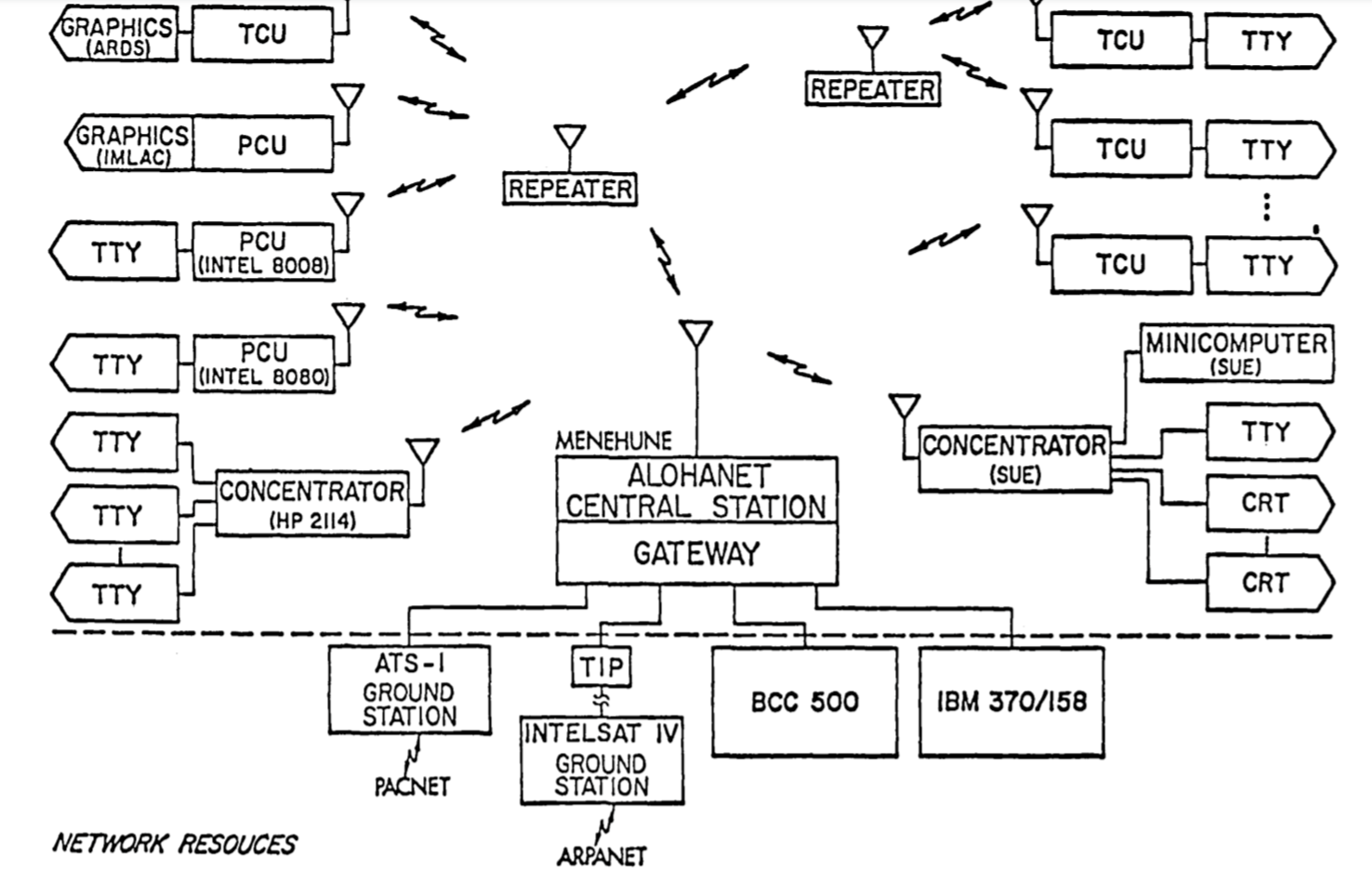

In 1968, Norman Abramson arrived at the University of Hawaii from California to take up a joint post as professor of electrical engineering and computer science. The university had its main campus on Oahu and a secondary campus in Hilo, as well as several community colleges and research centers scattered across the islands of Oahu, Kauai, Maui and Hawaii. Between them lay hundreds of kilometers of water and mountainous terrain. A powerful IBM 360/65 ran on the main campus, but leasing a dedicated line from AT&T to connect it to a terminal at one of the community colleges was not as easy as on the mainland.

Abramson was an expert in radar systems and information theory, and had once worked as an engineer at Hughes Aircraft in Los Angeles. His new environment, with all its physical obstacles to wired data transmission, inspired a new idea: what if radio were a better way to connect computers than the telephone system, which, after all, was designed to carry voice, not data?

To test his ideas and build a system called ALOHAnet, Abramson received funding from Bob Taylor at ARPA. In its initial form it was not a computer network at all, but a medium for connecting remote terminals to a single time-sharing system running on the IBM computer on the Oahu campus. Like ARPANET, it had a dedicated minicomputer for processing the packets received and sent by the 360/65: Menehune, the Hawaiian equivalent of the IMP. However, ALOHAnet did not complicate its life with the point-to-point packet routing used in ARPANET. Instead, each terminal that wanted to send a message simply broadcast it over the air on a dedicated frequency.

Fully deployed ALOHAnet in the late 1970s, with multiple computers on the network

The traditional engineering way to handle such a shared transmission band was to cut it into slices, by time or by frequency, and allocate a slice to each terminal. But to handle messages from hundreds of terminals under such a scheme, each terminal would have to be limited to a small fraction of the available bandwidth, even though only a few of them might actually be active at any given moment. Instead, Abramson decided not to prevent terminals from transmitting at the same time. If two or more messages overlapped, the central computer detected the corruption thanks to error-detecting codes and simply discarded those packets. Having received no acknowledgment, the senders tried again after a random delay. Abramson calculated that such a simple protocol could support up to several hundred simultaneously active terminals, although, because of the many overlapping signals, only about 15% of the bandwidth would actually be used. His calculations also showed, however, that as the network grew further, the whole system would descend into a chaos of noise.
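Both the low utilization and the collapse under load show up in the standard textbook model of pure (unslotted) ALOHA. This is not necessarily Abramson's own derivation: it assumes transmissions arrive as a Poisson process with offered load G (new plus retransmitted packets per packet-time), and that a packet survives only if no other packet starts within one packet-time before or after it. The throughput is then S = G·e^(-2G), which peaks at 1/(2e), about 18%, at G = 0.5, the same ballpark as the 15% figure above. A minimal Python sketch:

```python
import math

# Textbook model of pure ALOHA, assuming Poisson arrivals.
# G = offered load (all transmission attempts per packet-time)
# S = throughput (fraction of channel time carrying successful packets)
# A packet succeeds only if nothing else starts within a vulnerable window
# of two packet-times, which gives S = G * exp(-2G).

def aloha_throughput(offered_load: float) -> float:
    """Return S = G * exp(-2G) for pure ALOHA."""
    if offered_load < 0:
        raise ValueError("offered load must be non-negative")
    return offered_load * math.exp(-2 * offered_load)


def peak_throughput(step: float = 0.001, max_load: float = 5.0) -> tuple[float, float]:
    """Scan offered loads on a grid and return (G, S) with the best S found."""
    loads = (i * step for i in range(int(max_load / step) + 1))
    return max(((g, aloha_throughput(g)) for g in loads), key=lambda p: p[1])


if __name__ == "__main__":
    g_best, s_best = peak_throughput()
    print(f"peak: S = {s_best:.3f} at G = {g_best:.2f}")
    # Past the peak, more traffic means more collisions and less useful work.
    for g in (0.1, 0.5, 1.0, 2.0, 4.0):
        print(f"G = {g:>3}: S = {aloha_throughput(g):.3f}")
```

The falling tail of the curve is the “chaos of noise”: once retransmissions pile up, almost every packet collides and useful throughput drops toward zero.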

Office of the future

Abramson's concept of “packet broadcasting” did not make much of a splash at first. But it was reborn a few years later, this time on the mainland, thanks to the new Xerox Palo Alto Research Center (PARC), which opened in 1970 right next to Stanford University, in an area that had recently been nicknamed Silicon Valley. Some of Xerox's xerography patents were about to expire, and the company risked being trapped by its own success, unwilling or unable to adapt to the rise of computing and integrated circuits. Jack Goldman, head of research at Xerox, convinced the top executives that a new lab, set apart from headquarters' influence, located in a pleasant climate and offering good salaries, would attract the talent needed to keep the company at the forefront of progress by developing the information architecture of the future.

PARC certainly succeeded in attracting the best talent in computer science, not only because of its working conditions and generous salaries, but also thanks to the presence of Robert Taylor, who had launched the ARPANET project in 1966 as head of ARPA's Information Processing Techniques Office. Robert Metcalfe, a hot-tempered and ambitious young engineer and computer scientist from Brooklyn, was one of those who came to PARC through his ARPA connections. He joined the lab in June 1972, after working part-time for ARPA as a graduate student and building an interface to connect MIT to the network. Even after settling in at PARC, he remained an ARPANET “ambassador”: he traveled around the country helping to connect new sites to the network, and also helped prepare ARPA's demonstration at the 1972 International Conference on Computer Communication.

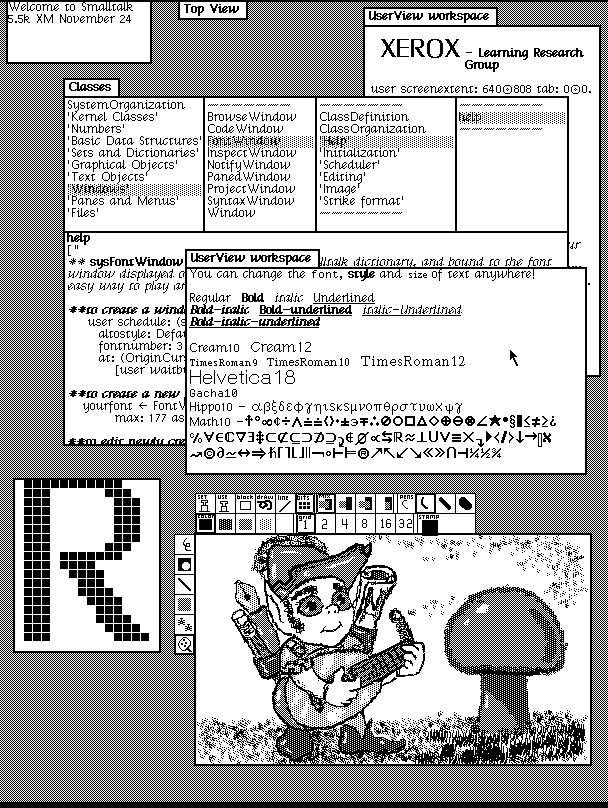

Among the projects under way at PARC when Metcalfe arrived was Taylor's plan to connect dozens, or even hundreds, of small computers in a network. Year after year the cost and size of computers fell, obeying the indomitable will of Gordon Moore. Engineers at PARC foresaw that in the not-too-distant future every office worker would have a computer of their own. With this in mind, they designed and built the Alto personal computer, copies of which were distributed to every researcher in the lab. Taylor, whose belief in the usefulness of computer networks had only grown stronger over the previous five years, also wanted to tie all these computers together.

Alto. The computer itself is located below, in a cabinet the size of a mini-fridge.

On arriving at PARC, Metcalfe took on the task of connecting the lab's PDP-10 clone to ARPANET, and quickly gained a reputation as the “network guy”. So when Taylor needed a network for the Altos, his assistants turned to Metcalfe. Like the computers on ARPANET, the Altos at PARC had virtually nothing to say to each other. So once again, the compelling application of the network became communication among people, in this case in the form of words and images printed by laser.

The key idea behind the laser printer did not originate at PARC, but on the East Coast, at Xerox's original laboratory in Webster, New York. A physicist there, Gary Starkweather, showed that a coherent laser beam could be used to neutralize the electric charge on a xerographic drum, just like the diffuse light used in photocopiers until then. Properly modulated, the beam could paint an image of arbitrary detail on the drum, which could then be transferred to paper (since only the uncharged parts of the drum pick up toner). Such a computer-controlled machine would be able to produce any combination of images and text a person could imagine, rather than merely reproducing existing documents like a photocopier. However, Starkweather's wild ideas found no support among his colleagues or superiors in Webster, so in 1971 he transferred to PARC, where he found a much more interested audience. The laser printer's ability to render arbitrary images dot by dot made it an ideal partner for the Alto workstation, with its bitmapped monochrome graphics. With a laser printer, the half a million pixels on a user's display could be printed directly onto paper with perfect clarity.

Bitmap on Alto. Nobody has seen anything like this on computer displays before.

Within about a year, with the help of a few more PARC engineers, Starkweather had solved the main technical problems and built a working prototype laser printer based on the workhorse Xerox 7000 copier. It produced pages at the same speed as the copier, one page per second, with a resolution of 500 dots per inch. A character generator built into the printer printed text in predefined fonts. Arbitrary images (other than those that could be composed from font characters) were not yet supported, so the network did not need to deliver 25 million bits per second to the printer. Even so, keeping the printer fully busy would have required network bandwidth unheard of at the time, when 50,000 bits per second was the limit of ARPANET.
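The figures above can be checked with a little arithmetic, written here as a Python sketch. It assumes a US letter page (8.5 by 11 inches, which the text does not state), one bit per dot at 500 dots per inch, and one page per second.

```python
# Back-of-the-envelope check of the printer figures, under assumptions:
# US letter page (8.5 x 11 in), one bit per dot, 500 dpi, one page per second.

PAGE_WIDTH_IN = 8.5        # assumed page size
PAGE_HEIGHT_IN = 11.0
DPI = 500                  # resolution quoted in the text
PAGES_PER_SECOND = 1       # assumed print speed
ARPANET_LINE_BPS = 50_000  # ARPANET limit quoted in the text

# A full-page bitmap: dots across times dots down, one bit each.
dots_per_page = int(PAGE_WIDTH_IN * DPI) * int(PAGE_HEIGHT_IN * DPI)
bits_per_second = dots_per_page * PAGES_PER_SECOND
seconds_per_page_on_arpanet = dots_per_page / ARPANET_LINE_BPS

print(f"{dots_per_page:,} bits per page")  # 23,375,000
print(f"{bits_per_second / 1e6:.1f} Mbit/s to keep the printer busy")
print(f"{seconds_per_page_on_arpanet:.0f} s per page over a 50 kbit/s line")
```

About 23 million bits per second is in line with the roughly 25 million quoted above, and at ARPANET speeds a single full bitmap page would take nearly eight minutes to transmit, which is why sending characters to a built-in font generator mattered so much.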

Second Generation PARC Laser Printer, Dover (1976)

Alto Aloha Network

How was Metcalfe to close this speed gap? This brings us back to ALOHAnet: as it happened, Metcalfe knew packet broadcasting better than anyone. The previous summer, while in Washington with Steve Crocker on ARPA business, Metcalfe had been studying the proceedings of the Fall Joint Computer Conference and came across Abramson's work on ALOHAnet. He immediately grasped the genius of the basic idea, and saw that its execution fell short. By changing the algorithm and some of its assumptions (for example, making senders first listen to the channel and wait for it to clear before transmitting, and exponentially increasing the retransmission interval when the channel was congested), he could achieve 90% bandwidth utilization rather than the 15% that followed from Abramson's calculations. Metcalfe took a short trip to Hawaii, where he incorporated his ideas about ALOHAnet into a revised version of his doctoral dissertation, after Harvard had rejected the original version for lacking a theoretical foundation.
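The two changes described above are known today as carrier sense and (truncated binary) exponential backoff. Here is a minimal Python sketch of both, assuming time is divided into slots; the constants and function names are illustrative assumptions, not the parameters of the 1973 PARC design, and the 90% figure depends on details this sketch does not model.

```python
import random
from typing import Callable, Optional

# Minimal sketch, assuming slotted time. Constants are illustrative only.
MAX_ATTEMPTS = 16          # give up after this many collisions
MAX_BACKOFF_EXPONENT = 10  # stop doubling the wait range after this


def backoff_slots(collisions: int, rng: random.Random) -> int:
    """After the n-th collision, wait a random 0 .. 2**min(n, cap) - 1 slots."""
    exponent = min(collisions, MAX_BACKOFF_EXPONENT)
    return rng.randrange(2 ** exponent)


def send_frame(
    channel_busy: Callable[[], bool],
    transmit: Callable[[], bool],
    rng: random.Random,
) -> Optional[int]:
    """Try to send one frame on a shared channel.

    channel_busy() reports whether another station is transmitting.
    transmit() returns True on success and False on a collision.
    Returns the number of slots spent waiting, or None if we gave up.
    """
    waited = 0
    for attempt in range(1, MAX_ATTEMPTS + 1):
        # Carrier sense: listen first and defer while the channel is in use.
        while channel_busy():
            waited += 1
        if transmit():
            return waited
        # This was collision number `attempt`: the wait range doubles each time.
        waited += backoff_slots(attempt, rng)
    return None


if __name__ == "__main__":
    rng = random.Random(1973)
    busy = iter([True, True, False])  # channel busy for two slots
    outcomes = iter([False, True])    # first try collides, second succeeds
    print(send_frame(lambda: next(busy, False), lambda: next(outcomes), rng))
```

Listening first removes collisions with transmissions already in progress, while the growing random wait spreads out stations that collided, so a busy channel sheds load instead of sliding into the collapse that plain ALOHA suffers.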

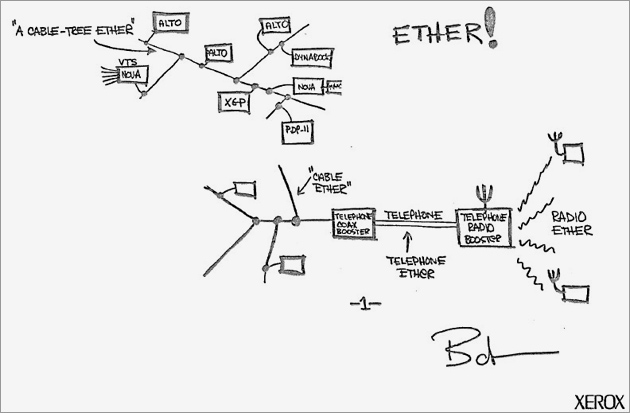

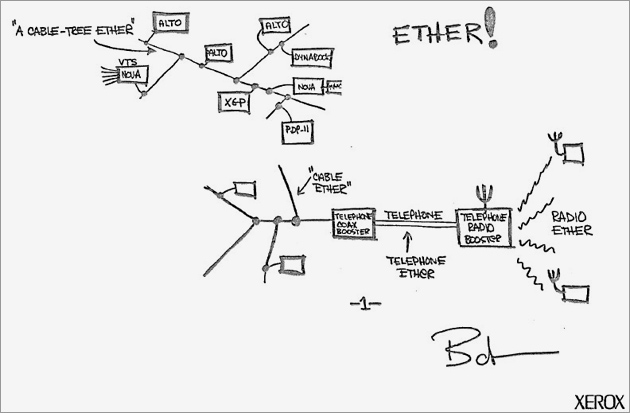

Metcalfe first described his plan for implementing packet broadcasting at PARC as the “ALTO ALOHA network.” Then, in a memorandum of May 1973, he renamed it the Ethernet, after the luminiferous ether, the 19th-century physical notion of a substance that carries electromagnetic radiation. “This will keep things general,” he wrote, “and who knows what other media will prove better than cable for a broadcast network; maybe radio or telephone circuits, or power wiring, or frequency-multiplexed CATV, or microwave environments, or even combinations thereof.”

Sketch from a 1973 Metcalfe memorandum

Starting in June 1973, Metcalfe worked with another PARC engineer, David Boggs, to turn his theoretical concept of a new high-speed network into a working system. Instead of transmitting signals through the air, as ALOHA did, they confined the radio-frequency signal to a coaxial cable, which dramatically increased the bandwidth compared with the narrow radio band available to Menehune. The transmission medium itself was completely passive and required no routers to relay messages. It was cheap, it made it easy to connect hundreds of workstations (PARC engineers simply ran coaxial cable through the building and added taps as needed), and it could carry three million bits per second.
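Why no routers are needed can be seen in a toy Python model of a passive shared cable: every frame reaches every tap, and each station simply keeps the frames addressed to it. The address filtering and the broadcast address here are assumptions added for illustration (they are how Ethernet stations behave, but the text does not describe them), and all names are hypothetical.

```python
from dataclasses import dataclass, field

BROADCAST = "*"  # hypothetical "everyone" address for this sketch


@dataclass
class Station:
    address: str
    inbox: list[str] = field(default_factory=list)

    def hear(self, dest: str, payload: str) -> None:
        # Every tap sees every frame; a station keeps only what is meant for it.
        if dest in (self.address, BROADCAST):
            self.inbox.append(payload)


@dataclass
class Cable:
    """A passive bus: no routing decisions, just delivery to every tap."""
    taps: list[Station] = field(default_factory=list)

    def attach(self, station: Station) -> None:
        self.taps.append(station)

    def send(self, dest: str, payload: str) -> None:
        for station in self.taps:
            station.hear(dest, payload)


if __name__ == "__main__":
    cable = Cable()
    alto1, alto2, printer = Station("alto1"), Station("alto2"), Station("printer")
    for s in (alto1, alto2, printer):
        cable.attach(s)          # adding a machine is just adding a tap
    cable.send("printer", "memo")
    cable.send(BROADCAST, "hello")
    print(printer.inbox, alto1.inbox)
```

Adding a workstation means attaching one more tap; nothing in the cable itself has to know about it.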

Robert Metcalfe and David Boggs, 1980s, a few years after Metcalfe founded 3Com to sell Ethernet technology

By the fall of 1974, the complete prototype of the office of the future was up and running in Palo Alto: the first batch of Alto computers, with drawing programs, email and word processors, Starkweather's prototype printer, and an Ethernet to tie it all into a network. A central file server, which stored data that would not fit on an Alto's local disk, was the only shared resource. PARC initially offered the Ethernet controller as an optional accessory for the Alto, but once the system was running it became clear that it was essential; a constant stream of messages flowed along the coax, many of them ending up at the printer as technical reports, memos, or scientific papers.

Alongside the Alto work, another PARC project tried to push the resource-sharing idea in a new direction. The PARC Online Office System (POLOS), developed and implemented by Bill English and other refugees from Doug Engelbart's oN-Line System (NLS) project at the Stanford Research Institute, consisted of a network of Data General Nova minicomputers. But instead of dedicating each machine to a particular user's needs, POLOS shifted work among them to serve the interests of the system as a whole as efficiently as possible. One machine might generate images for users' screens, another handle ARPANET traffic, and a third run word processors. But the complexity and coordination costs of this approach proved excessive, and the scheme collapsed under its own weight.

Meanwhile, nothing revealed Taylor's emotional rejection of the resource-sharing approach better than his embrace of the Alto project. Alan Kay, Butler Lampson and the other creators of the Alto put all the computing power a user might need into an independent computer on his or her own desk, which did not have to be shared with anyone. The network's function was not to provide access to a diverse set of computing resources, but to carry messages between these independent islands, or to store them on some distant shore, for printing or long-term archiving.

Although both email and ALOHA were developed under ARPA's auspices, the advent of Ethernet was one of several signs in the 1970s that computer networking had grown too large and diverse for any one organization to dominate, a trend we will follow in the next article.
