We have prepared a text version of the talk.

Good morning!

DevOpsDays is being held in Moscow for the second year in a row. I am on this stage for the second time, and many of you are in this room for the second time. What does that mean? It means that the DevOps movement in Russia is growing and multiplying. Most importantly, it means it's time to talk about what DevOps is in 2018.

Raise your hand if you think DevOps is already a profession in 2018. Some of you do. Are there DevOps engineers in the room, people who have "DevOps engineer" written in their job description? Are there DevOps managers in the hall? None. DevOps architects? None either. Not many. What, really, nobody has "DevOps engineer" as their title?

So most of you think this is an antipattern, and that there should be no such profession? We can think what we like, but meanwhile the industry is solemnly marching forward to the sound of the DevOps trumpet.

Who has heard of a new topic called DevDevOps? It is a new technique that lets developers and DevOps people cooperate effectively. Actually, it is not so new: judging by Twitter, people started talking about it four years ago. Interest in it keeps growing, which means there is a problem, and the problem must be solved.

We are creative people, so we don't rest. We say: DevOps is not a comprehensive enough word; it still lacks all sorts of interesting elements. So we go to our secret laboratories and start producing interesting mutations: DevTestOps, GitOps, DevSecOps, BizDevOps, ProdOps.

The logic is ironclad, right? Our delivery system doesn't work, our systems are unstable, users are dissatisfied, we can't ship software on time, and we can't stay within budget. How will we solve all this? We'll come up with a new word! It will end in Ops, and the problem is solved.

I call this approach "Ops, and the problem is solved."

All of this fades into the background once we remind ourselves why we came up with it in the first place. We invented DevOps to make software delivery, and our own work in that process, as unobstructed, painless, and effective as possible. Most importantly, we wanted it to be enjoyable.

DevOps grew out of pain, and we are tired of suffering. To stop suffering, we rely on evergreen practices: effective collaboration, flow practices, and, most importantly, systems thinking, because without it no DevOps works.

What is a system?

Since we are talking about systems thinking, let's remind ourselves what a system is.

If you are a revolutionary hacker, then the system is clearly evil to you. It is a cloud that hangs over you and makes you do what you don't want to do.

From the point of view of systems thinking, a system is a whole that consists of parts. In this sense, each of us is a system. The organizations we work for are systems. And what we build is also called a system.

All of this is part of one large sociotechnical system. Only if we understand how this sociotechnical system works as a whole can we really optimize anything in it.

From the point of view of systems thinking, a system has various interesting properties. First, it consists of parts, which means its behavior depends on the behavior of those parts. Moreover, all its parts are interdependent. It follows that the more parts a system has, the harder it is to understand or predict its behavior.

There is another interesting fact about behavior: a system can do something that none of its individual parts can do.

As Dr. Russell Ackoff, one of the founders of systems thinking, said, this is easy to prove with a thought experiment. Who in the audience can write code? Lots of hands, which is normal, since it is one of the basic requirements of our profession. You can write code, but can your hands write code separately from you? Some people say: "My hands don't write code, my brain writes code." Can the brain write code separately from you? Well, most likely not.

The brain is an amazing machine; we don't understand even 10% of how it works. But it cannot function separately from the system that is our body. This is easy to prove: open your skull, take your brain out, put it in front of the computer, and let it try to write something simple. "Hello, world" in Python, for example.

If a system can do something that none of its parts can do individually, then its behavior is not determined by the behavior of its parts. What is it determined by, then? By the interactions between those parts. Accordingly, the more parts there are, the more complicated the interactions become, and the harder it is to understand and predict the system's behavior. This makes such a system chaotic, because any change in any part of it, even the smallest one invisible to the eye, can lead to completely unpredictable results.
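To make "sensitivity to initial conditions" concrete, here is a minimal sketch. It uses the logistic map, a standard textbook example of chaos, rather than Lorenz's own weather equations. The parameter r = 3.9 and the starting values are arbitrary illustrative choices.

```python
def logistic_trajectory(x0: float, r: float = 3.9, steps: int = 200) -> list[float]:
    """Iterate the logistic map x -> r * x * (1 - x) and return every state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two starting points that differ by one part in ten billion.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

diffs = [abs(x - y) for x, y in zip(a, b)]
print(f"initial difference: {diffs[0]:.1e}")
print(f"largest difference over 200 steps: {max(diffs):.3f}")
```

The two trajectories start out indistinguishable. After a few dozen iterations the tiny initial gap has grown to the size of the values themselves, and the two runs no longer resemble each other.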

This sensitivity to initial conditions was first discovered and investigated by the American meteorologist Edward Lorenz. It later became known as the "butterfly effect" and gave rise to a school of scientific thought called "chaos theory". This theory became one of the major paradigm shifts in 20th-century science.

Chaos theory

People who study chaos call themselves chaosologists.

In fact, this talk came about because, while working with complex distributed systems and large international organizations, I realized at some point how I feel: I am a chaosologist. That is, in general, an ingenious way of saying: "I don't understand what is happening here and I don't know what to do about it."

I think many of you often feel the same way, so you are chaosologists too. I invite you to join the guild of chaosologists. The systems that we, dear fellow chaosologists, will study are called "complex adaptive systems."

What is adaptability? It means that the individual and collective behavior of the parts of an adaptive system changes and self-organizes in response to events, or chains of micro-events, in the system. In other words, the system adapts to change through self-organization. This ability to self-organize rests on the voluntary, completely decentralized cooperation of free, autonomous agents.

Another interesting property of such systems is that they scale freely, which should undoubtedly interest us as chaosologist-engineers. So, if the behavior of a complex system is determined by the interactions of its parts, what should we focus on? Interaction.

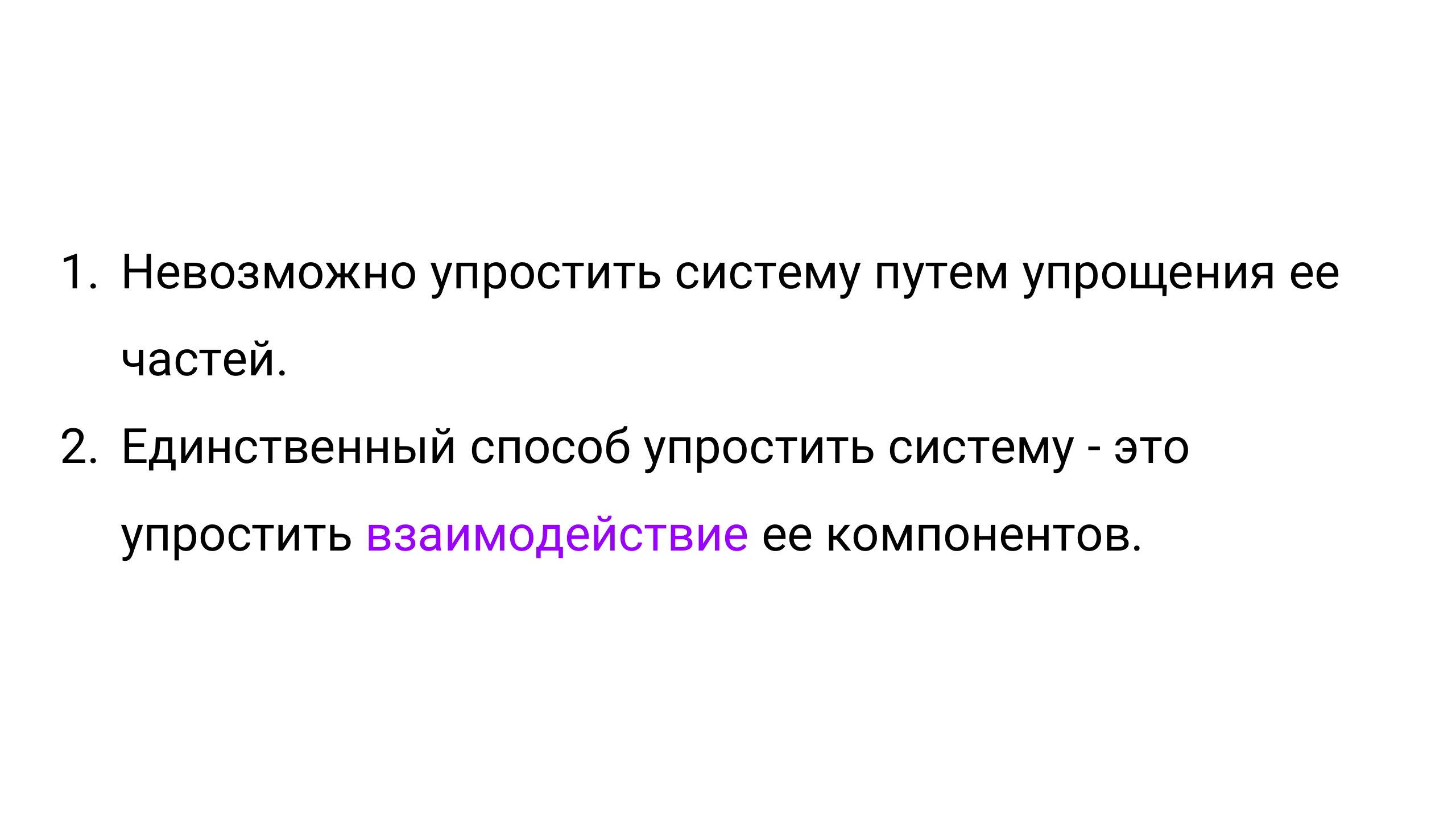

Two more interesting conclusions follow.

First, a complex system cannot be simplified by simplifying its parts. Second, the only way to simplify a complex system is to simplify the interactions between its parts.

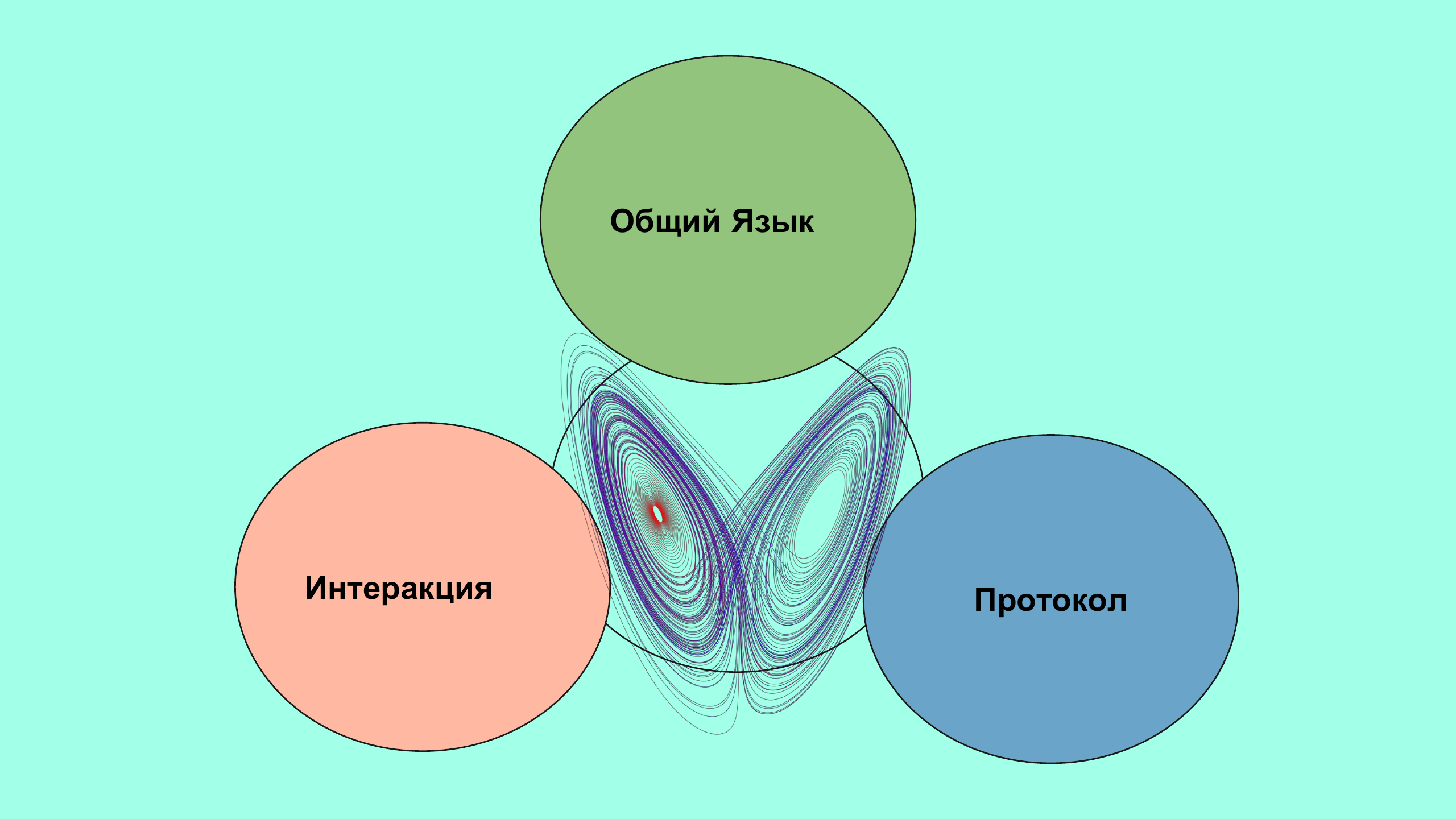

How do we interact? We are all part of a large information system called human society. We interact through a common language, if we have one, if we can find it.

But language itself is a complex adaptive system. So, to interact more efficiently and simply, we need to create protocols: sequences of symbols and actions that make the exchange of information between us simpler, more predictable, and easier to understand.

I want to point out that the tendencies toward complexity, adaptability, decentralization, and randomness can be traced in everything: both in the systems we build and in the systems we are part of.

To back this up, let's look at how the systems we create are changing.

Microservices. You've been waiting for this word, I know. We are at a DevOps conference; today this word will be said about a hundred thousand times, and then we'll dream about it at night.

Microservices are the first software architecture to emerge as a reaction to DevOps practices. They are designed to make our systems more flexible and scalable and to enable continuous delivery. How do they do this? By shrinking services, narrowing the scope of the problems each service handles, and shortening delivery time. In other words, we shrink and simplify the parts of the system and increase their number. As a result, the complexity of the interactions between those parts keeps growing, and new problems arise that we have to solve.
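Here is a back-of-the-envelope way to see why splitting a system into more parts makes the interactions more complex. If any of n services may in principle talk to any other, the number of possible pairwise links is n(n - 1)/2, which grows quadratically. The sketch counts potential links only, which is an assumption for illustration; real call graphs are usually sparser.

```python
def potential_links(n: int) -> int:
    """Number of distinct unordered pairs among n services: n * (n - 1) / 2."""
    return n * (n - 1) // 2


for n in (1, 5, 20, 100):
    print(f"{n:>3} services -> {potential_links(n):>4} potential pairwise links")
```

Going from 5 to 100 services multiplies the number of parts by 20 but the number of possible interactions by 495 (from 10 to 4950).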

Microservices are not the end; in fact, microservices are already yesterday's news, because Serverless is coming. All the servers have burned out: no servers, no operating systems, only clean executable code. Configuration is separate, state is separate, and everything is event-driven. Beauty, purity, silence: no events, nothing happens, complete order.

Where is the complexity? In the interactions, of course. How much can one function do on its own? How does it interact with other functions? Message queues, databases, load balancers. How do you replay an event when a failure occurs? Lots of questions and few answers.

Microservices and Serverless are all part of what we computer hipsters call Cloud Native. It's all about the cloud. The cloud is scalable, and we are used to thinking of it as a distributed system. But where do a cloud provider's servers actually live? In data centers. So what we have here is a centralized and very limited distributed model.

Today we understand that the Internet of Things is no longer just big words. Even by modest estimates, billions of Internet-connected devices await us in the next five to ten years. A huge amount of useful and useless data will pour into the cloud and flood back out of it.

The cloud won't cope, so we increasingly talk about what is called "edge computing." I also like the wonderful term "fog computing": it is wrapped in mysticism, romanticism, and mystery.

The point is that clouds are centralized clumps of water, vapor, ice, and hail, while fog is water droplets scattered around us in the atmosphere.

In the fog paradigm, these droplets do most of the work, either completely autonomously or in collaboration with other droplets. They turn to the cloud only when they really have to.

So again: decentralization and autonomy. As many of you have already guessed, you can't talk about decentralization without mentioning blockchain.

Some people believe in it and have invested in cryptocurrency. Some believe but are afraid, like me, for example. And some don't believe at all. You can feel differently about it. There is a technology, a new thing that is poorly understood, and there are problems. Like any new technology, it raises more questions than answers.

The hype around blockchain is understandable. Even setting the gold rush aside, the technology itself makes wonderful promises of a bright future: more freedom, more autonomy, distributed global trust. What's not to want?

Accordingly, more and more engineers around the world are starting to develop decentralized applications. This force cannot be dismissed by simply saying: "Ah, blockchain is just a poorly implemented distributed database." Nor can it be dismissed, as skeptics like to do, with "There are no real uses for blockchain." If you think about it, 150 years ago people said the same thing about electricity. In some ways they were even right, because what electricity makes possible today was completely unrealistic in the 19th century.

By the way, who knows what logo is on the screen? It is Hyperledger, a project developed under the auspices of the Linux Foundation that includes a set of blockchain technologies. This really shows the power of our open source community.

Chaos Engineering

So the systems we develop are becoming more complicated, more chaotic, and more adaptive. Netflix, pioneers of microservice systems, were among the first to understand this. They developed a toolkit called the Simian Army, whose most famous member is Chaos Monkey. They also defined what became known as the "principles of chaos engineering."

By the way, while working on this talk, we translated that text into Russian, so follow the link to read, comment, and criticize.

In brief, the principles of chaos engineering say the following. Complex distributed systems are inherently unpredictable, and they inherently contain errors. Errors are inevitable, which means we need to accept them and work with these systems in a completely different way.

We must deliberately introduce these errors into our production systems ourselves. That is how we test them for exactly this adaptability: the ability to self-organize and survive.

That changes everything: not only how we run systems in production, but also how we develop and test them. There is no stabilization phase and no code freeze. On the contrary, there is a constant process of destabilization. We try to kill the system and verify that it keeps surviving.
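The experiment loop described above can be illustrated with a small in-process simulation. A steady-state hypothesis is stated, faults are injected into a dependency, and the hypothesis is checked while the faults are active. This is only a sketch of the idea, not how Chaos Monkey works (Chaos Monkey terminates real instances). All names here (`fetch_recommendations`, `homepage`, the 30% failure rate) are hypothetical.

```python
import random


class InjectedFault(Exception):
    """Raised by the fault injector to simulate a failing dependency."""


def flaky(func, failure_rate: float, rng: random.Random):
    """Wrap func so that roughly failure_rate of its calls raise InjectedFault."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault(f"injected failure in {func.__name__}")
        return func(*args, **kwargs)
    return wrapper


def fetch_recommendations(user_id: int) -> list[str]:
    """Hypothetical downstream service."""
    return [f"title-{user_id}-{i}" for i in range(3)]


FALLBACK = ["popular-1", "popular-2", "popular-3"]


def homepage(user_id: int, fetch) -> list[str]:
    """Hypothetical system under test: degrades to a static list instead of failing."""
    try:
        return fetch(user_id)
    except InjectedFault:
        return FALLBACK


def run_experiment(failure_rate: float, requests: int = 1000, seed: int = 42) -> tuple[float, float]:
    """Return (share of requests served, share served from the fallback)."""
    rng = random.Random(seed)
    fetch = flaky(fetch_recommendations, failure_rate, rng)
    pages = [homepage(uid, fetch) for uid in range(requests)]
    served = sum(1 for page in pages if page) / requests
    degraded = sum(1 for page in pages if page is FALLBACK) / requests
    return served, degraded


# Steady-state hypothesis: every request still gets a homepage
# while 30% of calls to the recommendation service fail.
served, degraded = run_experiment(failure_rate=0.3)
print(f"served: {served:.0%}, degraded: {degraded:.0%}")
```

If the fallback were removed from `homepage`, the experiment would crash on the first injected fault and disprove the hypothesis. That is exactly the kind of weakness such experiments are meant to surface.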

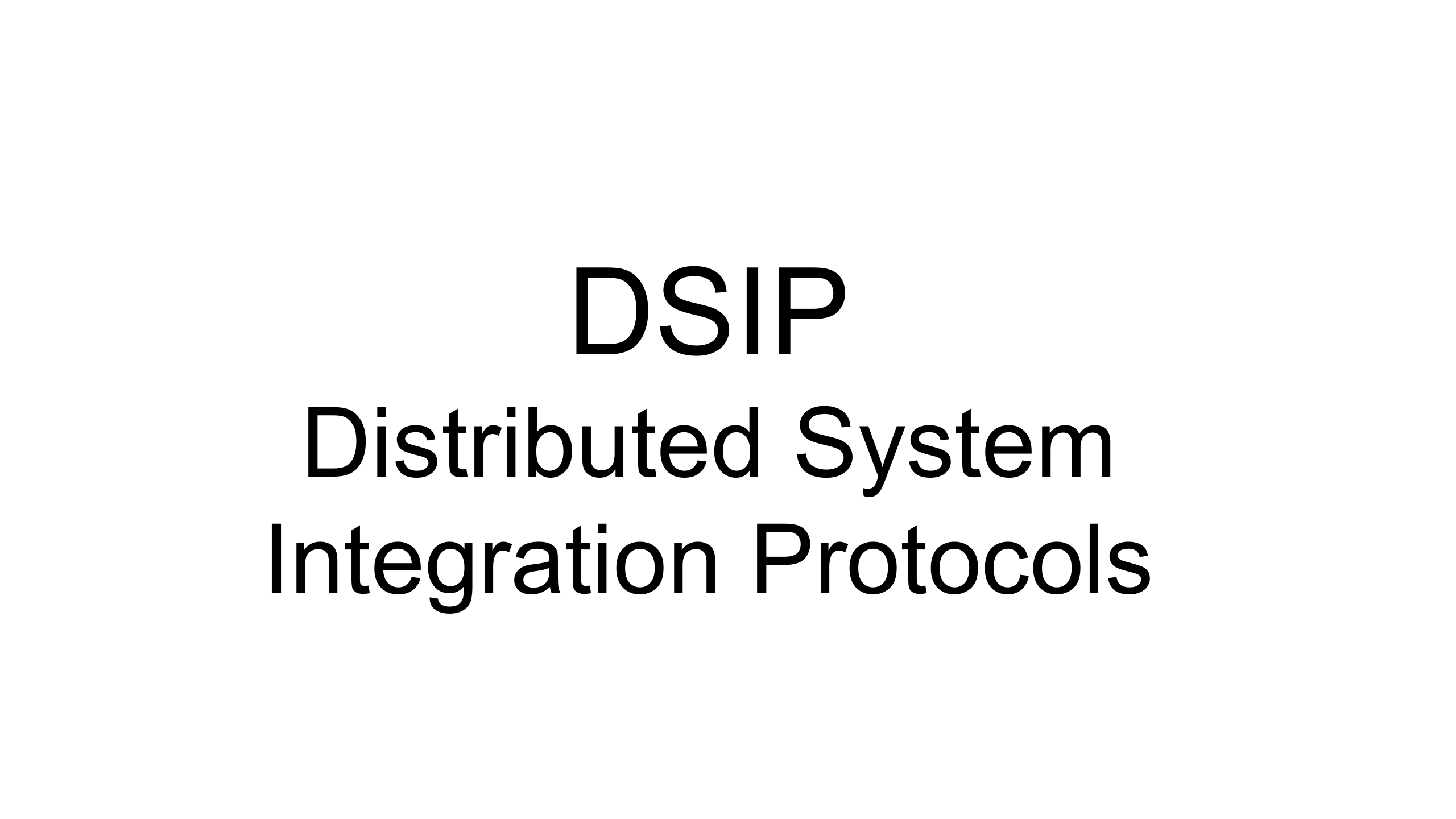

Distributed System Interaction Protocols

This in turn requires our systems to change. To become more resilient, they need new protocols of interaction between their parts, so that those parts can negotiate and arrive at some kind of self-organization. There are all sorts of new tools and protocols for this, which I call "protocols for the interaction of distributed systems."

What am I talking about? First, the OpenTracing project. It is an attempt to create a common protocol for distributed tracing, an absolutely indispensable tool for debugging complex distributed systems.
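The core idea behind distributed tracing can be sketched without any library. Every request gets a trace ID, every unit of work gets its own span ID, and each span records its parent, so the spans can later be stitched back into a tree. The `Span` class, its fields, and the service names below are hypothetical illustrations, not the OpenTracing API.

```python
import uuid
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Span:
    name: str
    trace_id: str
    span_id: str = field(default_factory=lambda: uuid.uuid4().hex[:16])
    parent_id: Optional[str] = None

    def child(self, name: str) -> "Span":
        """Start a child span: same trace, with this span as its parent."""
        return Span(name=name, trace_id=self.trace_id, parent_id=self.span_id)


def start_trace(name: str) -> Span:
    """Start a root span with a fresh trace ID and no parent."""
    return Span(name=name, trace_id=uuid.uuid4().hex)


# A hypothetical request enters the gateway and fans out to two services.
collected: list[Span] = []
root = start_trace("GET /checkout")
collected.append(root)
for service in ("cart", "payments"):
    collected.append(root.child(service))
collected.append(collected[-1].child("payments-db"))  # called by "payments"


def print_tree(spans: list[Span], parent_id: Optional[str] = None, depth: int = 0) -> None:
    """Rebuild the call tree from the flat list of spans using parent links."""
    for s in spans:
        if s.parent_id == parent_id:
            print("  " * depth + s.name)
            print_tree(spans, s.span_id, depth + 1)


print_tree(collected)
```

In a real system the trace and parent IDs travel between processes, typically in request headers. Agreeing on how that context is propagated is what a shared tracing protocol standardizes.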

Next is the Open Policy Agent. We said that we cannot predict what will happen to a system, which means we need to increase its observability. OpenTracing is a family of tools that gives our systems observability. But we need observability in order to determine whether the system behaves as we expect. How do we define the expected behavior? By defining a policy for it, a set of rules. The Open Policy Agent project lets us define such rules across a wide range of concerns, from access control to resource allocation.
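OPA itself expresses policies in its own language, Rego. The Python sketch below only mimics the underlying idea of policy as code: a decision is computed from a structured input document by a set of rules, and the default is deny. The rules, roles, and field names (`roles`, `action`, `replicas`) are hypothetical.

```python
from typing import Callable

Input = dict
Rule = Callable[[Input], bool]

# Hypothetical rules: each returns True if it allows the request.
RULES: list[Rule] = [
    # Access: admins may do anything.
    lambda inp: "admin" in inp["user"]["roles"],
    # Access: anyone may read.
    lambda inp: inp["action"] == "read",
    # Resource allocation: developers may deploy, but with at most 8 replicas.
    lambda inp: (
        "developer" in inp["user"]["roles"]
        and inp["action"] == "deploy"
        and inp.get("replicas", 0) <= 8
    ),
]


def allow(inp: Input) -> bool:
    """Default deny: the request is allowed only if at least one rule matches."""
    return any(rule(inp) for rule in RULES)


print(allow({"user": {"roles": ["developer"]}, "action": "deploy", "replicas": 4}))   # True
print(allow({"user": {"roles": ["developer"]}, "action": "deploy", "replicas": 20}))  # False
```

Keeping the rules as data, separate from the services that ask for decisions, is what lets the expected behavior be changed and audited in one place.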

As we said, our systems are increasingly event-driven, and Serverless is a great example. To pass events between systems and track them, we need a common language: a common protocol for describing events and transmitting them to each other. That project is called CloudEvents.
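As an illustration of what a common event envelope buys us, here is a sketch that builds an event as a plain dictionary using the context attribute names from the CloudEvents 1.0 specification. It does not use the official CloudEvents SDK. The specification requires `id`, `source`, `specversion`, and `type`; `time` and `datacontenttype` are optional. The event type, source, and payload are hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone

REQUIRED = ("id", "source", "specversion", "type")


def make_event(event_type: str, source: str, data: dict) -> dict:
    """Build an event using CloudEvents 1.0 context attribute names."""
    return {
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": source,
        "type": event_type,
        "time": datetime.now(timezone.utc).isoformat(),
        "datacontenttype": "application/json",
        "data": data,
    }


def validate(event: dict) -> list[str]:
    """Return the names of missing required attributes (an empty list means valid)."""
    return [name for name in REQUIRED if not event.get(name)]


event = make_event("com.example.order.created", "/shop/orders", {"order_id": 42})
print(json.dumps(event, indent=2))
print("missing:", validate(event))
```

Because every producer fills in the same envelope, a consumer can route, log, or replay events by `type` and `source` without knowing anything about the payload inside `data`.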

The continuous stream of changes that washes over our systems, constantly destabilizing them, is a continuous stream of software artifacts. To sustain this constant flow of changes, we need a common protocol for describing what a software artifact is, how it is verified, and which checks it has passed. That project is called Grafeas: a common protocol for software artifact metadata.
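Grafeas defines its own resource model, which this sketch does not reproduce. It only illustrates the underlying idea: metadata is keyed by an immutable content digest of the artifact, so records of builds, scans, and tests stay attached to exactly those bytes. The in-memory store, the check names, and the deployment gate are hypothetical.

```python
import hashlib
from collections import defaultdict

# Hypothetical metadata store: artifact digest -> set of checks it has passed.
metadata: dict[str, set[str]] = defaultdict(set)


def digest(artifact: bytes) -> str:
    """Identify an artifact by the SHA-256 of its contents."""
    return "sha256:" + hashlib.sha256(artifact).hexdigest()


def record(artifact: bytes, check: str) -> None:
    """Record that this exact artifact passed a check."""
    metadata[digest(artifact)].add(check)


def can_deploy(artifact: bytes, required: set[str]) -> bool:
    """Gate: deploy only if every required check was recorded for these exact bytes."""
    return required <= metadata[digest(artifact)]


build = b"app binary v1"
record(build, "unit-tests")
record(build, "vulnerability-scan")

required = {"unit-tests", "vulnerability-scan"}
print(can_deploy(build, required))                       # True: both checks recorded
print(can_deploy(b"app binary v1 (patched)", required))  # False: different bytes, no history
```

Keying on the digest rather than on a mutable name or tag means that any change to the artifact, however small, leaves its verification history behind.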

Finally, if we want our systems to be fully independent, adaptive, and self-organizing, we must give them the ability to identify themselves. A project called SPIFFE does exactly that. It is also a Cloud Native Computing Foundation project.
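SPIFFE identifies a workload with a URI of the form `spiffe://<trust-domain>/<path>`. Below is a minimal parser that checks only a few of the specification's rules: the scheme, a non-empty trust domain without a port or user info, and the absence of a query or fragment. It is not a complete validator, and the example IDs are hypothetical.

```python
from typing import NamedTuple
from urllib.parse import urlparse


class SpiffeId(NamedTuple):
    trust_domain: str
    path: str


def parse_spiffe_id(uri: str) -> SpiffeId:
    """Split a SPIFFE ID into trust domain and workload path (partial validation only)."""
    parsed = urlparse(uri)
    if parsed.scheme != "spiffe":
        raise ValueError(f"not a SPIFFE ID (scheme {parsed.scheme!r}): {uri}")
    if not parsed.netloc:
        raise ValueError(f"missing trust domain: {uri}")
    if "@" in parsed.netloc or ":" in parsed.netloc:
        raise ValueError(f"trust domain must not contain user info or a port: {uri}")
    if parsed.query or parsed.fragment:
        raise ValueError(f"query and fragment are not allowed: {uri}")
    return SpiffeId(trust_domain=parsed.netloc, path=parsed.path)


print(parse_spiffe_id("spiffe://example.org/payments/api"))
```

The trust domain tells a peer which authority vouches for the workload, and the path names the workload within that domain. This lets services authenticate each other without a central directory of hostnames or IP addresses.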

All these projects are young, and they all need our love and our testing. It's all open source, so it depends on us to test it and adopt it. These projects show us which way technology is moving.

But DevOps was never primarily about technology; it was always, first and foremost, about collaboration between people. So if we want the systems we build to change, we must change ourselves. In fact, we are already changing, because we have no real choice.

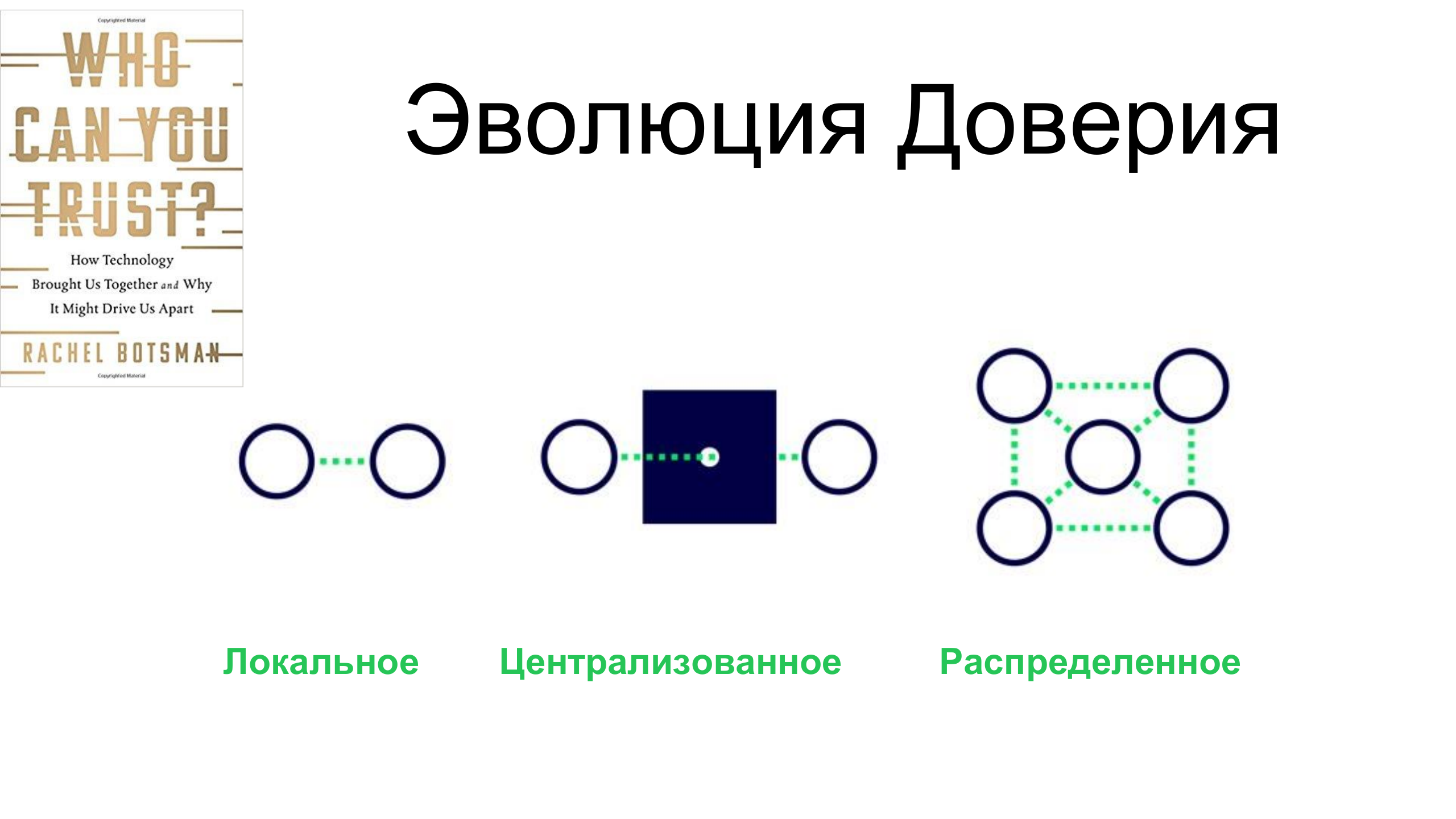

There is a wonderful book by the British writer Rachel Botsman about the evolution of trust throughout human history. She says that in primitive societies trust was originally local: we trusted only the people we knew personally.

Then came a very long period, a dark time, when trust was centralized. We began to trust people we did not know because we belonged to the same public or state institution.

And here is what we see in the modern world: trust is becoming more and more distributed and decentralized, and it rests on the free flow and availability of information.

If you think about it, we are the ones building the very accessibility that makes this trust possible. This means that both what we do and how we work must change, because old-style centralized, hierarchical IT organizations stop working. They begin to die.

Basics of DevOps Organization

The ideal DevOps organization of the future is a decentralized, adaptive system made up of autonomous teams, each consisting of autonomous individuals. These teams are scattered around the world and cooperate effectively through asynchronous communication and highly transparent protocols for exchanging information. Very beautiful, right? A very beautiful future.

Of course, none of this is possible without cultural change. We need transformational leadership, personal responsibility, and intrinsic motivation.

This is the basis of DevOps organizations: information transparency, asynchronous communications, transformational leadership, decentralization.

Burnout

The systems we are part of, and the ones we build, are becoming more and more chaotic. It is hard for us humans to accept this and give up the illusion of control. We keep trying to control them, and this often leads to burnout. I speak from my own experience: I burned out too, and I am a veteran scarred by unforeseen production failures.

Burnout happens when we try to control something that, in essence, cannot be controlled. When we burn out, everything loses its meaning: we lose the desire to do anything new, take a defensive position, and start protecting what already exists.

Engineering, as I often like to remind myself, is first and foremost a creative profession. If we lose the desire to create, we turn to ashes. People burn out, and whole organizations burn out.

In my opinion, only accepting the creative power of chaos and building our cooperation on its principles can help us keep what is good in our profession.

What I wish for you is to love your work and to love what we do. This world feeds on information, and we have the honor of feeding it. So let's study chaos, be chaosologists, bring value, and create something new. As we have already established, problems are inevitable. When they appear, we'll just say "Ops!", and the problem is solved.

What else is there besides Chaos Monkey?

In fact, all these tools are very young. Netflix built its tools for itself, so build tools for yourself. Read the principles of chaos engineering and follow them, rather than looking for tools that someone else has already built.

Try to understand how your systems break, start breaking them, and watch how they withstand the impact. That comes first. After that, you can search for tools; there are all kinds of projects.

I didn't quite follow the point where you said that a system cannot be simplified by simplifying its components. You then moved straight on to microservices, which simplify the system precisely by simplifying the components while complicating the interactions. These two points seem to contradict each other.

That's right, microservices are a very controversial topic in general. Simplifying the parts does increase flexibility. What do microservices give us? Flexibility and speed, but they certainly do not give us simplicity. They increase complexity.

So, in the DevOps philosophy, microservices aren't such a blessing?

Every good thing has a flip side. There is a benefit: they increase flexibility and let us make changes faster. But they also increase the complexity and, accordingly, the fragility of the whole system.

Still, where is the emphasis: on simplifying interactions or on simplifying parts?

The emphasis is undoubtedly on simplifying interactions. If we look at how you and I work, we should first pay attention to simplifying our interactions, not to simplifying each person's individual work, because simplifying individual work means turning people into robots. At McDonald's it works fine when everything is prescribed for you: put the burger here, pour the sauce on it there. In our creative work, that doesn't work at all.

Isn't it true that everything you've described lives in a world without competition? The chaos there is so kind, there are no contradictions within it, and nobody wants to eat or kill anyone. How should competition and DevOps coexist?

Well, it depends on what kind of competition we're talking about: competition in the workplace or competition between companies?

Competition between existing services, because services are not just a few companies. We are creating a new kind of information environment, and no environment can live without competition. There is competition everywhere.

Take Netflix, which we hold up as a role model. Why did they come up with this? Because they needed to be competitive. The need for flexibility and speed is precisely the competitive requirement that introduces randomness into our systems. So chaos is not something we create deliberately because we want it; it happens because the world demands it. We just have to adapt. Chaos is simply the result of competition.

Does this mean that chaos is a lack of goals? Or goals that we don't want to see? We're inside our own house and don't understand other people's goals. Competition actually exists because we have clear goals and know where we will be at every next moment. That, from my point of view, is the essence of DevOps.

That's one way to look at it. I think we all have one goal: to survive, and to do it with the greatest pleasure. The competitive goal of any organization is the same. Survival often involves competition; there's nothing to be done about that.

This year, the DevOpsDays Moscow conference will be held on December 7 at Technopolis. We are accepting talk proposals until November 11. Email us if you want to speak.

Registration for participants is open; a ticket costs 7,000 rubles. Join now!