Python SAX parser vs Python DOM parser: parsing FIAS houses

In a previous article, we looked at building CSV from XML based on the data published by FIAS. The parsing relied on a DOM parser, which loads the entire file into memory before processing it, so large files had to be split because of limited RAM. This time we look at how well the SAX parser performs and compare its speed with that of the DOM parser. The test subject is the largest file of the FIAS database, houses, 27.5 GB in size.

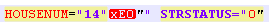

We were forced to immediately upset the most respected audience - immediately fail to feed the SAX parser the FIAS houses database file. The parser crashes with the error "not well-formed (invalid token)". And initially there were suspicions that the database file was broken. However, after cutting the database into several small parts, it was found that the departures were caused by a changed coding for house and / or building numbers. That is, the STRUCNUM or HOUSENUM tags came across houses with a letter written in a strange encoding (not UTF-8 and not ANSI, in which the document itself was formed):

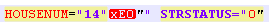

At the same time, if this encoding is corrected by running the file through the remove_non_ascii function, the record takes the form:

Such a file was also not absorbed by the parser, due to extra characters.

I had to remember regular expressions and clean the file before loading it into the parser.

Question: why it is impossible to create a normal database, which is laid out for work, acquires a rhetorical shade.

To align the starting capabilities of the parsers, we clear the test fragment from the above inconsistencies.

Code to clean the database file before loading it into the parser:

The code translates the non_ascii characters to normal in the xml file and then removes the unnecessary "" in the names of buildings and houses.

To start, take a small xml file (58.8 MB), we plan to get txt or csv at the output, convenient for further processing in pandas or excel.

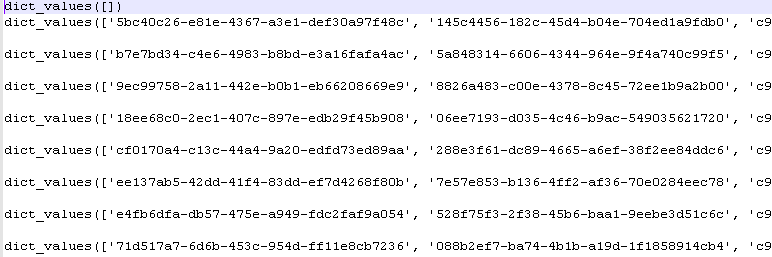

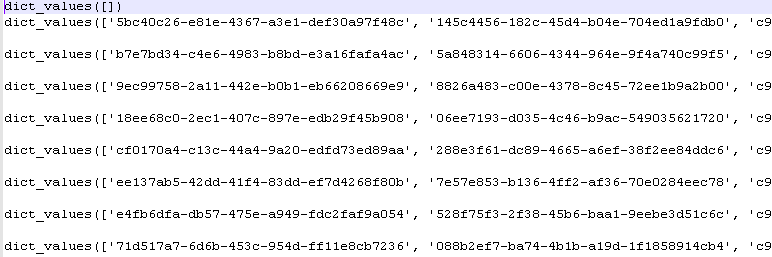

After executing the program, we get the values of the python dictionary:

Lead time: 5-6 sec.

We process the same file by first loading it in its entirety into memory. This is exactly the method the DOM parser uses.

Lead time 2-3 sec.

Winning a DOM parser?

Small files do not fully reflect reality. Take a larger file 353 MB (after cleaning, as mentioned above).

Shoulder results:

SAX parser: 0: 00: 32.090836 - 32 sec

DOM parser: 0: 00: 16.630951 - 16 sec

The difference is 2 times in speed. However, this does not detract from the main advantage of the SAX parser - the ability to process large files without first loading them into memory.

It remains to be regretted that this advantage is not applicable to the FIAS database, since preliminary work with encodings is required.

File for pre-cleaning encodings:

- 353 MB in the archive .

Purified DB file for parser tests:

- 353 MB in the archive .

Introduction

We were forced to immediately upset the most respected audience - immediately fail to feed the SAX parser the FIAS houses database file. The parser crashes with the error "not well-formed (invalid token)". And initially there were suspicions that the database file was broken. However, after cutting the database into several small parts, it was found that the departures were caused by a changed coding for house and / or building numbers. That is, the STRUCNUM or HOUSENUM tags came across houses with a letter written in a strange encoding (not UTF-8 and not ANSI, in which the document itself was formed):
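As an alternative to splitting the file by hand, the position of the offending token can be read from the exception that the SAX parser raises. The following is a minimal sketch, not the method used in this article: `find_parse_error` is a hypothetical helper, and the broken sample record is made up for illustration.

```python
import io
import xml.sax


def find_parse_error(source):
    """Return (line, column, message) of the first XML error, or None if the input is well-formed."""
    try:
        # A bare ContentHandler ignores all events; we only care about errors
        xml.sax.parse(source, xml.sax.ContentHandler())
    except xml.sax.SAXParseException as exc:
        return exc.getLineNumber(), exc.getColumnNumber(), exc.getMessage()
    return None


# Made-up sample with a stray quotation mark inside an attribute value
broken = io.BytesIO(b'<Houses>\n<House HOUSENUM="12"A"/>\n</Houses>')
error = find_parse_error(broken)
print(error)
```

For a real file, a file name can be passed instead of the in-memory stream. The reported line is only helpful if the file actually contains line breaks.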

Even after the encoding is corrected by running the file through the remove_non_ascii function, the record still contains extra characters, so the parser rejects such a file as well.

I had to brush up on regular expressions and clean the file before loading it into the parser.

The question of why a database that is published for practical use cannot be produced in a clean state becomes rhetorical.

To put both parsers on an equal footing, we clean the test fragment of the inconsistencies described above.

Code to clean the database file before loading it into the parser:

The code

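    from datetime import datetime
    import re

    from unidecode import unidecode

    start = datetime.now()


    def remove_non_ascii(text):
        # Transliterate every non-ASCII character to its closest ASCII equivalent
        return unidecode(text)


    # 'ANSI' is an alias of the 'mbcs' codec and is available on Windows only.
    # The output file is opened once, so every cleaned line ends up in it.
    with open('AS_HOUSE.462.xml', 'r', encoding='ANSI') as f, \
            open('out.xml', 'w', encoding='ANSI') as f1:
        for line in f:
            b = remove_non_ascii(line)
            # Look for house/building numbers followed by a letter in quotes
            for c in re.finditer(r'\w{5}NUM="\d{1,}\"\w\"', b):
                print(c[0])
                # Drop the quote before the letter and the quote after it
                c1 = c[0][:-3] + c[0][-2]
                print(c1)
                b = b.replace(c[0], c1)
            f1.write(b)

    print(datetime.now() - start)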

The code transliterates the non-ASCII characters in the XML file to ASCII and then removes the extra quotation marks from the building and house numbers.
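To make the effect of the regular expression easier to see, here is a small sketch that applies the same pattern with `re.sub` to a single record. The sample record is made up: it assumes that, after transliteration, the letter ends up wrapped in an extra pair of quotation marks, which is how the pattern and the slicing in the cleaning code read. `strip_stray_quotes` is a hypothetical name.

```python
import re

# The article's pattern with two capture groups added, so that re.sub can
# drop the quote before the letter and the quote after it in one pass.
STRAY_QUOTES = re.compile(r'(\w{5}NUM="\d+)"(\w)"')


def strip_stray_quotes(text):
    """Remove the two extra quotation marks around a letter in a house/building number."""
    return STRAY_QUOTES.sub(r'\1\2', text)


# Made-up record in the assumed post-transliteration form
sample = '<House HOUSENUM="12"A"" STRUCNUM="3"/>'
cleaned = strip_stray_quotes(sample)
print(cleaned)  # <House HOUSENUM="12A" STRUCNUM="3"/>
```

Attributes that are already well-formed, such as STRUCNUM in the sample, do not match the pattern and are left untouched.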

SAX parser

To start, take a small xml file (58.8 MB), we plan to get txt or csv at the output, convenient for further processing in pandas or excel.

The code

    import xml.sax
    from datetime import datetime

    start = datetime.now()


    class EventHandler(xml.sax.ContentHandler):
        """Forward every SAX event to a coroutine."""

        def __init__(self, target):
            super().__init__()
            self.target = target

        def startElement(self, name, attrs):
            # Send the attribute values of the element that has just been opened
            self.target.send(attrs.values())

        def characters(self, text):
            self.target.send('')

        def endElement(self, name):
            self.target.send('')


    def coroutine(func):
        """Decorator that advances a generator to its first yield."""
        def primed(*args, **kwargs):
            cr = func(*args, **kwargs)
            next(cr)
            return cr
        return primed


    if __name__ == '__main__':
        with open('out.csv', 'a') as f:

            @coroutine
            def printer():
                # Write every received event to the output file, one per line
                while True:
                    event = (yield)
                    print(event, file=f)

            xml.sax.parse("out.xml", EventHandler(printer()))

        print(datetime.now() - start)

After running the program, the output file contains the attribute values of each element, that is, the values of the Python dictionary of attributes.

Execution time: 5-6 sec.
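If the goal is a file that pandas or Excel can open directly, the handler can also write proper CSV rows with a fixed column order. The following is a rough sketch rather than the code that was timed above: `HouseToCsv` and `houses_to_csv` are hypothetical names, the column list is a subset of the attributes used in the DOM example of this article, and the sample data is made up.

```python
import csv
import io
import xml.sax

# Subset of the attribute names used in the article's DOM example
COLUMNS = ['HOUSEID', 'HOUSEGUID', 'AOGUID', 'HOUSENUM', 'STRUCNUM', 'POSTALCODE']


class HouseToCsv(xml.sax.ContentHandler):
    """Write one CSV row per <House> element as the parser streams past it."""

    def __init__(self, writer, columns):
        super().__init__()
        self.writer = writer
        self.columns = columns
        self.rows = 0

    def startElement(self, name, attrs):
        if name == 'House':
            # Missing attributes become empty cells
            self.writer.writerow([attrs.get(col, '') for col in self.columns])
            self.rows += 1


def houses_to_csv(source, out, columns=COLUMNS):
    """Parse `source` (file name or binary stream) and write CSV to `out`; return the row count."""
    writer = csv.writer(out)
    writer.writerow(columns)  # header line
    handler = HouseToCsv(writer, columns)
    xml.sax.parse(source, handler)
    return handler.rows


# Made-up sample data
sample = io.BytesIO(
    b'<Houses>'
    b'<House HOUSEID="1" HOUSENUM="12A" POSTALCODE="101000"/>'
    b'<House HOUSEID="2" STRUCNUM="3"/>'
    b'</Houses>'
)
result = io.StringIO()
row_count = houses_to_csv(sample, result)
print(result.getvalue())
```

When writing to a real file, it should be opened with `newline=''`, as the csv module documentation recommends.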

DOM parser

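We process the same file by first loading it into memory in its entirety. This is exactly how a DOM parser works; the code below uses xml.etree.ElementTree, which likewise builds the whole tree in memory before it can be queried.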

The code

    import csv
    import xml.etree.ElementTree as ET
    from datetime import datetime

    # Parsing builds the whole element tree in memory
    parser = ET.XMLParser(encoding="ANSI")
    tree = ET.parse("out.xml", parser=parser)
    root = tree.getroot()

    attr_names = [
        'HOUSEID', 'HOUSEGUID', 'AOGUID', 'HOUSENUM', 'STRUCNUM', 'STRSTATUS',
        'ESTSTATUS', 'STATSTATUS', 'IFNSFL', 'IFNSUL', 'TERRIFNSFL',
        'TERRIFNSUL', 'OKATO', 'OKTMO', 'POSTALCODE', 'STARTDATE', 'ENDDATE',
        'UPDATEDATE', 'COUNTER', 'NORMDOC', 'DIVTYPE', 'REGIONCODE',
    ]

    # newline='' lets the csv module control the line endings
    with open('AS_HOUSE.0001.csv', 'a', newline='', encoding='ANSI') as resident_data:
        csvwriter = csv.writer(resident_data)
        # Note: the timer starts after parsing, so it covers only the CSV export
        start = datetime.now()
        for member in root.findall('House'):
            # One row per House element; missing attributes become empty cells
            row = [member.attrib.get(attr_name) for attr_name in attr_names]
            csvwriter.writerow(row)

    print(datetime.now() - start)

Execution time: 2-3 sec.

Does the DOM parser win?

Larger Files

Small files do not fully reflect reality. Let us take a larger file, 353 MB (after cleaning, as described above).

Side-by-side results:

SAX parser: 0:00:32.090836 (32 sec)

DOM parser: 0:00:16.630951 (16 sec)

The DOM parser is about twice as fast. However, this does not diminish the main advantage of the SAX parser: it can process large files without first loading them entirely into memory.
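The memory side of this trade-off can be illustrated with `tracemalloc` from the standard library. The sketch below is an illustration on synthetic data, not a measurement on the FIAS file: the record layout, the record count, and all helper names are assumptions, and `tracemalloc` only sees memory allocated through Python's allocators.

```python
import io
import tracemalloc
import xml.etree.ElementTree as ET
import xml.sax


def make_sample(n_records):
    """Build a synthetic houses file in memory (made-up data, not FIAS)."""
    rows = ''.join(
        f'<House HOUSEID="{i}" HOUSENUM="{i % 200}" POSTALCODE="101000"/>'
        for i in range(n_records)
    )
    return f'<Houses>{rows}</Houses>'.encode('ascii')


class Counter(xml.sax.ContentHandler):
    """Count House elements without keeping any of them."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def startElement(self, name, attrs):
        if name == 'House':
            self.count += 1


def peak_memory(func):
    """Run func() and return (result, peak number of bytes traced during the call)."""
    tracemalloc.start()
    try:
        result = func()
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def count_with_sax(data):
    handler = Counter()
    xml.sax.parse(io.BytesIO(data), handler)
    return handler.count


def count_with_tree(data):
    # The whole tree is built before the elements are counted
    root = ET.parse(io.BytesIO(data)).getroot()
    return len(root.findall('House'))


data = make_sample(20000)
sax_count, sax_peak = peak_memory(lambda: count_with_sax(data))
tree_count, tree_peak = peak_memory(lambda: count_with_tree(data))
print(f'SAX:         {sax_count} records, peak {sax_peak / 1e6:.1f} MB')
print(f'ElementTree: {tree_count} records, peak {tree_peak / 1e6:.1f} MB')
```

The exact figures depend on the Python version and the data, so they are best read as a rough comparison only.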

Unfortunately, this advantage does not carry over to the FIAS database as published, because its encodings have to be cleaned up first.
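One possible way to soften this limitation, which was not tried in this article, might be to clean the data chunk by chunk and feed it to the parser incrementally. The sketch below rests on several assumptions: the input is already readable as text, no attribute value contains a `>` character, and the stray quotation marks have the form matched by the regular expression from the cleaning step. `clean_chunks`, `parse_cleaned`, and `CollectNumbers` are hypothetical names, and the sample record is made up.

```python
import io
import re
import xml.sax

# The article's pattern, with capture groups added for use with re.sub
STRAY_QUOTES = re.compile(r'(\w{5}NUM="\d+)"(\w)"')


def clean_chunks(stream, chunk_size=1 << 20):
    """Yield cleaned pieces of text, each ending on a tag boundary ('>')."""
    tail = ''
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        text = tail + chunk
        cut = text.rfind('>') + 1  # 0 if the text contains no complete tag
        ready, tail = text[:cut], text[cut:]
        if ready:
            yield STRAY_QUOTES.sub(r'\1\2', ready)
    if tail:
        yield tail  # trailing text after the last tag, if any


def parse_cleaned(stream, handler, chunk_size=1 << 20):
    """Clean the text stream on the fly and push it through an incremental SAX parser."""
    parser = xml.sax.make_parser()
    parser.setContentHandler(handler)
    for piece in clean_chunks(stream, chunk_size):
        parser.feed(piece)
    parser.close()


class CollectNumbers(xml.sax.ContentHandler):
    """Collect the HOUSENUM attribute of every House element."""

    def __init__(self):
        super().__init__()
        self.numbers = []

    def startElement(self, name, attrs):
        if name == 'House':
            self.numbers.append(attrs.get('HOUSENUM'))


# Made-up sample; the tiny chunk size forces records to span several chunks
sample = io.StringIO('<Houses><House HOUSENUM="12"A""/><House HOUSENUM="7"/></Houses>')
collector = CollectNumbers()
parse_cleaned(sample, collector, chunk_size=10)
print(collector.numbers)  # ['12A', '7']
```

Cutting at the last `>` keeps every tag inside a single piece, so the regular expression never has to match across a chunk boundary.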

File for the encoding pre-cleaning step:

- 353 MB, in the archive.

Cleaned database file for the parser tests:

- 353 MB, in the archive.
