AI supremacy: Leela Chess, or how a fully open neural network won

Honestly, I am very disappointed with Habr. Why has nobody covered the victory of a neural network approach that is fully open source, with completely open network data? Sure, DeepMind Technologies described how they taught a chess AI by having it play against itself... But their code was closed, and the training ran on Google clusters, not distributed across Nvidia Turing cards with tensor cores, as in this case. Why do I have to edit the English Wikipedia (I'm ZBalling there) to draw attention to this?

Okay, I guess I got carried away with emotions. (There is, after all, a link in the comments that mentions Leela.) This article is an experiment: a way to see how my other article, which in my opinion is too popular, will affect this one.

The story began when I upgraded my old Nvidia GeForce GTX 770 to an RTX 2080 Ti. Considering how much it costs, I wanted to get the most out of it. At first I played around with ray tracing and read about how it works with CUDA through NVIDIA OptiX. I read about how one person managed to use the RT cores for other purposes, and I repeated it. But there were so many comments claiming that the man in the leather jacket had conned everyone that I got tired of reading them. Especially since I know that path tracing is the most important algorithm in the film and game industries, and it is not Nvidia's invention. Absolutely not. Not even in hardware.

Then I decided to look at NVENC. I generally love freebies, and as it turned out, this hardware encoder had plenty of them. First, the most popular article on Habr about NVENC describes how YourChief, armed with a debugger, managed to remove the limit on the number of simultaneous encoding streams (and my card, as it turned out, handles that awesomely). Moreover, the patch, as usually happens, changes only a couple of bytes.

Then it turned out that NvFBC (ultrafast full-frame capture) can also be enabled on a GeForce card if you apply a magic patch on top of the Looking Glass framework before activating the technology (the patch can be seen here).

Then I wanted 30-bit color in Photoshop. Repeating the success of others, I found the byte that restricted it for OpenGL in windowed mode (in DirectX, both windowed and fullscreen, and in fullscreen OpenGL, 30-bit color already worked). I wrote to Nvidia about it, promising to publish the patch. Maybe it is a coincidence, but they removed this restriction at Gamescom 2019. There is still unofficial support for HDR10+ (dynamic HDR metadata), though.

So now it was time for the matrix accelerator, neural accelerator, tensor cores, call it what you like. This was a bit more complicated. I'll say right away that I slept through the lecture on neural networks at university, so I had to figure it out myself. But after watching a couple of videos where a guy spawns thousands of birds to play Flappy Bird, and after a couple of generations those birds clear the obstacles like clockwork, I was inspired. The question was what to run. Then I remembered that Google had recently boasted that its AI had beaten the best human player at Go, which had been considered impossible with “normal” algorithms. Honestly, Google DeepMind loves complicated versioning. I mean, who came up with this: AlphaGo Lee → AlphaGo Master → AlphaGo Zero → AlphaZero (the last one can already play chess, shogi, and Go, and since they implemented a general mechanism for describing the rules of a game, it could be adapted even to poker). I'm aware of the poker situation, by the way, so don't write to me about it; I know you.

My Google search for “alphazero source code” yielded nothing. That is, it turned out they had no intention of opening the code! I could not believe it. After all, Elon Musk backs OpenAI (supposedly so that as AI develops it is better studied, or something, and less bound by the authors' copyright). Then on Reddit I came across a link. As it turned out, some kind souls at DeepMind did decide to release part of the source code, albeit by printing it directly in a PDF file. /facepalm

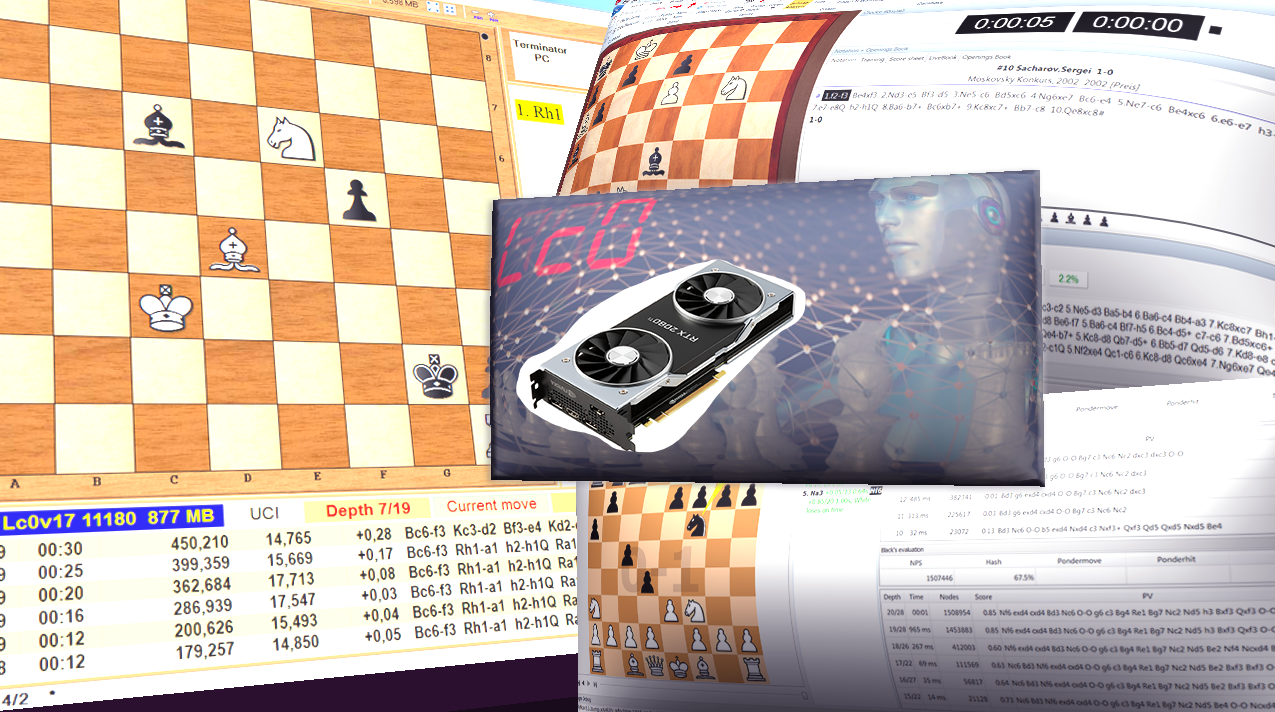

So I immediately downloaded the neural network from here. After playing with it, I realized that for some reason it did not play chess. It took me about 20 minutes to understand that it plays Go, not chess, and another 40 minutes to find the chess version. And here I was surprised. First, they had already implemented a CUDA backend with support for Nvidia tensor cores, both for play and for training (which is voluntary and distributed, moreover). Second, right at that moment they were in a match with Stockfish for first place in the computer chess rankings! I stayed up half the night watching the TCEC superfinal, and the neural network won! I immediately ran to edit Wikipedia, which had little information at the time, and the very next day everyone was writing about it. As it turned out, during the match Leela used only a 2080 Ti paired with a 2080, so my card was quite enough. Having downloaded the neural network from here, I launched it on my computer without any trouble. And of course, the neural network crushed me. Here's how to set it up.

Personally, I used the HIARCS Chess Explorer GUI. I also had people test Leela on Android in DroidFish. The Android build uses a distilled network, simpler and smaller, but still far from weak.
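
For anyone curious what a GUI like HIARCS Chess Explorer or DroidFish does behind the scenes: it talks to the engine over the text-based UCI protocol. Below is a minimal sketch of that exchange in Python, not the way any particular GUI implements it. The engine path and weights file name are placeholders, and the `WeightsFile` option name is my assumption about how the Leela binary takes its network; check the output of the `uci` command on your build.

```python
import subprocess


def uci_script(weights_file, moves, movetime_ms=1000):
    """Build the UCI commands a GUI would send to request one move."""
    position = "position startpos"
    if moves:
        position += " moves " + " ".join(moves)
    return [
        "uci",                                               # engine answers "uciok"
        f"setoption name WeightsFile value {weights_file}",  # assumed option name
        "isready",                                           # engine answers "readyok"
        position,
        f"go movetime {movetime_ms}",                        # think for a fixed time
    ]


def parse_bestmove(lines):
    """Return the move from the first 'bestmove' line, or None if absent."""
    for line in lines:
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "bestmove":
            return parts[1]
    return None


def ask_engine(engine_path, weights_file, moves, movetime_ms=1000):
    """Start the engine, send the script, and read until 'bestmove'.

    Simplification: all commands are sent up front instead of waiting
    for 'uciok' and 'readyok' the way a real GUI would.
    """
    proc = subprocess.Popen(
        [engine_path], stdin=subprocess.PIPE, stdout=subprocess.PIPE, text=True
    )
    for command in uci_script(weights_file, moves, movetime_ms):
        proc.stdin.write(command + "\n")
    proc.stdin.flush()

    replies = []
    for line in proc.stdout:
        replies.append(line.strip())
        if line.startswith("bestmove"):
            break

    try:
        proc.stdin.write("quit\n")
        proc.stdin.flush()
    except (BrokenPipeError, OSError):
        pass  # the engine already exited on its own
    proc.wait()
    return parse_bestmove(replies)
```

With a real engine this would be called as something like `ask_engine("./lc0", "network.pb.gz", ["e2e4"])`, where both file names are hypothetical. The two helper functions can be tried without any engine installed.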

In principle, I can write up the installation instructions in more detail and add links; just ask. =))

By the way, a question: has anyone heard of non-standard uses of NVENC and NVDEC? It seems they can be adapted to speed up mathematical operations. I read about it somewhere, but there were no details.
