Watch me in full: squeezing the most out of live video on mobile platforms

The easiest way to play video on a mobile device is to open the link in the system's built-in player, but this isn't always effective.

You can take ExoPlayer and optimize it, or even write your own video player using nothing but codecs and sockets. This article covers how video streaming and playback work, and how to reduce video startup delay, cut the latency between a streamer and a viewer, and optimize power consumption and hardware load.

Let's look at two specific applications: the Odnoklassniki (OK) mobile client, where videos are played, and OK Live, where broadcasts are streamed from the phone in 1080p. This is not a hands-on tutorial on playing a video from a link. Instead, we'll look at video from the inside and show how, knowing the general architecture of video players and video streaming, you can understand any such system and make it better.

This article is based on a transcript of the talk by Alexander Tobol (@alatobol) and Ivan Grigoriev (@ivan_a) at the Mobius conference.

Introduction

To start, a few numbers about video at Odnoklassniki.

The average daily peak of VOD (video on demand) traffic exceeds 1.5 Tbit/s, and for live broadcasts it exceeds 3 Tbit/s.

OK currently serves more than 870 million video views per day, over half of them on mobile devices.

Looking at the history of streaming, mobile video appeared on YouTube in 2007. We got on this train later, but by 2014-2015 we already had 4K playback on mobile devices, and in recent years we've been actively developing our players. That's what the first part of this article is about.

The second trend, which started with Periscope in 2015, is broadcasting from phones. We launched our OK Live app, which lets you stream even Full HD video over mobile networks. We'll talk about streaming in the second half of the article.

We won't dwell on the APIs for working with video; instead, we'll dive straight in and try to find out what happens inside.

When you shoot video with a camera, it goes to the encoder, from there to a socket, and then to the server (whether it's VOD or live). The server then distributes it to viewers, where the same path runs in reverse.

Let's start with player KPIs. What do we want from a player?

- Fast first frame. Users do not want to wait for the start of playback.

- No rebuffering. Nobody likes staring at a spinner.

- High quality. Back when there was almost no 4K content, we already added 4K support "for growth": if you tune the player for 4K and sort out its performance, 1080p will play perfectly even on weak devices.

- UX requirements. Videos must play in the feed while the user scrolls, and the feed also needs video prefetching.

There are many obstacles along the way. A 4K stream is large; we run on mobile devices with unreliable networks; video formats and containers behave differently on different devices; and the devices themselves can be a problem too.

Where do you think video starts faster: on iOS or on Android?

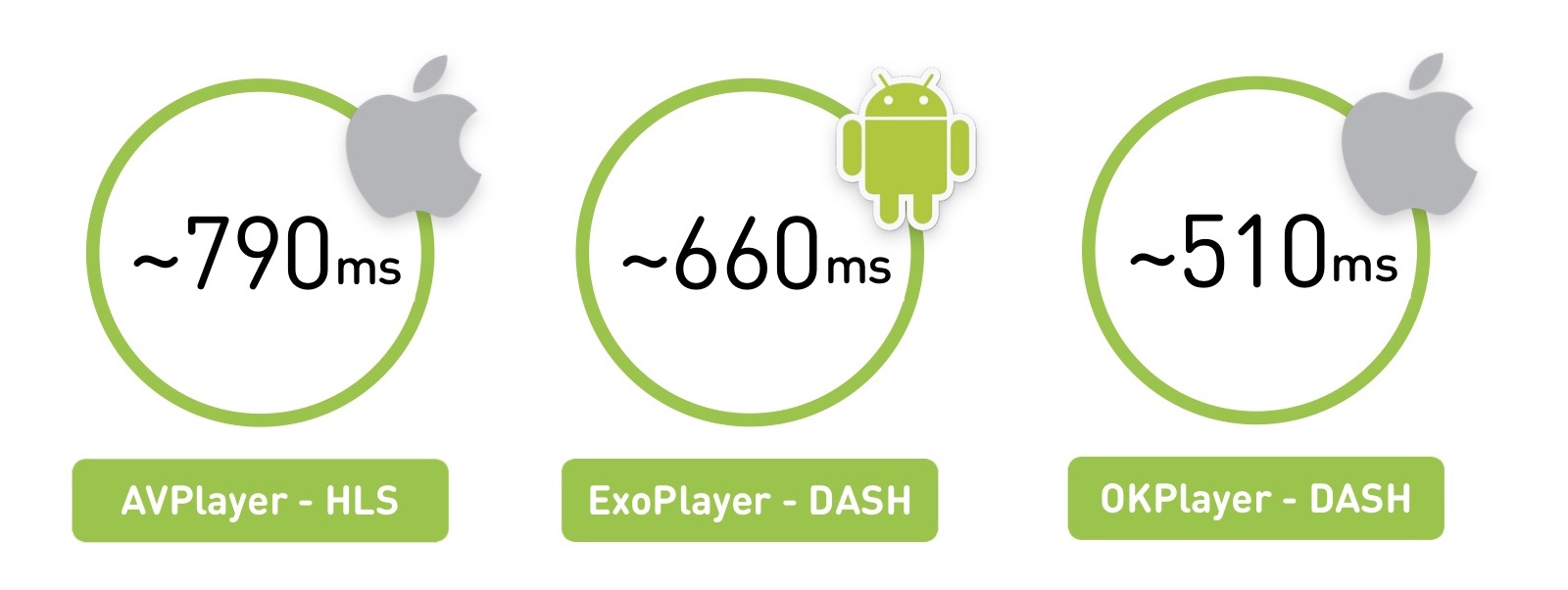

In fact, any answer is correct: it depends on what you play, where, and how. In a region of Russia with a not-so-good network, AVPlayer starts playback in about 800 ms. On the same network, ExoPlayer on Android, playing a different format, starts in 660 ms. And if you write your own player on iOS, it can start even faster.

One caveat: we measure averages across users, and the average iOS device is more powerful than the average Android device.

The first part of the article is theoretical: we'll look at what video is and what the architecture of any live player looks like. Then we'll compare players and discuss when it makes sense to write your own.

Part one

What is video

Let's start with the basics. Video is a sequence of pictures: 24 or 60 per second.

Obviously, storing every picture in full is expensive, so video is stored differently: some frames are reference frames (I-frames), while others (P-frames and B-frames) are "diffs". In effect, you have a JPEG and a set of changes to it.

There is also the concept of a GOP (group of pictures): an independent set of frames that starts with a reference frame followed by diffs. A GOP can be played, decoded, and so on independently. At the same time, if you lose the reference frame of a GOP, the rest of its frames become useless.
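To make the dependency concrete, here is a minimal Python sketch that groups frames into GOPs and works out which frames survive a loss. It assumes a simple I-P-P-P chain with no B-frames, where each P-frame depends on the frame right before it; real codecs have more complex reference structures. `Frame`, `split_into_gops`, and `decodable_frames` are hypothetical names for illustration.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    index: int
    kind: str  # "I" = reference frame, "P" = diff against the previous frame


def frames_from_pattern(pattern):
    """Build frames from a string such as "IPPPIPP"."""
    return [Frame(i, kind) for i, kind in enumerate(pattern)]


def split_into_gops(frames):
    """Group frames into GOPs; a new GOP starts at every I-frame."""
    gops = []
    for frame in frames:
        if frame.kind == "I" or not gops:
            gops.append([])
        gops[-1].append(frame)
    return gops


def decodable_frames(frames, lost):
    """Indices of frames that can still be decoded when frames in `lost` are missing.

    Assumes an I-P-P-P chain (no B-frames): one missing frame breaks
    every later frame of the same GOP, but the next GOP is unaffected.
    """
    result = []
    for gop in split_into_gops(frames):
        if gop[0].kind != "I":
            continue  # stream starts mid-GOP: no reference frame to decode from
        for frame in gop:
            if frame.index in lost:
                break  # this frame and every later frame in the GOP are unusable
            result.append(frame.index)
    return result
```

For example, with the pattern `"IPPPIPP"`, losing frame 0 makes frames 0-3 useless, while frames 4-6 still decode because they belong to the next GOP.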

Codecs differ in their coding algorithms, transform matrices, motion search, and so on.

Codec performance

The classic H.264 has been around since 2003 and is mature. We'll take its efficiency as the baseline. It works and plays everywhere and has hardware support on both iOS and Android. This means there's either a dedicated coprocessor that can encode and decode it, or built-in instruction sets that make it fast. On average, hardware support gives up to a 10x speedup and saves battery.

In 2010, Google released VP8. In terms of efficiency, it's no different from H.264. Admittedly, codec efficiency is a very debatable thing. Naively, it's measured as the ratio of original to compressed size, but codecs produce different artifacts, so for detailed comparisons see the codec studies by Moscow State University. Here we'll just note that VP8 is aimed at software implementation: you can ship it with your app anywhere, and it's usually used as a fallback when there's no native H.264 support.

In 2013, a new generation of codecs appeared: H.265 (HEVC) and VP9. H.265 is about 50% more efficient, but you can't encode video with it on Android, and the decoder only appeared in Android 5.0+. iOS does support it.

The alternative to H.265 is VP9: much the same thing, but backed by Google. Roughly speaking, VP9 is YouTube and H.265 is Netflix. Each has its own quirks: one won't work on iOS, the other has problems on Android. In the end, many stay with H.264.

Looking ahead, we're promised the AV1 codec. It already has a software implementation, and its efficiency is 35% higher than that of the 2013 codecs. It's available in Chrome and Firefox now, and Google promises hardware support in 2020. I think we'll most likely all move to it.

Finally, the H.266/VVC codec was recently announced, with promises that everything will be better and faster.

The main pattern: the higher the efficiency of the codec, the more computing resources it requires from devices.

In general, everyone uses H.264 by default and adds more complex codecs for specific devices.

Quality, Resolution and Bitrate

In 2019, adaptive quality surprises no one: users upload or stream video in a single quality, and we transcode it into a ladder of qualities and send each device the most suitable one.

The resolution of each quality must match its bitrate: if the resolution doubles, the bitrate should double too.

Obviously, if you encode a high resolution at a low bitrate, you get artifacts; the opposite (a low resolution at a high bitrate) just wastes bits.

How does the bitrate of encoded video compare with the raw amount of information? A 4K screen at 60 frames per second displays almost 6 Gbit/s of information (counting every pixel of every frame), while the codec bitrate can be 50 Mbit/s. In other words, the codec compresses video roughly 100-fold.
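The arithmetic behind these numbers can be checked with a few lines of Python. The sketch assumes 8-bit YUV 4:2:0 sampling (12 bits per pixel on average), which is what reproduces the ~6 Gbit/s figure; with 24-bit RGB the raw rate would be about twice as high.

```python
def raw_bitrate_bps(width, height, fps, bits_per_pixel=12):
    """Bitrate of uncompressed video.

    bits_per_pixel=12 assumes 8-bit YUV 4:2:0: 8 bits of luma per pixel plus
    two chroma planes at a quarter resolution each (2 x 8 / 4 = 4 bits).
    """
    return width * height * fps * bits_per_pixel


raw_bps = raw_bitrate_bps(3840, 2160, 60)  # 4K at 60 fps
encoded_bps = 50_000_000                   # 50 Mbit/s codec bitrate from the text
ratio = raw_bps / encoded_bps
print(f"raw: {raw_bps / 1e9:.2f} Gbit/s, compression: about {ratio:.0f}x")
# prints: raw: 5.97 Gbit/s, compression: about 119x
```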

Delivery technology

So you have audio and video encoded with some codecs. If you just store them locally, you can put all the audio and video into one file with a small index that tells you at which byte each second of audio and video begins. But such a file isn't suitable for delivery to a phone. For streaming to viewers online, there are two main classes of protocols: streaming and segmented.

A streaming protocol means that both the server and the client keep some state, and the server pushes data. The server can adjust, for example, the quality. Very often this runs over UDP.

Such protocols are complex on the server side and hard to scale. For high-load broadcasts, we use segmented protocols that work over HTTP: they can be cached by nginx and CDNs and are much easier to distribute. In this case the server isn't responsible for anything and is stateless.

Here's how segmented delivery works: we cut the video into segments and wrap audio and video in a transport container, for example MPEG-TS or MP4. The phone receives a manifest that says where the segment for each quality lives, and this manifest can be updated periodically.

Historically, Apple delivers through HLS, and Android through DASH. Let's see how they differ.

Let's start with the older HLS. It has a master manifest that lists all available qualities (low, medium, high, and so on) along with their bitrates, so the player can pick the right one immediately. Having chosen a quality, the player fetches a nested manifest with a list of segment links, which also states each segment's duration.
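For reference, here is a minimal Python sketch that generates both levels of HLS manifest: a master playlist with one `#EXT-X-STREAM-INF` entry per quality, and a VOD media playlist listing segments with `#EXTINF` durations. The tag names follow the HLS specification; the file names, bitrates, and the 4-second segment length are made-up examples.

```python
import math


def master_playlist(variants):
    """Build an HLS master playlist from (bandwidth_bps, (width, height), uri) tuples."""
    lines = ["#EXTM3U"]
    for bandwidth, (width, height), uri in variants:
        lines.append(f"#EXT-X-STREAM-INF:BANDWIDTH={bandwidth},RESOLUTION={width}x{height}")
        lines.append(uri)  # link to the nested (media) playlist for this quality
    return "\n".join(lines) + "\n"


def media_playlist(segment_uris, segment_duration_s):
    """Build a VOD media playlist listing segments and their durations."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3+ allows fractional #EXTINF durations
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_duration_s)}",
        "#EXT-X-MEDIA-SEQUENCE:0",
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{segment_duration_s:.3f},")
        lines.append(uri)
    lines.append("#EXT-X-ENDLIST")  # no more segments will be added (VOD)
    return "\n".join(lines) + "\n"
```

A player has to fetch `master_playlist` output first and a `media_playlist` second before it learns any segment URL, which is where the extra round trip discussed next comes from.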

This has an interesting consequence: to play the first frame, you need two extra round trips. The first request fetches the master manifest, the second fetches the nested manifest, and only then can you request the data itself, which isn't great.

The second difficulty: HLS was designed to work over HTTP on the internet, but the container chosen for video data was the legacy MPEG-2 Transport Stream, which was developed for a completely different purpose: carrying a signal over noisy channels, such as satellite links. As a result, we get extra headers that are completely useless in HLS and only add overhead.
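A rough Python sketch of that overhead: MPEG-TS carries data in fixed 188-byte packets with a 4-byte header, and each new PES packet (one audio or video frame) starts in a fresh TS packet, so the last packet of every frame is padded. The sketch counts only these two effects and ignores PES headers, PAT/PMT tables, and adaptation fields, so real overhead is higher.

```python
import math

TS_PACKET_SIZE = 188
TS_HEADER_SIZE = 4
TS_PAYLOAD_SIZE = TS_PACKET_SIZE - TS_HEADER_SIZE  # 184 bytes of payload per packet


def ts_size(frame_bytes):
    """Bytes on the wire for one frame carried in TS packets (last packet padded)."""
    packets = math.ceil(frame_bytes / TS_PAYLOAD_SIZE)
    return packets * TS_PACKET_SIZE


def ts_overhead(frame_bytes):
    """Fractional overhead of TS packetization for one frame."""
    return ts_size(frame_bytes) / frame_bytes - 1
```

For a large video frame the overhead approaches 4/184, about 2.2%, but for a small 1000-byte audio frame it is already about 12.8% (6 packets, 1128 bytes), before any PES or table overhead is counted.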

Add the network overhead to the complexity of parsing: if you play 4K over DASH and over HLS in Chrome, you'll feel the difference when your computer starts "taking off" on HLS packets.

Apple is trying to address this. In 2016, they announced support for fragmented MPEG-4 in HLS, which brought some DASH compatibility, but the extra RTT and other quirks haven't gone away.

DASH is a bit simpler: there's one manifest with all qualities inside, and each quality is a set of segments. You can play one segment in one quality, notice that throughput has increased, and switch to another quality starting with the next segment. Every segment begins with a reference frame, which is what makes switching possible.
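Here is a minimal Python sketch of reading a single DASH manifest (MPD) with the standard library: every quality is a `Representation` inside one `AdaptationSet`, and a `SegmentTemplate` turns a representation id and segment number into a URL. The element and attribute names come from the DASH schema; the manifest contents (ids, bitrates, 2-second segments, URL pattern) are invented for illustration.

```python
import xml.etree.ElementTree as ET

NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

MPD_XML = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     mediaPresentationDuration="PT10S" minBufferTime="PT2S"
     profiles="urn:mpeg:dash:profile:isoff-live:2011">
  <Period>
    <AdaptationSet mimeType="video/mp4" segmentAlignment="true" startWithSAP="1">
      <SegmentTemplate timescale="1000" duration="2000"
                       initialization="$RepresentationID$/init.mp4"
                       media="$RepresentationID$/$Number$.m4s" startNumber="1"/>
      <Representation id="480p" bandwidth="800000" width="854" height="480" codecs="avc1.4d401f"/>
      <Representation id="720p" bandwidth="2500000" width="1280" height="720" codecs="avc1.4d401f"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def representations(mpd_text):
    """List all qualities in the manifest, sorted by bandwidth."""
    root = ET.fromstring(mpd_text)
    reps = []
    for aset in root.iterfind("mpd:Period/mpd:AdaptationSet", NS):
        template = aset.find("mpd:SegmentTemplate", NS)
        for rep in aset.iterfind("mpd:Representation", NS):
            reps.append({
                "id": rep.get("id"),
                "bandwidth": int(rep.get("bandwidth")),
                "height": int(rep.get("height")),
                # fill in the representation id; $Number$ stays for later
                "media": template.get("media").replace("$RepresentationID$", rep.get("id")),
            })
    return sorted(reps, key=lambda r: r["bandwidth"])


def segment_url(media_template, number):
    """URL of segment `number` for a representation's media template."""
    return media_template.replace("$Number$", str(number))
```

Because every quality shares the same segment numbering, switching quality simply means requesting the next number from a different representation.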

Here's a short comparison to help you choose.

Historically, HLS supported only H.264 for video, while MPEG-DASH can carry any codec. HLS's main problem is the extra round trip at startup, but it plays well on both iOS and Android 4.0+. DASH is mainly backed by Google (Chrome and Android) and can't be played natively on iOS.

Player architecture

Now that we've more or less sorted out video itself, let's see what any player looks like.

Let's start with the network side. When a video starts, the player fetches the manifest, selects a quality somehow, fetches a segment and downloads it, then decodes the frames, checks that the buffer holds enough frames for playback, and only then starts playing.

The general architecture of the player:

There is a network part, a socket, where the data comes from.

Next comes a demultiplexer (extractor), which pulls the audio and video streams out of the transport (HLS/DASH) and passes them to the appropriate decoders.

The decoders decode video and audio, and then the most interesting part happens: the streams have to be synchronized so that video and audio play together. There are various timestamp-based mechanisms for this.

Then the video has to be rendered somewhere: to a Texture, a Surface, GL, Metal, anywhere.

And on the input side there's load control, which fetches the data and manages the buffer.

What does load control look like? It's much the same in all players. There's an amount of data that must be downloaded first. The player waits until it's downloaded, starts playing, and keeps downloading. There's a maximum buffer limit at which downloading stops. Then, as playback progresses, the amount of buffered data drops until it hits a minimum limit, at which downloading resumes. And so the cycle repeats.
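The stop/resume behavior described above is a simple hysteresis. Here is a minimal Python sketch of it; the class name and the 15 s / 50 s defaults are hypothetical, although ExoPlayer's `DefaultLoadControl` exposes conceptually similar minimum and maximum buffer durations.

```python
class LoadControl:
    """Hysteresis-based loading: stop at max_buffer_s, resume below min_buffer_s."""

    def __init__(self, min_buffer_s=15.0, max_buffer_s=50.0):
        self.min_buffer_s = min_buffer_s
        self.max_buffer_s = max_buffer_s
        self.loading = True

    def should_continue_loading(self, buffered_s):
        if buffered_s >= self.max_buffer_s:
            self.loading = False  # buffer is full: pause downloads
        elif buffered_s < self.min_buffer_s:
            self.loading = True   # buffer drained below the lower bound: resume
        # between the two bounds, keep doing whatever we were already doing
        return self.loading
```

The gap between the two bounds is the point: without it, the player would toggle the network on and off around a single threshold, which wastes radio power on a phone.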

What does the main loop thread look like? Game developers know the concept of a "tick", and it's similar here. A network part puts everything into one buffer. An extractor unpacks the data and sends it to the decoders, which have their own intermediate buffers, and from there it goes to rendering. And a tick moves data between these stages, controls them, and handles synchronization.
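One common way a tick handles synchronization is to treat the audio clock as the master and decide, for each decoded video frame, whether to show it, wait, or drop it. The Python sketch below shows that decision for the video side only; the 40 ms lateness threshold and the queue layout are assumptions, not values from any particular player.

```python
from collections import deque


def tick(video_queue, audio_clock_s, late_threshold_s=0.040):
    """One iteration of a simplified player loop (video side only).

    video_queue: deque of decoded frames as (pts_seconds, frame_id), in
    presentation order. The audio clock is the master clock.
    Returns (frame_id_to_render_or_None, list_of_dropped_frame_ids).
    """
    due = []
    while video_queue and video_queue[0][0] <= audio_clock_s:
        due.append(video_queue.popleft())  # frames whose time has come
    if not due:
        return None, []  # next frame is still in the future: wait for the next tick
    *stale, (pts, frame_id) = due
    dropped = [fid for _, fid in stale]  # older due frames are skipped to catch up
    if audio_clock_s - pts > late_threshold_s:
        dropped.append(frame_id)  # even the newest due frame is too late to show
        return None, dropped
    return frame_id, dropped
```

Dropped frames reported this way are also a natural signal for the back pressure described next: if the renderer keeps falling behind, the quality should go down.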

From the outside, the application sends commands through a message queue and receives information through listeners. Sometimes back pressure arises and lowers the quality, for example when the buffer runs out or the renderer can't keep up (say, frames start dropping).

Estimator

When adapting, the player relies on two main parameters: network speed and the amount of data in the buffer.

Here's how it works: playback starts at some quality, say 720p. The buffer grows as more and more is cached. Then the network speeds up, you realize you can download even more, and the buffer grows further. At some point the buffer crosses the threshold at which you can try the next quality.

Obviously, you have to try this carefully: there's also an estimator that tells you whether the network speed can sustain that quality. If it fits within the estimate and the buffer allows, you switch, say, to 1080p and keep playing.
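A minimal Python sketch of both pieces: a throughput estimator and a quality decision that uses the estimate plus the buffer level. The estimator here is an exponentially weighted moving average (EWMA), a simple stand-in chosen for illustration; ExoPlayer's own bandwidth meter works differently. All thresholds and the 0.75 safety factor are hypothetical.

```python
class EwmaBandwidthEstimator:
    """Exponentially weighted moving average of measured download throughput."""

    def __init__(self, initial_bps, alpha=0.3):
        self.estimate_bps = initial_bps
        self.alpha = alpha  # weight of the newest sample

    def add_sample(self, bytes_loaded, seconds):
        sample_bps = bytes_loaded * 8 / seconds
        self.estimate_bps = self.alpha * sample_bps + (1 - self.alpha) * self.estimate_bps
        return self.estimate_bps


def choose_quality(ladder_bps, current, estimate_bps, buffered_s,
                   switch_up_buffer_s=10.0, panic_buffer_s=3.0, safety=0.75):
    """Return an index into ladder_bps (sorted ascending by bitrate)."""
    usable_bps = estimate_bps * safety  # leave headroom for throughput dips
    if ladder_bps[current] > usable_bps or buffered_s < panic_buffer_s:
        # network can't sustain the current quality, or the buffer is nearly empty:
        # go down to the best lower quality that fits
        lower = [i for i in range(current) if ladder_bps[i] <= usable_bps]
        return lower[-1] if lower else 0
    nxt = current + 1
    if (nxt < len(ladder_bps) and ladder_bps[nxt] <= usable_bps
            and buffered_s >= switch_up_buffer_s):
        return nxt  # one step up at a time, and only with enough buffer stock
    return current
```

Switching up one step at a time and only with a healthy buffer is what "trying carefully" amounts to here: a wrong guess costs a few seconds of buffer rather than a stall.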

Overload protection

We arrived at this over time, through trial and error. You need it when you overload your infrastructure even slightly.

Suppose the network stalls during playback or the backend runs out of resources. When the player resumes playback, it starts catching up.

By this time, a large number of segments has accumulated in the player's manifest; the player quickly downloads them all at once, and we get a traffic spike. It gets worse if clients hit timeouts and the player starts re-requesting data. That's why back pressure must be built into the system.

The first, simple approach, which we of course use, is a throttler on the server. When it sees that bandwidth is running out, it lowers the quality and deliberately slows clients down so that such a spike doesn't happen.
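The talk doesn't say how the throttler is implemented. A token bucket is one common way to cap a client's rate while still allowing short bursts, so here is a minimal Python sketch of that technique, assuming one bucket per client connection; the rates and sizes are illustrative.

```python
class TokenBucket:
    """Per-client rate limiter: refills rate_bytes_per_s, holds at most burst_bytes."""

    def __init__(self, rate_bytes_per_s, burst_bytes, now=0.0):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start full so a new client can begin quickly
        self.last = now

    def try_consume(self, nbytes, now):
        """Return True if nbytes may be sent at time `now`, False if the client must wait."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= nbytes:
            self.tokens -= nbytes
            return True
        return False
```

A server would call `try_consume` before writing each chunk to a client and delay the write when it returns False; a catching-up player then receives its backlog at a bounded rate instead of all at once.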

But throttling confuses the estimators, and they can end up causing the same spinners. So, if possible, support removing qualities from the manifest. To do this, either update the manifest periodically or, if you have a feedback channel, send a command to remove a quality; the player will then automatically switch to a lower one.
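On the client, handling a removed quality can be as simple as the Python sketch below, run whenever a refreshed manifest arrives. The function name and the rule of falling back to the highest remaining lower quality are assumptions for illustration.

```python
def on_manifest_update(available_bps, current_bps):
    """Pick the bitrate to play after a manifest refresh.

    If the server removed the quality we're playing, fall back to the highest
    remaining quality below it (or the lowest one left, if none is below).
    """
    if current_bps in available_bps:
        return current_bps
    lower = [b for b in available_bps if b < current_bps]
    return max(lower) if lower else min(available_bps)
```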

Players

iOS has only the native AVPlayer, but Android offers a choice: the native MediaPlayer and the open-source, Java-based ExoPlayer, which apps "bring with them". What are their pros and cons?

Let's compare all three.

For adaptive streaming, ExoPlayer plays DASH and HLS and has many extension modules for other protocols; AVPlayer is weaker here.

Support for OS versions is, in principle, fine across the board.

Prefetching means that when you know which video in the feed will play after the current one ends, you preload it.

Then there's the problem of bug fixes in native players. With ExoPlayer, you just ship the fix in a new version of your app, but in the native AVPlayer and MediaPlayer a bug gets fixed only in the next OS release. We learned this the hard way: in iOS 8.01 our video started playing poorly, in iOS 8.02 nothing worked at all, and in 8.03 everything worked again. Nothing depended on us; we just sat and waited for Apple to release the next version.

The ExoPlayer team points out that it's less energy-efficient for audio. Google's general recommendation: use MediaPlayer to play audio and ExoPlayer for everything else.

Settled: we'll use ExoPlayer with DASH for video on Android and AVPlayer with HLS on iOS.

Quick first frame

Let's return to time to first frame. Here's how it looks with HLS on iOS: one RTT for the master manifest, another RTT for the nested manifest, and only then the segment is fetched and played. On Android there's one RTT less, so it starts a bit faster.
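A back-of-the-envelope Python model of this difference, assuming requests are strictly sequential and ignoring TCP/TLS handshakes, server processing, and decoding. The 150 ms RTT, 250 KB first segment, and 4 Mbit/s link are made-up inputs.

```python
def time_to_first_frame_ms(round_trips, rtt_ms, segment_bytes, bandwidth_bps):
    """Rough startup time: sequential request round trips plus first-segment transfer."""
    transfer_ms = segment_bytes * 8 / bandwidth_bps * 1000
    return round_trips * rtt_ms + transfer_ms


# HLS: master playlist -> media playlist -> first segment = 3 round trips.
# DASH: MPD -> first segment = 2 round trips.
hls_ms = time_to_first_frame_ms(3, rtt_ms=150, segment_bytes=250_000, bandwidth_bps=4_000_000)
dash_ms = time_to_first_frame_ms(2, rtt_ms=150, segment_bytes=250_000, bandwidth_bps=4_000_000)
```

With these inputs HLS needs 950 ms and DASH 800 ms; the gap is exactly one RTT, so it grows on exactly the high-latency mobile networks where startup time hurts most.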

Buffer size

Now for the buffers. There's a minimum amount of data that must be downloaded before playback starts. In AVPlayer, this is configured through AVPlayerItem's preferredForwardBufferDuration.

On Android, ExoPlayer has many more configuration options. There's the same minimum buffer needed to start, but there's also a separate setting for rebuffering (when the network drops, the buffer runs dry, and then the network comes back).

What's the benefit? With a good network, you start quickly and fight for a fast first frame, so the first time you can take a chance on a small buffer. But if the network breaks during playback, you should obviously require more buffering before resuming, so the problem doesn't happen again.
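The two thresholds can be modeled in a few lines of Python. This is not ExoPlayer code; it mirrors the idea behind `DefaultLoadControl`'s `bufferForPlaybackMs` and `bufferForPlaybackAfterRebufferMs` settings, and the 1 s / 4 s values are purely illustrative.

```python
class StartPolicy:
    """Decides when playback may (re)start, demanding more buffer after a stall."""

    def __init__(self, start_buffer_s=1.0, after_rebuffer_s=4.0):
        self.start_buffer_s = start_buffer_s        # small: fight for a fast first frame
        self.after_rebuffer_s = after_rebuffer_s    # larger: avoid stalling again
        self.rebuffered = False

    def on_stall(self):
        """Call when the buffer runs dry during playback."""
        self.rebuffered = True

    def can_play(self, buffered_s):
        needed = self.after_rebuffer_s if self.rebuffered else self.start_buffer_s
        return buffered_s >= needed
```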

Initial quality

HLS on iOS has a nasty problem: it always starts playback with the first quality listed in the m3u8 manifest, whatever that is. Only then does it measure the download speed and switch to an appropriate quality. Obviously, this shouldn't be allowed.

The logical fix is to reorder the qualities, either on the server (add a parameter such as preferredquality to the request and have the server reorder the manifest accordingly) or on the client (run a local proxy that does it for you).
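Whether it runs on the server or in a local proxy, the reordering itself is small. Here is a minimal Python sketch that moves the variant closest to a preferred bitrate to the top of an HLS master playlist and keeps the others in their original order. It assumes each `#EXT-X-STREAM-INF` line is immediately followed by its URI, as the HLS spec requires; the function names are hypothetical.

```python
import re


def _bandwidth(stream_inf_line):
    """BANDWIDTH attribute of an #EXT-X-STREAM-INF line (AVERAGE-BANDWIDTH is ignored)."""
    match = re.search(r"[:,]BANDWIDTH=(\d+)", stream_inf_line)
    return int(match.group(1)) if match else 0


def reorder_master_playlist(text, preferred_bps):
    """Move the variant closest to preferred_bps to the top of an HLS master playlist.

    Non-variant lines (#EXTM3U and other tags) are kept, in order, ahead of the variants.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    other, variants = [], []
    i = 0
    while i < len(lines):
        if lines[i].startswith("#EXT-X-STREAM-INF:") and i + 1 < len(lines):
            variants.append((lines[i], lines[i + 1]))  # tag line + its URI line
            i += 2
        else:
            other.append(lines[i])
            i += 1
    if variants:
        best = min(variants, key=lambda v: abs(_bandwidth(v[0]) - preferred_bps))
        variants.remove(best)
        variants.insert(0, best)  # the player will start from this quality
    return "\n".join(other + [line for v in variants for line in v]) + "\n"
```

The preferred bitrate itself would come from whatever the client knows about its network, as discussed below for Android.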

On Android, ExoPlayer's DefaultBandwidthMeter serves this purpose: it provides the value it considers the default bandwidth of your connection.

How it works: the code contains a huge table of constants keyed by two simple parameters, the country (region) and the connection type (Wi-Fi, 2G, 3G, 4G). What are the values? For example, on Wi-Fi in the USA the initial bandwidth estimate is 5.6 Mbit/s, and on 3G it's 700 kbit/s.

Interestingly, by Google's estimates 4G in Russia is 2-3 times faster than in the USA.

Russia is a big country, and such a setup didn't suit us at all. So if you want a simple approach, remember the last measured value for the current network, step down one quality level just in case, and start with that.
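Here is a minimal Python sketch of that simple approach, with a country/connection-type table as the fallback for networks we haven't seen before. Only the two US values quoted above are real; the 1 Mbit/s fallback, the ladder, and the idea of keying remembered measurements by a network identifier (for example, a Wi-Fi SSID) are assumptions for illustration.

```python
# Fallback table: only the two US values quoted in the text; everything else is unknown.
DEFAULT_INITIAL_BPS = {
    ("US", "wifi"): 5_600_000,
    ("US", "3g"): 700_000,
}
FALLBACK_BPS = 1_000_000  # hypothetical value when the table has no entry


def _highest_fitting(ladder_bps, estimate_bps):
    """Index of the highest quality whose bitrate fits the estimate (0 if none fits)."""
    fitting = [i for i, b in enumerate(ladder_bps) if b <= estimate_bps]
    return fitting[-1] if fitting else 0


def initial_quality(ladder_bps, network_key, last_measured_bps, country, network_type):
    """Pick the starting quality index.

    last_measured_bps maps a (hypothetical) network identifier to the last
    bandwidth measured on that network.
    """
    if network_key in last_measured_bps:
        best = _highest_fitting(ladder_bps, last_measured_bps[network_key])
        return max(best - 1, 0)  # step down one level just in case
    estimate = DEFAULT_INITIAL_BPS.get((country, network_type), FALLBACK_BPS)
    return _highest_fitting(ladder_bps, estimate)
```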

And if you have a large app that plays video worldwide, collect statistics per subnet and have the server recommend the starting quality. Keep in mind that after rebuffering it's advisable to increase the buffer size (on Android this is easy to configure).

How to speed up seeking

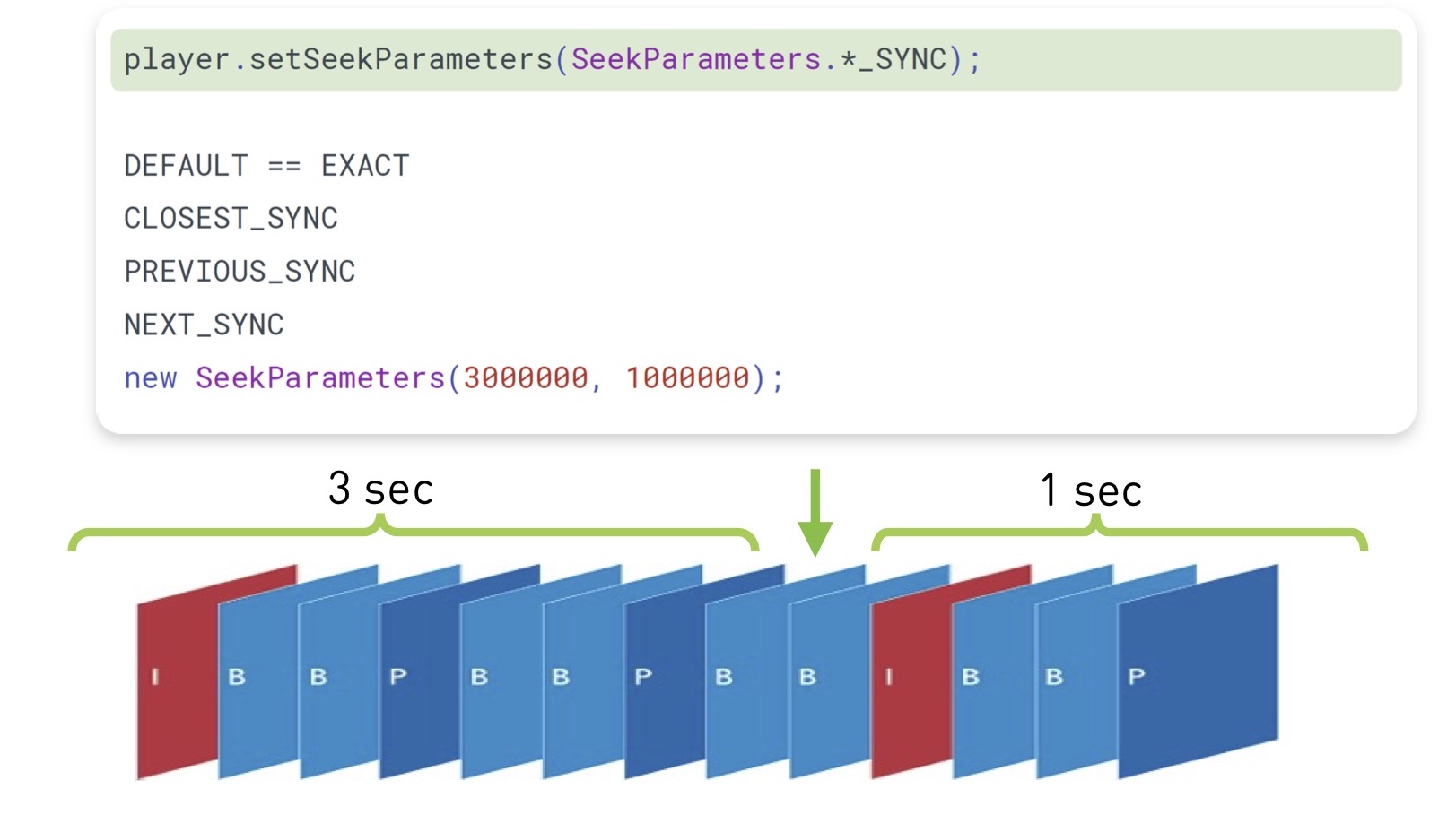

When users seek to a specific position, it may fall between reference frames rather than on one. In that case, everything from the previous reference frame up to that position has to be downloaded and decoded.

In practice, if a user is watching a two-hour movie, a second either way doesn't matter to them. So on iOS, if you know the video is cut neatly at fixed intervals, you can compute the reference frame that starts the target's GOP and seek to it (plus a small delta, so you land just after it rather than just before).
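A minimal Python sketch of that calculation, assuming keyframes sit exactly every `gop_s` seconds starting from zero; the 10 ms nudge is an arbitrary example of the "small delta". The resulting position would then be passed to the player's seek call.

```python
import math


def snap_to_keyframe(target_s, gop_s, epsilon_s=0.01):
    """Seek position aligned to the keyframe at or before target_s.

    Assumes keyframes every gop_s seconds from 0. epsilon_s nudges the position
    just past the keyframe so rounding can't land it in the previous GOP.
    """
    keyframe_s = math.floor(target_s / gop_s) * gop_s
    return keyframe_s + epsilon_s
```

For example, with 2-second GOPs a seek to 37.4 s becomes a seek to 36.01 s, so the player decodes from the keyframe instead of decoding and discarding 1.4 seconds of frames.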

Starting with version 2.7.0, ExoPlayer lets you specify how you want to seek, including an option to snap to a nearby keyframe. In our case, the player looks for the closest keyframe within one second forward and three seconds back, and seeks to whichever one it finds.
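The tolerance-window behavior can be modeled in Python as below. This is a sketch of the idea (ExoPlayer exposes it through `SeekParameters` with tolerances before and after the target), not ExoPlayer's code; it assumes the player knows a sorted list of keyframe timestamps.

```python
import bisect


def resolve_seek(target_s, keyframes_s, tolerance_before_s=3.0, tolerance_after_s=1.0):
    """Snap a seek to the closest keyframe within [target - before, target + after].

    keyframes_s must be sorted. If no keyframe falls inside the window, fall back
    to an exact seek (which means decoding from the previous keyframe).
    """
    lo = bisect.bisect_left(keyframes_s, target_s - tolerance_before_s)
    hi = bisect.bisect_right(keyframes_s, target_s + tolerance_after_s)
    candidates = keyframes_s[lo:hi]
    if not candidates:
        return target_s
    return min(candidates, key=lambda k: abs(k - target_s))
```

The asymmetric window trades accuracy for speed in a user-friendly way: landing up to a second later than requested is barely noticeable, while landing a few seconds earlier just replays a little content.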

If a video doesn't start from the beginning (and almost every video service remembers where the user stopped watching), never call prepare(mediaSource) and then seekTo() on Android. If you do, the player first prepares to play from the very beginning and only then seeks. Swap these two calls; this sped up our starts considerably.

Also, when switching videos (one finishes, another starts), it's better not to release the decoder. Creating one is very expensive (about 100 ms), and the next video will most likely use the same decoder settings, so the existing decoder will suit you perfectly.
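The reuse idea in a minimal Python sketch: keep one decoder alive and recreate it only when the next video's configuration is incompatible. `Decoder` is a hypothetical stand-in for a hardware decoder such as a MediaCodec instance, and the compatibility rule (same codec, resolution no larger than the allocated one) is a simplification; real players apply more detailed checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderConfig:
    mime: str
    width: int
    height: int


class Decoder:
    """Hypothetical stand-in for an expensive hardware decoder."""

    created = 0  # counts expensive creations (about 100 ms each, per the text)

    def __init__(self, config):
        Decoder.created += 1
        self.config = config

    def can_reuse_for(self, config):
        # Simplified rule: same codec, and the new video fits the allocated size.
        return (config.mime == self.config.mime
                and config.width <= self.config.width
                and config.height <= self.config.height)


class DecoderHolder:
    """Keeps one decoder across videos and recreates it only when it must."""

    def __init__(self):
        self.decoder = None

    def acquire(self, config):
        if self.decoder is None or not self.decoder.can_reuse_for(config):
            # a real implementation would release the old decoder here
            self.decoder = Decoder(config)
        return self.decoder
```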

Rendering

On iOS, everything is rendered simply, but on Android there are many different legacy things.

Many apps render to a TextureView. Its upside is that it's a separate memory area: you copy the whole frame, it animates well, and it's synchronized with the UI. The downsides are a long startup delay and high power consumption.

Then there's SurfaceView. It starts quickly, but it's essentially a hole punched into the window through to video memory. As a result, on some older Android devices, scrolling makes this hole show up as various artifacts. YouTube originally never scrolled video during playback, so this suited them.

There's also GLSurfaceView, a middle ground between the two. If you write your own rendering on top of it, you can fix the problem of slow TextureView on older devices.

The bottom line: we found that carefully tuning ExoPlayer makes the first frame 23% faster and cuts the number of spinners by 10%. All this tuning gave us about 4% more views. Whether you need those 4% is up to you, but it isn't hard to do.

Bottom line: Android recommendations

- Use MediaPlayer for music and ExoPlayer for everything else

- Optimize start, seek, and switching between videos

- Write your own estimator; it's easy to swap in

- Use the right view for rendering, as recommended above

Bottom line: iOS recommendations

iOS is trickier:

- AVPlayer needs an extra RTT with HLS

- A proprietary estimator

- AVPlayer#pause slows down the main thread

- It's native: no source code, and updates come only with iOS releases

So we decided to build our own DASH player, based on "the architecture of any live player". We used:

- cURL or GCDAsyncSocket

- AVAssetReader (later abandoned)

- CADisplayLink

- AVSampleBufferDisplayLayer

This takes time, but we got a number of speedups. Time to first frame dropped by 28%, and spinners by 6%. But the nicest part is that by switching from HLS to DASH, we increased the average consumed bitrate by 100 kbit/s and the number of views by 6%.

So the iOS recommendations are:

- Optimize start and seek

- Use HLS with fragmented MP4

- Write your own DASH player

We plan to try making our player cross-platform as well.

Conclusions from the first part:

You've learned what video is, what a standard player architecture looks like, and how to compare and tune players.

- Choose an appropriate streaming format (not just MP4)

- Choose the right player (ExoPlayer, AVPlayer)

- Collect statistics on firstFrame, seek, and emptyBuffer

- Tune the player and write your own estimator

- Write your own player (if you really need to)

- If you want to do something serious, raise the bar. We raised it to 4K and found all the bugs: in performance, in parsing, everywhere.

And now about streaming.

Part Two: DIY Video Streaming

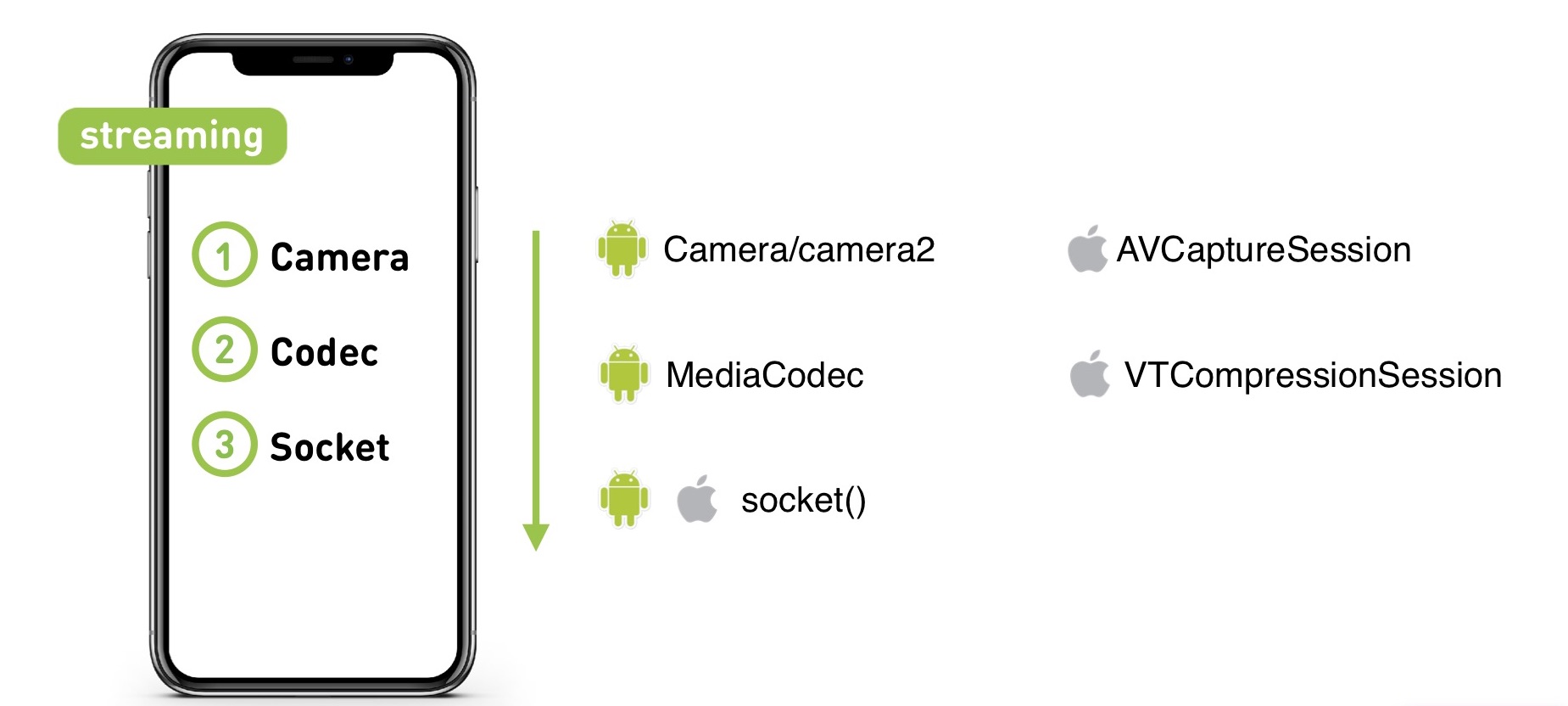

What if you need to send video from a mobile device?

You need APIs for camera capture and encoding. These APIs provide access to the camera and the hardware encoder on iOS and Android, which is very important: hardware encoding is much faster than software encoding.

For the socket, you can use a wrapper from your favorite framework, or use a POSIX socket and do everything natively, which lets you make the networking part cross-platform.

What do we want to achieve from streaming?

- Low latency

- Good broadcast quality

- Video and audio stability

- Fast start

And what do we have to fight?

- Low bandwidth

- Delays

- Audio and video dropouts

- Start delay (N × RTT; it's usually convenient to measure startup as a number of RTTs)

Why low latency matters

The first case is interaction with the audience. We have features such as a quiz and live calls, where latency is very important.

In the quiz, a question is asked and a timer starts. If users have different amounts of data in their buffers, they aren't on equal terms and get different amounts of time to answer. The only way to give everyone the same buffer is low latency.

A scenario we're currently developing is live calls: you can call the streamer live and talk to them.

The third case is sports broadcasts in 4K. Say you're watching the World Cup with beer and chips, and the neighbors behind the wall are watching the same match. If they've already seen a goal while you're still 30 seconds behind, they start cheering and shouting much earlier and spoil the whole experience. People will leave for competitors with lower latency.

Adaptation

Networks differ, of course, so we have to adapt to each one. To do this, we change the bitrate of video and audio (for video, it can vary by a factor of 100).

We can also drop some frames if we see that adaptation can't keep up or we've exhausted our adaptation options.

We also need to change the video resolution. If we encode the entire 100x bitrate range at a single resolution, the results will be poor. For example, if you encode FullHD and 480p at the same 300 kbit/s, the FullHD video, despite its higher resolution, will look worse. At high resolution the codec struggles and the picture falls apart: instead of spending bits on the picture itself, it spends them on overhead. So the resolution should match the bitrate.
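A minimal Python sketch of matching resolution to the current target bitrate with a lookup ladder. The thresholds are hypothetical: 300 kbit/s maps to 480p in line with the example above, and the other values are made up for illustration.

```python
# Hypothetical ladder: (minimum bitrate in bit/s, resolution to encode at).
RESOLUTION_LADDER = [
    (0, (426, 240)),
    (300_000, (854, 480)),
    (1_500_000, (1280, 720)),
    (3_000_000, (1920, 1080)),
]


def resolution_for_bitrate(bitrate_bps):
    """Highest resolution whose minimum bitrate the target bitrate reaches."""
    chosen = RESOLUTION_LADDER[0][1]
    for min_bps, resolution in RESOLUTION_LADDER:
        if bitrate_bps >= min_bps:
            chosen = resolution
    return chosen
```

In a real streamer you would also add some hysteresis, so the encoder isn't reconfigured every time the bitrate wobbles around one of the thresholds.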

The general coding scheme with adaptation is as follows:

We have a source that can change its bitrate on the fly, and a network. All data goes to the network, we get feedback from the network in some form, and from it we decide whether we can increase the amount of data we send or need to reduce it.

On the streamer side, the source is MediaCodec or VideoToolbox (depending on the platform); for playback, the server transcoder does this job.

On the network side, there are the various protocols we've already discussed and will discuss further.
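The talk doesn't describe the exact control law, so here is a generic AIMD (additive increase, multiplicative decrease) controller in Python as one well-known way to close this feedback loop. The feedback fields (loss ratio, queueing delay) and every threshold are assumptions for illustration, not OK's algorithm.

```python
class BitrateController:
    """AIMD target bitrate for a live encoder, driven by network feedback reports."""

    def __init__(self, start_bps=1_000_000, min_bps=100_000, max_bps=6_000_000,
                 step_bps=100_000, backoff_percent=70):
        self.bitrate_bps = start_bps
        self.min_bps = min_bps
        self.max_bps = max_bps
        self.step_bps = step_bps
        self.backoff_percent = backoff_percent

    def on_feedback(self, loss_ratio, queue_delay_ms, max_loss=0.02, max_delay_ms=200):
        """Update the target bitrate from one feedback report and return it."""
        if loss_ratio > max_loss or queue_delay_ms > max_delay_ms:
            # congestion signal: cut the bitrate sharply
            self.bitrate_bps = max(self.min_bps,
                                   self.bitrate_bps * self.backoff_percent // 100)
        else:
            # network looks healthy: probe upward gently
            self.bitrate_bps = min(self.max_bps, self.bitrate_bps + self.step_bps)
        return self.bitrate_bps
```

The new target would then be applied to the encoder; on Android, for example, MediaCodec accepts a new video bitrate at runtime via `setParameters` with `PARAMETER_KEY_VIDEO_BITRATE`.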

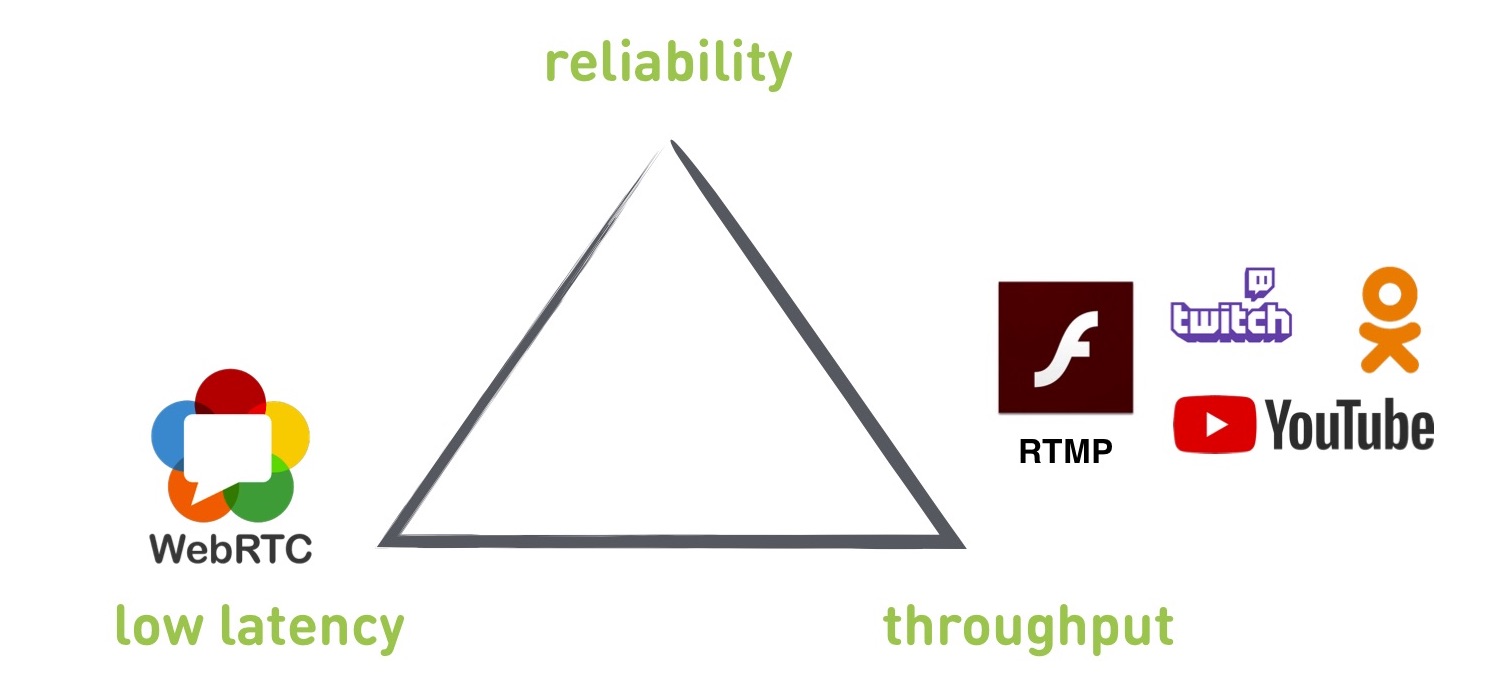

The Triangle of Compromise

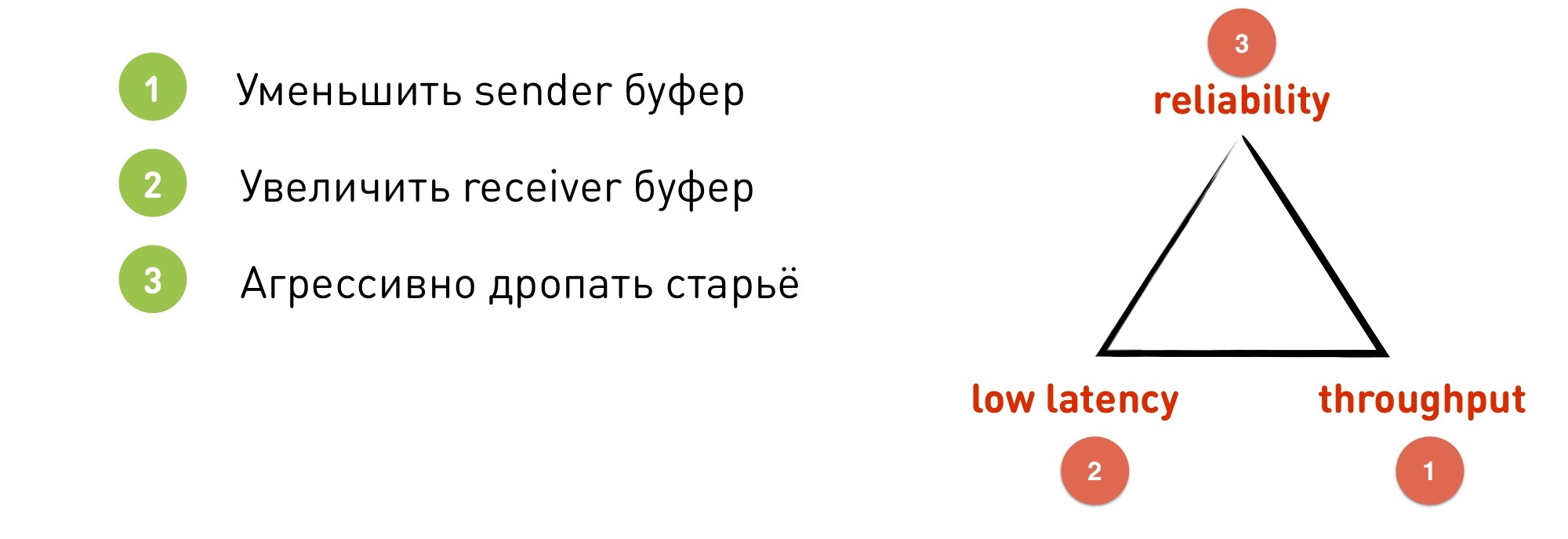

As we dig into streaming, we run into a number of trade-offs. In particular, there's a triangle whose corners are reliability (no drops), throughput (how much of the network we use), and low latency.

If we start optimizing one of these parameters, the others will inevitably suffer. We can't have everything at once; something has to be sacrificed.

Protocols

The protocols we'll look at today: RTMP and WebRTC, which are standard, and OKMP, our custom protocol.

It is worth mentioning that RTMP runs on top of TCP, and the other two use UDP.

RTMP

What does it offer? It's a de facto standard supported by all the major services (YouTube, Twitch, OK) and, historically, by Flash. They use it to let users upload live streams. If you want to send a live stream to a third-party service, you'll most likely have to work with RTMP.

The lowest streamer-to-player latency we managed to achieve is 300 ms, but that's on an ideal network in good weather. On a real network, latency usually grows to 2-3 seconds, and if the network is bad it can grow to tens of seconds.

RTMP supports changing resolution and bitrate on the fly. (So do the other protocols mentioned above; we point it out because there's a common misconception that RTMP can't do this.)

Downsides: it's built on TCP (we'll explain later why that's bad), and latency is uncontrolled.

On the triangle, RTMP can't deliver low latency. You can get it, but it's not guaranteed at all.

In addition, RTMP is somewhat dated: it doesn't support new codecs, since Adobe isn't developing it, and the documentation is old and sloppy.

Why is TCP a poor fit for live broadcasts? TCP guarantees delivery: data you put into the socket will be delivered exactly in the order and form in which you put it there. Nothing will be dropped or reordered; TCP either delivers or the connection dies. But that rules out any latency guarantee: TCP can't drop old data that may no longer need to be sent. Buffers and backlogs start to grow.

A good illustration is the head-of-line blocking problem, which shows up not only in streaming but in many other situations.

What is it? We start with an empty receive buffer. Data arrives from somewhere: lots of data in lots of IP packets. The first IP packet arrives, and on the receiver we call recv() to read it, get the data, and play and render it. But then the second packet is lost. What happens next?

To recover the lost IP packet, TCP has to retransmit it. That costs at least one RTT, and the retransmission can be lost too, so we go around again. With many packets, this will certainly happen.

Meanwhile, lots of data arrives that we can't read, because we're stuck waiting for the second packet, even though it may carry a frame of the broadcast from five minutes ago that's no longer needed.
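A toy Python model makes the effect visible. It compares when the application can read each packet under in-order delivery (TCP-like) versus delivery on arrival (a UDP-style channel). The scenario is invented: packets sent every 10 ms, 40 ms one-way delay, packet 1 lost and retransmitted after a 200 ms RTT; real TCP behavior (timers, congestion window) is ignored.

```python
def readable_times(arrivals, in_order):
    """When can the application read each packet?

    arrivals: list of (seq, arrival_time_ms) with seq numbers 0..N-1.
    in_order=True models TCP: packet k is readable only once 0..k have all arrived.
    in_order=False models a UDP-style channel: each packet is readable on arrival.
    """
    arrival = dict(arrivals)
    if not in_order:
        return [arrival[seq] for seq in range(len(arrival))]
    ready, latest = [], 0
    for seq in range(len(arrival)):
        latest = max(latest, arrival[seq])  # must also wait for every earlier packet
        ready.append(latest)
    return ready


# Packet 1 is lost; its retransmission arrives at 260 ms.
arrivals = [(0, 50), (2, 70), (3, 80), (4, 90), (1, 260)]
tcp_like = readable_times(arrivals, in_order=True)    # [50, 260, 260, 260, 260]
udp_like = readable_times(arrivals, in_order=False)   # [50, 260, 70, 80, 90]
```

With in-order delivery, packets 2-4 sit in the receive buffer for up to 190 ms waiting for packet 1, even if packet 1 is no longer worth showing.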

To see another problem, let's look at adaptation in RTMP. Adaptation happens on the sender side. If the network can't carry data as fast as we put it into the socket, the send buffer fills up and the socket returns EWOULDBLOCK (or blocks, if it's a blocking socket).

Only at that moment do we realize there's a problem and the quality has to be reduced.

Say we have a network with a speed of 4 Mbit/s, and we chose a socket buffer of 250 KB (0.5 seconds at that speed). Suddenly the network speed drops tenfold, which is a perfectly normal situation: now we have 400 kbit/s. The buffer fills up in half a second, and only then do we realize we need to switch down.

But now the problem is that the 250 KB already in the buffer will take 5 seconds to transmit. We're already far behind: the old data has to go out first, and only then will the new, adapted data follow and start catching up with real time.
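The arithmetic of this example in Python (here KB means 1000 bytes): the same buffer that holds 0.5 s of data at 4 Mbit/s takes 5 s to drain at 400 kbit/s, and a smaller 0.1 s buffer would still take 1 s.

```python
def drain_seconds(buffered_bytes, link_bps):
    """Time for data already in the send buffer to leave over a link of link_bps."""
    return buffered_bytes * 8 / link_bps


send_buffer_bytes = 4_000_000 // 8 // 2                           # 0.5 s at 4 Mbit/s = 250 KB
before_drop_s = drain_seconds(send_buffer_bytes, 4_000_000)       # 0.5 s
after_drop_s = drain_seconds(send_buffer_bytes, 400_000)          # 5.0 s after a 10x drop
small_buffer_after_drop_s = drain_seconds(50_000, 400_000)        # 0.1 s buffer: 1.0 s
```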

What can we do? This is where our trade-off triangle comes in.

- We can shrink the send buffer from 0.5 s to 0.1 s. But we lose throughput, because we'll "panic" and switch down more often. Moreover, because of how TCP works, if the send buffer holds less than one RTT's worth of data, you can't use the full bandwidth of the channel; throughput drops several-fold.

- We can enlarge the receive buffer. With a big buffer, we can smooth out irregularities in how data arrives. But, of course, we lose low latency, since we're setting a 5-second buffer up front.

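- We could sacrifice reliability and drop stale data. But TCP doesn't allow that: it guarantees delivery, so with TCP we can't trade away reliability.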

WebRTC

This is a C++ library that already incorporates this experience and runs over UDP. It builds for iOS and Android, is built into browsers, and supports HTML5. Since it was designed for P2P calls, latency is 0.1-1 seconds.

Downsides: it's a monolithic library with plenty of legacy code that can't be removed. Also, because it's focused on P2P calls, it prioritizes low latency. That might seem to be exactly what we want, but it sacrifices other parameters for it, and there are no settings to change the priorities.

Keep in mind that the library is client-oriented, designed for a conversation between two clients without a server. You'll have to find a third-party server or write your own.

So which to choose, RTMP or WebRTC? We implemented both protocols and tested them in different scenarios. On the graph, WebRTC has low latency but low throughput, while RTMP is the opposite, with a gap between them.

We wanted a protocol that fills this gap entirely and can work in both WebRTC-like and RTMP-like modes. We built it and called it OKMP.

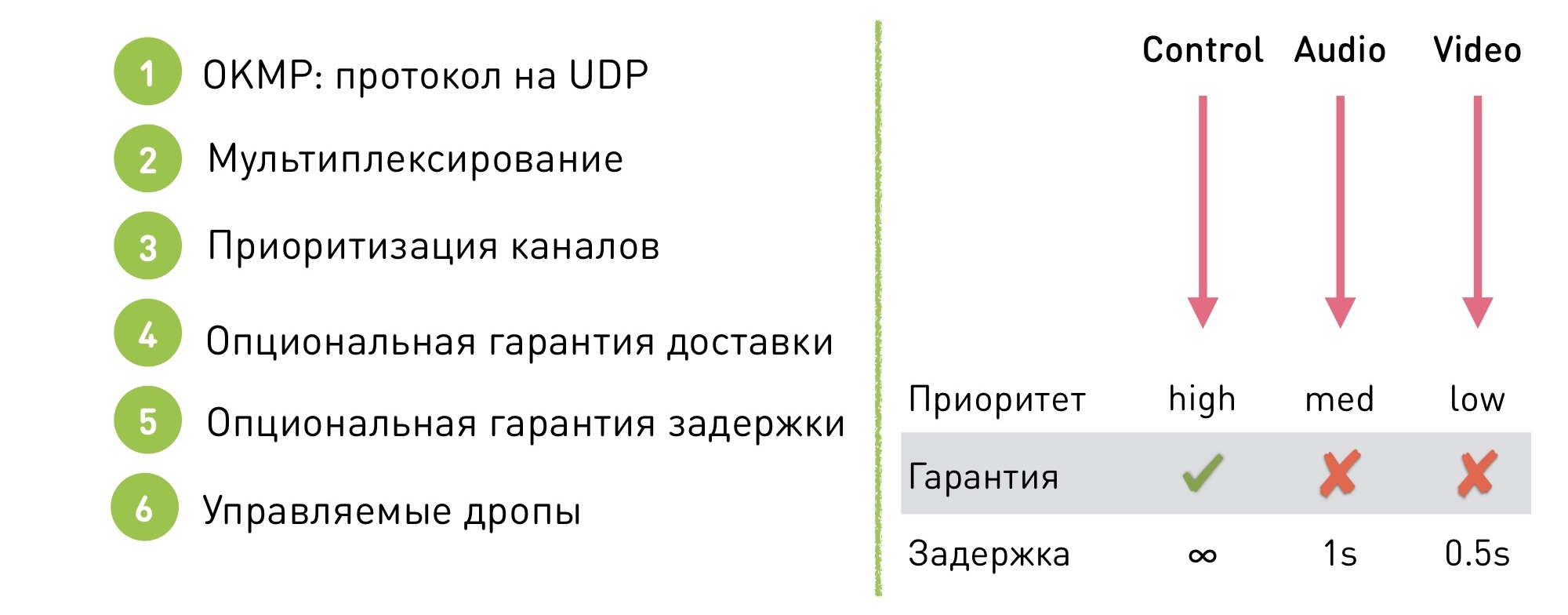

OKMP

This is a flexible protocol on top of UDP.

It supports multiplexing. This means there are several channels inside one session (in OK Live: control, audio, and video). Within each channel, data is delivered in a fixed order (though delivery itself isn't guaranteed), and there's no ordering between channels, since it doesn't matter.

What does this give us? First, it lets us prioritize channels. We can say that the control channel has high priority, audio medium, and video low. Video jitter and uneven video delivery are easier to mask, and users suffer less from video problems than from unpleasant stuttering audio.
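Channel prioritization can be sketched in Python as a strict-priority send queue: control first, then audio, then video, with FIFO order kept inside each channel. The priority values mirror the text; the scheduler itself is a hypothetical illustration, not OKMP's implementation.

```python
import heapq
import itertools

PRIORITY = {"control": 0, "audio": 1, "video": 2}  # lower value = sent first


class PriorityScheduler:
    """Strict-priority packet scheduler across multiplexed channels."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker: keeps FIFO order within a priority

    def enqueue(self, channel, payload):
        heapq.heappush(self._heap, (PRIORITY[channel], next(self._counter), channel, payload))

    def next_packet(self):
        """Return (channel, payload) of the next packet to send, or None if idle."""
        if not self._heap:
            return None
        _, _, channel, payload = heapq.heappop(self._heap)
        return channel, payload
```

Strict priority can starve video completely on a very slow link; a production scheduler would likely add weights or minimum shares, which this sketch leaves out.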

Our protocol also offers optional delivery guarantees: we can say that one channel works in TCP mode, with guaranteed delivery, while the others allow some drops.

This makes a latency guarantee possible: there's no latency guarantee on the TCP-mode channel, but on channels where drops are allowed we set a threshold, after which data starts being dropped and we stop delivering old data.

For example, it's 1 second for audio and 0.5 seconds for video. Why different thresholds? It's another prioritization mechanism: since smooth audio matters more to us, video gets dropped first.
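A minimal Python sketch of per-channel age thresholds on the sending side: the reliable control channel never drops, while audio and video drop anything older than 1 s and 0.5 s respectively. The thresholds come from the text; the queue structure and names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ChannelConfig:
    reliable: bool
    max_age_s: Optional[float]  # None: never drop on age


# Thresholds from the text; the structure itself is hypothetical.
CHANNELS = {
    "control": ChannelConfig(reliable=True, max_age_s=None),
    "audio": ChannelConfig(reliable=False, max_age_s=1.0),
    "video": ChannelConfig(reliable=False, max_age_s=0.5),
}


class ChannelSendQueue:
    def __init__(self, channel):
        self.config = CHANNELS[channel]
        self.queue = deque()  # (enqueue_time_s, payload)
        self.dropped = 0

    def push(self, payload, now_s):
        self.queue.append((now_s, payload))

    def pop_sendable(self, now_s):
        """Return the oldest payload still worth sending, dropping stale ones."""
        limit = self.config.max_age_s
        while self.queue:
            created_s, payload = self.queue.popleft()
            if self.config.reliable or limit is None or now_s - created_s <= limit:
                return payload
            self.dropped += 1  # too old: skip it instead of delaying newer data
        return None
```

Because video's threshold is shorter, a congested link sheds stale video before it ever touches audio, which is exactly the prioritization described above.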

Our protocol is flexibly configurable: there's no single operating mode, and we change settings on the fly to switch to the desired mode with no visible effect for the user. Why? For example, for video calls: if a video call starts during a stream, we quietly switch to low-latency mode, and then back to throughput mode for maximum quality.

Implementation Challenges

Of course, if you decide to write your own protocol over UDP, you'll run into some problems. TCP gives you mechanisms that on UDP you'll have to write yourself:

- Packetizing/depacketizing. Data has to be split into packets of about 1.5 KB to fit the MTU, and reassembled on the receiver.

- Reordering. Packets can arrive out of order, so they need sequence numbers to restore the original order on the receiver.

- Losses. Packets get lost and need to be recovered: the receiver tells the sender "I didn't get these packets", and the sender retransmits them.

- Flow control. If the receiver can't keep up with the rate at which we send data, data may start getting lost, and we must handle this. With TCP, the sending socket would block; with UDP it won't, so you have to detect yourself that the receiver isn't keeping up and reduce the amount of data you send.

- Congestion control. Similar, except this time the network itself is overloaded. If we keep sending packets into a congested network, we'll hurt not only our own connection but neighboring ones too.

- Encryption. You have to take care of encryption yourself.

- ... and much more
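The first three items fit together in one small Python sketch: split frames into sequence-numbered packets, put them back in order on the receiver, and report gaps so the sender can retransmit. The 1200-byte chunk size (leaving room under a ~1500-byte MTU for headers) and the packet tuple layout are assumptions. This version waits for every gap, like a reliable channel; a drop-allowed channel would also give up on a gap after its deadline, which is omitted here.

```python
MAX_PAYLOAD = 1200  # hypothetical chunk size that fits a ~1500-byte MTU with headers


def packetize(frame_id, data, first_seq):
    """Split one frame into packets: (seq, frame_id, chunk_index, chunk_count, chunk)."""
    chunks = [data[i:i + MAX_PAYLOAD] for i in range(0, len(data), MAX_PAYLOAD)] or [b""]
    return [(first_seq + i, frame_id, i, len(chunks), chunk)
            for i, chunk in enumerate(chunks)]


class Receiver:
    """Reorders packets by sequence number, reports gaps, and reassembles frames."""

    def __init__(self):
        self.next_seq = 0    # next sequence number to hand to the depacketizer
        self.pending = {}    # packets that arrived ahead of a gap, keyed by seq
        self.partial = {}    # frame_id -> {chunk_index: chunk}
        self.completed = []  # (frame_id, frame_bytes) in delivery order

    def on_packet(self, packet):
        seq = packet[0]
        if seq >= self.next_seq:  # ignore duplicates of already delivered packets
            self.pending[seq] = packet
        while self.next_seq in self.pending:  # deliver the contiguous run in order
            self._depacketize(self.pending.pop(self.next_seq))
            self.next_seq += 1

    def missing(self):
        """Sequence numbers to report back to the sender (a NACK list)."""
        if not self.pending:
            return []
        return [s for s in range(self.next_seq, max(self.pending))
                if s not in self.pending]

    def _depacketize(self, packet):
        _, frame_id, index, count, chunk = packet
        parts = self.partial.setdefault(frame_id, {})
        parts[index] = chunk
        if len(parts) == count:  # all chunks present: rebuild the frame
            self.completed.append((frame_id, b"".join(parts[i] for i in range(count))))
            del self.partial[frame_id]
```

In use, the receiver would periodically send `missing()` back to the sender, which retransmits those sequence numbers; flow control, congestion control, and encryption still have to be layered on top.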

OKMP vs RTMP

What did we get when we started using OKMP instead of RTMP?

- Average OK Live bitrate: +30%.

- Jitter (a measure of how unevenly packets arrive): 0% (unchanged on average).

- Audio jitter: -25%.

- Video jitter: +40%.

The changes in audio and video jitter demonstrate prioritization in our protocol: we give audio higher priority, and it started arriving more smoothly at the expense of video.

How to choose a protocol for streaming

If you need low latency - WebRTC.

If you want to work with external services, publish videos on third party services, you will have to use RTMP.

If you want a protocol tailored to your scenarios, implement your own.
