Dynamic in detail: the compiler's hidden games, a memory leak, and performance nuances

Prelude

Consider the following code:

    // Any native COM object
    var comType = Type.GetTypeFromCLSID(new Guid("E13B6688-3F39-11D0-96F6-00A0C9191601"));
    while (true)
    {
        dynamic com = Activator.CreateInstance(comType);
        // do some work
        Marshal.FinalReleaseComObject(com);
    }

The signature of the Marshal.FinalReleaseComObject method is as follows:

public static int FinalReleaseComObject(Object o)

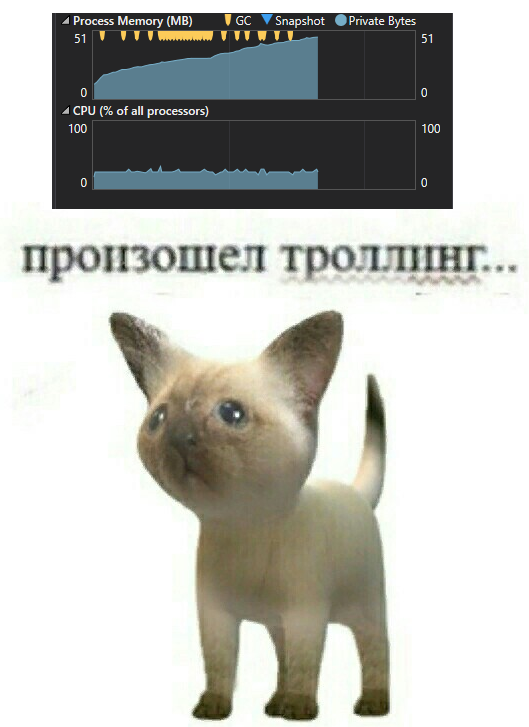

We create a simple COM object, do some work, and immediately release it. What could possibly go wrong? Yes, creating an object inside an infinite loop is not good practice, but the GC should take care of all the dirty work. The reality is slightly different:

To understand why memory leaks, you need to understand how dynamic works. There are already several articles on this subject on Habr, but they do not go into implementation details, so we will conduct our own research.

First, we will examine the dynamic mechanism in detail, then assemble what we have learned into a single picture, and finally discuss the causes of this leak and how to avoid it. Before diving into the code, let's clarify the initial data: which combination of factors leads to the leak?

The experiments

Perhaps creating many native COM objects is a bad idea in itself? Let's check:

    // Any native COM object
    var comType = Type.GetTypeFromCLSID(new Guid("E13B6688-3F39-11D0-96F6-00A0C9191601"));
    while (true)
    {
        dynamic com = Activator.CreateInstance(comType);
    }

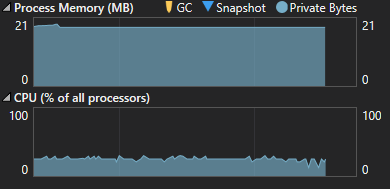

Everything is good this time:

Let's go back to the original version of the code, but change the type of object:

    // Any managed type, including managed COM
    var type = typeof(int);
    while (true)
    {
        dynamic com = Activator.CreateInstance(type);
        // do some work
        Marshal.FinalReleaseComObject(com);
    }

And again, no surprises:

Let's try the third option:

    // Simple COM object
    var comType = Type.GetTypeFromCLSID(new Guid("435356F9-F33F-403D-B475-1E4AB512FF95"));
    while (true)
    {
        dynamic com = Activator.CreateInstance(comType);
        // do some work
        Marshal.FinalReleaseComObject((object) com);
    }

Well now, we definitely should get the same behavior! Yes? No :(

The picture is similar if you declare com as object or work with managed COM. To summarize the experimental results:

- Instantiating native COM objects does not by itself lead to leaks: the GC successfully reclaims the memory

- When working with any managed class, no leaks occur

- When the object is explicitly cast to object, everything is fine too

Looking ahead, we can add to the first point that working with dynamic objects (calling methods or accessing properties) does not by itself cause leaks either. The conclusion suggests itself: a memory leak occurs when we pass a dynamic object containing native COM as a method argument without a "manual" type conversion.

We need to go deeper

It's time to recall what dynamic actually is:

Quick reference

C# 4.0 introduced a new type, dynamic. This type bypasses static type checking by the compiler. In most cases it behaves like the object type. At compile time, an element declared as dynamic is assumed to support any operation. This means you don't need to think about where the object comes from: the COM API, a dynamic language like IronPython, reflection, or somewhere else. However, if the code is invalid, the errors surface at run time.

For example, if the exampleMethod1 method in the following code has exactly one parameter, the compiler recognizes that the first call, ec.exampleMethod1(10, 4), is invalid because it passes two arguments. This results in a compilation error. The second call, dynamic_ec.exampleMethod1(10, 4), is not checked by the compiler because dynamic_ec is declared as dynamic, so there is no compilation error. Nevertheless, the error will not go unnoticed forever: it will be detected at run time.

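    static void Main(string[] args)
    {
        ExampleClass ec = new ExampleClass();
        // The following call causes a compiler error,
        // because exampleMethod1 has only one parameter.
        //ec.exampleMethod1(10, 4);

        dynamic dynamic_ec = new ExampleClass();
        // The following call is not flagged by the compiler,
        // but it fails at run time.
        dynamic_ec.exampleMethod1(10, 4);

        // The following calls do not cause compiler errors either,
        // whether or not suitable methods exist.
        dynamic_ec.someMethod("some argument", 7, null);
        dynamic_ec.nonexistentMethod();
    }

    class ExampleClass
    {
        public ExampleClass() { }
        public ExampleClass(int v) { }
        public void exampleMethod1(int i) { }
        public void exampleMethod2(string str) { }
    }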
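To see the run-time failure in isolation, here is a minimal sketch that wraps the invalid call in a try/catch. The names LateErrorSketch and FailsAtRunTime are made up for this illustration; the only assumption is that the binder reports the argument-count mismatch as a RuntimeBinderException, which is the exception type mentioned later in this article.

```csharp
using System;
using Microsoft.CSharp.RuntimeBinder;

// Hypothetical helper, not part of the original article.
public static class LateErrorSketch
{
    public sealed class ExampleClass
    {
        public void exampleMethod1(int i) { }
    }

    // Returns true if the two-argument call is rejected at run time.
    public static bool FailsAtRunTime()
    {
        dynamic dynamic_ec = new ExampleClass();
        try
        {
            // Compiles fine: the compiler does not check calls on dynamic.
            dynamic_ec.exampleMethod1(10, 4);
            return false;
        }
        catch (RuntimeBinderException)
        {
            // The binder found no overload that takes two arguments.
            return true;
        }
    }
}
```

The code compiles without warnings about the call itself; the mismatch only shows up when FailsAtRunTime is executed.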

Code that uses dynamic variables undergoes significant changes during compilation. This code:

    dynamic com = Activator.CreateInstance(comType);
    Marshal.FinalReleaseComObject(com);

Turns into the following:

    object instance = Activator.CreateInstance(typeFromClsid);
    // ISSUE: reference to a compiler-generated field
    if (Foo.o__0.p__0 == null)
    {
        // ISSUE: reference to a compiler-generated field
        Foo.o__0.p__0 = CallSite<Action<CallSite, Type, object>>.Create(
            Binder.InvokeMember(
                CSharpBinderFlags.ResultDiscarded,
                "FinalReleaseComObject",
                (IEnumerable<Type>) null,
                typeof (Foo),
                (IEnumerable<CSharpArgumentInfo>) new CSharpArgumentInfo[2]
                {
                    CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.UseCompileTimeType | CSharpArgumentInfoFlags.IsStaticType, (string) null),
                    CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, (string) null)
                }));
    }
    // ISSUE: reference to a compiler-generated field
    // ISSUE: reference to a compiler-generated field
    Foo.o__0.p__0.Target((CallSite) Foo.o__0.p__0, typeof (Marshal), instance);

Where o__0 is the generated static class, and p__0 is the static field in it:

    private class o__0
    {
        public static CallSite<Action<CallSite, Type, object>> p__0;
    }

Note: a separate CallSite field is created for each interaction with dynamic. As we will see later, this is needed to optimize performance.

Note that no mention of dynamic is left: our object is now stored in a variable of type object. Let's walk through the generated code. First, a binding is created that describes what we are doing and on what:

    Binder.InvokeMember(
        CSharpBinderFlags.ResultDiscarded,
        "FinalReleaseComObject",
        (IEnumerable<Type>) null,
        typeof (Foo),
        (IEnumerable<CSharpArgumentInfo>) new CSharpArgumentInfo[2]
        {
            CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.UseCompileTimeType | CSharpArgumentInfoFlags.IsStaticType, (string) null),
            CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, (string) null)
        })

This is a description of our dynamic operation. Let me remind you that we pass a dynamic variable to the FinalReleaseComObject method.

- CSharpBinderFlags.ResultDiscarded - the result of the method call is not used afterwards

- "FinalReleaseComObject" - the name of the called method

- typeof(Foo) - the context of the operation: the type from which the call is made

CSharpArgumentInfo - description of the binding parameters. In our case:

- CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.UseCompileTimeType | CSharpArgumentInfoFlags.IsStaticType, (string) null) - describes the first parameter, the Marshal class: it is static and its compile-time type should be used when binding

- CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, (string) null) - describes the method argument; usually there is no additional information

If we were not calling a method but, for example, reading a property of a dynamic object, there would be only one CSharpArgumentInfo, describing the dynamic object itself.
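The property case can be sketched by hand with the same building blocks the compiler uses. This is an illustration, not decompiled output: the class name GetMemberSketch and the method ReadLength are hypothetical, and reading Length from a string is chosen only because it works on any platform. Binder.GetMember, CSharpArgumentInfo.Create and CallSite<T>.Create are the real APIs from Microsoft.CSharp.RuntimeBinder and System.Runtime.CompilerServices.

```csharp
using System;
using System.Runtime.CompilerServices;
using Microsoft.CSharp.RuntimeBinder;

// Hypothetical helper, not part of the original article.
public static class GetMemberSketch
{
    // Roughly what "((dynamic) target).Length" does behind the scenes.
    public static object ReadLength(object target)
    {
        // A single CSharpArgumentInfo: it describes the dynamic object itself.
        var binder = Binder.GetMember(
            CSharpBinderFlags.None,
            "Length",
            typeof(GetMemberSketch),
            new[] { CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, null) });

        var site = CallSite<Func<CallSite, object, object>>.Create(binder);

        // The first call goes through Update, which binds the operation.
        return site.Target(site, target);
    }
}
```

Unlike compiler-generated code, this sketch creates a new call site on every call, so nothing is cached between calls; the compiler stores the site in a static field instead.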

CallSite is a wrapper over a dynamic expression. It contains two important fields for us:

- public T Update

- public T Target

It can be seen from the generated code that when any operation is performed, Target is called with parameters describing it:

Foo.o__0.p__0.Target((CallSite) Foo.o__0.p__0, typeof (Marshal), instance);

Together with the CSharpArgumentInfo described above, this code means: call the FinalReleaseComObject method on the static Marshal class with instance as the argument. At the time of the first call, Target holds the same delegate as Update. The Update delegate is responsible for two important tasks:

- Binding a dynamic operation to a static one (the binding mechanism itself is beyond the scope of this article)

- Cache generation

We are interested in the second point. When working with a dynamic object, the validity of the operation has to be checked on every call. This is a fairly expensive task, so it makes sense to cache the results of such checks. For a method call with an argument, we need to remember the following:

- The type on which the method is called

- The type of object that is passed in by the parameter (to be sure that it can be cast to the parameter type)

- Whether the operation is valid

Then, when Target is called again, we do not need to perform the relatively expensive binding: we just compare the types and, if they match, call the target function. To achieve this, an ExpressionTree is created for each dynamic operation; it stores the restrictions and the target function to which the dynamic expression was bound.

This function can be of two types:

- A binding error: for example, a nonexistent method is called on a dynamic object, or a dynamic object cannot be converted to the type of the parameter it is passed to. In that case an exception such as Microsoft.CSharp.RuntimeBinderException: 'NoSuchMember' has to be thrown

- The call is valid: then the required action is simply performed

This ExpressionTree is built while the Update delegate runs and is stored in Target. Target is the L0 cache; we will talk more about the cache later.

So, Target stores the last ExpressionTree generated by the Update delegate. Let's see what such a rule looks like, using a managed type passed to the Boo method as an example:

    public class Foo
    {
        public void Test()
        {
            var type = typeof(int);
            dynamic instance = Activator.CreateInstance(type);
            Boo(instance);
        }

        public void Boo(object o) { }
    }

    .Lambda CallSite.Target<System.Action`3[Actionsss.CallSite,ConsoleApp12.Foo,System.Object]>(
        Actionsss.CallSite $$site,
        ConsoleApp12.Foo $$arg0,
        System.Object $$arg1) {
        .Block() {
            .If ($$arg0 .TypeEqual ConsoleApp12.Foo && $$arg1 .TypeEqual System.Int32) {
                .Return #Label1 { .Block() {
                    .Call $$arg0.Boo((System.Object)((System.Int32)$$arg1));
                    .Default(System.Object)
                } }
            } .Else {
                .Default(System.Void)
            };
            .Block() {
                .Constant<Actionsss.Ast.Expression>(IIF((($arg0 TypeEqual Foo) AndAlso ($arg1 TypeEqual Int32)), returnUnamedLabel_0 ({ ... }) , default(Void)));
                .Label
                .LabelTarget CallSiteBinder.UpdateLabel:
            };
            .Label
                .If (
                    .Call Actionsss.CallSiteOps.SetNotMatched($$site)
                ) {
                    .Default(System.Void)
                } .Else {
                    .Invoke (((Actionsss.CallSite`1[System.Action`3[Actionsss.CallSite,ConsoleApp12.Foo,System.Object]])$$site).Update)(
                        $$site,
                        $$arg0,
                        $$arg1)
                }
            .LabelTarget #Label1:
        }
    }

The most important block for us:

.If ($$arg0 .TypeEqual ConsoleApp12.Foo && $$arg1 .TypeEqual System.Int32)

$$arg0 and $$arg1 are the arguments with which Target is called:

Foo.o__0.p__0.Target((CallSite) Foo.o__0.p__0, this, instance);

In plain language, this means the following:

We have already verified that if the first argument is of type Foo and the second is Int32, then Boo((object) $$arg1) can be called safely.

.Return #Label1 { .Block() { .Call $$arg0.Boo((System.Object)((System.Int32)$$arg1)); .Default(System.Object) }

Note: in case of a binding error, the Label1 block looks something like this:

.Return #Label1 { .Throw .New Microsoft.CSharp.RuntimeBinderException("NoSuchMember")

These checks are called restrictions. There are two kinds of restrictions: on the type of the object and on the specific instance of the object (the object must be exactly the same one). If at least one restriction fails, the dynamic expression has to be re-checked for validity, which is done by calling the Update delegate. Following the scheme we already know, it performs the binding with the new types and saves the new ExpressionTree in Target.
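The two kinds of restrictions can be tried out directly through the public System.Dynamic.BindingRestrictions class. The sketch below compiles each restriction into a standalone predicate; the names RestrictionSketch, CompileTypeRestriction and CompileInstanceRestriction are hypothetical, and using these restrictions outside a real binder is done here purely for illustration.

```csharp
using System;
using System.Dynamic;
using System.Linq.Expressions;

// Hypothetical helper, not part of the original article.
public static class RestrictionSketch
{
    // Predicate that is true only when the argument has exactly the given type.
    public static Func<object, bool> CompileTypeRestriction(Type type)
    {
        ParameterExpression arg = Expression.Parameter(typeof(object), "arg");
        Expression test = BindingRestrictions.GetTypeRestriction(arg, type).ToExpression();
        return Expression.Lambda<Func<object, bool>>(test, arg).Compile();
    }

    // Predicate that is true only for this very object (reference identity).
    public static Func<object, bool> CompileInstanceRestriction(object instance)
    {
        ParameterExpression arg = Expression.Parameter(typeof(object), "arg");
        Expression test = BindingRestrictions.GetInstanceRestriction(arg, instance).ToExpression();
        return Expression.Lambda<Func<object, bool>>(test, arg).Compile();
    }
}
```

A type restriction built for int keeps matching every new boxed int, while an instance restriction built for one object rejects any other object of the same type. That difference is what decides whether a rule stored in Target can be reused.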

Cache

We have already established that Target is the L0 cache. Each time Target is called, the first thing that happens is a check of the restrictions already stored in it. If the restrictions fail and a new binding is generated, the old rule goes to both L1 and L2. Later, on an L0 miss, the rules in L1 and L2 are searched until a suitable one is found.

- L1: the last ten rules that have left L0 (stored directly in the CallSite)

- L2: the last 128 rules created using a specific binder instance (a CallSiteBinder, unique to each CallSite)

Now we can finally put these details together and describe, as an algorithm, what happens when Foo.Bar(someDynamicObject) is called:

1. A binder is created that remembers the context and the called method at the level of their signatures

2. The first time the operation is called, an ExpressionTree is created, which stores:

2.1 Restrictions. In this case, two restrictions on the types of the current binding arguments

2.2 The target function: either throwing an exception (impossible in this case, since any dynamic value can be cast to object) or a call to the Bar method

3. The resulting ExpressionTree is compiled and executed

4. When the operation is called again, two outcomes are possible:

4.1 The restrictions pass: Bar is simply called

4.2 The restrictions fail: step 2 is repeated for the new binding arguments
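The steps above can be sketched as a toy model. This is deliberately not the DLR implementation: ToyCallSite, BindCount and Invoke are invented names, there is a single type restriction, and there are no L1/L2 caches. It only shows the control flow: check the stored restriction, call the stored rule on a hit, rebind on a miss.

```csharp
using System;

// Toy model of a call site with only an L0 cache (an assumption-laden sketch).
public sealed class ToyCallSite
{
    private Type _restrictedType;          // the stored "restriction"
    private Func<object, string> _rule;    // the stored "target function"

    // How many times the expensive "binding" step has run.
    public int BindCount { get; private set; }

    public string Invoke(object arg)
    {
        // Step 4.1: the restriction passes, so the cached rule is called directly.
        if (_rule != null && arg.GetType() == _restrictedType)
        {
            return _rule(arg);
        }

        // Steps 2 and 4.2: bind for the new argument type and replace the rule.
        BindCount++;
        Type boundType = arg.GetType();
        _restrictedType = boundType;
        _rule = value => "bound for " + boundType.Name;
        return _rule(arg);
    }
}
```

Calling Invoke repeatedly with values of one type binds once; changing the type of the argument triggers another bind. With an instance restriction in place of the type check, every new object would trigger a rebind.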

So, using a managed type as an example, it has become roughly clear how dynamic works from the inside. In the case described we never miss the cache, since the types are always the same *, so Update is called exactly once, when the CallSite is initialized. After that, each call only checks the restrictions and immediately invokes the target function. This agrees well with our memory observations: no computation, no leaks.

* For this reason the compiler generates a separate CallSite for each dynamic operation: the probability of missing the L0 cache is greatly reduced

It's time to find out how this scheme differs in the case of native COM objects. Let's take a look at the ExpressionTree:

    .Lambda CallSite.Target<System.Action`3[Actionsss.CallSite,ConsoleApp12.Foo,System.Object]>(
        Actionsss.CallSite $$site,
        ConsoleApp12.Foo $$arg0,
        System.Object $$arg1) {
        .Block() {
            .If ($$arg0 .TypeEqual ConsoleApp12.Foo && .Block(System.Object $var1) {
                $var1 = .Constant<System.WeakReference>(System.WeakReference).Target;
                $var1 != null && (System.Object)$$arg1 == $var1
            }) {
                .Return #Label1 { .Block() {
                    .Call $$arg0.Boo((System.__ComObject)$$arg1);
                    .Default(System.Object)
                } }
            } .Else {
                .Default(System.Void)
            };
            .Block() {
                .Constant<Actionsss.Ast.Expression>(IIF((($arg0 TypeEqual Foo) AndAlso {var Param_0; ... }), returnUnamedLabel_1 ({ ... }) , default(Void)));
                .Label
                .LabelTarget CallSiteBinder.UpdateLabel:
            };
            .Label
                .If (
                    .Call Actionsss.CallSiteOps.SetNotMatched($$site)
                ) {
                    .Default(System.Void)
                } .Else {
                    .Invoke (((Actionsss.CallSite`1[System.Action`3[Actionsss.CallSite,ConsoleApp12.Foo,System.Object]])$$site).Update)(
                        $$site,
                        $$arg0,
                        $$arg1)
                }
            .LabelTarget #Label1:
        }
    }

It can be seen that the difference is only in the second restriction:

    .If ($$arg0 .TypeEqual ConsoleApp12.Foo && .Block(System.Object $var1) {
        $var1 = .Constant<System.WeakReference>(System.WeakReference).Target;
        $var1 != null && (System.Object)$$arg1 == $var1
    })

Whereas for managed code we had two type restrictions, here the second restriction checks instance identity through a WeakReference.

Note: besides COM objects, instance restrictions are also used for TransparentProxy.

In practice, given what we know about the cache, this means that every time we recreate the COM object in the loop, we miss the L0 cache (and L1/L2 too, because the old rules stored there refer to old instances). The first hypothesis that comes to mind is that the rule cache is leaking. But the code there is quite simple and everything is in order: old rules are removed correctly. Moreover, using a WeakReference in the ExpressionTree does not prevent the GC from collecting unneeded objects.

The mechanism for saving rules in the L1 cache:

    const int MaxRules = 10;

    internal void AddRule(T newRule)
    {
        T[] rules = Rules;
        if (rules == null)
        {
            Rules = new[] { newRule };
            return;
        }

        T[] temp;
        if (rules.Length < (MaxRules - 1))
        {
            temp = new T[rules.Length + 1];
            Array.Copy(rules, 0, temp, 1, rules.Length);
        }
        else
        {
            temp = new T[MaxRules];
            Array.Copy(rules, 0, temp, 1, MaxRules - 1);
        }
        temp[0] = newRule;
        Rules = temp;
    }

So what's the deal? Let's try to refine the hypothesis: a memory leak occurs somewhere when binding a COM object.
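To check that old rules really are dropped, the same logic can be run in isolation. The sketch below copies AddRule into a standalone class, with string standing in for the rule delegate type T; the class name RuleListSketch is hypothetical and exists only for this experiment.

```csharp
using System;

// Standalone copy of the L1 logic above; strings stand in for rule delegates.
public sealed class RuleListSketch
{
    private const int MaxRules = 10;

    public string[] Rules { get; private set; }

    public void AddRule(string newRule)
    {
        string[] rules = Rules;
        if (rules == null)
        {
            Rules = new[] { newRule };
            return;
        }

        string[] temp;
        if (rules.Length < (MaxRules - 1))
        {
            // Still growing: keep every old rule, shifted one slot to the right.
            temp = new string[rules.Length + 1];
            Array.Copy(rules, 0, temp, 1, rules.Length);
        }
        else
        {
            // Full: keep only the nine most recent rules, dropping the rest.
            temp = new string[MaxRules];
            Array.Copy(rules, 0, temp, 1, MaxRules - 1);
        }
        temp[0] = newRule; // the newest rule always goes first
        Rules = temp;
    }
}
```

After adding twelve rules named r1 to r12, the array holds ten entries, from r12 at index 0 down to r3 at index 9; r1 and r2 are no longer referenced, so this cache cannot grow without bound.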

Experiments, part 2

Let's move on from speculation to experiments. First, let's reproduce by hand what the compiler does for us:

    // Simple COM object
    var comType = Type.GetTypeFromCLSID(new Guid("435356F9-F33F-403D-B475-1E4AB512FF95"));
    var autogeneratedBinder = Binder.InvokeMember(
        CSharpBinderFlags.ResultDiscarded,
        "Boo",
        null,
        typeof(Foo),
        new CSharpArgumentInfo[2]
        {
            CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.UseCompileTimeType, null),
            CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, null)
        });
    var callSite = CallSite<Action<CallSite, Foo, object>>.Create(autogeneratedBinder);
    while (true)
    {
        object instance = Activator.CreateInstance(comType);
        callSite.Target(callSite, this, instance);
    }

We check:

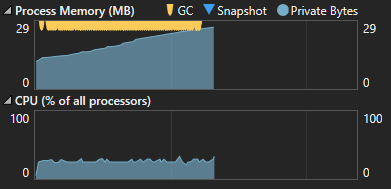

The leak is still there, as expected. But what is the reason? After studying the code of the binders (which we leave out of scope here), it is clear that the only thing the type of our object affects is the kind of restriction. Perhaps the problem is not in COM objects but in the binder? There isn't much choice, so let's provoke repeated binding for a managed type:

    while (true)
    {
        object instance = Activator.CreateInstance(typeof(int));
        var autogeneratedBinder = Binder.InvokeMember(
            CSharpBinderFlags.ResultDiscarded,
            "Boo",
            null,
            typeof(Foo),
            new CSharpArgumentInfo[2]
            {
                CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.UseCompileTimeType, null),
                CSharpArgumentInfo.Create(CSharpArgumentInfoFlags.None, null)
            });
        var callSite = CallSite<Action<CallSite, Foo, object>>.Create(autogeneratedBinder);
        callSite.Target(callSite, this, instance);
    }

Wow! It seems we've caught it. The problem is not the COM object at all, as it seemed at first; it is just that, because of the instance restrictions, this is the only case in which binding happens many times inside our loop. In all other cases the L0 cache was hit and binding ran only once.

Findings

Memory leak

If you work with dynamic variables that contain native COM objects or TransparentProxy, never pass them as method arguments. If you still need to, use an explicit cast to object, and the compiler will leave you alone.

Wrong:

    dynamic com = Activator.CreateInstance(comType);
    // do some work
    Marshal.FinalReleaseComObject(com);

Right:

    dynamic com = Activator.CreateInstance(comType);
    // do some work
    Marshal.FinalReleaseComObject((object) com);

As an added precaution, try to instantiate such objects as rarely as possible. This applies to all versions of the .NET Framework. For now it is not very relevant for .NET Core, since there is no support for dynamic COM objects there.

Performance

It is in your interest that cache misses occur as rarely as possible, since then there is no need to look for a suitable rule in the higher-level caches. Misses in the L0 cache occur mainly when the type of the dynamic object does not match the stored restrictions.

    dynamic com = GetSomeObject();

    public object GetSomeObject()
    {
        //:
        //:
    }

In practice, however, you probably will not notice a difference in performance unless the number of calls to this function runs into the millions or the variety of types is unusually large. The cost of an L0 cache miss is as follows, where N is the number of types:

- N < 10. On a miss, only the existing L1 cache rules are iterated over

- 10 < N < 128. L1 and L2 are iterated over (at most 10 and N iterations). An array of 10 elements is created and filled

- N > 128. L1 and L2 are iterated over. Arrays of 10 and 128 elements are created and filled. On an L2 miss, binding is performed again

In the second and third cases, the load on the GC will increase.

Conclusion

Unfortunately, we did not find the real cause of the memory leak; that will require a separate study of the binder. Fortunately, WinDbg provides a hint for further investigation: something bad happens in the DLR. The first column is the number of objects

Bonus

Why does casting to object explicitly prevent a leak?

Any type can be cast to object , so the operation ceases to be dynamic.
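The effect of the cast is easy to observe with overload resolution on a managed type. In this sketch (CastSketch and its methods are hypothetical names), the dynamic call is bound at run time against the actual type of the value, while the call with the cast is bound at compile time against object, so no CallSite is involved.

```csharp
// Hypothetical helper, not part of the original article.
public static class CastSketch
{
    public static string Describe(object o) => "object overload";
    public static string Describe(int i) => "int overload";

    public static string CallWithDynamic()
    {
        dynamic d = 5;
        // Dynamic operation: the overload is chosen at run time from the actual type (int).
        return Describe(d);
    }

    public static string CallWithCast()
    {
        dynamic d = 5;
        // Static operation: the argument has compile-time type object.
        return Describe((object) d);
    }
}
```

CallWithDynamic returns "int overload" and CallWithCast returns "object overload". The same mechanism is why Marshal.FinalReleaseComObject((object) com) never reaches the binder.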

Why are there no leaks when working with fields and methods of a COM object?

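This is what the ExpressionTree looks like for field access. The restriction here is only an IsComObject check rather than a comparison with a specific instance, so the same rule matches every new COM object:

    .If (
        .Call System.Dynamic.ComObject.IsComObject($$arg0)
    ) {
        .Return #Label1 { .Dynamic GetMember ComMarks(.Call System.Dynamic2.ComObject.ObjectToComObject($$arg0)) }
    }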
