We already talked about Tarantool Cartridge , which allows you to develop distributed applications and pack them. All that's left is to learn how to deploy these applications and manage them. Do not worry, we have provided for everything! We brought together all the best practices for working with Tarantool Cartridge and wrote an ansible role that decomposes the package into servers, launches the instances, combines them into a cluster, configures authorization, starts vshard, enables automatic failover and patches the cluster config.

Interesting? Then I ask for a cut, we will tell and show everything.

Let's start with an example.

We will consider only part of the functionality of our role. You can always find a full description of all its features and input parameters in the documentation . But it’s better to try once than see a hundred times, so let's install a small application.

Tarantool Cartridge has a tutorial on creating a small Cartridge application that stores information about bank customers and their accounts, and also provides an API for managing data through HTTP. For this, the application describes two possible roles: api

and storage

, which can be assigned to instances.

Cartridge itself does not say anything about how to start processes, it only provides the ability to configure already running instances. The user must do the rest: decompose the configuration files, start the services and configure the topology. But we will not do all this, Ansible will do it for us.

From words to deeds

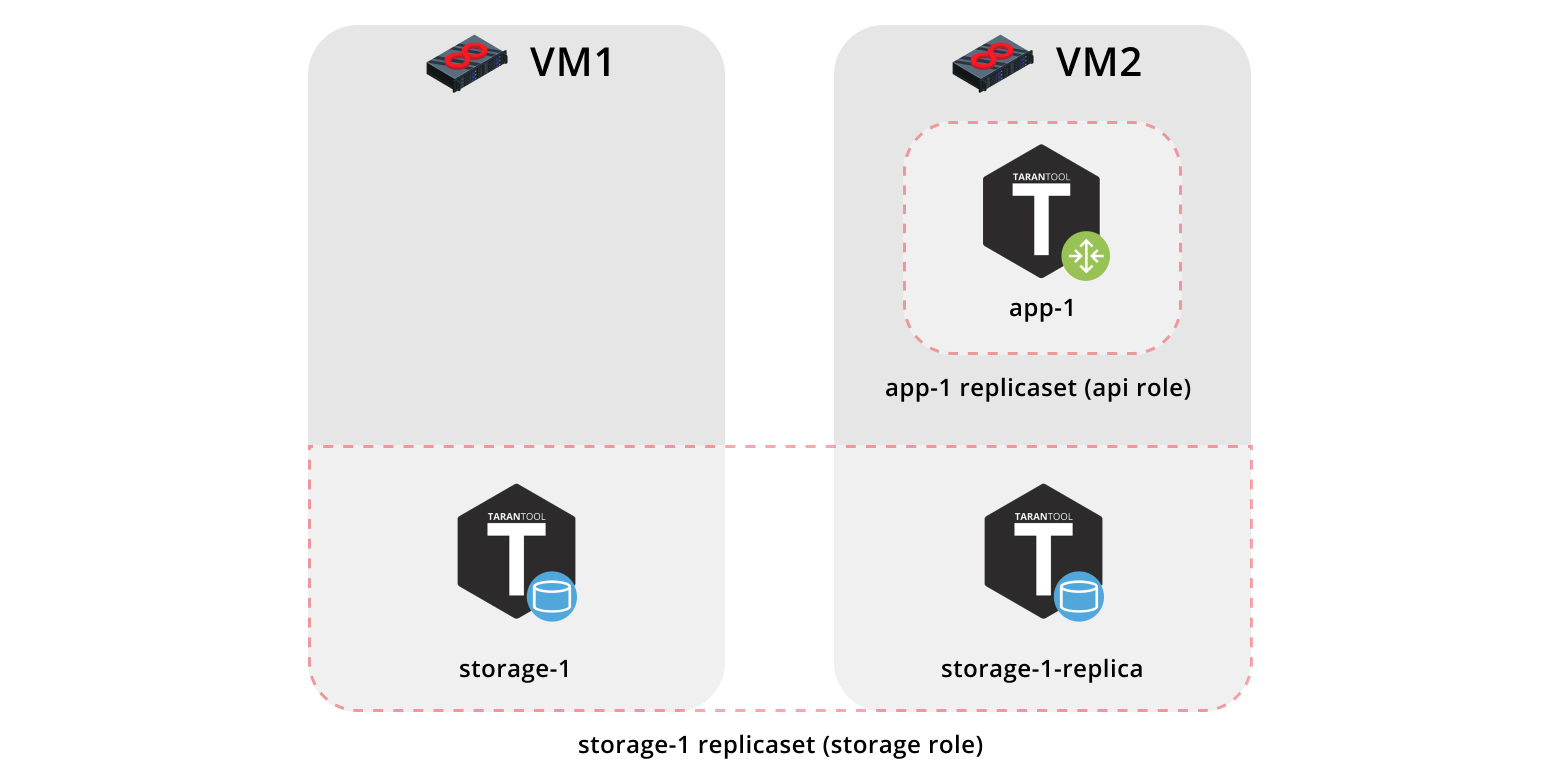

So, let's install our application on two virtual machines and set up a simple topology:

- The replicaet

app-1

will implement theapi

role, which includes thevshard-router

role. There will be only one instance. - The

storage-1

replicaset implements thestorage

role (and simultaneouslyvshard-storage

), here we add two instances from different machines.

To run the example, we need Vagrant and Ansible (version 2.8 or later).

The role itself is in Ansible Galaxy . This is a repository that allows you to share your best practices and use ready-made roles.

We clone the repository with an example:

$ git clone https://github.com/dokshina/deploy-tarantool-cartridge-app.git $ cd deploy-tarantool-cartridge-app && git checkout 1.0.0

Raise virtual machines:

$ vagrant up

Install the Tarantool Cartridge ansible role:

$ ansible-galaxy install tarantool.cartridge,1.0.1

Run the installed role:

$ ansible-playbook -i hosts.yml playbook.yml

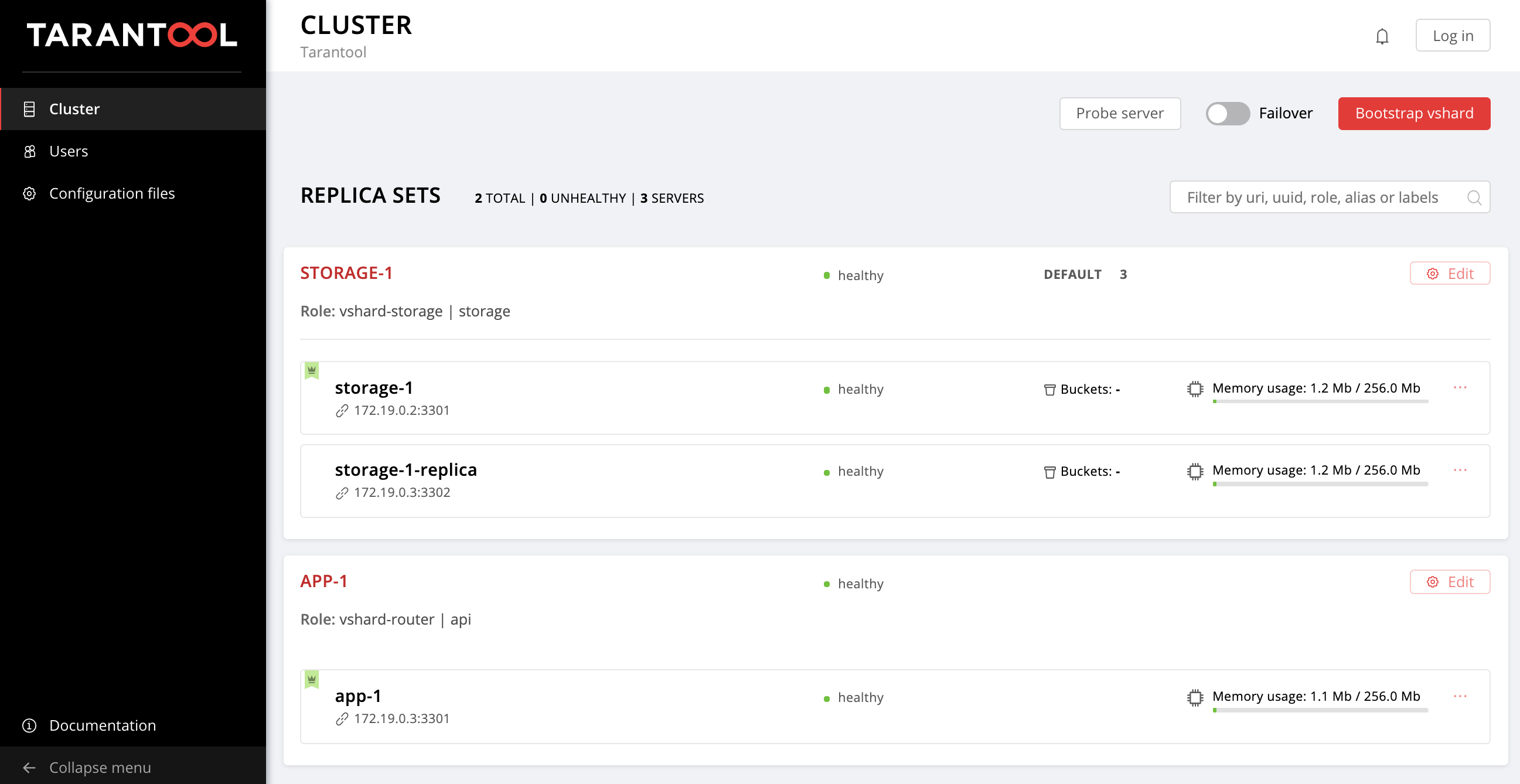

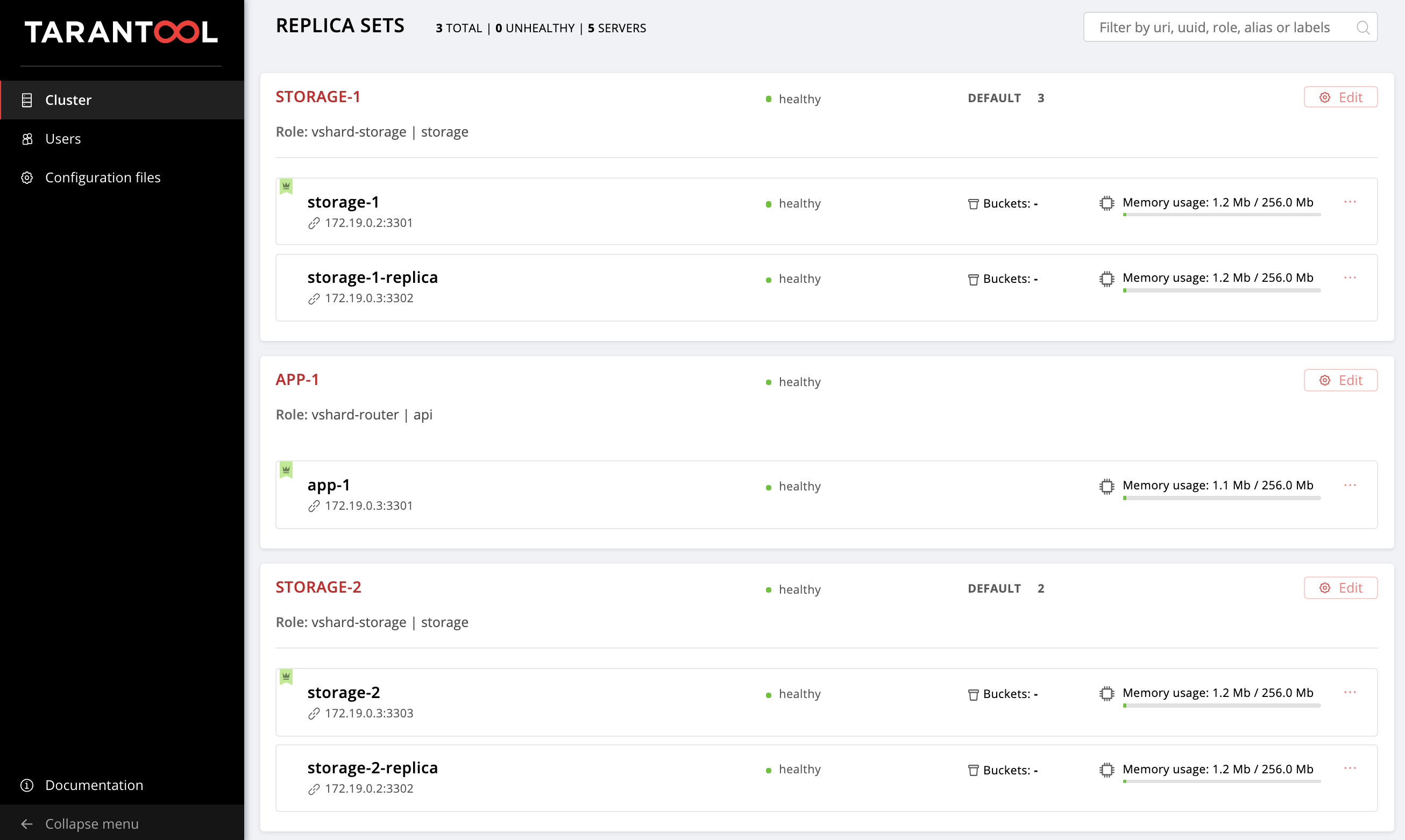

We are waiting for the completion of the playbook, go to http: // localhost: 8181 / admin / cluster / dashboard and enjoy the result:

You can pour data. Cool, right?

Now let's figure out how to work with this, and at the same time add another replica set to the topology.

Start to understand

So what happened?

We picked up two virtual machines and launched the ansible playbook that configured our cluster. Let's look at the contents of the playbook.yml

file:

--- - name: Deploy my Tarantool Cartridge app hosts: all become: true become_user: root tasks: - name: Import Tarantool Cartridge role import_role: name: tarantool.cartridge

Nothing interesting happens here, we launch an ansible role called tarantool.cartridge

.

All the most important (namely, cluster configuration) is in the hosts.yml

inventory file:

--- all: vars: # common cluster variables cartridge_app_name: getting-started-app cartridge_package_path: ./getting-started-app-1.0.0-0.rpm # path to package cartridge_cluster_cookie: app-default-cookie # cluster cookie # common ssh options ansible_ssh_private_key_file: ~/.vagrant.d/insecure_private_key ansible_ssh_common_args: '-o IdentitiesOnly=yes -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no' # INSTANCES hosts: storage-1: config: advertise_uri: '172.19.0.2:3301' http_port: 8181 app-1: config: advertise_uri: '172.19.0.3:3301' http_port: 8182 storage-1-replica: config: advertise_uri: '172.19.0.3:3302' http_port: 8183 children: # GROUP INSTANCES BY MACHINES host1: vars: # first machine connection options ansible_host: 172.19.0.2 ansible_user: vagrant hosts: # instances to be started on the first machine storage-1: host2: vars: # second machine connection options ansible_host: 172.19.0.3 ansible_user: vagrant hosts: # instances to be started on the second machine app-1: storage-1-replica: # GROUP INSTANCES BY REPLICA SETS replicaset_app_1: vars: # replica set configuration replicaset_alias: app-1 failover_priority: - app-1 # leader roles: - 'api' hosts: # replica set instances app-1: replicaset_storage_1: vars: # replica set configuration replicaset_alias: storage-1 weight: 3 failover_priority: - storage-1 # leader - storage-1-replica roles: - 'storage' hosts: # replica set instances storage-1: storage-1-replica:

All we need to do is learn how to manage instances and replicasets by modifying the contents of this file. Further we will add new sections to it. In order not to get confused where to add them, you can peek at the final version of this file, hosts.updated.yml

, which is located in the repository with an example.

Instance management

In terms of Ansible, each instance is a host (not to be confused with an iron server), i.e. An infrastructure node that Ansible will manage. For each host, we can specify connection parameters (such as ansible_host

and ansible_user

), as well as the instance configuration. Description of instances is in the hosts

section.

Consider the configuration of the storage-1

instance:

all: vars: ... # INSTANCES hosts: storage-1: config: advertise_uri: '172.19.0.2:3301' http_port: 8181 ...

In the config

variable, we specified the instance parameters - advertise URI

and HTTP port

.

Below are the app-1

and storage-1-replica

instance parameters.

We need to provide Ansible connection parameters for each instance. It seems logical to group instances into virtual machine groups. For this, instances are grouped into host1

and host2

, and in each group in the vars

section the values ansible_host

and ansible_user

for one vars

are indicated. And in the hosts

section there are hosts (they are instances) that are included in this group:

all: vars: ... hosts: ... children: # GROUP INSTANCES BY MACHINES host1: vars: # first machine connection options ansible_host: 172.19.0.2 ansible_user: vagrant hosts: # instances to be started on the first machine storage-1: host2: vars: # second machine connection options ansible_host: 172.19.0.3 ansible_user: vagrant hosts: # instances to be started on the second machine app-1: storage-1-replica:

We begin to change hosts.yml

. Add two more instances, storage-2-replica

on the first virtual machine and storage-2

on the second:

all: vars: ... # INSTANCES hosts: ... storage-2: # <== config: advertise_uri: '172.19.0.3:3303' http_port: 8184 storage-2-replica: # <== config: advertise_uri: '172.19.0.2:3302' http_port: 8185 children: # GROUP INSTANCES BY MACHINES host1: vars: ... hosts: # instances to be started on the first machine storage-1: storage-2-replica: # <== host2: vars: ... hosts: # instances to be started on the second machine app-1: storage-1-replica: storage-2: # <== ...

Run the ansible playbook:

$ ansible-playbook -i hosts.yml \ --limit storage-2,storage-2-replica \ playbook.yml

Pay attention to the --limit

option. Since each instance of the cluster is a host in terms of Ansible, we can explicitly indicate which instances should be configured when playing a playbook.

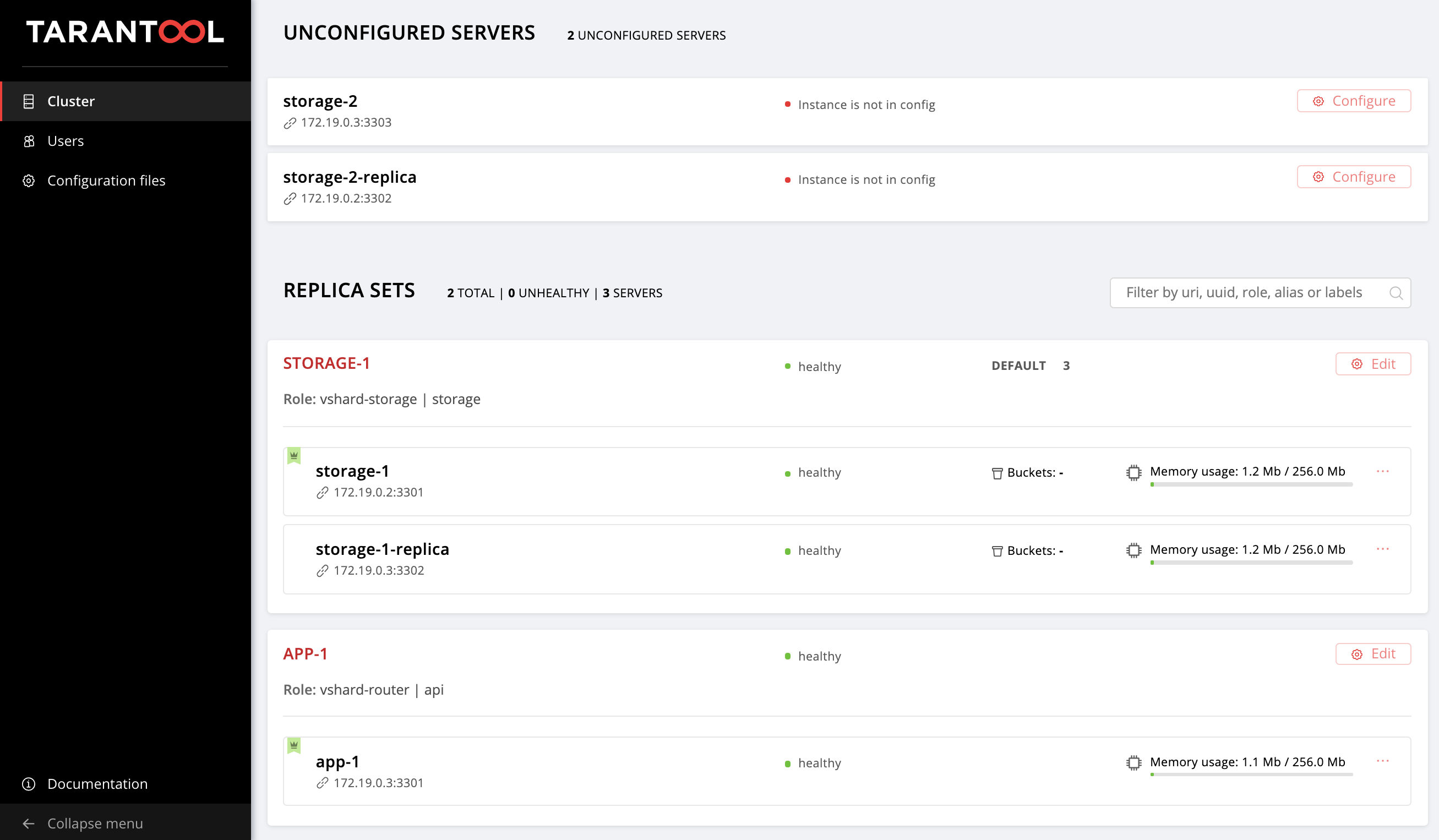

Again, go to the Web UI http: // localhost: 8181 / admin / cluster / dashboard and observe our new instances:

We will not stop there and master the management of topology.

Topology management

Combine our new instances into storage-2

replicaset. Add a new group replicaset_storage_2

and describe the replicaset parameters in its variables by analogy with replicaset_storage_1

. In the hosts

section, we indicate which instances will be included in this group (that is, our replica set):

--- all: vars: ... hosts: ... children: ... # GROUP INSTANCES BY REPLICA SETS ... replicaset_storage_2: # <== vars: # replicaset configuration replicaset_alias: storage-2 weight: 2 failover_priority: - storage-2 - storage-2-replica roles: - 'storage' hosts: # replicaset instances storage-2: storage-2-replica:

Launch the playbook again:

$ ansible-playbook -i hosts.yml \ --limit replicaset_storage_2 \ --tags cartridge-replicasets \ playbook.yml

This time in the parameter --limit

we passed the name of the group that corresponds to our replicaset.

Consider the tags

option.

Our role consistently performs various tasks, which are marked with the following tags:

-

cartridge-instances

: management of instances (setup, connection to membership); -

cartridge-replicasets

: topology management (managing replicasets and permanently deleting (expel) instances from the cluster); -

cartridge-config

: management of the remaining cluster parameters (vshard bootstrapping, automatic failover mode, authorization parameters and application configuration).

We can explicitly indicate what part of the work we want to do, then the role will skip the execution of the remaining tasks. In our case, we want to work only with the topology, therefore we indicated cartridge-replicasets

.

Let's evaluate the result of our efforts. Find the new replica set at http: // localhost: 8181 / admin / cluster / dashboard .

Hooray!

Experiment with changing the configuration of instances and replicasets and see how the cluster topology changes. You can try out various operational scenarios, for example, rolling update or increase memtx_memory

. The role will attempt to do this without restarting the instance to reduce the possible downtime of your application.

Remember to start vagrant halt

to stop vagrant halt

when you are finished working with them.

And what's under the hood?

Here I will tell you more about what happened under the hood of the ansible role during our experiments.

Let’s take a look at the deployment steps of the Cartridge application.

Installing a package and starting instances

First you need to deliver the package to the server and install it. Now the role is able to work with RPM and DEB packages.

Next, run the instances. Everything is very simple here: each instance is a separate systemd

service. I tell with an example:

$ systemctl start myapp@storage-1

This command will launch the storage-1

instance of myapp

. The running instance will look for its configuration in /etc/tarantool/conf.d/

. journald

can be viewed using journald

.

The unit file /etc/systemd/system/myapp@.sevice

for the systemd service will be delivered with the package.

Ansible has built-in modules for installing packages and managing systemd services, here we have not invented anything new.

Configure Cluster Topology

And here the fun begins. Agree, it would be strange to bother with a special ansible-role for installing packages and running systemd

services.

You can configure the cluster manually:

- The first option: open the Web UI and click on the buttons. For a one-time start of several instances it is quite suitable.

- Second option: you can use the GraphQl API. Here you can already automate something, for example, write a script in Python.

- The third option (for those who are strong in spirit): we go to the server,

tarantoolctl connect

to one of the instances usingtarantoolctl connect

and perform all the necessary manipulations with thecartridge

Lua-module.

The main objective of our invention is to do exactly this, the most difficult part of the work for you.

Ansible allows you to write your module and use it in a role. Our role uses such modules to manage various cluster components.

How it works? You describe the desired state of the cluster in the declarative config, and the role feeds its configuration section to the input of each module. The module receives the current state of the cluster and compares it with what came in. Then, through the socket of one of the instances, the code is launched, which brings the cluster to the desired state.

Summary

Today we talked and showed how to deploy your application on Tarantool Cartridge and set up a simple topology. To do this, we used Ansible, a powerful tool that is easy to use and allows you to simultaneously configure many infrastructure nodes (in our case, these are cluster instances).

Above, we figured out one of many ways to describe the configuration of a cluster using Ansible. Once you realize that you are ready to move on, learn the best practices for writing playbooks. You may find it more convenient to manage the topology with group_vars

and host_vars

.

Very soon we will tell you how to permanently delete (expel) instances from the topology, bootstrap vshard, manage the automatic failover mode, configure authorization and patch the cluster config. In the meantime, you can independently study the documentation and experiment with changing cluster parameters.

If something doesn't work, be sure to let us know about the problem. We will quickly destroy everything!