Server based on Intel NUC that processes data from 80 cameras; the data is aggregated by the Cumulator software

Tasks that the Cumulator software product solves:

- Collect data from multiple devices

- Single point of access to events via REST API

- Centralized event storage

- Real-time visualization of installed and connected devices and the events on them

- Management of multiple devices (monitoring, connection)
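The "centralized storage plus single point of access" idea from the list above can be illustrated with a minimal sketch. This is not Cumulator's actual implementation: the `EventStore` class, its table schema, and the method names `ingest` and `query` are all hypothetical, and SQLite is used only because it ships with Python.

```python
from __future__ import annotations

import json
import sqlite3
from typing import Any


class EventStore:
    """Hypothetical central store: every device writes here, every client reads here."""

    def __init__(self, path: str = ":memory:") -> None:
        self.db = sqlite3.connect(path)
        # Assumed schema: one row per event, payload kept as a JSON string.
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS events ("
            " id INTEGER PRIMARY KEY AUTOINCREMENT,"
            " device_id TEXT NOT NULL,"
            " ts REAL NOT NULL,"
            " payload TEXT NOT NULL)"
        )

    def ingest(self, device_id: str, ts: float, payload: dict[str, Any]) -> int:
        """Store one event from one device and return its row id."""
        cur = self.db.execute(
            "INSERT INTO events (device_id, ts, payload) VALUES (?, ?, ?)",
            (device_id, ts, json.dumps(payload)),
        )
        self.db.commit()
        return cur.lastrowid

    def query(self, device_id: str | None = None, since: float = 0.0) -> list[dict[str, Any]]:
        """Single read path: optionally filter by device and by start time."""
        sql = "SELECT id, device_id, ts, payload FROM events WHERE ts >= ?"
        params: list[Any] = [since]
        if device_id is not None:
            sql += " AND device_id = ?"
            params.append(device_id)
        sql += " ORDER BY ts, id"
        rows = self.db.execute(sql, params).fetchall()
        return [
            {"id": r[0], "device_id": r[1], "ts": r[2], "payload": json.loads(r[3])}
            for r in rows
        ]


store = EventStore()
store.ingest("camera-01", 100.0, {"type": "person", "count": 2})
store.ingest("nuc-server", 101.0, {"type": "vehicle", "count": 1})
print(store.query(device_id="camera-01"))
```

In a real deployment the `query` method would sit behind a REST endpoint, so that clients never talk to individual devices directly.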

For integration, we settled on QNAP AppCenter because its applications run in Docker containers, inside which third-party software can work. QNAP test equipment:

QNAP storage for data aggregation in object video analytics

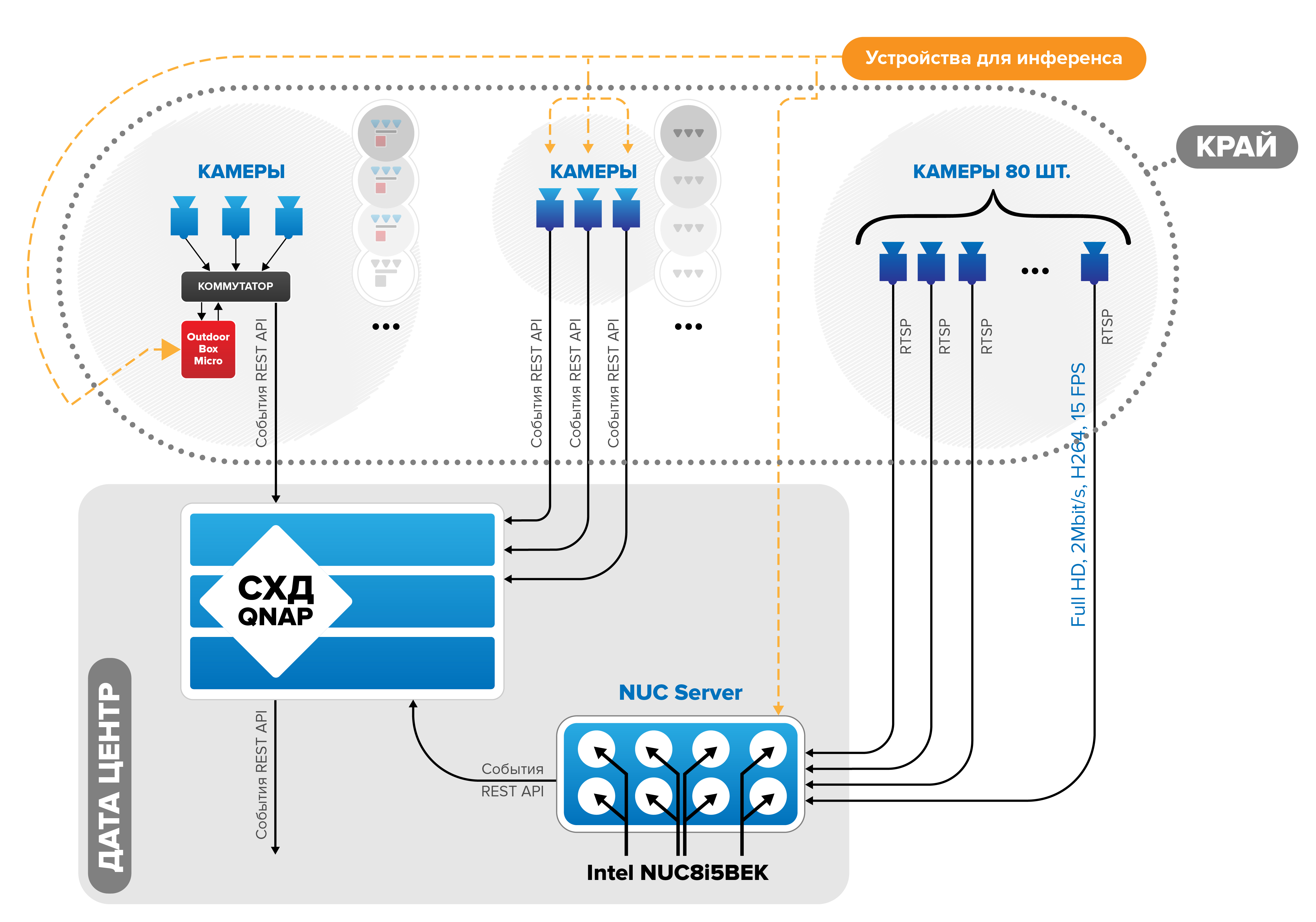

The general scheme of hybrid inference

Three types of devices are used for inference (running the neural networks): servers (an Intel NUC8i5BEK in a 1U rack form factor in the data center, or an Intel VCA2 on a Supermicro 1U platform), cameras with installed software (Axis, Vivotek, etc.), and outdoor microcomputers (in our case either the ARM-based FriendlyARM NanoPi M4 or the x86 UP Board with an Intel Atom X5). Data from all of these devices (for example, 2 servers, 15 cameras, and 30 microcomputers) then has to be aggregated and stored. For this, we chose QNAP storage.

Technical details

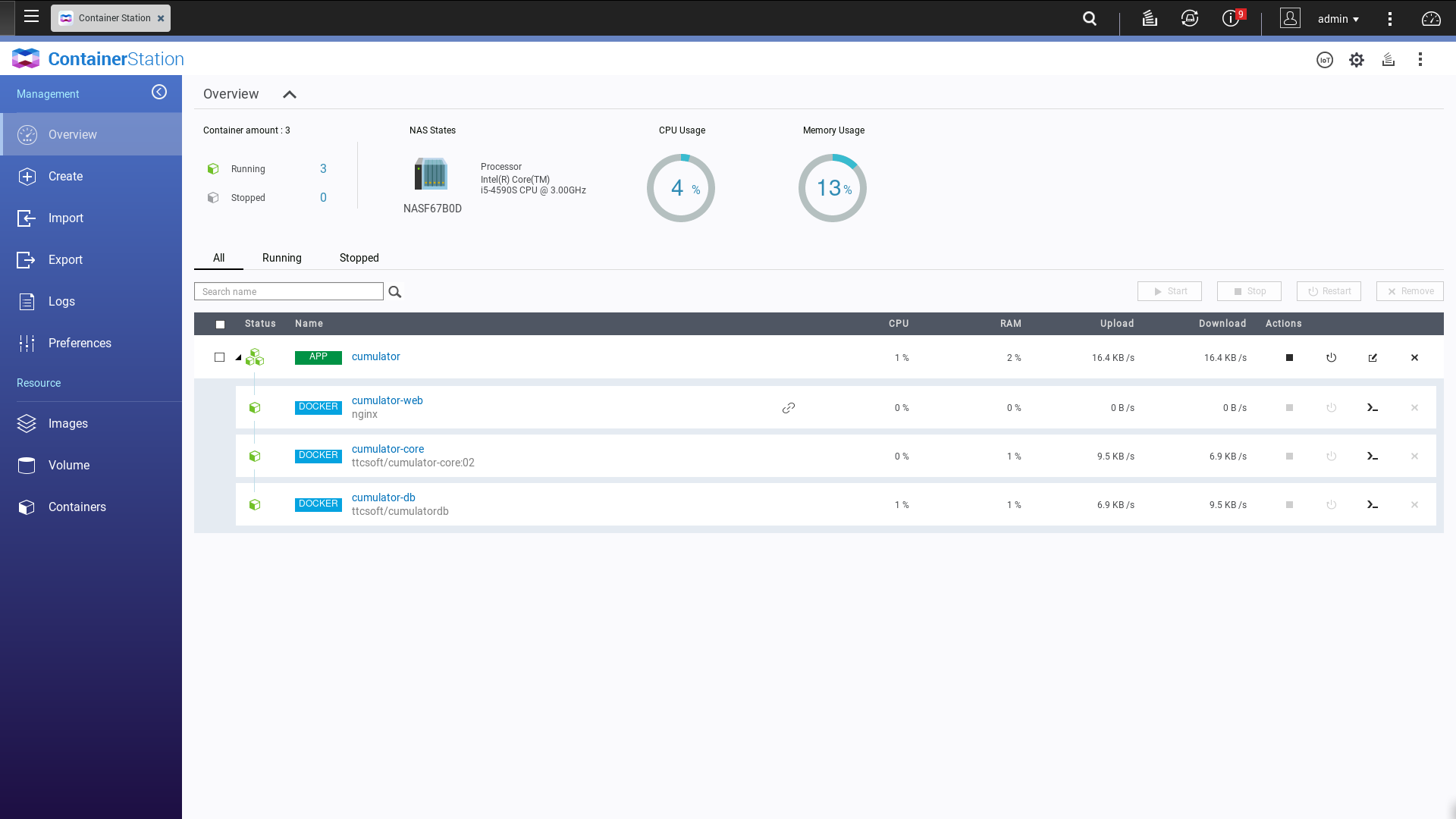

All software was run on a QNAP TVS-871T (Intel Core i5-4590S CPU @ 3.00 GHz, firmware version 4.4.1.1086 (2019/10/10), Linux kernel 4.14.24-qnap) under system-docker (version 17.09.1-ce, API version 1.32, OS/Arch linux/amd64).

During testing, the storage system was equipped with 1 TB Seagate Exos 7E2 disks (7200 rpm).

Cumulator was started both from the console using system-docker and through Container Station V2.0.356 (a GUI wrapper for Docker, installed from AppCenter).

QNAP Container Station

In parallel, we tested running inference on the storage system itself. We were able to run inference only from the console, using system-docker. The reason is that the graphical interface has no settings for advanced mounting of partitions (a common problem with GUI applications: settings that ordinary users do not need are trimmed away).

The Docker containers are created from a prebuilt image using docker-compose; at this stage we did not build the image from a Dockerfile. The launch command looks like this:

    system-docker run --detach --name=edgeserver-testing \
      -p 18081:8081 -p 18082:8082 -p 15433:5433 \
      --mount type=bind,source=/sys/fs/cgroup,target=/sys/fs/cgroup \
      --mount type=bind,source=/sys/fs/fuse,target=/sys/fs/fuse \
      --mount type=tmpfs,destination=/run \
      --privileged \
      -v /dev/bus/usb:/dev/bus/usb \
      --mount type=tmpfs,destination=/run/lock \
      ubuntu-edge

As you can see, we launched the container in privileged mode so that the software could detect Sentinel USB keys. All USB ports are passed through, since we do not know in advance where the keys will be inserted, how many there will be, or whether they will later be moved to another port. At this stage, running in privileged mode was considered acceptable.

Summary

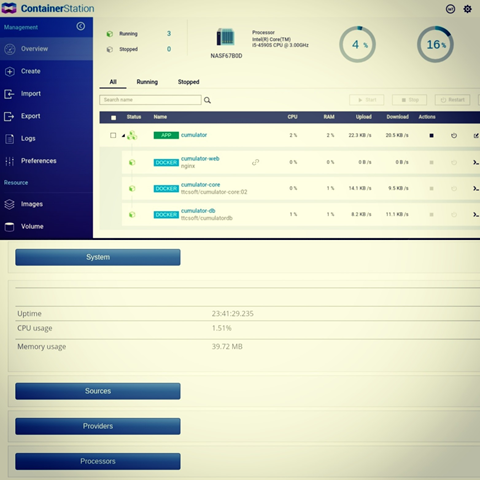

Cumulator application running on QNAP, with CPU and RAM statistics:

Cumulator application running on QNAP storage

The resource-consumption statistics show that the storage system has enough resources for this workload. Inference itself, i.e. the execution of neural networks, is performed on remote devices (servers, cameras, specialized devices near the cameras), while the storage system, running the specialized software, handles the aggregation, assembly, and storage of the data. This software collects data using the REST API and provides it to related information systems as needed.
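The collection step described above could look roughly like the following polling sketch. The article does not document Cumulator's REST API, so the `/events?after=<id>` endpoint, the JSON response shape (an array of objects with an integer `id`), and the function names `http_fetch` and `collect_once` are all assumptions made for illustration.

```python
from __future__ import annotations

import json
import urllib.request
from typing import Any, Callable


def http_fetch(url: str, timeout: float = 5.0) -> list[dict[str, Any]]:
    """GET a URL and decode a JSON array of events (assumed response shape)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def collect_once(
    devices: dict[str, str],
    cursors: dict[str, int],
    fetch: Callable[[str], list[dict[str, Any]]] = http_fetch,
) -> list[dict[str, Any]]:
    """Poll every device once and return the new events, tagged with their source.

    devices maps a device name to its base URL. cursors remembers the highest
    event id seen per device, so repeated polls do not return duplicates.
    """
    collected: list[dict[str, Any]] = []
    for name, base_url in devices.items():
        last_id = cursors.get(name, 0)
        try:
            # Hypothetical endpoint: only events newer than last_id are requested.
            events = fetch(f"{base_url}/events?after={last_id}")
        except OSError:
            # Device unreachable (URLError and timeouts are OSError subclasses):
            # skip it this round and leave its cursor untouched.
            continue
        for event in events:
            collected.append({"device": name, **event})
            last_id = max(last_id, event["id"])
        cursors[name] = last_id
    return collected
```

Calling `collect_once` on a timer with a mapping of device names to base URLs gives one aggregation pass per tick. The `fetch` parameter is injectable so the loop can be exercised without a network, and an unreachable device does not block collection from the others.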