Firstly, the server held 8 microcomputers. Since heat dissipation is proportional to power consumption, the heat load was already 25 * 8 = 200 watts. At this point passive cooling was already out of the question, but we continued the experiment, partly so that the technology could later be adapted for outdoor enclosures. Hardware development ran in parallel with R&D work on the software we needed. We used EDGE, a system for detecting and recognizing license plates and makes/models of VIT vehicles. As it turned out, at 100% load the Intel NUC8i5BEK draws 46 watts rather than the declared 25. That puts the server's power consumption and heat dissipation at 46 * 8 = 368 watts, and this does not yet include the power supplies and other components.
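The arithmetic above can be written out as a small calculation. Like the text, it treats each node's electrical draw as its heat output and ignores power-supply losses and other components; the 25 W and 46 W figures are the declared and measured values quoted above.

```python
# Nominal vs. measured heat load of the first 8-node server.
# Almost all electrical power drawn by a node ends up as heat,
# so power draw is used directly as the heat-dissipation estimate.

NODES = 8
NOMINAL_W = 25      # declared figure for Intel NUC8i5BEK, as quoted in the text
MEASURED_W = 46     # measured at 100% load, as quoted in the text


def server_heat_w(nodes: int, per_node_w: float) -> float:
    """Total heat load of the compute nodes (no PSU losses, no other parts)."""
    return nodes * per_node_w


nominal = server_heat_w(NODES, NOMINAL_W)
measured = server_heat_w(NODES, MEASURED_W)
print(f"nominal:  {nominal:.0f} W")
print(f"measured: {measured:.0f} W")
print(f"underestimate factor: {measured / nominal:.2f}x")
```

The gap between the two totals (368 W vs. 200 W) is what ruled out passive cooling for the 8-node chassis.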

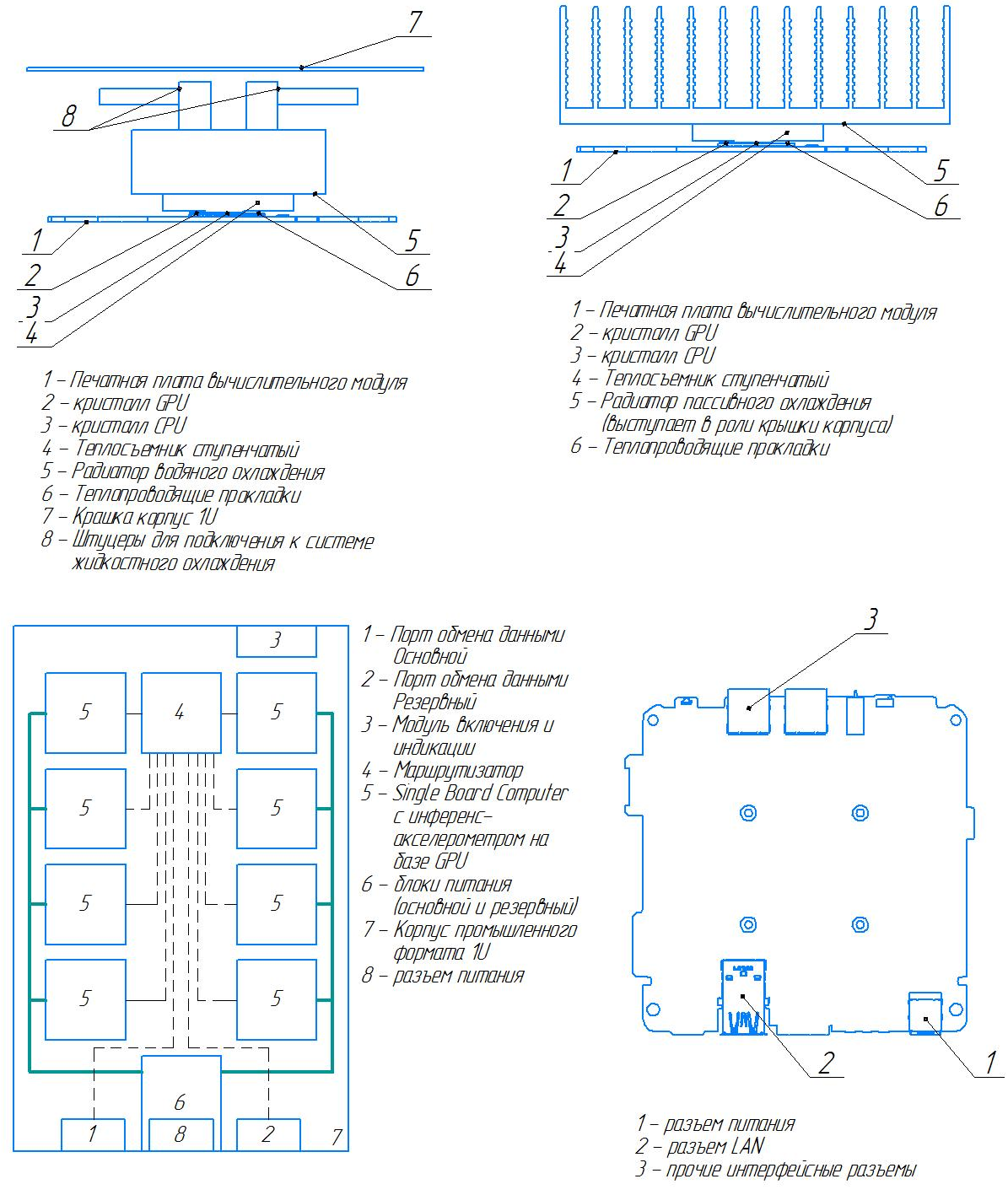

As heat spreaders, we used ground copper plates with special engraving. The copper plates passed the heat on to an aluminum heat exchanger on top of the server. Because of calculation errors, the heat exchanger took up 2/3 of the top cover and ran along the left and right sides of the server, above the two rows of 4 microcomputers. The central area held a 550 W power supply at the back and a MikroTik 1 Gbit router at the front.

Server design phase based on 8 microcomputers

And here is the result:

NUC Server with Passive Cooling

Of course, this design worked under medium load, but at 100% load it kept slowly heating up and released a significant part of its heat into the surroundings. Even in a server cabinet with hot and cold aisles, it inevitably heated the equipment mounted above it (its upper "neighbor"). Reducing the number of microcomputers to 4 stabilized the temperature and prevented throttling even at 100% load, but the problem of heating the upper "neighbor" remained.

From here, development split into two directions:

- Passive cooling of the Intel NUC8i5BEK for outdoor use

- Active air cooling in 1U server chassis

1U server with 8 Core i5 processors and 8 GPUs installed

Yes, yes, it's a lot, and yes, it's possible. How, you ask? By combining several microcomputers inside a 1U chassis at the network level through a router. For running neural networks and processing incoming RTSP streams in particular, this solution is optimal. It also lets you build scalable systems on the Docker, Docker Swarm and Ansible stack.
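Because the nodes are separate hosts behind the router, spreading incoming RTSP streams across them is essentially a scheduling problem. The sketch below is a minimal illustration of that idea, not the authors' scheduler: it assumes every stream costs roughly the same and assigns each one to the node currently handling the fewest streams. The node hostnames and camera URLs are hypothetical.

```python
import heapq


def assign_streams(streams: list[str], nodes: list[str]) -> dict[str, list[str]]:
    """Greedy least-loaded assignment: each stream goes to the node that
    currently handles the fewest streams (ties broken by node order)."""
    # Heap entries are (current_load, node_index, node_name).
    heap = [(0, i, name) for i, name in enumerate(nodes)]
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {name: [] for name in nodes}
    for url in streams:
        load, idx, name = heapq.heappop(heap)
        plan[name].append(url)
        heapq.heappush(heap, (load + 1, idx, name))
    return plan


# Hypothetical node hostnames and camera URLs, for illustration only.
nodes = [f"nuc{i}" for i in range(1, 9)]
streams = [f"rtsp://camera{i}.local/stream" for i in range(1, 21)]
plan = assign_streams(streams, nodes)
for node, urls in plan.items():
    print(node, len(urls))
```

With 20 equal streams and 8 nodes, four nodes end up with 3 streams and four with 2. In a real deployment, placement would be left to the orchestrator (Docker Swarm in the text); the sketch only shows the balancing idea.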

During development, we went through several different versions:

Prototype of the future solution

The initial design kept the proven copper heat spreaders and aluminum radiators, but now placed them directly on each computing node inside the case. To remove the heat, two fans were installed in front of each computing module, moving air from the cold aisle to the hot aisle (front to back). For active fan control, we developed special boards that adjusted fan speed based on the temperature sensors of each microcomputer. Since PoE was not needed, the router's active cooling was removed and it was switched to passive cooling, dissipating no more than 10 watts. The first version kept the 550 W power supply, now installed on special mounts that allowed quick replacement, though only with the power off. To monitor the status of the computing nodes, a row of 8 LEDs was added to the front panel.
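The fan-control boards are described only as changing fan speed based on per-node temperature sensors. A minimal sketch of such a control curve, assuming a linear ramp of the PWM duty cycle driven by the hottest node a fan serves, could look like this; the thresholds and duty limits are hypothetical, not the boards' actual settings.

```python
def fan_duty(temps_c: list[float],
             t_low: float = 45.0, t_high: float = 85.0,
             duty_min: float = 0.30, duty_max: float = 1.00) -> float:
    """Map the hottest sensor reading to a PWM duty cycle in [duty_min, duty_max].

    Below t_low the fan idles at duty_min; above t_high it runs at duty_max;
    in between the duty rises linearly. All thresholds are hypothetical.
    """
    t = max(temps_c)  # the hottest node served by this fan decides its speed
    if t <= t_low:
        return duty_min
    if t >= t_high:
        return duty_max
    frac = (t - t_low) / (t_high - t_low)
    return duty_min + frac * (duty_max - duty_min)


print(fan_duty([40.0, 42.5]))   # both nodes cool: fan idles at duty_min
print(fan_duty([50.0, 65.0]))   # hottest node at 65 C: halfway up the ramp
print(fan_duty([90.0, 60.0]))   # above t_high: full speed
```

Keying the speed to the hottest sensor, rather than an average, keeps one heavily loaded node from throttling while its neighbors sit idle.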

NUC Server prototype from ComBox Technology: 8 Core i5 CPUs and 8 Iris Plus 655 GPUs in a 1U form factor

Technical characteristics of the first version of the server on Intel NUC:

- Intel NUC8i5BEK (without housing, with a modified cooling system), 8 pcs.

- AMD Radeon R7 Performance DDR4 SO-DIMM memory modules, 8 GB, 8 pcs.

- Additional Kingston DDR4 SO-DIMM memory modules, 4 GB, 8 pcs.

- WD Green M.2 SSD, 240 GB, 8 pcs.

- 1U case (own production)

- MikroTik RB4011iGS+RM router (without housing, with a modified cooling system), 1 pc.

- Connecting wires (patch cords), 11 pcs.

- Power supply 94Y8187, 550 W.
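The list above can be rolled up into per-server totals. This sketch assumes each node carries one 8 GB and one 4 GB module (as the "additional memory" item suggests) and reuses the 46 W measured peak and the router's 10 W ceiling from the text; fans, LEDs and conversion losses are left out, so the real headroom is smaller.

```python
# Per-node and per-server totals for the first-version bill of materials.
NODES = 8
RAM_PER_NODE_GB = 8 + 4   # AMD Radeon R7 8 GB + additional Kingston 4 GB (assumed one of each per node)
SSD_PER_NODE_GB = 240     # WD Green M.2
NODE_PEAK_W = 46          # measured at 100% load (from the text)
ROUTER_MAX_W = 10         # passive router, "not more than 10 W"
PSU_RATED_W = 550         # 94Y8187

ram_total = NODES * RAM_PER_NODE_GB
ssd_total = NODES * SSD_PER_NODE_GB
peak_load = NODES * NODE_PEAK_W + ROUTER_MAX_W   # fans and losses excluded
headroom = PSU_RATED_W - peak_load

print(f"RAM per node: {RAM_PER_NODE_GB} GB, total: {ram_total} GB")
print(f"SSD total: {ssd_total} GB")
print(f"peak load: {peak_load} W of {PSU_RATED_W} W "
      f"({peak_load / PSU_RATED_W:.0%}), headroom {headroom} W")
```

Under these assumptions the 550 W unit runs at roughly 69% of its rating at full load.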

Here is how it looks in operation:

Production Version of NUC Server

The production version underwent significant changes. Instead of one power supply, the server now has two hot-swappable ones: compact 600 W server power supplies from Supermicro. The computing modules received dedicated slots and can be hot-swapped without powering down the entire server. Many of the network cables were replaced with fixed busbars attached to the slots. To manage the server, we added FriendlyARM's NanoPi, an ARM-based microcomputer, and wrote software for it that checks and monitors the status of the computing nodes, reports errors and, when necessary, performs a hardware reset of specific nodes via GPIO. In the production version, the fans sit behind the front panel and blow from the cold aisle toward the hot aisle, and the fins of the aluminum heat exchanger now point down instead of up, which gives access to the memory and drives of each computing node.
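The management software is described as checking node status, reporting errors and resetting nodes via GPIO. The sketch below shows one plausible shape for that logic, not the actual software: a node is reset only after a hypothetical threshold of 3 consecutive failed health checks. The `check` and `reset` callables are placeholders; on the NanoPi they would wrap a real health probe and a GPIO pulse, neither of which is specified in the text.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class NodeWatchdog:
    """Count consecutive failed health checks per node and trigger a
    hardware reset once a node fails `max_failures` times in a row.

    `check` and `reset` are injected so the logic runs without hardware.
    """
    check: Callable[[str], bool]
    reset: Callable[[str], None]
    max_failures: int = 3  # hypothetical threshold
    failures: dict[str, int] = field(default_factory=dict)

    def poll(self, node: str) -> None:
        if self.check(node):
            self.failures[node] = 0  # healthy again: clear the counter
            return
        self.failures[node] = self.failures.get(node, 0) + 1
        print(f"{node}: health check failed ({self.failures[node]}/{self.max_failures})")
        if self.failures[node] >= self.max_failures:
            self.reset(node)
            self.failures[node] = 0


# Simulated run: nuc3 is hung, every other node answers.
resets: list[str] = []
wd = NodeWatchdog(check=lambda n: n != "nuc3", reset=resets.append)
for _ in range(3):
    for node in [f"nuc{i}" for i in range(1, 9)]:
        wd.poll(node)
print("reset:", resets)
```

Requiring several consecutive failures avoids power-cycling a node because of a single dropped probe.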

Intel NUC Outdoor Enclosure

Since the microcomputers themselves are not industrial-grade, outdoor use requires not only an enclosure but also a suitable climate inside it. We needed IP66 dust and water protection and an operating temperature range of -40 to +50 °C.

We tested two approaches to efficient heat removal: heat pipes and copper plates as heat spreaders. The copper plates proved more effective, although they require a larger area and cost more. An aluminum heat sink with calculated fin dimensions again serves as the heat exchanger.
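"Calculated fin dimensions" implies a thermal budget. A common first-order model treats the path from the die to the air as thermal resistances in series, so T_die = T_ambient + P * (R1 + R2 + ...). The sketch below applies it to the worst case from the text (+50 °C, 46 W), treating the whole measured draw as heat on the CPU path, which is conservative. The 100 °C junction limit and the individual resistances are assumptions for illustration, and the air inside a sealed IP66 box will be warmer than outside, which shrinks the real margin.

```python
def max_thermal_resistance(t_max_c: float, t_ambient_c: float, power_w: float) -> float:
    """Largest total die-to-air thermal resistance (K/W) that keeps the
    die at or below t_max_c for a given ambient temperature and power."""
    return (t_max_c - t_ambient_c) / power_w


def die_temperature(t_ambient_c: float, power_w: float, chain_k_per_w: dict[str, float]) -> float:
    """Steady-state die temperature for resistances in series:
    T_die = T_ambient + P * (R1 + R2 + ...)."""
    return t_ambient_c + power_w * sum(chain_k_per_w.values())


T_AMBIENT_C = 50.0   # upper end of the required range (from the text)
POWER_W = 46.0       # measured peak draw of one NUC8i5BEK (from the text)
T_MAX_C = 100.0      # assumed junction temperature limit

budget = max_thermal_resistance(T_MAX_C, T_AMBIENT_C, POWER_W)
print(f"R_th budget: {budget:.2f} K/W")

# Hypothetical resistances for the copper-plate path, for illustration only.
chain = {"die_to_copper": 0.25, "copper_to_aluminum": 0.15, "aluminum_to_air": 0.60}
t_die = die_temperature(T_AMBIENT_C, POWER_W, chain)
print(f"estimated die temperature: {t_die:.1f} C "
      f"({'within' if t_die <= T_MAX_C else 'over'} the {T_MAX_C:.0f} C limit)")
```

The heat sink's fin geometry mainly sets the last term (aluminum to air), which is usually the largest share of the budget.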

To start the device at sub-zero temperatures, we used ceramic heaters. For easy operation and deployment in different settings, we used wide-input power supplies rated for 6-36 V.
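The text does not describe the heater control logic. One common approach for a cold start is to keep the computer powered off until the enclosure warms up, and to switch the ceramic heater with hysteresis so it does not chatter around a single threshold. The sketch below assumes exactly that; all three thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ClimateState:
    heater_on: bool = True      # start with the heater on
    computer_on: bool = False   # computer held off until the enclosure warms up


def step(s: ClimateState, enclosure_c: float,
         heat_below_c: float = 5.0, stop_heat_above_c: float = 15.0,
         power_on_above_c: float = 0.0) -> ClimateState:
    """One control step; all thresholds are hypothetical."""
    heater = s.heater_on
    # Hysteresis: on below the lower bound, off above the upper bound,
    # unchanged in between so the heater does not toggle rapidly.
    if enclosure_c < heat_below_c:
        heater = True
    elif enclosure_c > stop_heat_above_c:
        heater = False
    # The computer is enabled once the enclosure is warm enough, then stays on.
    computer = s.computer_on or enclosure_c > power_on_above_c
    return ClimateState(heater_on=heater, computer_on=computer)


# Simulated warm-up from -40 C, the lower bound of the required range.
s = ClimateState()
for temp in [-40.0, -20.0, -1.0, 2.0, 10.0, 18.0, 12.0, 4.0]:
    s = step(s, temp)
    print(f"{temp:6.1f} C  heater={'on' if s.heater_on else 'off'}  "
          f"computer={'on' if s.computer_on else 'off'}")
```

Once running, the computer's own heat helps keep the enclosure warm, which is why the sketch latches it on instead of tying it to the heater state.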

The main purpose of the resulting device is running resource-intensive neural networks right next to the data source. Such tasks include counting passenger traffic in vehicles (passenger cars, trains), as well as centralized processing of face detection and recognition data in production deployments.

Intel NUC in outdoor enclosure, IP66 with climate module

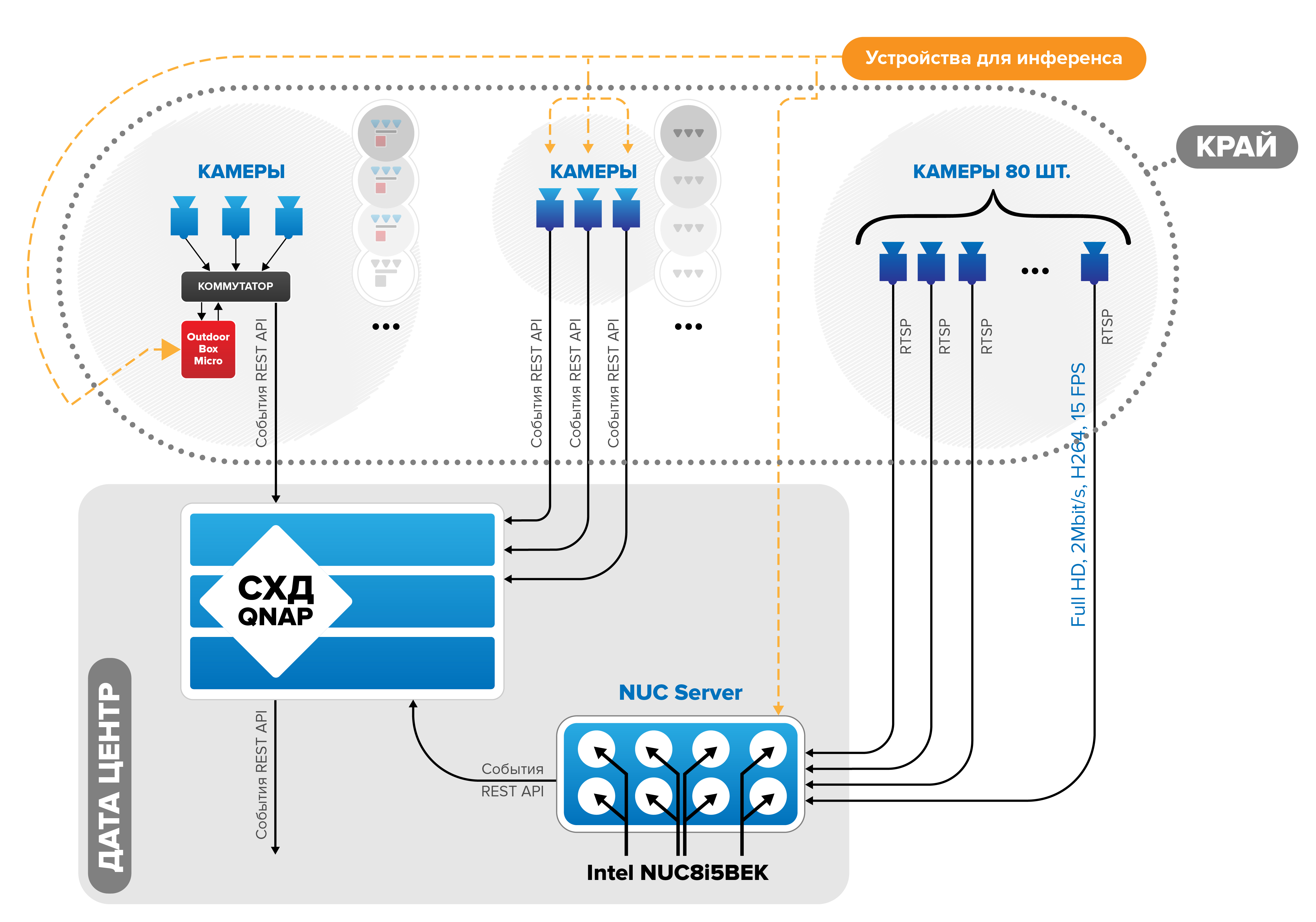

General Hybrid Inference Scheme

Inference usually runs on three types of devices: servers in a data center, cameras with built-in object detection and recognition software, and outdoor microcomputers (with cameras connected to them, for example, via a switch). Data (events) from many such devices (for example, 2 servers, 15 cameras and 30 microcomputers) then has to be aggregated and stored.
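Aggregating events from three different device types is simpler when each source emits the same record shape. The sketch below assumes a hypothetical common `Event` record and counts events per device type, using the example fleet from the text (2 servers, 15 cameras, 30 microcomputers). The field names and event kinds are illustrative and are not the authors' schema.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Event:
    """Common event record; the field set is a hypothetical example."""
    device_id: str
    device_type: str      # "server", "camera" or "microcomputer"
    kind: str             # e.g. "plate", "face", "passenger_count"
    timestamp: datetime


def aggregate(events: list[Event]) -> dict[str, Counter]:
    """Count events per device type and per event kind."""
    by_type: dict[str, Counter] = {}
    for e in events:
        by_type.setdefault(e.device_type, Counter())[e.kind] += 1
    return by_type


# Example fleet from the text: one "plate" event from each device.
now = datetime.now(timezone.utc)
fleet = ([("server", f"srv{i}") for i in range(2)]
         + [("camera", f"cam{i}") for i in range(15)]
         + [("microcomputer", f"mc{i}") for i in range(30)])
events = [Event(dev_id, dev_type, "plate", now) for dev_type, dev_id in fleet]
summary = aggregate(events)
for dev_type, counts in summary.items():
    print(dev_type, dict(counts))
```

Normalizing at the edge keeps the storage side independent of which kind of device produced an event.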

In our solutions, we use the following scheme for aggregating data and storing it in storage systems:

The general scheme of hybrid inference