Posts in the series:

8. Controlling the robot from the phone: ROS Control, GPS node

7. Robot localization: gmapping, AMCL, reference points on the room map

6. Odometry with wheel encoders, room map, lidar

5. We work in rviz and gazebo: xacro, new sensors.

4. Create a robot simulation using the rviz and gazebo editors.

3. Accelerate, change the camera, fix the gait

2. Software

1. Hardware

When the robot builds a map within a single confined space, there are no problems. Controlling the robot from the keyboard, either at the control station or on the robot itself, you can watch its movements and steer it away from obstacles in time. Things get more complicated when there are several rooms. There are several ways to keep an eye on a robot building a map once it leaves the operator’s room:

- connect the camera directly to the robot;

- use the existing video surveillance system outside the robot;

- control it with a Wi-Fi or Bluetooth keyboard while staying near the robot;

- “carry the robot in your arms” so that the lidar collects data;

- control the robot from the phone.

The last option may seem the most convenient: you control the robot simply by tilting the phone in the desired direction while the robot builds the map. This is entirely feasible.

Let's consider how to steer the robot from the phone, sending the appropriate messages to the ROS topics on the robot.

There is no need to write a separate phone application for this. An existing one will do: download the Android app “ROS Control” from the app store and install it on your phone.

Set up the ROS Control app on your phone.

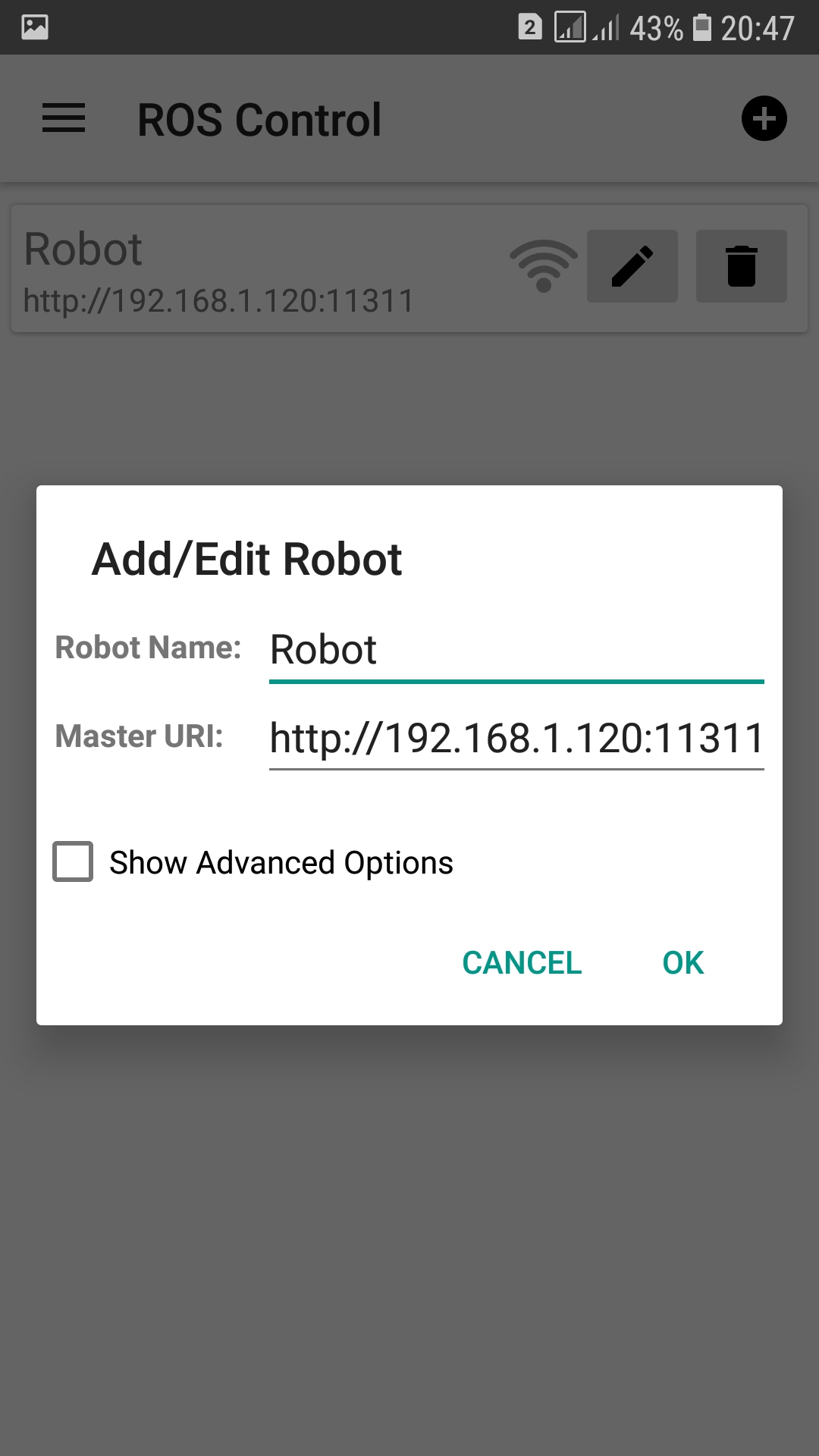

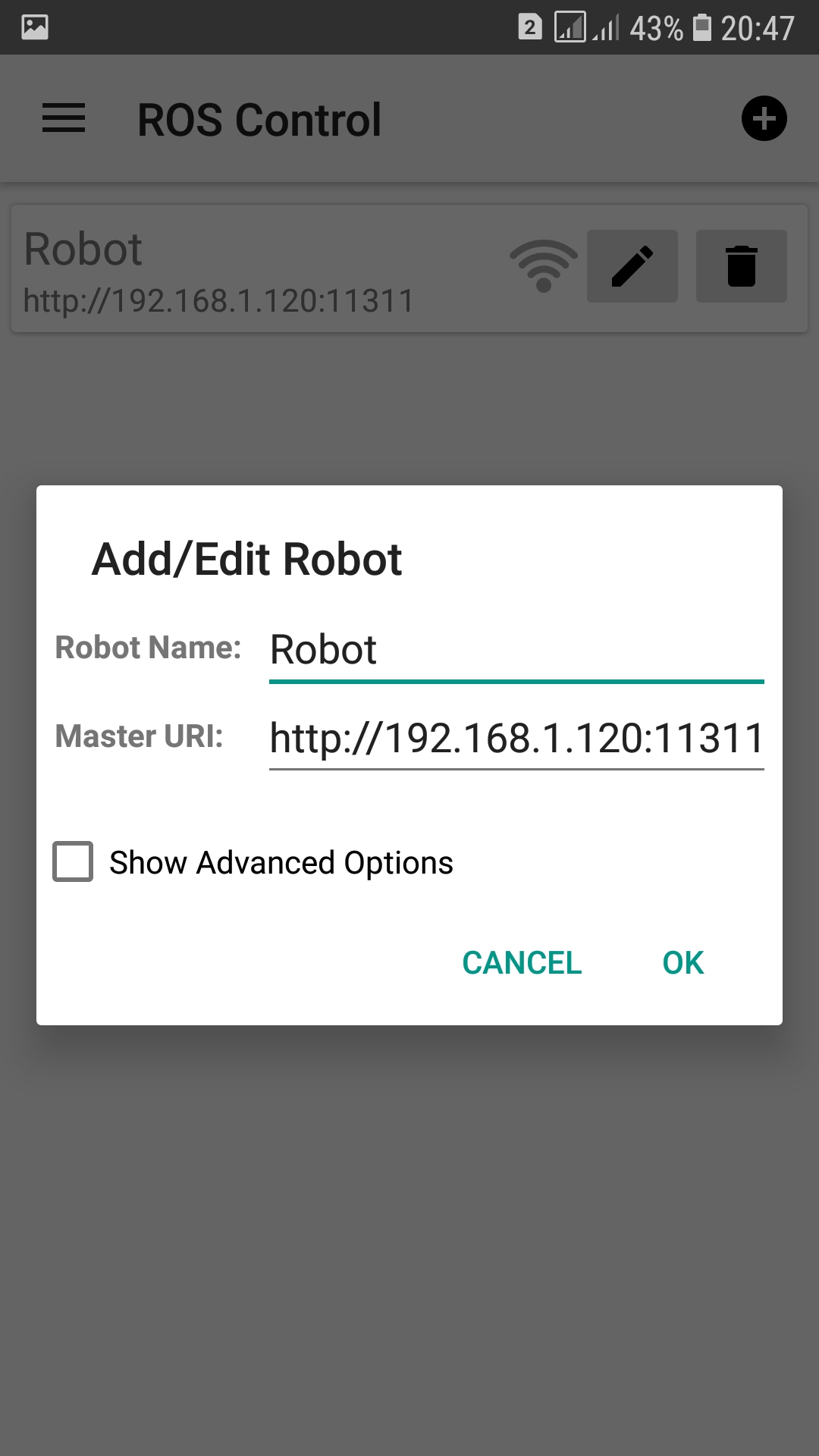

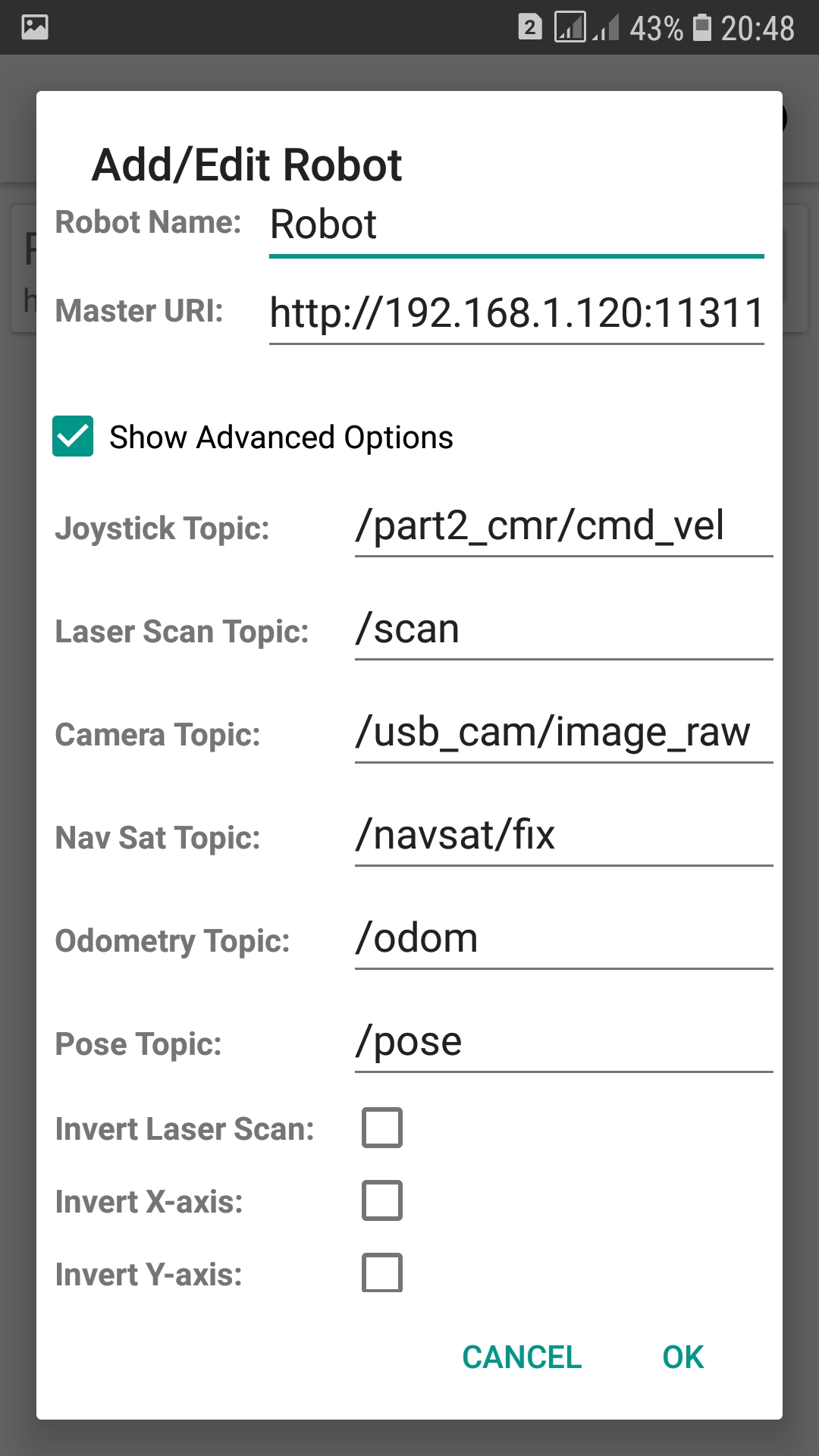

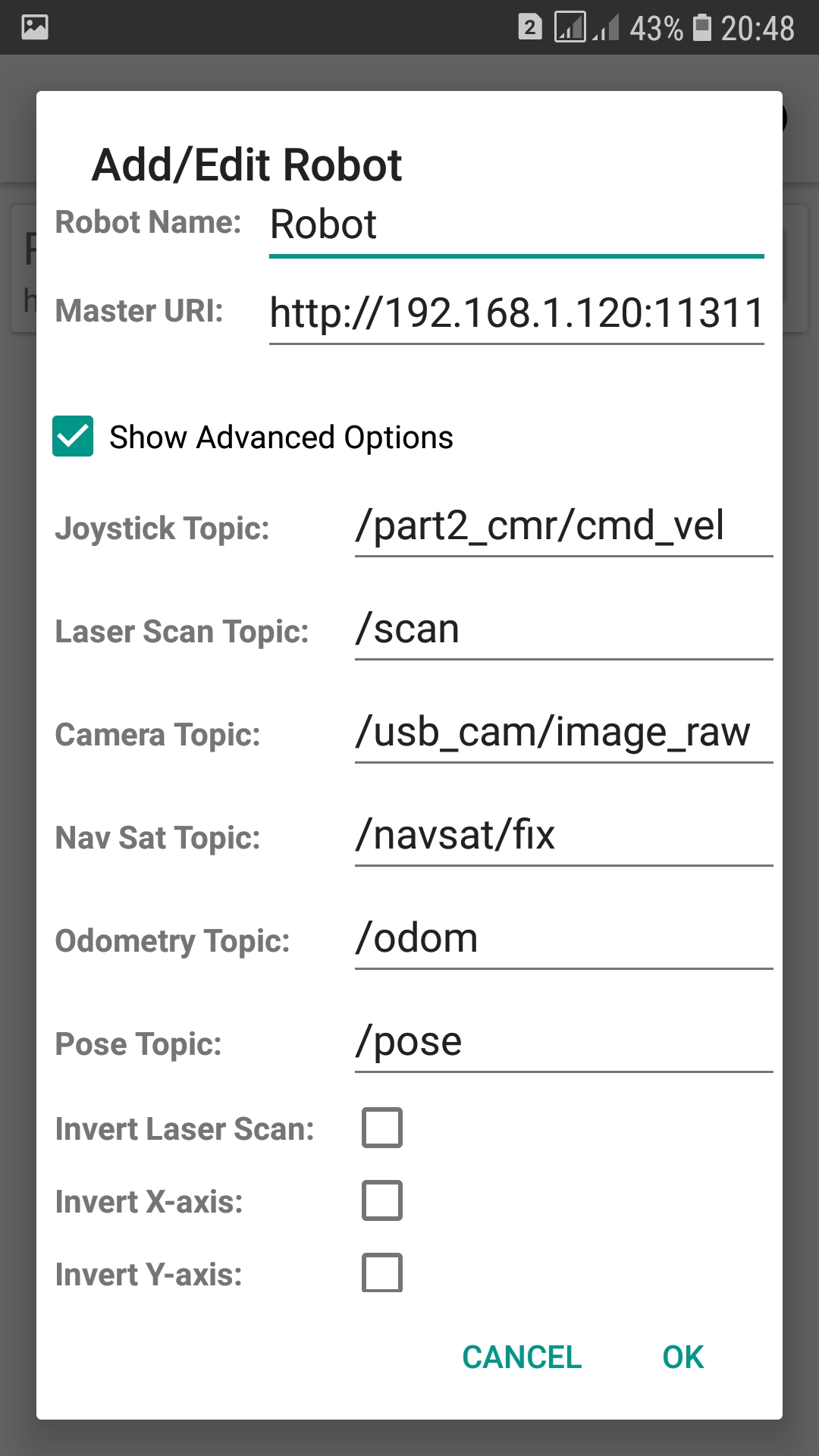

The application is intuitive: add a robot with the "+" button and set its IP address:


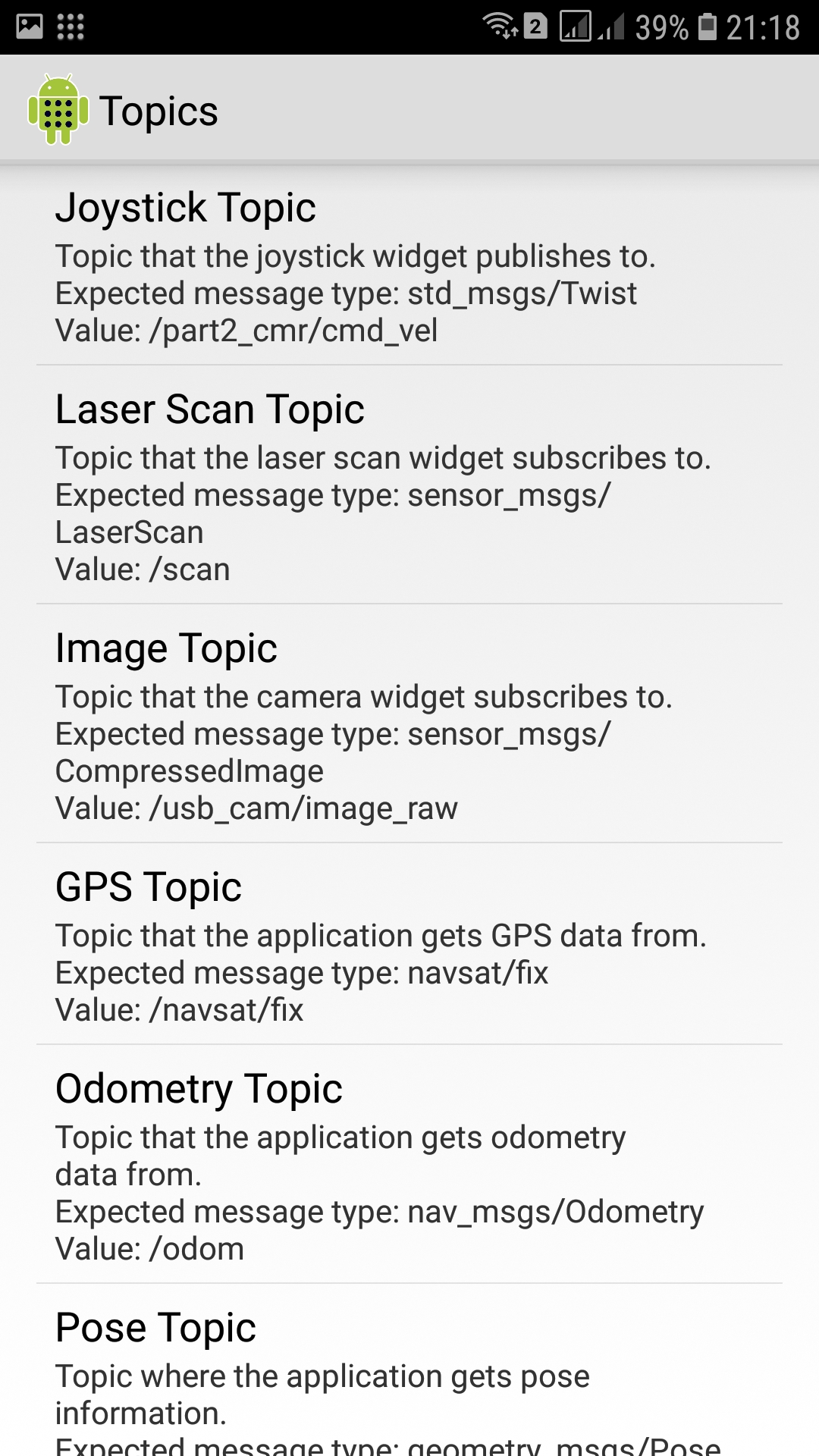

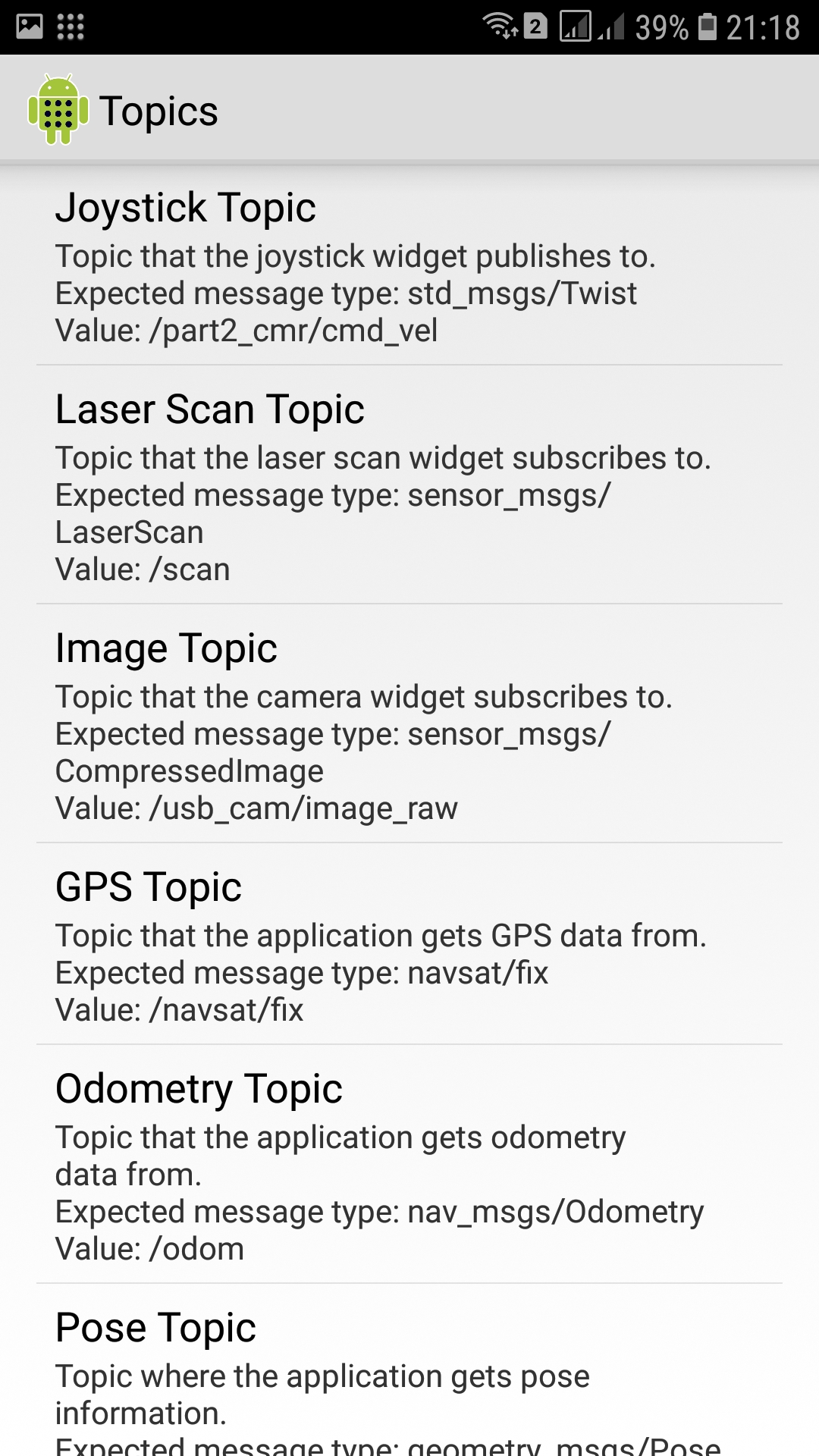

Next, select "Show advanced options" and enter the topics for the robot:

picture

picture

The phone will publish messages to these topics and receive messages from them in a format that ROS understands.

The following topics are available in the application:

- joystick topic - control from the phone;

- laser scan topic - data from the lidar;

- nav sat topic - the robot's GPS data;

- odom - odometry (whatever arrives there from the encoders and/or the IMU and/or the lidar);

- pose - "position", the location of the robot.

Let's start with the joystick topic.

The robot will not move by itself, so we launch the motor driver node on the robot, which was already covered in previous posts:

1st terminal:

rosrun rosbots_driver part2_cmr.py

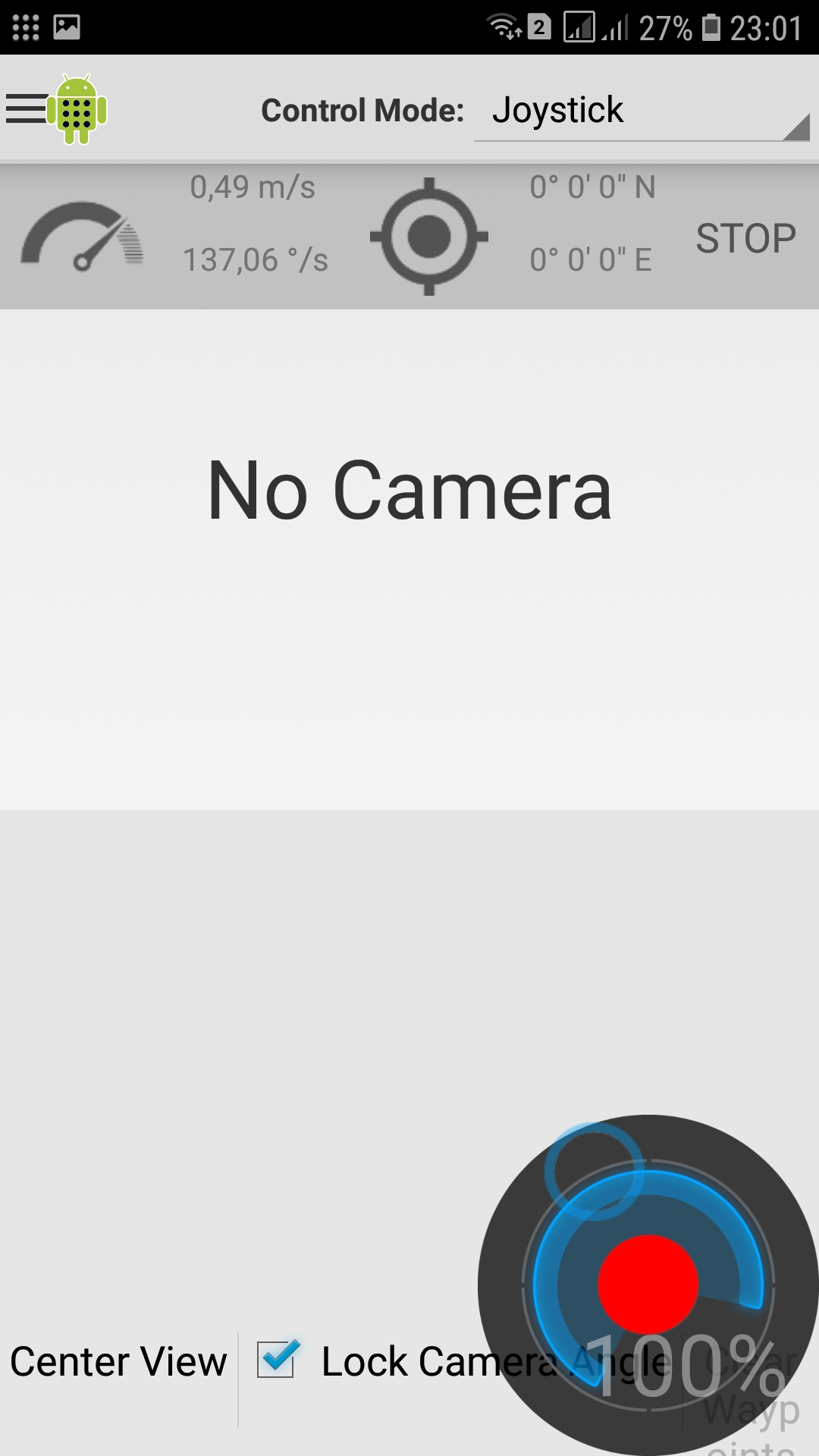

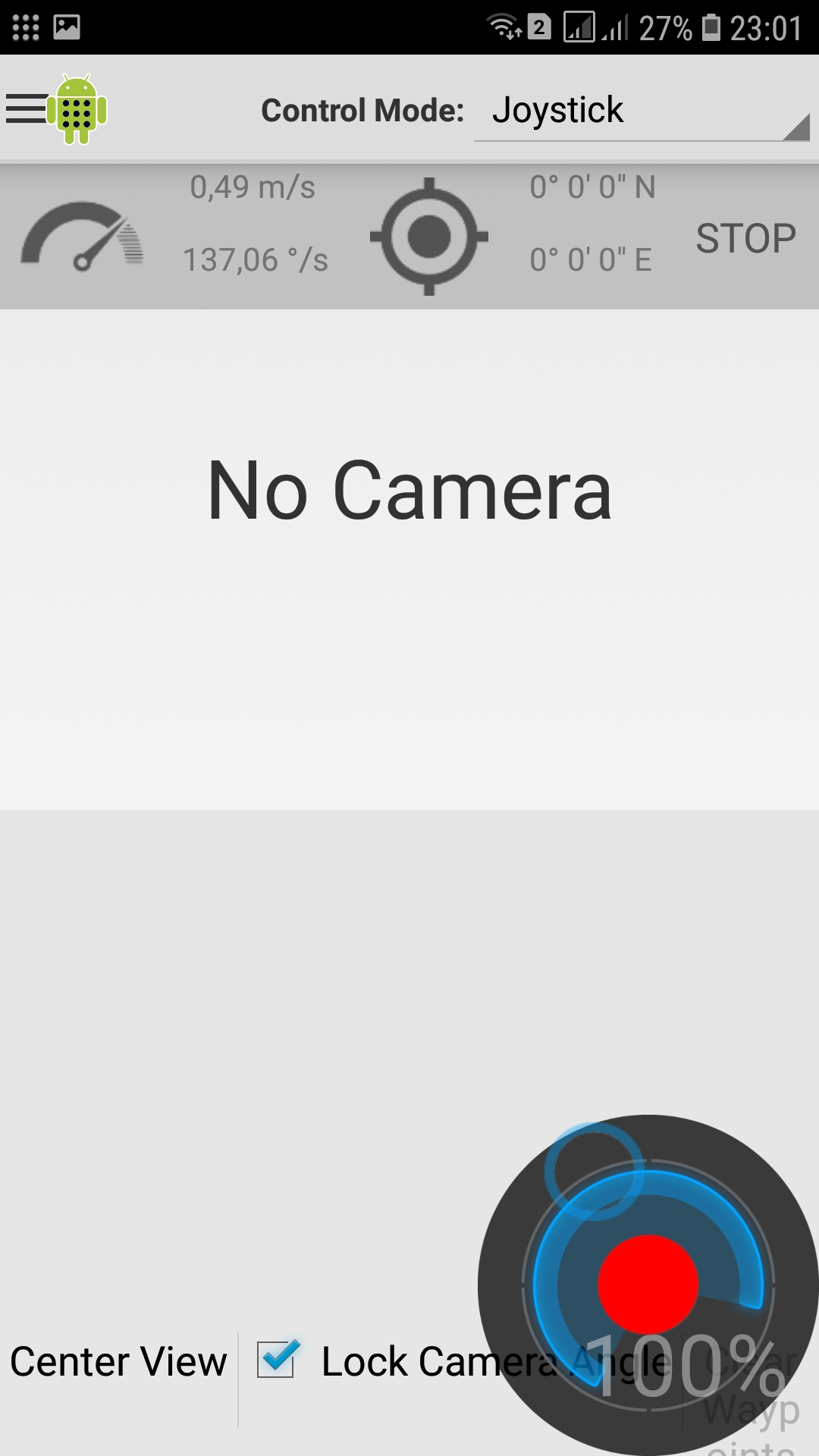

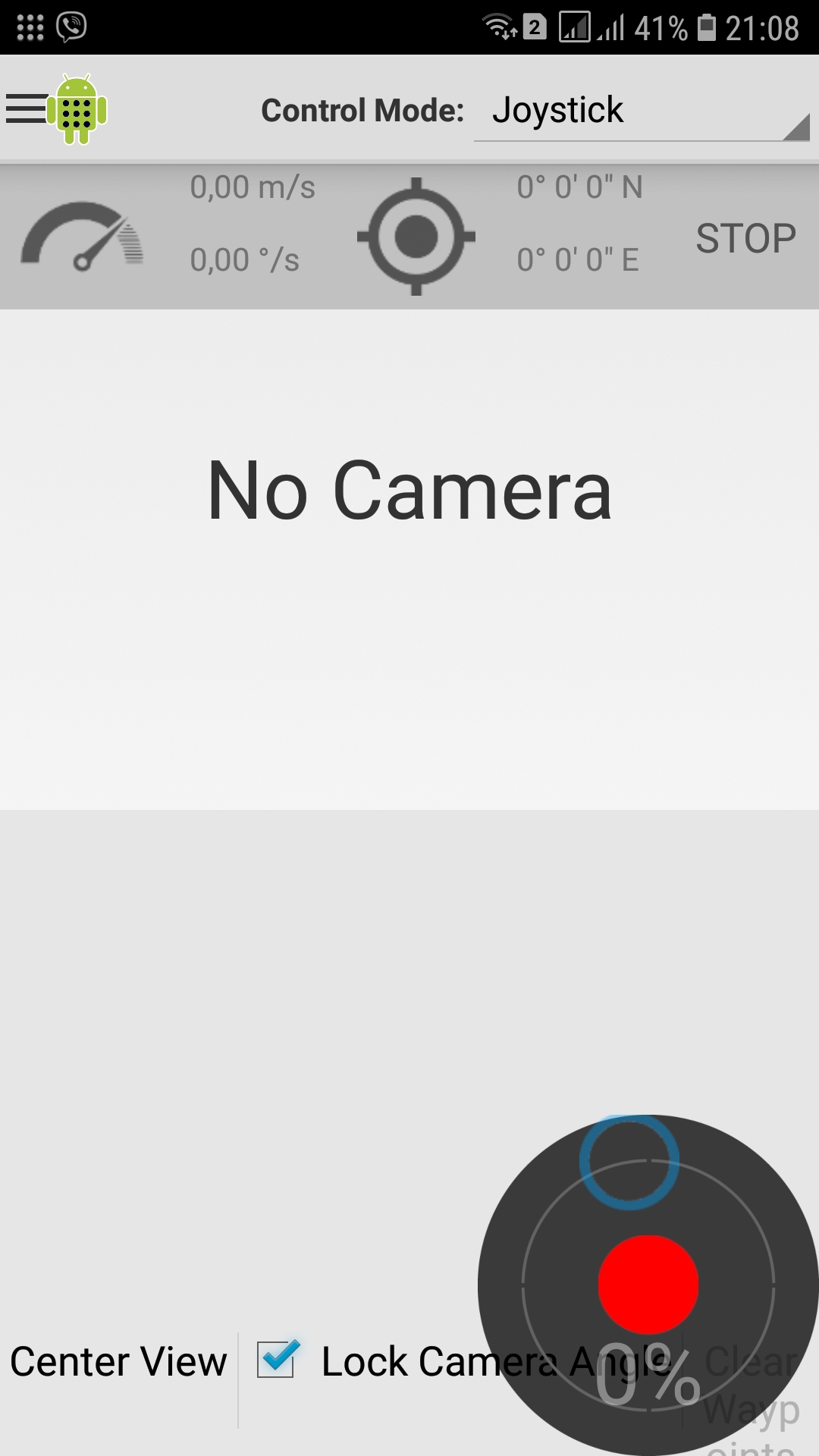

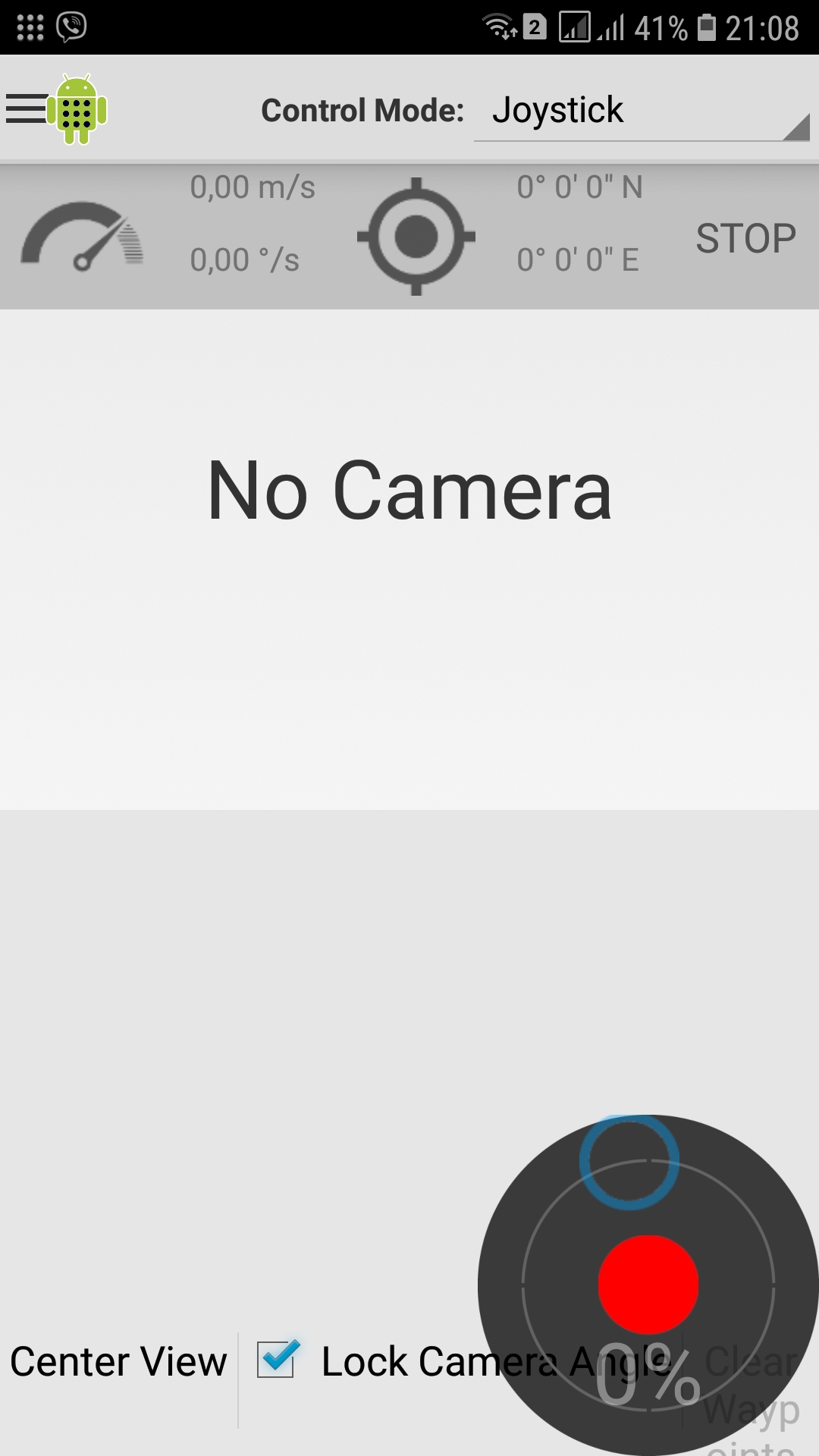

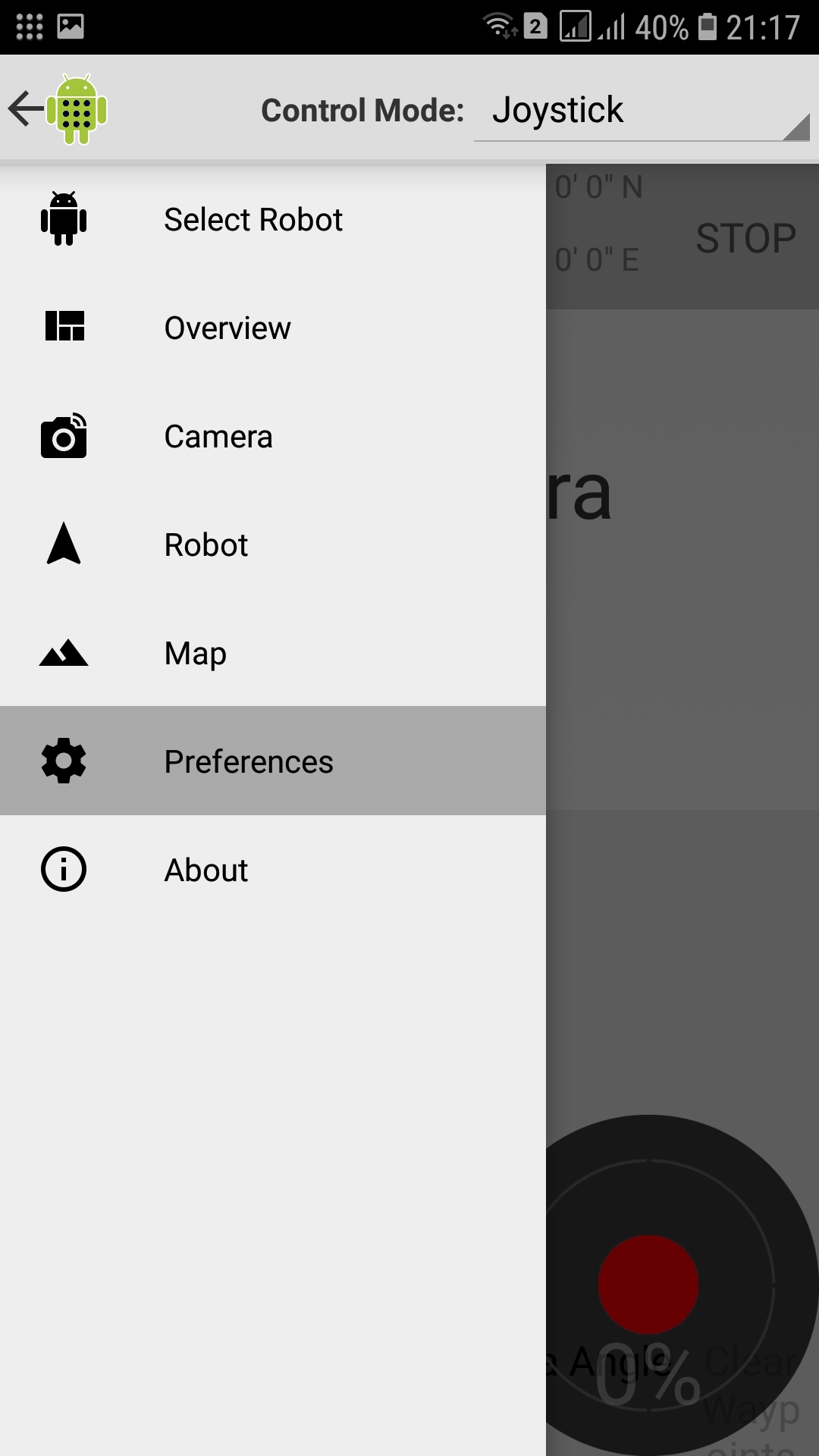

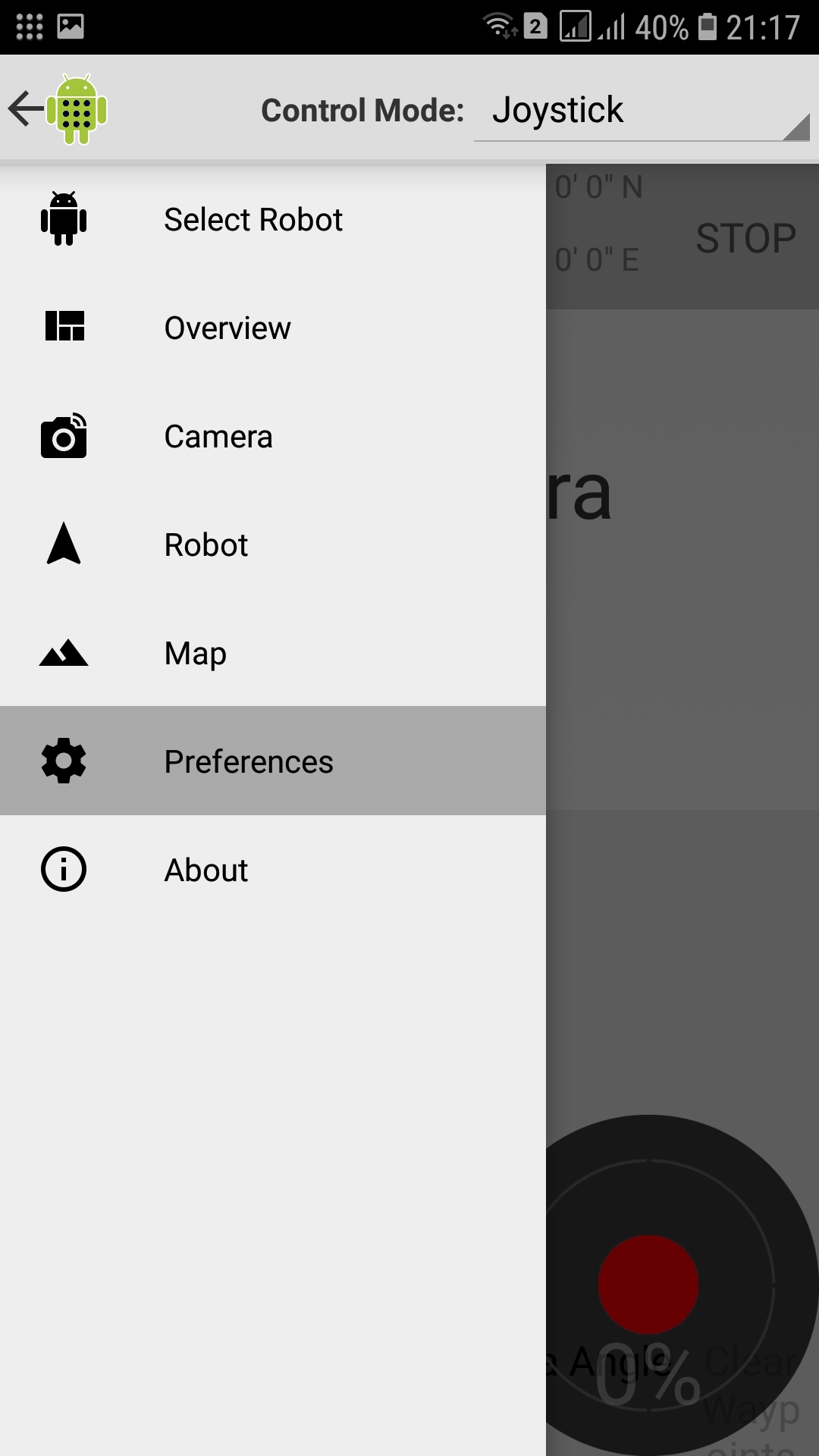

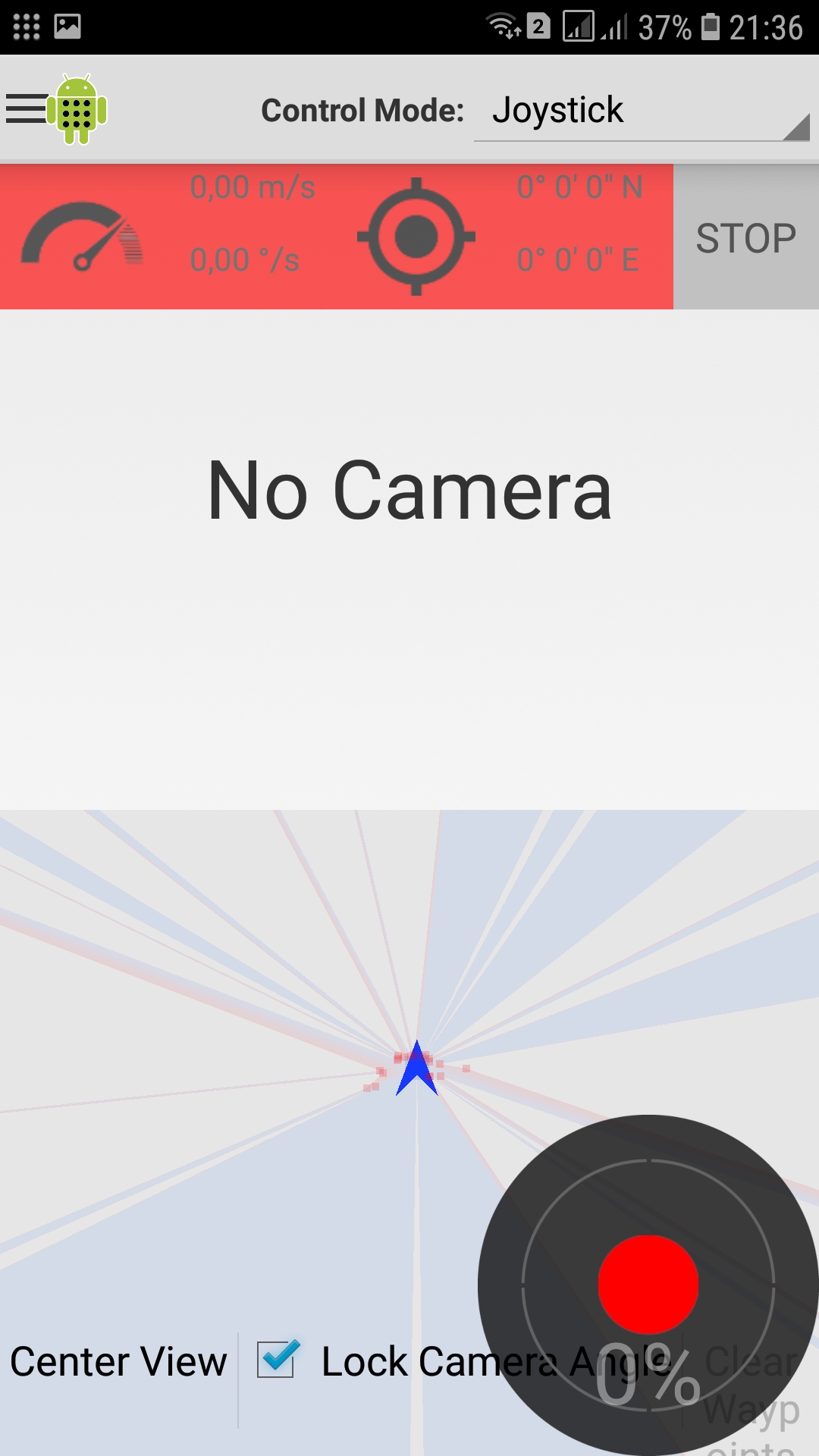

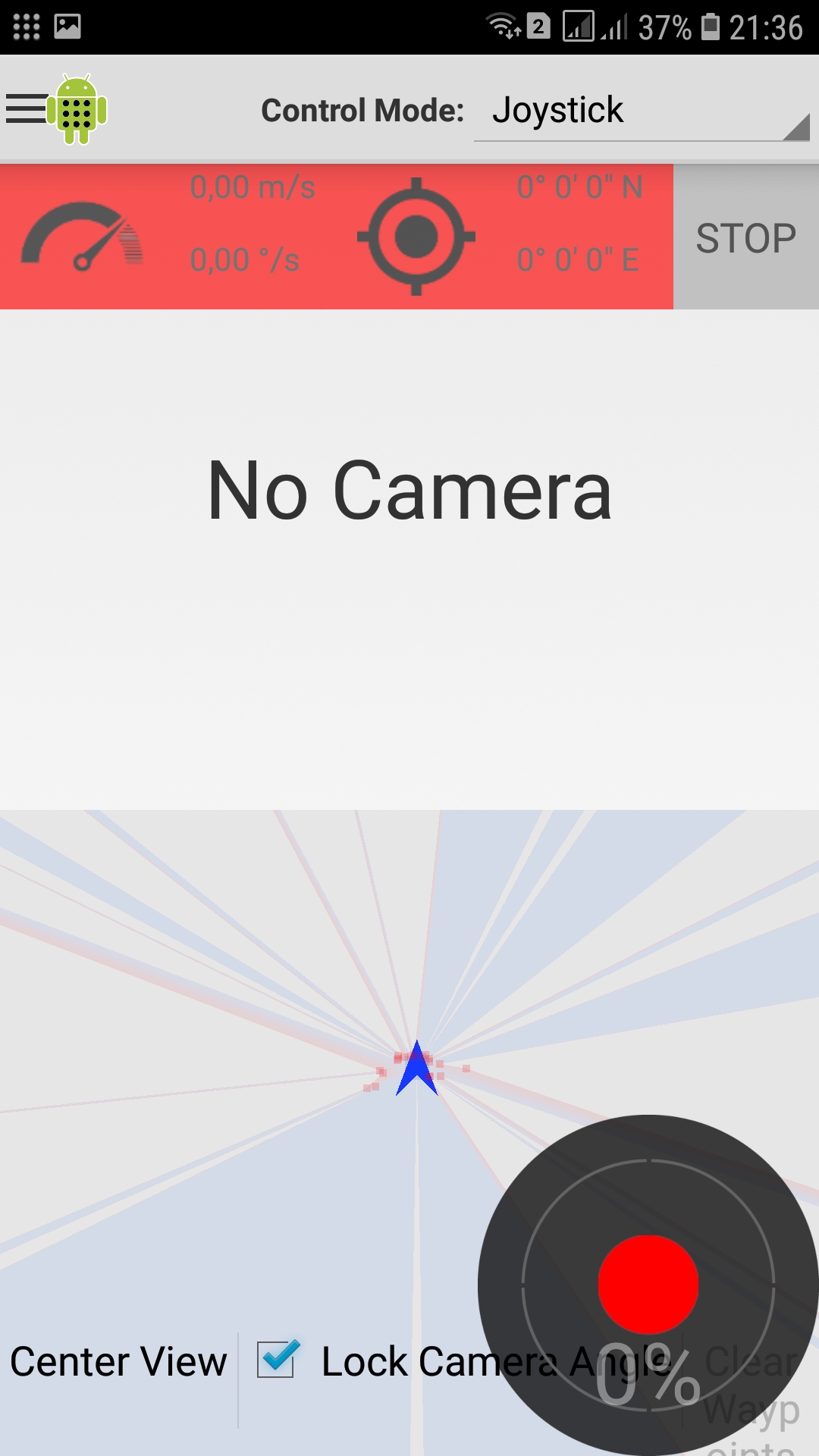

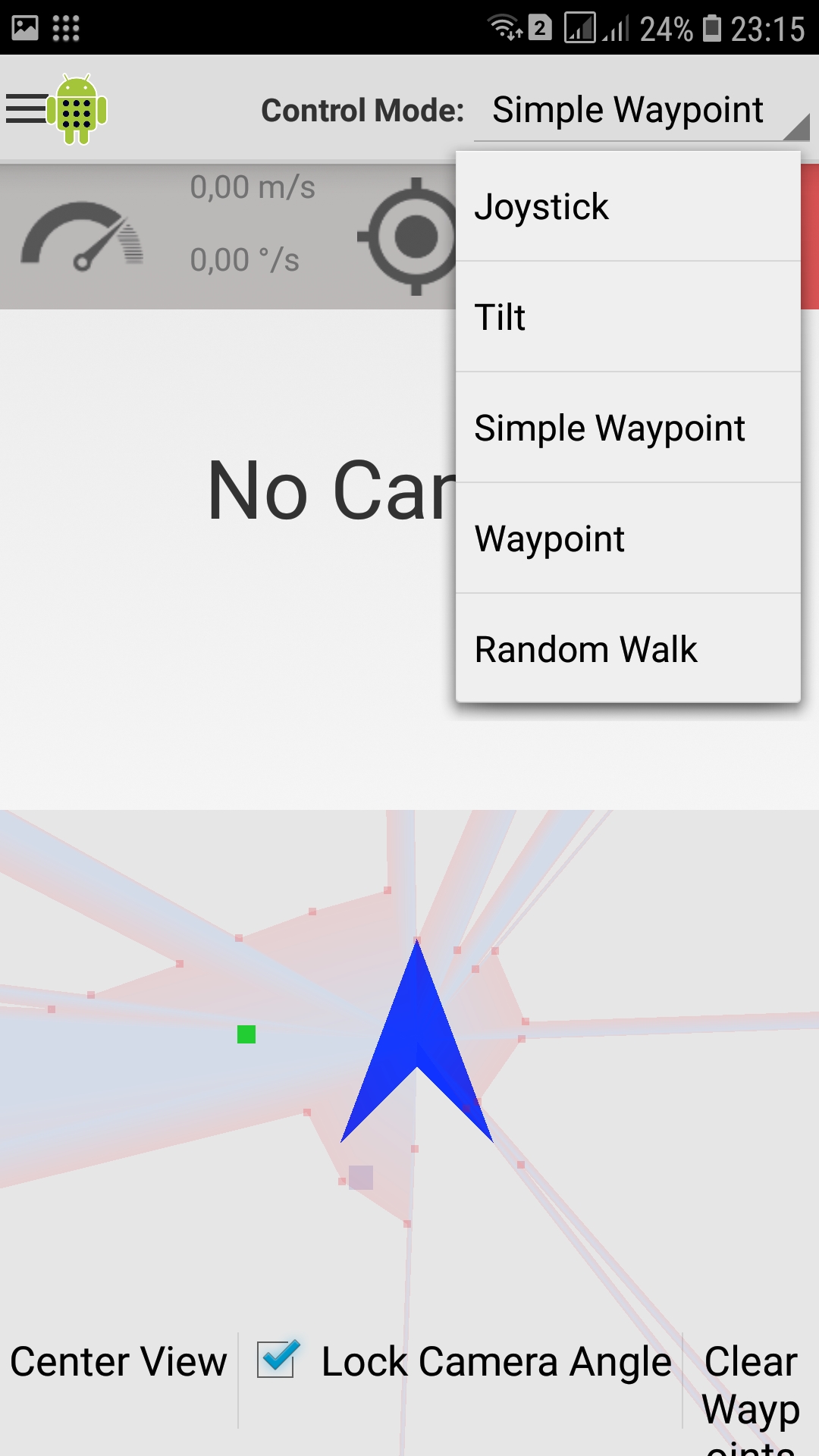

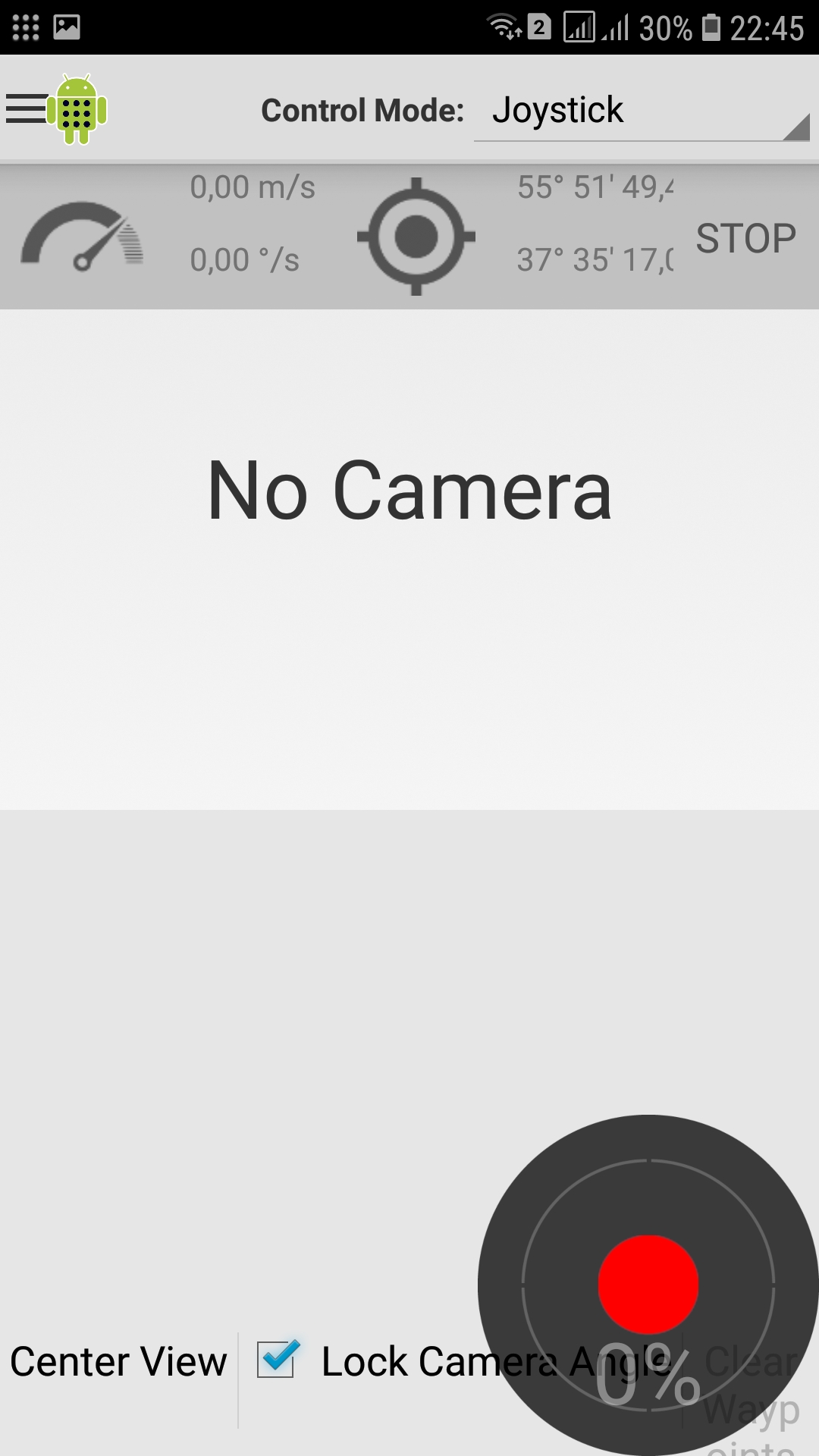

In the ROS Control app on the phone, tap the row with the robot's name to open the robot control screen:

picture

Now, if you drag the joystick with your finger (in any direction), the robot will move. It is that simple.

If you watch the output of the motor driver node running on the Raspberry Pi, you will see that the phone publishes messages and the driver receives them in the appropriate format:

All other topics work on the same principle.

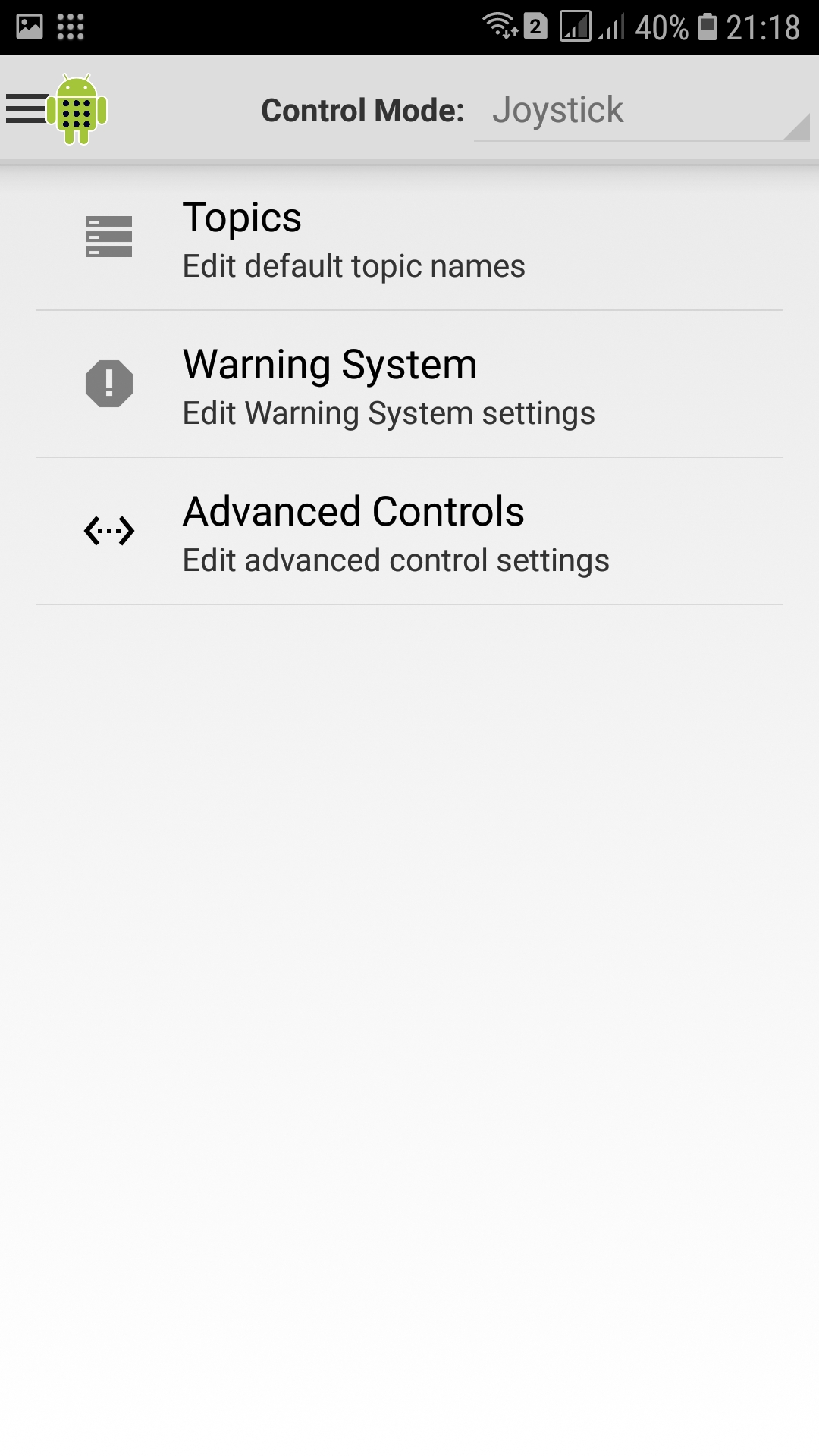

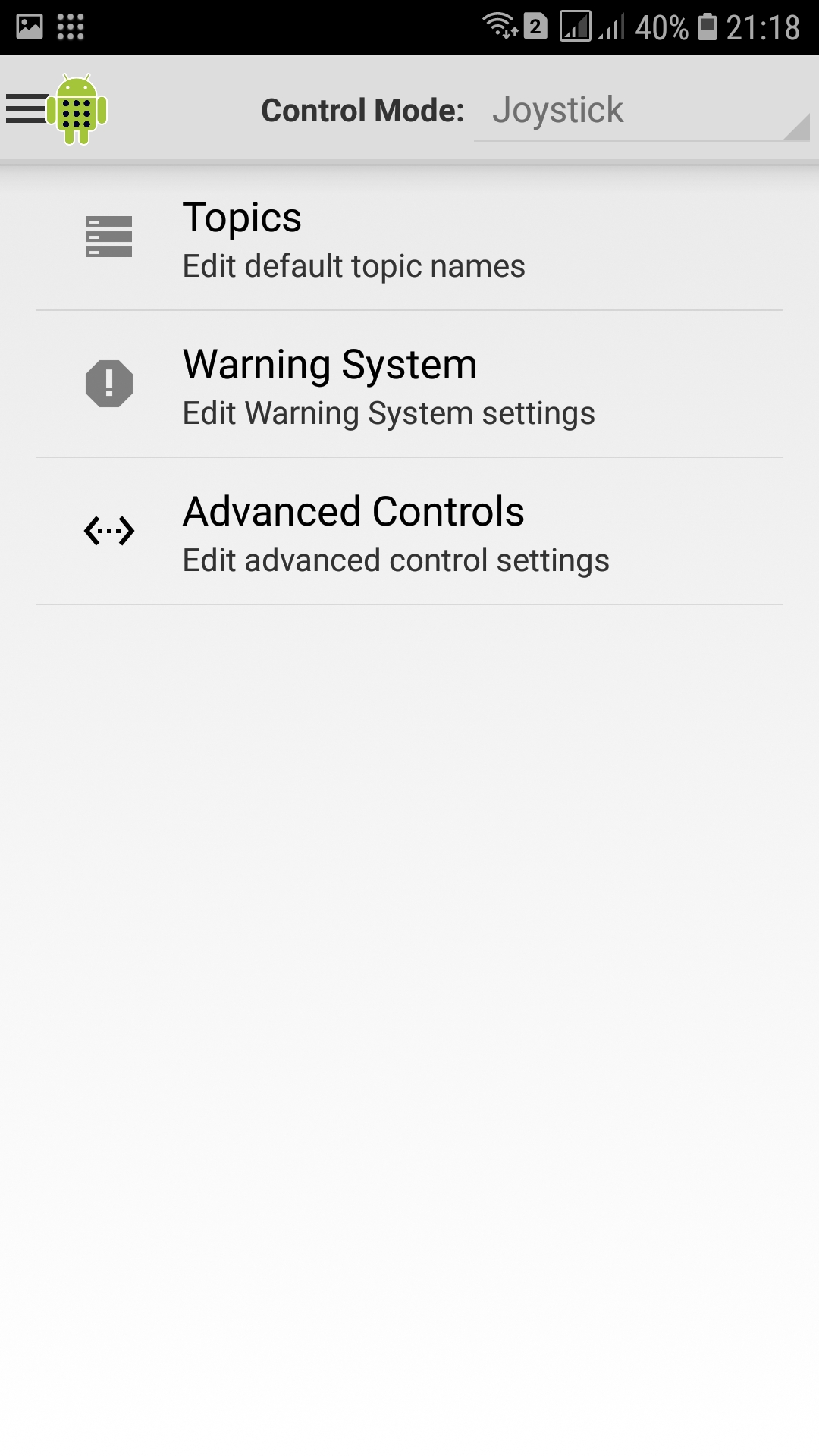
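To make the "appropriate format" more concrete: velocity commands in ROS are usually sent as geometry_msgs/Twist, with the forward speed in linear.x and the turn rate in angular.z. That both the app and part2_cmr.py use exactly this type is an assumption here, and the function name and the wheel_base value below are hypothetical. As a rough sketch, a differential drive driver could turn such a command into wheel speeds like this:

```python
def twist_to_wheel_speeds(linear_x, angular_z, wheel_base=0.14):
    """Convert a body velocity command into (left, right) wheel speeds in m/s.

    linear_x   -- forward speed in m/s (Twist.linear.x)
    angular_z  -- turn rate in rad/s, positive is counter-clockwise (Twist.angular.z)
    wheel_base -- distance between the two wheels in metres (hypothetical value)
    """
    half_base = wheel_base / 2.0
    # When turning left (positive angular_z) the right wheel must run faster.
    left = linear_x - angular_z * half_base
    right = linear_x + angular_z * half_base
    return left, right


if __name__ == "__main__":
    print(twist_to_wheel_speeds(0.2, 0.0))  # straight ahead: equal wheel speeds
    print(twist_to_wheel_speeds(0.0, 1.0))  # turning in place: opposite signs
```

Pushing the joystick straight forward corresponds to a command with only linear_x set, so both wheels get the same speed. Pushing it sideways adds angular_z, and the wheels get different speeds.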

* If something does go wrong, then while in robot control mode tap the three horizontal bars in the upper left of the app screen. Go to “Preferences” and then select “Topics”:

picture

picture

Next, tap the relevant topic and enter its path (Value):

picture

Let's see what the lidar looks like in the application.

On the robot, run the lidar node:

roslaunch rplidar_ros rplidar.launch

If you re-enter the application, the robot control screen will show the characteristic scan points and a blue arrow:

picture
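The points on the phone screen are the lidar ranges drawn around the robot. A sensor_msgs/LaserScan message carries a list of distances (ranges) plus the start angle and angular step (angle_min, angle_increment), so each reading can be converted to an x, y point in the lidar frame. The sketch below illustrates that conversion in plain Python. The function name and the default range limits are assumptions, and this is not taken from the app's source:

```python
import math


def scan_to_points(ranges, angle_min, angle_increment,
                   range_min=0.15, range_max=12.0):
    """Convert LaserScan-style polar readings to (x, y) points in metres.

    ranges          -- list of distances, one per beam
    angle_min       -- angle of the first beam in radians
    angle_increment -- angular step between beams in radians
    range_min/max   -- readings outside this interval are dropped
                       (the default limits here are hypothetical)
    """
    points = []
    for i, r in enumerate(ranges):
        # Skip missing or out-of-range readings (inf/nan mean "no return").
        if math.isinf(r) or math.isnan(r) or r < range_min or r > range_max:
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


if __name__ == "__main__":
    # Three beams: ahead, to the left (no return), and behind.
    print(scan_to_points([1.0, float("inf"), 2.0], 0.0, math.pi / 2))
```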

* You can also drive by tilting the phone in different directions after selecting the Tilt control mode:

picture

There are other "driving" modes, including one that lets you tap a destination point on the map right on the phone. But since the map shown is very approximate, such a trip is only nominal: the robot ends up going nowhere in particular.

With the motion topics, as with the camera (which we will not cover), everything is more or less clear, so let's move on to GPS.

GPS is of little use indoors, but it is interesting to see how it works.

Any USB GPS dongle will do for testing. Mine is this one:

picture

To make it work, you need to install the GPS node on the Raspberry Pi.

But first, let’s check that the GPS dongle itself works; otherwise everything that follows is pointless.

Install the packages on the Raspberry Pi:

sudo apt-get install gpsd

sudo apt-get install gpsd-clients

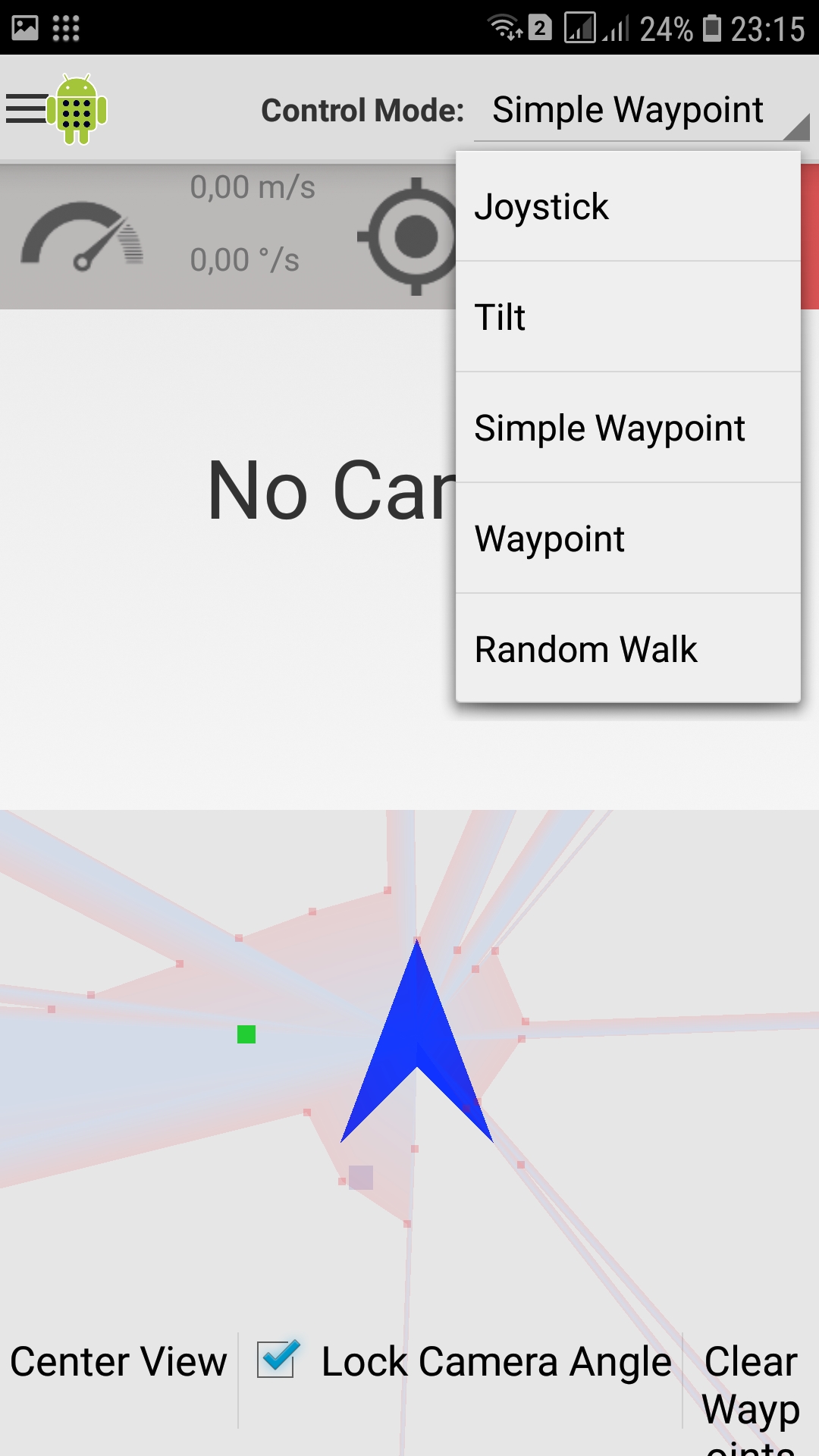

Check that the GPS dongle shows up among the USB devices:

lsusb

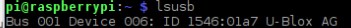

Now check that the GPS is receiving something:

gpscat -s 9600 /dev/ttyACM1

* Instead of ttyACM1, the device may appear under another name (ttyACM0, ttyUSB0, ttyAMA0), depending on which port the GPS dongle is on.

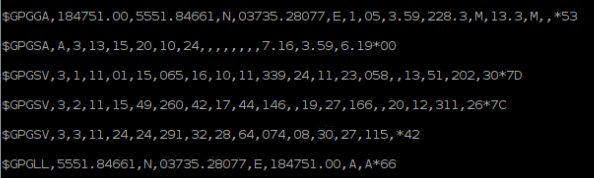

GPS messages will stream into the terminal:

picture

More structured, easier-to-read output can be obtained as follows:

gpsmon /dev/ttyACM1

picture

* The coordinates shown are not mine, which is strange. It is not clear why.
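The raw lines printed by gpscat are NMEA 0183 sentences. One way to cross-check the numbers shown by gpsmon is to decode a GGA sentence by hand: latitude and longitude arrive as ddmm.mmmm and dddmm.mmmm, followed by a hemisphere letter and a fix-quality field (0 means no fix). The sketch below is a minimal pure-Python decoder for illustration only. The function names are hypothetical and it is not the parser used by nmea_navsat_driver:

```python
def nmea_checksum(body):
    """XOR of all characters between '$' and '*' (the NMEA checksum)."""
    value = 0
    for ch in body:
        value ^= ord(ch)
    return value


def _to_degrees(field, hemisphere):
    """Convert NMEA (d)ddmm.mmmm text to signed decimal degrees."""
    dot = field.find(".")
    if dot < 0:
        dot = len(field)
    degrees = float(field[:dot - 2])   # everything before the two minute digits
    minutes = float(field[dot - 2:])   # mm.mmmm
    value = degrees + minutes / 60.0
    return -value if hemisphere in ("S", "W") else value


def parse_gga(sentence):
    """Return (lat, lon, fix_quality) from a GGA sentence, or None if unusable."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, given = sentence[1:].partition("*")
    try:
        if int(given[:2], 16) != nmea_checksum(body):
            return None                # corrupted line, e.g. wrong baud rate
    except ValueError:
        return None
    fields = body.split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        return None
    if not fields[2] or not fields[4]:
        return None                    # receiver has no position yet
    lat = _to_degrees(fields[2], fields[3])
    lon = _to_degrees(fields[4], fields[5])
    quality = int(fields[6]) if fields[6] else 0
    return lat, lon, quality
```

Feeding it a line copied from the gpscat output, for example `parse_gga(line)`, gives decimal degrees that can be pasted into any map service for comparison.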

Install the GPS node on the Raspberry Pi:

sudo apt-get install libgps-dev

cd /home/pi/rosbots_catkin_ws/src

git clone https://github.com/ros-drivers/nmea_navsat_driver

git clone https://github.com/ros/roslint

cd /home/pi/rosbots_catkin_ws

catkin_make

Now that the GPS node is built, run it (on the robot):

rosrun nmea_navsat_driver nmea_serial_driver _port:=/dev/ttyACM1 _baud:=9600

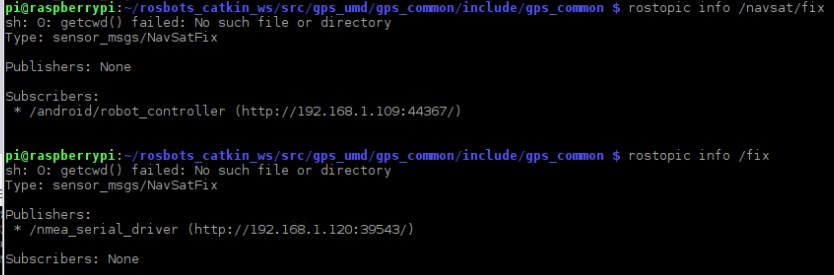

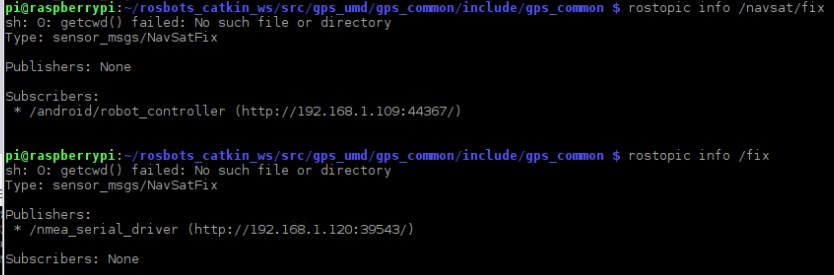

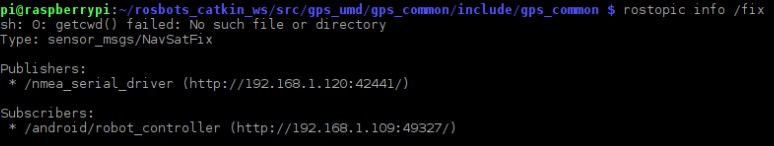

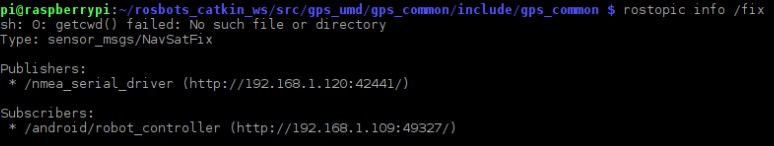

If you list the topics (in another terminal on the robot), you can see that the phone subscribes to one topic while the GPS node publishes to another:

picture

Change the receiving topic on the phone to /fix through the settings, as described above.

After the change, publishing and receiving happen on the same topic:

picture

And on the phone you can observe the coordinates:

picture

Odometry (topic /odom).

If you run the odometry node from previous posts, then as the robot moves you can also see its linear and angular velocities on the phone (these velocities are not computed from GPS).

On the robot:

cd /home/pi/rosbots_catkin_ws/src/rosbots_driver/scripts/rosbots_driver

python diff_tf.py

On the phone:

picture
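For reference, the linear and angular velocities shown on the phone can be derived from wheel encoder counts alone, which is why GPS is not involved. The sketch below shows the standard differential drive calculation in plain Python. It is not the code of diff_tf.py, and ticks_per_meter and wheel_base are hypothetical values that would have to be measured on the actual robot:

```python
import math


def update_odometry(pose, d_left_ticks, d_right_ticks, dt,
                    ticks_per_meter=1000.0, wheel_base=0.14):
    """Advance a differential drive pose by one encoder reading.

    pose            -- (x, y, theta) in metres and radians
    d_left_ticks    -- encoder ticks counted on the left wheel since last call
    d_right_ticks   -- the same for the right wheel
    dt              -- elapsed time in seconds (must be > 0)
    ticks_per_meter -- encoder resolution (hypothetical value)
    wheel_base      -- distance between the wheels in metres (hypothetical value)

    Returns (new_pose, linear_velocity, angular_velocity).
    """
    x, y, theta = pose
    d_left = d_left_ticks / ticks_per_meter
    d_right = d_right_ticks / ticks_per_meter

    d_center = (d_left + d_right) / 2.0        # distance travelled by the robot centre
    d_theta = (d_right - d_left) / wheel_base  # change of heading

    # Integrate along the average heading over the interval.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    theta += d_theta

    return (x, y, theta), d_center / dt, d_theta / dt


if __name__ == "__main__":
    pose = (0.0, 0.0, 0.0)
    pose, v, w = update_odometry(pose, 100, 100, 0.1)  # both wheels forward
    print(pose, v, w)
```

Equal tick counts on both wheels give pure forward motion with zero angular velocity. Opposite counts give rotation in place with zero linear velocity.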