My favorite pastime is opening the DevTools tab and checking how much a website's artifacts weigh. In this article I'll tell you how our front-end platform team reduced the application's weight by 30% in one day without changing the site's code. No tricks, no sign-ups: just nginx, Docker and Node.js (optional).

Why

Front-end applications are heavy these days: built artifacts can weigh 2-3 MB or even more. Fortunately, compression algorithms come to users' aid.

Until recently, we used only Gzip, which was introduced back in 1992. It is probably the most popular compression algorithm on the web and is supported by every browser from IE 6 onward.

As a reminder, the Gzip compression level ranges from 1 to 9 (higher levels compress better but take longer). Compression can be done either on the fly or statically.

- “On the fly” (dynamically): artifacts are stored exactly as the build produced them and are compressed while being delivered to the client. In our case, nginx does this.

- Statically: artifacts are compressed after the build, and the HTTP server sends them to the client as is.

Obviously, the first option costs server resources on every request. The second moves that cost to the stage where the application is built and packaged.
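To make the trade-off concrete, here is a minimal Node.js sketch that uses only the built-in zlib module. It is an illustration, not our production setup: the handler names are hypothetical, and levels 4 and 9 simply mirror the settings discussed in this article. The counter shows that dynamic compression runs once per request, while static compression runs only once.

```javascript
const zlib = require("zlib");

// Counts how many times the compressor actually runs.
let compressionRuns = 0;

function gzip(buffer, level) {
  compressionRuns += 1;
  return zlib.gzipSync(buffer, { level });
}

// "On the fly": every request pays for compression (like nginx `gzip on`).
function dynamicHandler(asset) {
  return () => gzip(asset, 4);
}

// "Statically": compress once after the build, then serve the same bytes.
function staticHandler(asset) {
  const precompressed = gzip(asset, 9);
  return () => precompressed;
}

const asset = Buffer.from("console.log('hello');\n".repeat(1000));

// Simulate three requests against each strategy.
compressionRuns = 0;
const serveDynamic = dynamicHandler(asset);
for (let i = 0; i < 3; i++) serveDynamic();
const dynamicRuns = compressionRuns;

compressionRuns = 0;
const serveStatic = staticHandler(asset);
for (let i = 0; i < 3; i++) serveStatic();
const staticRuns = compressionRuns;

console.log({ dynamicRuns, staticRuns }); // { dynamicRuns: 3, staticRuns: 1 }
```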

Our front end was compressed dynamically at level 4. Here is how a compressed artifact compares with the original at each level:

| Compression level | Artifact weight, KB | Compression time, ms |
|---|---|---|
| Original (uncompressed) | 2522 | - |
| 1 | 732 | 42 |
| 2 | 702 | 44 |
| 3 | 683 | 48 |
| 4 | 636 | 55 |
| 5 | 612 | 65 |
| 6 | 604 | 77 |
| 7 | 604 | 80 |
| 8 | 603 | 104 |
| 9 | 601 | 102 |

As you can see, even level 4 shrinks the artifact about fourfold (from 2522 KB to 636 KB)! The difference between levels 4 and 9 is only 35 KB, about 1.4% of the original size, yet compression takes almost twice as long (102 ms vs 55 ms).

Recently we thought: why not switch to Brotli? And at the highest compression level, no less!

By the way, Google introduced this algorithm in 2015, and it supports compression levels up to 11. Even Brotli's level 4 compresses better than Gzip's level 9 (574 KB vs 601 KB in our measurements). Inspired, I quickly wrote a script to compress our artifacts with Brotli. The results are below:

| Compression level | Artifact weight, KB | Compression time, ms |
|---|---|---|
| Original (uncompressed) | 2522 | - |
| 1 | 662 | 128 |
| 2 | 612 | 155 |
| 3 | 601 | 156 |
| 4 | 574 | 202 |
| 5 | 526 | 227 |
| 6 | 512 | 249 |
| 7 | 501 | 303 |
| 8 | 496 | 359 |
| 9 | 492 | 420 |
| 10 | 452 | 3708 |
| 11 | 446 | 8257 |

However, the table shows that even Brotli's level 1 takes longer than Gzip's level 9 (128 ms vs 102 ms), and level 11 takes as much as 8.3 seconds! That worried me.

On the other hand, the results were clearly impressive. Next, I tried moving compression into nginx and looked up the documentation. It turned out to be extremely simple:

```nginx
brotli on;
brotli_comp_level 11;
brotli_types text/plain text/css application/javascript;
```

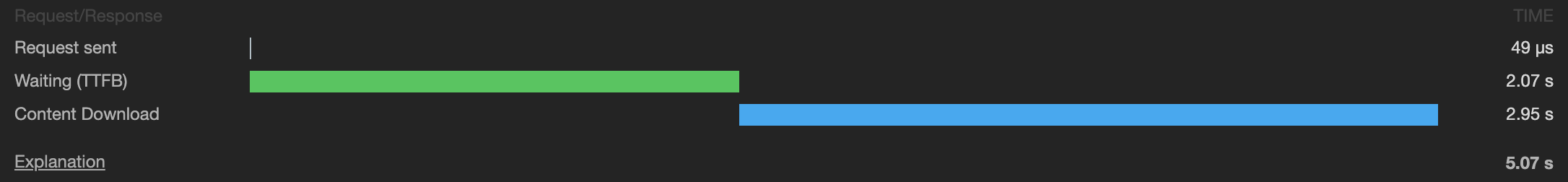

I built the Docker image, launched the container and was unpleasantly surprised:

The download time of my file jumped from 100 ms to 5 seconds, a fiftyfold increase! The application became unusable.

After digging deeper into the documentation, I realized that precompressed files can be served statically. I ran my earlier script over the same artifacts, put them into the container and launched it. Download time was back to normal: victory! But it was too early to celebrate, because only about 80% of browsers support this type of compression.

This means we had to keep backward compatibility, and we also wanted to use Gzip's most effective level. That is how the idea came up for a file compression utility, later named “Jackal” (“Shakal” in Russian, hence the image name mngame/shakal).

What do we need?

Nginx, Docker, and Node.js, although you can also use bash if you wish.

With Nginx, almost everything is clear:

```nginx
brotli off;
brotli_static on;
gzip_static on;
```

But what about applications that have not yet updated their Docker image? Right: keep on-the-fly compression as a fallback:

```nginx
gzip on;
gzip_comp_level 4;
gzip_types text/plain text/css application/javascript;
```

Here is how it works:

With each request, the client sends an Accept-Encoding header that lists the compression algorithms it supports, separated by commas. Usually this is deflate, gzip, br.

If the header contains br, nginx looks for a file with the .br extension. If there is none and the client supports Gzip, it looks for a .gz file. If that is missing too, nginx compresses the file on the fly at level 4 and sends it.

If the client supports no compression at all, the server sends the artifacts in their original form.
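The negotiation described above can be approximated in a few lines of JavaScript. This is a simplified model, not nginx's actual implementation. It ignores q-value ordering (apart from treating q=0 as a refusal), gzip_types, gzip_min_length and other directives, and the function names are hypothetical. File existence is passed in as a callback, so the logic can be checked without a server.

```javascript
// Parses an Accept-Encoding header into the set of encodings the client accepts.
// Entries with q=0 mean "not acceptable" and are dropped.
function acceptedEncodings(header) {
  const accepted = new Set();
  for (const part of (header || "").split(",")) {
    const [rawName, ...params] = part.split(";");
    const name = rawName.trim().toLowerCase();
    const q = params.map((p) => p.trim().toLowerCase()).find((p) => p.startsWith("q="));
    const refused = q !== undefined && parseFloat(q.slice(2)) === 0;
    if (name && !refused) accepted.add(name);
  }
  return accepted;
}

// Mirrors the order described above: .br, then .gz, then on-the-fly gzip
// at level 4, then the original file.
function chooseResponse(file, acceptEncoding, exists) {
  const accepted = acceptedEncodings(acceptEncoding);
  if (accepted.has("br") && exists(`${file}.br`)) {
    return { path: `${file}.br`, encoding: "br" };
  }
  if (accepted.has("gzip")) {
    if (exists(`${file}.gz`)) return { path: `${file}.gz`, encoding: "gzip" };
    return { path: file, encoding: "gzip", compressOnTheFly: true, level: 4 };
  }
  return { path: file, encoding: "identity" };
}

// Usage: an app whose image already has .br/.gz files, and one that does not.
const files = new Set(["app.js", "app.js.br", "app.js.gz", "old.js"]);
console.log(chooseResponse("app.js", "deflate, gzip, br", (p) => files.has(p)));
console.log(chooseResponse("old.js", "deflate, gzip, br", (p) => files.has(p)));
```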

However, we hit a problem: our nginx Docker image did not include the Brotli module, so I used a ready-made Docker image as the base.

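Dockerfile for packaging nginx in a project:

```dockerfile
FROM fholzer/nginx-brotli

# Remove the default static content
RUN rm -rf /usr/share/nginx/html/

# Copy the nginx configuration
COPY app/nginx /etc/nginx/conf.d/

# Copy the built artifacts, including the .br and .gz files next to them
COPY dist/ /usr/share/nginx/html/

# Start nginx with the project's config (assumed to set `daemon off;`
# so nginx stays in the foreground and the container keeps running)
CMD nginx -c /etc/nginx/conf.d/nginx.conf
```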

We have sorted out how files are served, but where do the compressed artifacts come from? This is where Jackal comes to the rescue.

"Jackal"

It is a utility for compressing your application's static assets.

Currently it consists of three Node.js scripts packaged in a Docker image based on node:alpine. Let's go through them.

base-compressor is a script that implements the core compression logic.

Input Arguments:

- Compression function: any JavaScript function, so you can plug in your own compression algorithm.

- Compression parameters: an object with the parameters the passed function needs.

- Extension: the extension for compressed artifacts. It must start with a period.

gzip.js calls base-compressor with the Gzip function from the zlib package and compression level 9.

brotli.js calls base-compressor with the Brotli function from the same npm package and compression level 11.
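The article does not reproduce Jackal's source, so the following is only a hypothetical sketch of a base-compressor with that contract (function, parameters, extension). Note one substitution: it uses the Brotli support built into Node.js's zlib module (Node.js 11.7 and later) instead of the npm package mentioned above. That also means it would not run on the node:8.12.0-alpine image shown below.

```javascript
const fs = require("fs");
const zlib = require("zlib");

// Hypothetical re-creation of base-compressor's contract (not Jackal's code):
// takes a compression function, its parameters and an output extension,
// and returns a function that compresses one file into `<file><extension>`.
function baseCompressor(compress, params, extension) {
  if (!extension.startsWith(".")) {
    throw new Error(`Extension must start with ".": ${extension}`);
  }
  return (file) => {
    const compressed = compress(fs.readFileSync(file), params);
    fs.writeFileSync(file + extension, compressed);
    return file + extension;
  };
}

// Equivalent of gzip.js: Gzip at level 9.
const gzipFile = baseCompressor(zlib.gzipSync, { level: 9 }, ".gz");

// Equivalent of brotli.js: Brotli at quality 11, using Node's built-in zlib
// Brotli as a stand-in for the npm package the article mentions.
const brotliFile = baseCompressor(
  zlib.brotliCompressSync,
  { params: { [zlib.constants.BROTLI_PARAM_QUALITY]: 11 } },
  ".br"
);
```

Passing the compressor in as a plain function keeps base-compressor algorithm-agnostic, which is what lets you plug in your own compression algorithm.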

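Dockerfile that builds the Jackal image:

```dockerfile
FROM node:8.12.0-alpine

# Copy the compression scripts
COPY scripts scripts

# Copy package.json and package-lock.json
COPY package*.json scripts/

WORKDIR scripts

# Install node_modules/ exactly as pinned in package-lock.json
RUN npm ci

# Run both compressors one after the other; && stops on the first failure
# (the original `node gzip.js | node brotli.js` piped one script into the other)
CMD node gzip.js && node brotli.js
```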

Now that we know how it works, you can safely run:

```shell
docker run \
  -v $(pwd)/dist:/scripts/dist \
  -e 'dirs=["dist/"]' \
  -i mngame/shakal
```

- `-v $(pwd)/dist:/scripts/dist` mounts the local dist directory into the container. The target must be inside /scripts, because that is the working directory in the container.

- `-e 'dirs=["dist/"]'` sets the dirs environment variable: a JSON array of strings listing the directories inside /scripts to compress.

- `-i` runs the container in interactive mode (keeps STDIN open), and `mngame/shakal` is the image on Docker Hub (docker.io).

In the specified directories, the script recursively compresses all files with the extensions .js, .json, .html and .css and saves .br and .gz versions next to them. On our project this takes about two minutes for roughly 6 MB of artifacts.

At this point, or maybe even earlier, you may have thought: “Why Docker? Why Node? Why not just add two packages to the project's package.json and call them in a postbuild script?”

It pains me to see a project install 100+ packages in CI just to run linters, when the linting stage needs ten at most. That costs CI agent time, your time and, ultimately, time to market.

With Docker, we get a prebuilt image with everything needed for compression already installed. Don't need to compress anything right now? Don't. Need linting? Run only that. Need tests? Run only those. Plus, Jackal gets clean versioning: we don't have to update its dependencies in every project. We just release a new version, and projects use the latest tag.

Result:

- Artifact size went from 636 KB (Gzip, level 4) to 446 KB (Brotli, level 11).

- That is a reduction of about 30%: (636 − 446) / 636 ≈ 29.9%.

- Download time decreased by 10-12%.

- Decompression time, according to the article we relied on, stayed the same.

Summary

You can help your users right now, in your very next PR: add a Jackal compression step after the build, then copy the artifacts into your container. Half an hour of work, and your users will feel a little better.

We managed to cut our front end's weight by 30%, and so can you! Light sites to everyone!

References:

- Docker image of the utility

- Utility repository

- UPD: Thanks to kellas, a CLI version is now available as an npm package