Note: the full source code for this project is available here: [ source ].

When the project I'm working on begins to run out of steam, I add new visualizations that give me motivation to move on.

After the release of the original Task-Bot concept [ translation into Habré], I felt that I was limited by the two-dimensional space in which I worked. It seemed that it was holding back the possibilities for the emergent behavior of bots.

Previous unsuccessful attempts to learn modern OpenGL have put before me a mental barrier, but at the end of July I somehow finally broke through it. Today, at the end of October, I already have a fairly confident understanding of the concepts, so I released my own simple voxel engine, which will be the environment for the life and prosperity of my Task-Bots.

I decided to create my own engine, because I needed full control over the graphics; besides, I wanted to test myself. In a way, I was inventing a bicycle, but I really liked this process!

The ultimate goal of the entire project was a complete simulation of the ecosystem, where bots in the role of agents manipulate the environment and interact with it.

Since the engine has already moved quite a bit forward and I will move on to programming bots again, I decided to write a post about the engine, its functions and implementation in order to focus on higher-level tasks in the future.

Engine concept

The engine is completely written from scratch in C ++ (with some exceptions, such as finding a path). I use SDL2 to render the context and process the input, OpenGL to render the 3D scene, and DearImgui to control the simulation.

I decided to use voxels mainly because I wanted to work with a grid that has many advantages:

- Creating meshes for rendering is well understood to me.

- The world’s data storage capabilities are more diverse and understandable.

- I have already created systems for generating terrain and climate simulations based on meshes.

- The tasks of bots in the grid are easier to parameterize.

The engine consists of a world data system, a rendering system and several auxiliary classes (for example, for sound and input processing).

In the article, I will talk about the current list of features, as well as take a closer look at more complex subsystems.

World class

The world class serves as the base class for storing all the information in the world. It handles the generation, loading, and storage of block data.

The block data is stored in chunks of constant size (16 ^ 3), and the world stores the fragment vector loaded into virtual memory. In large worlds, it is practically necessary to remember only a certain part of the world, which is why I chose this approach.

class World{ public: World(std::string _saveFile){ saveFile = _saveFile; loadWorld(); } //Data Storage std::vector<Chunk> chunks; //Loaded Chunks std::stack<int> updateModels; //Models to be re-meshed void bufferChunks(View view); //Generation void generate(); Blueprint blueprint; bool evaluateBlueprint(Blueprint &_blueprint); //File IO Management std::string saveFile; bool loadWorld(); bool saveWorld(); //other... int SEED = 100; int chunkSize = 16; int tickLength = 1; glm::vec3 dim = glm::vec3(20, 5, 20); //...

Fragments store block data, as well as some other metadata, in a flat array. Initially, I implemented my own sparse octree tree for storing fragments, but it turned out that the random access time is too high to create meshes. And although a flat array is not optimal from the point of view of memory, it provides the ability to very quickly build meshes and manipulate blocks, as well as access to the search path.

class Chunk{ public: //Position information and size information glm::vec3 pos; int size; BiomeType biome; //Data Storage Member int data[16*16*16] = {0}; bool refreshModel = false; //Get the Flat-Array Index int getIndex(glm::vec3 _p); void setPosition(glm::vec3 _p, BlockType _type); BlockType getPosition(glm::vec3 _p); glm::vec4 getColorByID(BlockType _type); };

If I ever implement multi-threaded saving and loading fragments, then converting a flat array into a sparse octree tree and vice versa can be a completely possible option for saving memory. There is still room for optimization!

My implementation of a sparse octree tree is stored in the code, so you can safely use it.

Fragment storage and memory handling

Fragments are visible only when they are within the rendering distance of the current camera position. This means that when the camera moves, you need to dynamically load and compose fragments in the meshes.

Fragments are serialized using the boost library, and world data is stored as a simple text file, in which each fragment is a line of the file. They are generated in a specific order so that they can be "ordered" in a world file. This is important for further optimizations.

In the case of a large world, the main bottleneck is reading the world file and loading / writing fragments. Ideally, we only need to download and transfer the world file.

To do this, the

World::bufferChunks()

method removes fragments that are in virtual memory but are invisible, and intelligently loads new fragments from the world file.

By intelligence is meant that he simply decides which new fragments to load, sorting them by their position in the save file, and then performing a single pass. Everything is very simple.

void World::bufferChunks(View view){ //Load / Reload all Visible Chunks evaluateBlueprint(blueprint); //Chunks that should be loaded glm::vec3 a = glm::floor(view.viewPos/glm::vec3(chunkSize))-view.renderDistance; glm::vec3 b = glm::floor(view.viewPos/glm::vec3(chunkSize))+view.renderDistance; //Can't exceed a certain size a = glm::clamp(a, glm::vec3(0), dim-glm::vec3(1)); b = glm::clamp(b, glm::vec3(0), dim-glm::vec3(1)); //Chunks that need to be removed / loaded std::stack<int> remove; std::vector<glm::vec3> load; //Construct the Vector of chunks we should load for(int i = ax; i <= bx; i ++){ for(int j = ay; j <= by; j ++){ for(int k = az; k <= bz; k ++){ //Add the vector that we should be loading load.push_back(glm::vec3(i, j, k)); } } } //Loop over all existing chunks for(unsigned int i = 0; i < chunks.size(); i++){ //Check if any of these chunks are outside of the limits if(glm::any(glm::lessThan(chunks[i].pos, a)) || glm::any(glm::greaterThan(chunks[i].pos, b))){ //Add the chunk to the erase pile remove.push(i); } //Don't reload chunks that remain for(unsigned int j = 0; j < load.size(); j++){ if(glm::all(glm::equal(load[j], chunks[i].pos))){ //Remove the element from load load.erase(load.begin()+j); } } //Flags for the Viewclass to use later updateModels = remove; //Loop over the erase pile, delete the relevant chunks. while(!remove.empty()){ chunks.erase(chunks.begin()+remove.top()); remove.pop(); } //Check if we want to load any guys if(!load.empty()){ //Sort the loading vector, for single file-pass std::sort(load.begin(), load.end(), [](const glm::vec3& a, const glm::vec3& b) { if(ax > bx) return true; if(ax < bx) return false; if(ay > by) return true; if(ay < by) return false; if(az > bz) return true; if(az < bz) return false; return false; }); boost::filesystem::path data_dir( boost::filesystem::current_path() ); data_dir /= "save"; data_dir /= saveFile; std::ifstream in((data_dir/"world.region").string()); Chunk _chunk; int n = 0; while(!load.empty()){ //Skip Lines (this is dumb) while(n < load.back().x*dim.z*dim.y+load.back().y*dim.z+load.back().z){ in.ignore(1000000,'\n'); n++; } //Load the Chunk { boost::archive::text_iarchive ia(in); ia >> _chunk; chunks.push_back(_chunk); load.pop_back(); } } in.close(); } }

An example of loading fragments with a small rendering distance. Screen distortion artifacts are caused by video recording software. Noticeable spikes in downloads sometimes occur, mainly caused by meshing

In addition, I set a flag indicating that the renderer should recreate the mesh of the loaded fragment.

Blueprint Class and editBuffer

editBuffer is a sortable bufferObjects container that contains information about editing in world space and fragment space.

//EditBuffer Object Struct struct bufferObject { glm::vec3 pos; glm::vec3 cpos; BlockType type; }; //Edit Buffer! std::vector<bufferObject> editBuffer;

If, when making changes to the world, writing them to a file immediately after making the change, then we will have to transfer the entire text file and write EVERY change. This is terrible in terms of performance.

Therefore, I first write all the changes that need to be made to editBuffer using the addEditBuffer method (which also calculates the position of the changes in the fragment space). Before writing them to a file, I sort the changes by the order of the fragments to which they belong by their location in the file.

Writing changes to a file consists of one file transfer, loading of each line (i.e. fragment), for which there are changes in editBuffer, making all changes and writing it to a temporary file until editBuffer becomes empty. This is done in the

evaluateBlueprint()

function, which is fast enough.

bool World::evaluateBlueprint(Blueprint &_blueprint){ //Check if the editBuffer isn't empty! if(_blueprint.editBuffer.empty()){ return false; } //Sort the editBuffer std::sort(_blueprint.editBuffer.begin(), _blueprint.editBuffer.end(), std::greater<bufferObject>()); //Open the File boost::filesystem::path data_dir(boost::filesystem::current_path()); data_dir /= "save"; data_dir /= saveFile; //Load File and Write File std::ifstream in((data_dir/"world.region").string()); std::ofstream out((data_dir/"world.region.temp").string(), std::ofstream::app); //Chunk for Saving Data Chunk _chunk; int n_chunks = 0; //Loop over the Guy while(n_chunks < dim.x*dim.y*dim.z){ if(in.eof()){ return false; } //Archive Serializers boost::archive::text_oarchive oa(out); boost::archive::text_iarchive ia(in); //Load the Chunk ia >> _chunk; //Overwrite relevant portions while(!_blueprint.editBuffer.empty() && glm::all(glm::equal(_chunk.pos, _blueprint.editBuffer.back().cpos))){ //Change the Guy _chunk.setPosition(glm::mod(_blueprint.editBuffer.back().pos, glm::vec3(chunkSize)), _blueprint.editBuffer.back().type); _blueprint.editBuffer.pop_back(); } //Write the chunk back oa << _chunk; n_chunks++; } //Close the fstream and ifstream in.close(); out.close(); //Delete the first file, rename the temp file boost::filesystem::remove_all((data_dir/"world.region").string()); boost::filesystem::rename((data_dir/"world.region.temp").string(),(data_dir/"world.region").string()); //Success! return true; }

The blueprint class contains editBuffer, as well as several methods that allow you to create editBuffers for specific objects (trees, cacti, huts, etc.). Then blueprint can be converted to the position where you want to place the object, and then simply write it to the memory of the world.

One of the biggest difficulties when working with fragments is that changes in several blocks between the boundaries of fragments can turn out to be a monotonous process with a lot of arithmetic modulo and dividing the changes into several parts. This is the main problem that the blueprint class handles brilliantly.

I actively use it at the stage of world generation to expand the “bottleneck” of writing changes to a file.

void World::generate(){ //Create an editBuffer that contains a flat surface! blueprint.flatSurface(dim.x*chunkSize, dim.z*chunkSize); //Write the current blueprint to the world file. evaluateBlueprint(blueprint); //Add a tree Blueprint _tree; evaluateBlueprint(_tree.translate(glm::vec3(x, y, z))); }

The world class stores its own blueprint of changes made to the world, so that when bufferChunks () is called, all changes are written to the hard disk in one pass and then deleted from virtual memory.

Rendering

The renderer in its structure is not very complicated, but it requires knowledge of OpenGL to understand. Not all of its parts are interesting, mainly they are OpenGL functionality wrappers. I have been experimenting with visualization for quite some time to get what I like.

Since the simulation is not from the first person, I chose the orthographic projection. It could be implemented in pseudo-3D format (i.e., to pre-project tiles and overlay them in a software renderer), but it seemed silly to me. I'm glad I switched to using OpenGL.

The base class for rendering is called View, it contains most of the important variables that control the simulation rendering:

- Screen size and shadow texture

- Shader objects, camera, matrix, etc. zoom factors

- Boolean values for almost all renderer functions

- Menu, fog, depth of field, grain texture, etc.

- Colors for lighting, fog, sky, window selection, etc.

In addition, there are several helper classes that do the rendering and wrapping of OpenGL itself!

- Class shader

- Loads, compiles, compiles and uses GLSL shaders

- Model Class

- Contains VAO (Vertex Arrays Object) data fragments for rendering, the function of creating meshes and the render method.

- Class billboard

- Contains the FBO (FrameBuffer Object) to render to - useful for creating post-processing and shadowing effects.

- Sprite class

- Draws a quadrilateral oriented relative to the camera, loaded from a texture file (for bots and objects). Also can handle animations!

- Interface class

- To work with ImGUI

- Audio class

- Very rudimentary sound support (if you compile the engine, press “M”)

High Depth of Field (DOF). At large rendering distances, it can be slow, but I did all this on my laptop. Perhaps on a good computer the brakes will be invisible. I understand that it strains my eyes and did so just for fun.

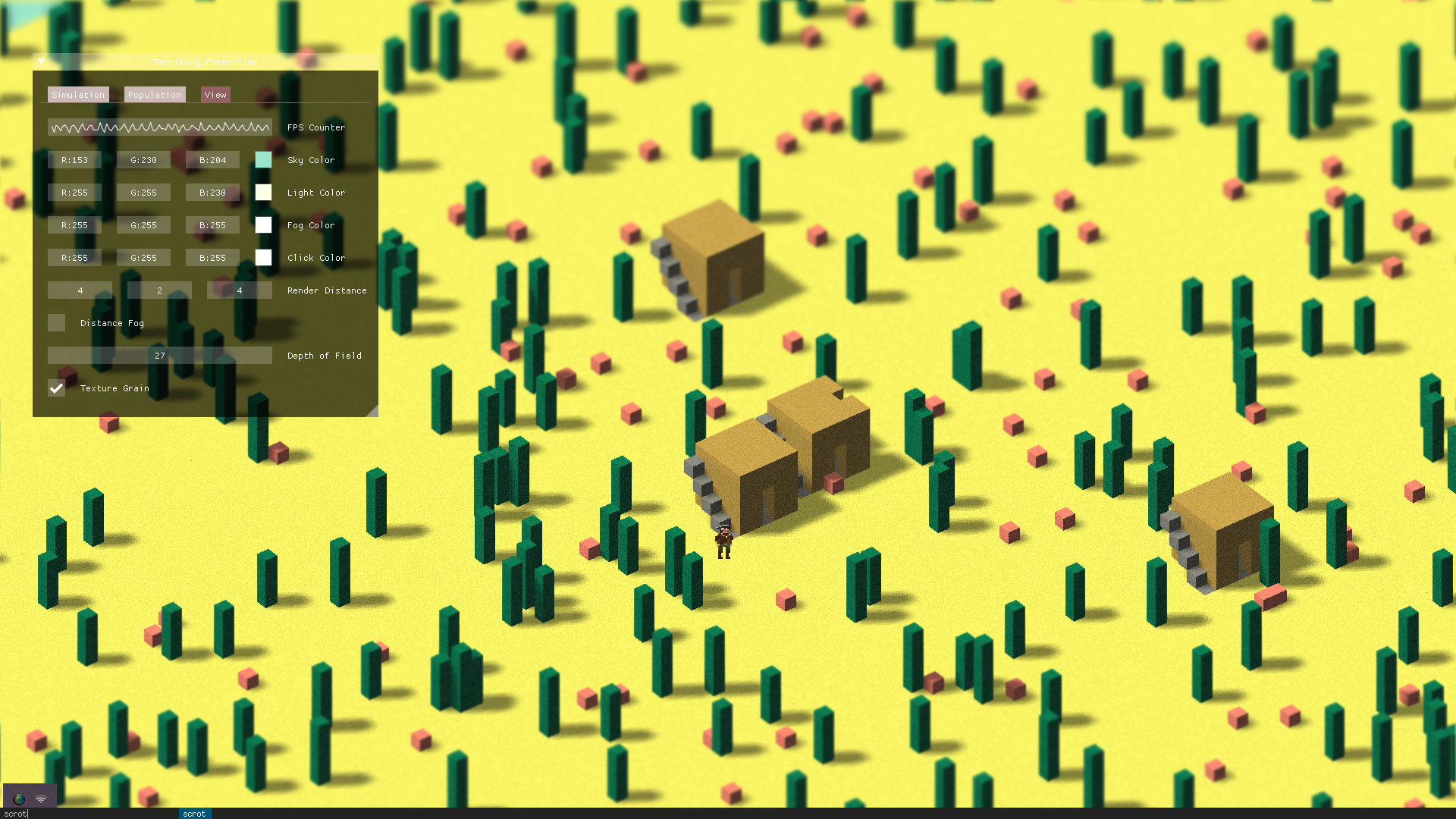

The image above shows some parameters that can be changed during the manipulation. I also implemented switching to full screen mode. The image shows an example of a bot sprite rendered as a textured quadrilateral directed towards the camera. The houses and cacti in the image are built using blueprint.

Creating Fragment Meshes

Initially, I used the naive version of creating meshes: I simply created a cube and discarded vertices that did not touch empty space. However, this solution was slow, and when loading new fragments, the creation of the meshes turned out to be even more narrow “bottlenecks” than access to the file.

The main problem was the efficient creation of rendered VBOs from fragments, but I managed to implement in C ++ my own version of “greedy meshing” (compatible with OpenGL (without strange structures with loops). You can use my code with a clear conscience.

void Model::fromChunkGreedy(Chunk chunk){ //... (this is part of the model class - find on github!) }

In general, the transition to greedy meshing reduced the number of drawn quadrangles by an average of 60%. Then, after further minor optimizations (VBO indexing), the number was reduced by another 1/3 (from 6 vertices per edge to 4 vertices).

When rendering a scene of 5x1x5 fragments in a window that is not maximized, I get an average of about 140 FPS (with VSYNC disabled).

Although I am quite happy with this result, I would still like to come up with a system for rendering non-cubic models from world data. It’s not so easy to integrate with greedy meshing, so it’s worth considering.

Shaders and voxel highlighting

The implementation of GLSL shaders is one of the most interesting and at the same time the most annoying parts of writing the engine due to the complexity of debugging on the GPU. I am not a GLSL specialist, so I had to learn a lot on the go.

The effects I have implemented actively use FBO and texture sampling (for example, blurring, shadowing, and using depth information).

I still don't like the current lighting model, because it doesn’t handle the “dark” very well. I hope this will be fixed in the future when I work on the cycle of changing the day and night.

I also implemented a simple voxel selection function using the modified Bresenham algorithm (this is another advantage of using voxels). It is useful for obtaining spatial information during the simulation. My implementation works only for orthographic projections, but you can use it.

"Highlighted" pumpkin.

Game classes

Several auxiliary classes have been created for processing input, debugging messages, as well as a separate Item class with basic functionality (which will be further expanded).

class eventHandler{ /* This class handles user input, creates an appropriate stack of activated events and handles them so that user inputs have continuous effect. */ public: //Queued Inputs std::deque<SDL_Event*> inputs; //General Key Inputs std::deque<SDL_Event*> scroll; //General Key Inputs std::deque<SDL_Event*> rotate; //Rotate Key Inputs SDL_Event* mouse; //Whatever the mouse is doing at a moment SDL_Event* windowevent; //Whatever the mouse is doing at a moment bool _window; bool move = false; bool click = false; bool fullscreen = false; //Take inputs and add them to stack void input(SDL_Event *e, bool &quit, bool &paused); //Handle the existing stack every tick void update(World &world, Player &player, Population &population, View &view, Audio &audio); //Handle Individual Types of Events void handlePlayerMove(World &world, Player &player, View &view, int a); void handleCameraMove(World &world, View &view); };

My event handler is ugly, but functional. I will gladly accept recommendations for its improvement, especially on the use of SDL Poll Event.

Latest notes

The engine itself is just a system in which I put my task-bots (I will talk about them in detail in the next post). But if you found my methods interesting, and you want to know more, then write to me.

Then I ported the task-bot system (the real heart of this project) to the 3D world and significantly expanded its capabilities, but more on that later (however, the code has already been posted online)!