March 7, 2001

An analysis of the history of technology shows that technological change is exponential, although the intuitive, “common sense” view holds that development is linear. As a result, the 100 calendar years of the 21st century will bring roughly 20,000 years of progress, measured at today's pace. The returns of progress, such as chip speed and price-performance, also grow exponentially, and in some cases even the rate of exponential growth itself grows exponentially. Within a few decades, machine intelligence will surpass human intelligence, leading to the Singularity: technological change so rapid and profound that it tears the very fabric of human history. The consequences will include the merger of biological and non-biological intelligence, immortal humans who exist as software, and extremely high levels of intelligence that expand through the universe at the speed of light.

You will receive $40 trillion simply by reading this essay and understanding what it says. See below for full details. (Yes, authors will do almost anything to capture your attention, but I am completely serious about this statement. Until I return to it later in the essay, however, do read the first sentence of this paragraph carefully.)

Now let's get back to the future: most people misunderstand it. Our ancestors expected the future to be very much like their present, which in turn had been very much like their past. Although exponential trends did exist thousands of years ago, they were at that very early stage in which an exponential trend is so flat that it looks like no trend at all. Their expectations were therefore largely fulfilled. Today, guided by the same common wisdom, everyone expects gradual technological progress and correspondingly gradual social consequences. But the future will be far more surprising than most observers realize: few of them have truly internalized the fact that the rate of change itself is accelerating.

Intuitive linear view versus historical exponential view

Most long-term forecasts of what will be technically feasible dramatically underestimate the power of future technologies, because they are based on what I call the “intuitive linear” view of technological progress rather than the “historical exponential” view. In other words, we will not experience a hundred years of progress in the twenty-first century; we will witness something closer to twenty thousand years of progress (measured at today's pace).

This difference in outlook comes up often, for example in discussions of the ethical issues that Bill Joy raised in his controversial WIRED article, “Why The Future Doesn't Need Us.” Bill and I are frequently paired at various venues as pessimist and optimist, respectively. Although I am expected to criticize Bill's position, and I do see flaws in the “relinquishment” he proposes, I usually end up defending Joy on the key issue of feasibility. Recently a fellow panelist, a Nobel Prize laureate, dismissed Bill's concerns, saying that “we do not expect to see self-replicating nanoengineered entities for another hundred years.” I pointed out that 100 years is indeed a reasonable estimate of the technical progress needed to reach this particular milestone at today's rate of progress. But because the rate of progress doubles every ten years, we will see a century of progress, at today's rate, in just 25 calendar years.
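The two figures quoted so far (a century of progress in 25 calendar years, and roughly twenty thousand years of progress in the twenty-first century) can be reproduced with a simple stepwise model. This is only a sketch under an assumption the essay does not spell out: the rate of progress is held constant within each decade, runs at twice today's rate during the first decade, and doubles in every decade after that. The function names are illustrative.

```python
def progress_years(calendar_years, doubling_period=10.0, first_rate=2.0):
    """Progress accumulated over `calendar_years`, in years at today's pace.

    Assumed model: the rate is constant within each doubling period,
    equals `first_rate` in the first period, and doubles every period.
    """
    total = 0.0
    rate = first_rate
    remaining = calendar_years
    while remaining > 0:
        span = min(doubling_period, remaining)
        total += rate * span
        remaining -= span
        rate *= 2.0
    return total


def calendar_years_for(target_progress, doubling_period=10.0, first_rate=2.0):
    """Calendar years needed to accumulate `target_progress` years of progress."""
    elapsed = 0.0
    rate = first_rate
    remaining = target_progress
    # Consume whole periods while the target is still further away than one period.
    while remaining > rate * doubling_period:
        remaining -= rate * doubling_period
        elapsed += doubling_period
        rate *= 2.0
    return elapsed + remaining / rate


century_of_progress = progress_years(100)       # progress in 100 calendar years
years_for_a_century = calendar_years_for(100)   # calendar years for 100 progress-years

print(f"Progress in the 21st century: {century_of_progress:,.0f} years at today's pace")
print(f"Calendar years for a century of progress: {years_for_a_century:.0f}")
```

The results are sensitive to the modelling choice. If the rate instead starts at today's pace and compounds continuously, the same doubling period gives about 30 calendar years and about 15,000 years, so the figures are best read as orders of magnitude.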

When people think about the future, they intuitively assume that the current rate of progress will continue. However, a careful look at the pace of technology shows that the rate of progress is not constant; it is simply human nature to adapt to a changing pace, so that intuitively the pace seems to stay the same. Even for those of us who have lived long enough to experience how the pace increases over time, unexamined intuition still gives the impression that progress moves at the rate we have experienced recently. From the mathematician's point of view, the main reason is that an exponential curve is approximated by a straight line over any short interval. So even though the rate of progress in the very recent past (for example, last year) was far greater than it was ten years ago (let alone a hundred or a thousand years ago), our memories are dominated by our most recent experience. It is therefore typical for even sophisticated commentators, when considering the future, to extrapolate the current pace of change over the next 10 or 100 years as the basis of their expectations. This is why I call this way of looking at the future the “intuitive linear” view.

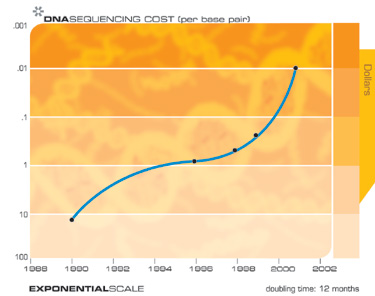

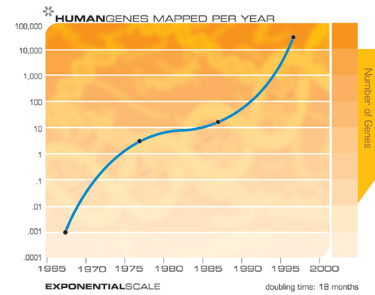

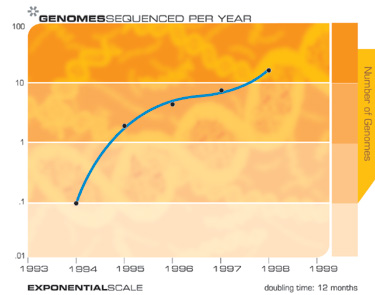

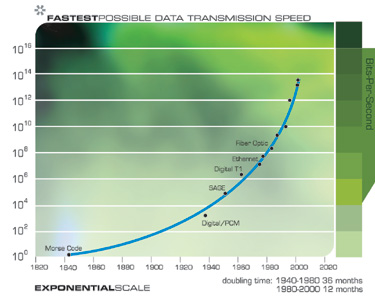

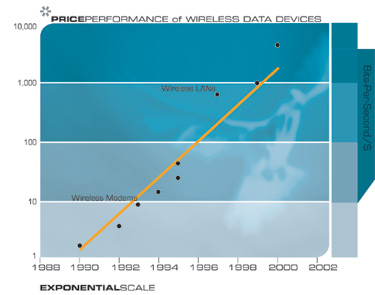

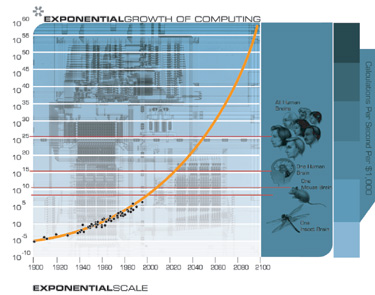

A serious assessment of the history of technology shows that technological change is exponential. In exponential growth, a key measure such as computing power is multiplied by a constant factor in each unit of time (for example, doubling every year) rather than being increased by a fixed amount. Exponential growth is a feature of any evolutionary process, and technology is a prime example.

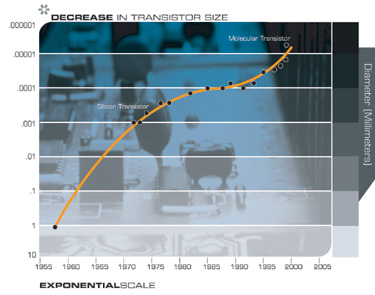

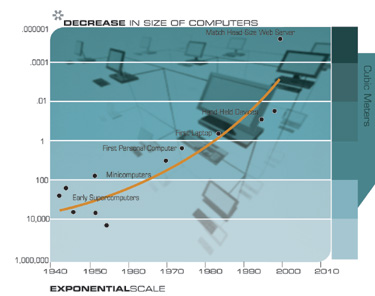

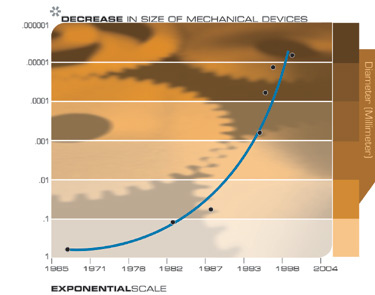

The data can be examined in different ways, on different time scales, and for a wide variety of technologies, from electronic to biological, and in each case we find acceleration of progress and of growth rates. In fact, we find not just simple exponential growth but “double” exponential growth, meaning that the rate of exponential growth is itself growing exponentially. These observations do not rely merely on an assumption that Moore's law (that is, the exponential shrinking of transistor sizes on an integrated circuit) will continue; they are based on a rich model of diverse technological processes. What the model clearly shows is that technology, and in particular the pace of technological change, advances (at least) exponentially rather than linearly, and has done so since the advent of technology, indeed since the advent of evolution on Earth.

I emphasize this point because it is where forecasters considering future trends make their most serious mistake. Most technology forecasts ignore the “historical exponential” view of technological progress altogether. That is why people tend to overestimate what can be achieved in the short term (because we tend to leave out necessary details) but underestimate what can be achieved in the long term (because exponential growth is ignored).

The law of accelerating returns

We can organize these observations into what I call the law of accelerating returns, as follows:

- Evolution applies positive feedback: the more capable methods resulting from one stage of evolutionary progress are used to create the next stage. As a result, the rate of progress of an evolutionary process increases exponentially over time. Over time, the “order” of the information embedded in the evolutionary process (that is, the measure of how well the information fits a purpose, which in evolution is survival) increases.

- Accordingly, the “return” of the evolutionary process (for example, speed, cost-effectiveness, or the overall “power” of the process) grows exponentially over time.

- In another chain of positive feedback, as a particular evolutionary process (e.g., computation) becomes more efficient (e.g., cost-effective), more and more resources are allocated for the further development of this process. This leads to a second level of exponential growth (that is, the rate of exponential growth itself grows exponentially).

- Biological evolution is one such evolutionary process.

- Technological evolution is another such evolutionary process. Indeed, the emergence of the first technology-creating species gave rise to the new evolutionary process of technology. Technological evolution is therefore an outgrowth and continuation of biological evolution.

- A specific paradigm (a method or approach to solving a problem, for example, reducing the area of a transistor on an integrated circuit, as an approach to creating more powerful computers) provides exponential growth until the method reaches its full potential. When this happens, a paradigm shift (that is, a fundamental change in approach) takes place that allows exponential growth to continue.

If we apply these principles at the highest level of evolution on Earth, the first step, the creation of cells, introduced the paradigm of biology. The subsequent emergence of DNA provided a digital method for recording the results of evolutionary experiments. Then the evolution of a species that combined rational thought with an opposable appendage (the thumb) caused a fundamental paradigm shift from biology to technology. The upcoming primary paradigm shift will be from biological thinking to a hybrid that combines biological and non-biological thinking. This hybrid will include biologically inspired processes resulting from the reverse engineering of biological brains.

If we examine the timing of these steps, we see that the process has continuously accelerated. The evolution of life forms required billions of years for the first steps (for example, primitive cells); later progress accelerated. During the Cambrian Explosion, major paradigm shifts took only tens of millions of years. Later, hominids developed over a period of several million years, and Homo sapiens over only a few hundred thousand.

With the advent of a technology-creating species, the exponential pace became too fast for evolution through DNA-guided protein synthesis and moved on to human-created technology. Technology goes beyond mere tool making; it is a process of creating ever more powerful technology using the tools from the previous round of innovation. In this way, human technology differs from the tool making of other species. Each stage of technology is recorded, and each serves as the basis for the next.

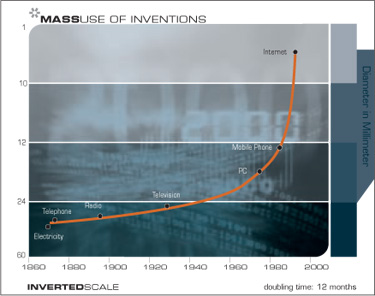

The first technological steps (stone tools, fire, the wheel) took tens of thousands of years. For people living in that era, technological change was barely noticeable even over thousands of years. By 1000 AD, progress was much faster, and a paradigm shift required only a century or two. The nineteenth century saw more technological change than the nine centuries that preceded it. Then the first twenty years of the twentieth century saw more advancement than the entire nineteenth century. Now paradigm shifts occur in only a few years. The World Wide Web did not exist in anything like its present form just a few years ago, and it did not exist at all a decade ago.

The paradigm shift rate (that is, the overall rate of technical progress) is currently doubling (approximately) every decade; in other words, paradigm shift times are halving every ten years (and the rate of acceleration is itself growing exponentially). The technological progress of the twenty-first century will therefore be equivalent to what would require (in the linear view) on the order of 200 centuries. In contrast, the twentieth century saw only about 25 years of progress (again measured at today's rate), because the rate was still climbing toward its current level. The twenty-first century will thus see almost a thousand times greater technological change than its predecessor.

The Singularity is near

To appreciate the nature and significance of the coming “Singularity”, it is important to understand the nature of exponential growth. To this end, I like to tell the story of the inventor of chess and his patron, the emperor of China. When the emperor offered the inventor a reward of his own choosing for his new favorite game, the inventor asked for one grain of rice on the first square of the chessboard, two on the second square, four on the third, and so on. The emperor quickly granted this seemingly simple and modest request. According to one version of the story, the emperor went bankrupt, since the 63 doublings ultimately totaled 18 million trillion grains of rice. At ten grains of rice per square inch, this requires rice fields covering twice the surface area of the Earth, oceans included. In another version of the story, the inventor lost his head.

Note that while the emperor and the inventor worked through the first half of the chessboard, things were fairly uneventful. The inventor was given spoonfuls of rice, then bowls of rice, then barrels. By the end of the first half of the chessboard, the inventor had accumulated one large field's worth (4 billion grains), and the emperor started to take notice. It was as they progressed through the second half of the chessboard that the situation quickly deteriorated. Incidentally, with regard to the doublings of computation: since the first programmable computers were invented at the end of the Second World War, there have been slightly more than thirty-two doublings of performance.
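The numbers in the chessboard story are easy to check. The sketch below uses the figure of ten grains per square inch from the text; the Earth's total surface area (about 5.1 × 10^14 square metres, oceans included) is an outside constant supplied here as an assumption, not a figure from the essay.

```python
SQUARES = 64

# One grain on the first square, doubling on each of the following 63 squares.
last_square_grains = 2 ** (SQUARES - 1)
total_grains = 2 ** SQUARES - 1            # sum of 1 + 2 + 4 + ... + 2**63
first_half_grains = 2 ** (SQUARES // 2) - 1

GRAINS_PER_SQUARE_INCH = 10                # figure used in the text
SQUARE_INCH_IN_M2 = 0.0254 ** 2            # one inch is 0.0254 m
EARTH_SURFACE_M2 = 5.1e14                  # assumed constant, oceans included

field_area_m2 = total_grains / GRAINS_PER_SQUARE_INCH * SQUARE_INCH_IN_M2
earth_multiples = field_area_m2 / EARTH_SURFACE_M2

print(f"Total grains:        {total_grains:.3e}")
print(f"First-half grains:   {first_half_grains:.3e}")
print(f"Area in Earth units: {earth_multiples:.2f}")
```

The total comes to about 1.8 × 10^19 grains (18 million trillion), the first half of the board to about 4.3 billion, and the rice would cover a little over twice the Earth's surface, in line with the story.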

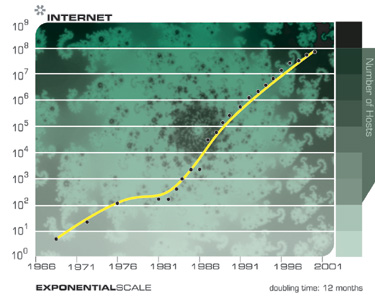

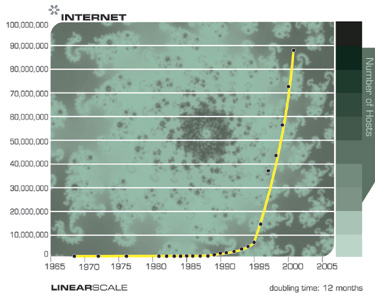

This is the nature of exponential growth. Although technology grows exponentially, we humans live in a linear world. Technological trends therefore go unnoticed while small levels of technological power are being doubled. Then, seemingly out of nowhere, a technology explodes into view. For example, when the Internet went from 20,000 to 80,000 nodes over a two-year period during the 1980s, this progress remained hidden from the general public. A decade later, when it went from 20 million to 80 million nodes in the same amount of time, the impact was quite conspicuous.

As exponential growth continues to accelerate into the first half of the twenty-first century, it will appear to explode into infinity, at least from the limited, linear perspective of contemporary humans. Progress will ultimately become so fast that it will rupture our ability to follow it. It will literally get out of our control. The illusion that we have our hand “on the plug” will be dispelled.

Can the pace of technological progress continue to speed up indefinitely? Is there a point at which humans are unable to think fast enough to keep up with it? For unenhanced humans, clearly so. But what would a thousand scientists accomplish, each a thousand times more intelligent than today's scientists, and each operating a thousand times faster than contemporary humans (because the information processing in their primarily non-biological brains is faster)? One year would be like a millennium. What would they come up with?

Well, for one thing, they would come up with technology to become even more intelligent (because their intelligence is no longer of fixed capacity). They would change their own thought processes to think even faster. When the scientists evolve to be a million times more intelligent and operate a million times faster, an hour will yield a century of progress (in today's terms).

This will be the Singularity. A singularity is a technological change so quick and so deep that it tears the very fabric of human history. Some will say that it is impossible to comprehend the Singularity, at least with our current level of understanding, and therefore it is impossible to look beyond its “event horizon” and understand what will happen after.

My view is that, despite the profound limitations on thinking imposed by our biological brain, with its mere hundred trillion interneuronal connections, we nevertheless have sufficient powers of abstraction to draw meaningful conclusions about the nature of life after the Singularity. Most importantly, in my view, the intelligence that emerges will continue to represent human civilization, which is already a human-machine civilization. This will be the next step in evolution, the next high-level paradigm shift.

To put the concept of the Singularity in perspective, let's look at the history of the word itself. Singularity is a familiar word meaning a unique event with profound implications. In mathematics, the term implies infinity, the explosion of value that occurs when a constant is divided by a number that gets closer and closer to zero. In physics, similarly, a singularity denotes an event or location of infinite power. At the center of a black hole, matter is so dense that its gravity is infinite. As nearby matter and energy are drawn into the black hole, an event horizon separates the region from the rest of the Universe; it is, in effect, a rupture in the fabric of space and time. The Universe itself is said to have begun with just such a singularity.

In the 1950s, John von Neumann was quoted as saying that “the ever accelerating progress of technology ... gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.” In the 1960s, I. J. Good wrote of an “intelligence explosion” resulting from intelligent machines designing their next generation without human intervention. In 1986, Vernor Vinge, a mathematician and computer scientist at San Diego State University, wrote about a rapidly approaching technological “Singularity” in his science fiction novel Marooned in Realtime. Then, in 1993, Vinge presented a paper at a NASA-organized symposium in which he described the Singularity as an impending event resulting primarily from the advent of “entities with greater than human intelligence”, which Vinge saw as the harbinger of a runaway phenomenon.

From my perspective, the Singularity has many faces. It represents the nearly vertical phase of exponential growth, where the rate of growth is so extreme that technology appears to be developing at infinite speed. Of course, from a mathematical perspective there is no discontinuity, no rupture, and the growth rates remain finite, albeit extraordinarily large. But from our currently limited perspective, this imminent event appears to be an acute and abrupt break in the continuity of progress. I emphasize the word “currently”, however, because one of the salient implications of the Singularity will be a change in the very nature of our ability to understand. In other words, we will become vastly smarter as we merge with our technology.

When I wrote my first book, The Age of Intelligent Machines, in the 1980s, I ended it with the arrival of machine intelligence greater than human intelligence, but I found it difficult to look beyond this event horizon. Having thought about its implications for the past 20 years, I now feel that we are indeed capable of understanding many facets of this threshold, one that will transform all spheres of human life.

Consider a few examples of such consequences.

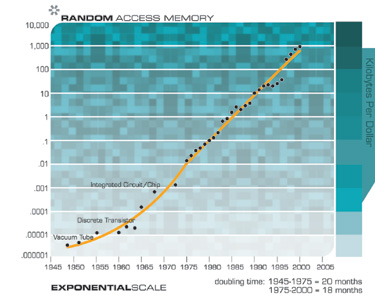

, , RAM ( ).

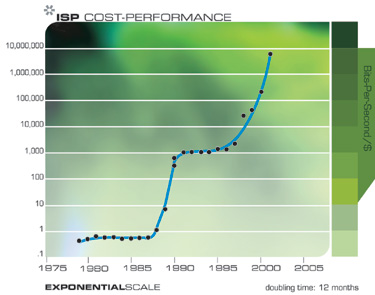

, , .

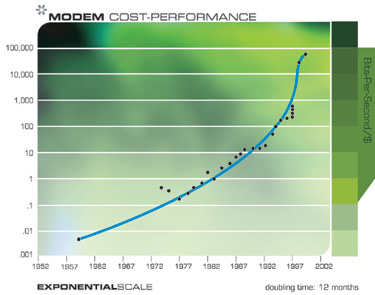

, .

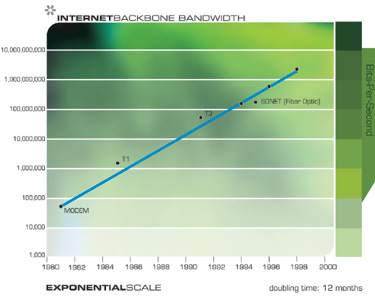

, , . , , , , , .

«S-»

, «S-» : , , , , , .

, . , , . , , , . , , 1990- , , , , , . , .

, , .

, 10-11 .

Another technology that will have far-reaching consequences for the twenty-first century is the pervasive trend toward making things smaller, that is, miniaturization. The key feature sizes of a broad range of technologies, both electronic and mechanical, are shrinking, and also at a double exponential rate. At present, technology is shrinking by a factor of approximately 5.6 per linear dimension per decade.

Back to the exponential growth of computing

If we view the exponential growth of computing in its proper perspective, as one example of the pervasiveness of exponential growth in information-based technology, which is in turn one of many examples of the Law of Accelerating Returns, then we can confidently predict its continuation.

In the accompanying box, I give a simplified mathematical model of the Law of Accelerating Returns as it pertains to the (double) exponential growth of computing. The formulas below produce the above graph of the continued growth of computation. This graph matches the available data for the twentieth century through all five paradigms and provides projections for the twenty-first century. Note that the growth rate increases slowly, but nonetheless exponentially.

The law of accelerating returns applied to the growth of computing

The following is a brief overview of the Law of Accelerating Returns as it applies to the double exponential growth of computation. The model considers the impact of the growing power of a technology on the development of its own next generation. For example, with more powerful computers and related technology, we have the tools and the knowledge to design yet more powerful computers, and to do so more quickly.

Note that the data for the year 2000 and beyond assume neural network computations, since this type of calculation is expected to dominate eventually, particularly in emulating the functions of the human brain. This type of calculation is less expensive than conventional calculation (for example, on a Pentium III / IV) by a factor of at least 100, particularly if it is implemented with digitally controlled analog electronics, which would correspond well to the brain's digitally controlled analog electrochemical processes. A factor of 100 corresponds to about six years today, and to less than six years later in the twenty-first century.

My estimate of brain capacity is 100 billion neurons, times an average of 1,000 connections per neuron (with the calculations taking place primarily in the connections), times 200 calculations per second. These estimates are conservatively high; both higher and lower estimates can be found. However, even estimates that are higher (or lower) by orders of magnitude shift the forecast by only a relatively small number of years.

Some of the most significant dates for this analysis are:

- We achieve the capability of one human brain (2 × 10^16 cps, calculations per second) for $1000 around 2023.

- We achieve the capability of one human brain (2 × 10^16 cps) for one cent around 2037.

- We achieve the capability of the entire human race (2 × 10^26 cps) for $1000 around 2049.

- We achieve the capability of the entire human race (2 × 10^26 cps) for one cent around 2059.

The model has the following variables:

- V: Velocity (that is, power) of computing (measured in calculations per second per unit cost)

- W: Worldwide knowledge in the design and creation of computing devices

- t: time

The model assumes that:

- $V = C_1 \cdot W$

In other words, computer power is a linear function of the knowledge of how to build computers. This is actually a conservative assumption. In general, innovations improve V (computer power) by a multiple, not by a fixed amount, and independent innovations multiply each other's effect. For example, a circuit advance such as CMOS, a more efficient integrated circuit layout technique, and a processor innovation such as pipelining each increase V by an independent multiple.

- $W = C_2 \cdot \int_0^t V$

In other words, W (knowledge) is cumulative, and the instantaneous increment to knowledge is proportional to V.

This gives us:

- $W = C_1 \cdot C_2 \cdot \int_0^t W$

- $W = C_1 \cdot C_2 \cdot C_3^{C_4 \cdot t}$

- $V = C_1^2 \cdot C_2 \cdot C_3^{C_4 \cdot t}$

Simplifying the constants, we get:

- $V = C_a \cdot C_b^{C_c \cdot t}$

So this is a formula for “accelerating” (that is, exponentially growing) returns: in effect, an ordinary Moore's law.
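The step from the integral relation to an exponential can be made explicit. The following is a short worked derivation, not part of the original box, and it assumes a nonzero starting stock of knowledge $W(0)$, which the model leaves implicit.

```latex
\begin{align*}
W(t) &= W(0) + C_2 \int_0^t V(s)\,ds, \qquad V = C_1 \cdot W \\
\frac{dW}{dt} &= C_1 C_2 \, W \\
W(t) &= W(0)\, e^{C_1 C_2 t} \\
V(t) &= C_1 W(0)\, e^{C_1 C_2 t} = C_a \cdot C_b^{\,C_c \cdot t},
\qquad C_a = C_1 W(0), \quad C_b^{\,C_c} = e^{C_1 C_2}
\end{align*}
```

Because the growth of knowledge is proportional to the knowledge already accumulated, both W and V grow by a constant factor per unit of time, which is the simple exponential form given above.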

As I mentioned above, the data show an exponential increase in the rate of exponential growth. (We doubled computing power every three years at the beginning of the twentieth century, every two years in the middle of the century, and almost every year during the 1990s.)

Let's now consider another exponential phenomenon, the growth of computing resources. Not only does each computing device (at constant cost) become more powerful as a function of W, but the resources deployed for computation also grow exponentially.

Thus, we have:

- N: Expenditures (resources) for computation

- $V = C_1 \cdot W$ (as before)

- $N = C_4^{C_5 \cdot t}$ (expenditures for computation grow at their own exponential rate)

- $W = C_2 \cdot \int_0^t (N \cdot V)$

As before, world knowledge accumulates, and the instantaneous increment is proportional to the amount of computation, which equals the resources deployed for computation (N) multiplied by the power of each device (at constant cost).

This gives us:

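- $W = C_1 \cdot C_2 \cdot \int_0^t \left(C_4^{C_5 \cdot t} \cdot W\right)$

- $W = C_1 \cdot C_2 \cdot \left(C_3^{C_6 \cdot t}\right)^{C_7 \cdot t}$

- $V = C_1^2 \cdot C_2 \cdot \left(C_3^{C_6 \cdot t}\right)^{C_7 \cdot t}$

Simplifying the constants, we get:

- $V = C_a \cdot \left(C_b^{C_c \cdot t}\right)^{C_d \cdot t}$

This is a double exponential: an exponential curve in which the rate of exponential growth itself grows exponentially.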
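The accelerating growth rate can be seen numerically without committing to a closed form. Differentiating the knowledge relation W = C2 · ∫(N · V) with V = C1 · W gives d(ln W)/dt = C1 · C2 · N(t), so the exponential growth rate of V is proportional to the exponentially growing N. The sketch below integrates that relation; all constants are arbitrary illustrative values and W(0) = 1 is an assumption, none of them taken from the essay.

```python
import math

# Hypothetical constants chosen only for illustration.
C1, C2 = 1.0, 0.05     # V = C1 * W; knowledge gained per unit of computation
C4, C5 = 2.0, 0.02     # N = C4 ** (C5 * t): resources double every 50 time units


def resources(t):
    """Resources deployed for computation at time t."""
    return C4 ** (C5 * t)


def log_growth_rate(t):
    """d(ln V)/dt, obtained by differentiating W = C2 * integral(N * V)."""
    return C1 * C2 * resources(t)


def doubling_time(t):
    """Time V would need to double if the growth rate stayed at its value at t."""
    return math.log(2) / log_growth_rate(t)


def simulate_log_v(t_end, steps=100_000, log_w0=0.0):
    """Integrate d(ln W)/dt with the midpoint rule and return ln V(t_end)."""
    dt = t_end / steps
    log_w = log_w0
    for i in range(steps):
        log_w += log_growth_rate((i + 0.5) * dt) * dt
    return math.log(C1) + log_w


for t in (0, 50, 100):
    print(f"t={t:3d}  doubling time of V = {doubling_time(t):6.2f}")
print(f"ln V(100) = {simulate_log_v(100.0):.3f}")
```

With these values the doubling time of V halves every 50 time units, which is the behaviour the text describes: the exponent of the growth curve is itself growing exponentially.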

Now let's look at the real data. Take the parameters of real computing devices and computers in the twentieth century:

- CPS/$1K: calculations per second for $1000

Data on computing devices of the twentieth century correspond to:

- $\text{CPS}/\$1\text{K} = 10^{\,6.00 \cdot \left(\frac{20.40}{6.00}\right)^{\frac{\text{Year} - 1900}{100}} - 11.00}$

We can determine the growth rate over a period of time:

- $\text{Growth rate} = 10^{\frac{\log(\text{CPS}/\$1\text{K for current year}) - \log(\text{CPS}/\$1\text{K for previous year})}{\text{current year} - \text{previous year}}}$

- Human brain = 100 billion (10^11) neurons × 1000 (10^3) connections per neuron × 200 (2 × 10^2) calculations per second per connection = 2 × 10^16 calculations per second

- Human race = 10 billion (10^10) human brains = 2 × 10^26 calculations per second

These formulas give the above graphs.
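The fitted curve and the brain estimates can be combined to check the milestone dates listed earlier. The sketch below implements the curve as CPS per $1000 = 10^(6.00 · (20.40/6.00)^((Year − 1900)/100) − 11.00), inverts it for a target capacity, and applies the growth-rate formula to consecutive years. The function names are illustrative, and pricing one cent at exactly 1/100,000 of the $1000 figure is an assumption of this sketch.

```python
import math

BASE = 20.40 / 6.00


def cps_per_1000_dollars(year):
    """Fitted twentieth-century curve: calculations per second per $1000."""
    exponent = 6.00 * BASE ** ((year - 1900) / 100) - 11.00
    return 10.0 ** exponent


def year_reaching(target_cps_per_1000_dollars):
    """Invert the curve: the year in which $1000 buys the target capacity."""
    ratio = (math.log10(target_cps_per_1000_dollars) + 11.00) / 6.00
    return 1900 + 100 * math.log(ratio) / math.log(BASE)


def annual_growth_rate(year):
    """Growth factor from the previous year to `year` (the growth-rate formula)."""
    current = math.log10(cps_per_1000_dollars(year))
    previous = math.log10(cps_per_1000_dollars(year - 1))
    return 10.0 ** ((current - previous) / 1.0)


BRAIN_CPS = 1e11 * 1e3 * 200          # neurons x connections x calculations/second
HUMANITY_CPS = BRAIN_CPS * 1e10       # 10 billion human brains
ONE_CENT_FACTOR = 1e5                 # $1000 is 100,000 cents (assumed linear pricing)

milestones = {
    "one brain for $1000": year_reaching(BRAIN_CPS),
    "one brain for one cent": year_reaching(BRAIN_CPS * ONE_CENT_FACTOR),
    "human race for $1000": year_reaching(HUMANITY_CPS),
    "human race for one cent": year_reaching(HUMANITY_CPS * ONE_CENT_FACTOR),
}

# Sensitivity check: how far the first date moves if the brain estimate is 10x higher.
shift_if_10x_higher = year_reaching(10 * BRAIN_CPS) - year_reaching(BRAIN_CPS)

for label, year in milestones.items():
    print(f"{label}: {int(year)}")
print(f"Shift for a 10x higher brain estimate: {shift_if_10x_higher:.1f} years")
print(f"Annual growth factor in 2000: {annual_growth_rate(2000):.2f}")
```

Truncated to whole years, the four dates come out as 2023, 2037, 2049 and 2059, matching the list above, and raising the brain estimate by a full order of magnitude moves the first date by only about three years.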

IBM's Blue Gene supercomputer, scheduled for completion by 2005, is already projected to perform 1 million billion calculations per second (that is, one billion megaflops, or one petaflops). This is one twentieth of the capacity of the human brain, which I estimate at a conservatively high 20 million billion calculations per second (100 billion neurons times 1,000 connections per neuron times 200 calculations per second per connection). In line with my earlier predictions, supercomputers will achieve the capacity of one human brain by 2010, and personal computers will do so by around 2020. By 2030, it will take a village of human brains (about a thousand) to match the computing power available for $1000. By 2050, $1000 of computing will equal the processing power of all human brains on Earth. Of course, this only includes those brains still using carbon-based neurons.

Although human neurons are marvelous creations, we would not (and do not) design computing circuits the same way. Our electronic circuits are already tens of millions of times faster than a neuron's electrochemical processes. Most of the complexity of a human neuron is devoted to maintaining its life support functions, not its information processing capabilities. Ultimately, we will need to port our mental processes to a more suitable computational substrate. Then our minds will not have to stay as small as they are today, limited to a mere hundred trillion neural connections, each operating at a ponderous 200 digitally controlled analog calculations per second.

The software of intelligence

So far, I have been talking about the hardware of computing. The software is even more important. One of the principal assumptions underlying the expectation of the Singularity is the ability of non-biological media to emulate the richness, subtlety, and depth of human thinking. Achieving the computational capacity of one human brain, or even of villages and nations of human brains, will not automatically produce human levels of capability. By human levels I mean all the diverse and subtle ways in which humans are intelligent, including musical and artistic aptitude, creativity, the ability to move physically through the world, and the ability to understand and respond appropriately to emotion. The requisite hardware capacity is a necessary but not sufficient condition. The organization and content of these resources, the software of intelligence, is also critical.

Before addressing this issue, it is important to note that once a computer achieves a human level of intelligence, it will necessarily soar past it. A key advantage of non-biological intelligence is that machines can easily share their knowledge. If I learn French, or read War and Peace, I cannot readily download that learning to you. You have to acquire it the same painstaking way I did. My knowledge, embedded in a vast pattern of neurotransmitter concentrations and interneuronal connections, cannot be quickly accessed or transmitted. But we will not leave out quick downloading ports in our non-biological equivalents of human neuron clusters. When one computer learns a skill or gains an insight, it can immediately share that wisdom with billions of other machines.

As a contemporary example, we spent years teaching one research computer to recognize continuous human speech. We exposed it to thousands of hours of recorded speech, corrected its errors, and patiently improved its performance. Finally, it became quite adept at recognizing speech (I dictated most of my recent book to it). Now, if you want your own personal computer to recognize speech, it does not have to go through the same process; you can simply download the fully trained patterns in seconds. Ultimately, billions of non-biological entities can be the master of all human and machine acquired knowledge.

In addition, computers are potentially millions of times faster than human neural circuits. A computer can also remember billions or even trillions of facts perfectly, while we are hard pressed to remember a handful of phone numbers. The combination of human-level intelligence in a machine with a computer's inherent superiority in speed, accuracy, and knowledge sharing will be formidable.

There are a number of compelling scenarios for achieving higher levels of intelligence in our computers, ultimately reaching and then exceeding human levels. We will be able to evolve and train a system that combines massively parallel neural networks with other paradigms to understand language and model knowledge, including the ability to read and model the knowledge contained in written documents. Unlike many contemporary computer “neural networks” that use mathematically simplified models of human neurons, some contemporary neural networks already use highly detailed models of human neurons, including detailed nonlinear analog activation functions and other relevant details. Although the ability of today's computers to extract and learn knowledge from natural language documents is limited, their capabilities in this domain are improving rapidly. Computers will be able to read on their own, understanding and modeling what they have read, by the second decade of the twenty-first century. We can then have our computers read all of the world's literature: books, magazines, scientific journals, and other available material. Ultimately, the machines will gather knowledge on their own by venturing out on the Internet, or even into the physical world, drawing on the full spectrum of media and information services, and sharing knowledge with each other (which machines can do far more easily than their human creators).

Reconstructing the brain

The most compelling scenario for developing the software of intelligence is to “peer into the blueprints” of the best thinking machine we can get our hands on. There is no reason why we cannot understand how the human brain works and copy the basic principles of its design. Although its creator needed several billion years to develop it, the brain is readily available to us and (so far) is not protected by copyright. Although it is enclosed in a skull, it is not hidden from our view.

The most direct and brute-force way to achieve this is destructive scanning: take a frozen brain, preferably one frozen just before, rather than just after, it was going to die anyway, and examine it layer by layer, one very thin slice at a time. We can readily see every neuron, every connection, and every neurotransmitter concentration at each synapse in each thin layer.

Scanning of the human brain has already begun. A condemned killer allowed his brain and body to be scanned, and you can access all 10 billion bytes of him on the Internet at http://www.nlm.nih.gov/research/visible/visible_human.html .

The same site also hosts his 25-billion-byte female companion, in case he gets lonely. These scans are not detailed enough for our purposes, but that may be just as well, because we do not want to base our templates of machine intelligence on the brain of a convicted killer.

Although scanning a frozen brain is feasible today, it does not yet have the speed or bandwidth we need. Once again, however, the law of accelerated returns will provide the necessary scanning speed, just as it did for the scan of the human genome. Andreas Nowatzyk of Carnegie Mellon University plans to scan the nervous system of the brain and body of a mouse at a resolution finer than 200 nm, which is already very close to the resolution needed to reconstruct the brain.
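To get a feel for why speed and bandwidth are the bottleneck, here is a rough back-of-the-envelope sketch. The mouse brain volume and the one-byte-per-voxel figure are assumptions chosen for illustration, not numbers from this essay; only the 200 nm resolution and the 10-billion-byte scan size come from the text above.

```python
# Assumptions (hypothetical, for illustration only):
MOUSE_BRAIN_VOLUME_MM3 = 500.0  # rough order of magnitude for a mouse brain
BYTES_PER_VOXEL = 1             # one byte of image data per sampled cube

# Figures taken from the essay:
VOXEL_EDGE_NM = 200.0           # scan resolution mentioned for the mouse project
VISIBLE_HUMAN_BYTES = 10e9      # size of the whole-body scan cited above


def voxel_count(volume_mm3, voxel_edge_nm):
    """Number of cubes with the given edge length needed to tile the volume."""
    edge_mm = voxel_edge_nm * 1e-6  # 1 nm = 1e-6 mm
    return volume_mm3 / edge_mm ** 3


voxels = voxel_count(MOUSE_BRAIN_VOLUME_MM3, VOXEL_EDGE_NM)
scan_bytes = voxels * BYTES_PER_VOXEL
ratio = scan_bytes / VISIBLE_HUMAN_BYTES

print(f"voxels at 200 nm: {voxels:.2e}")
print(f"raw data: {scan_bytes / 1e12:.1f} trillion bytes")
print(f"times larger than the 10-billion-byte scan: {ratio:.0f}")
```

Under these assumptions, even a mouse brain at 200 nm yields tens of trillions of bytes, thousands of times more than the existing whole-body human scan. Because the planned resolution is finer than 200 nm, the true figure would be higher still.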

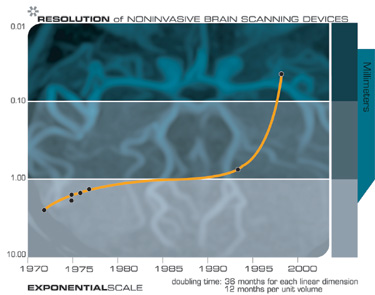

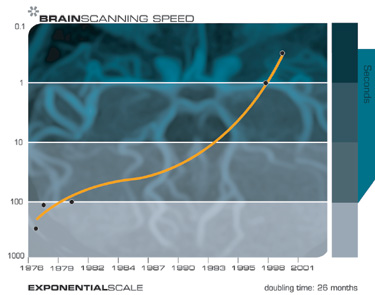

Today we also have non-invasive scanning methods, including high-resolution magnetic resonance imaging (MRI), optical imaging, near-infrared scanning, and other technologies that in some cases can resolve individual somas (neuron cell bodies). Brain scanning technologies are also increasing their resolution with each new generation, just what you would expect from the law of accelerated returns. Future generations of the technology will let us resolve the connections between neurons, look inside the synapses, and record neurotransmitter concentrations.

We can look inside the human brain today with non-invasive scanners whose resolution increases with each new generation of the technology. Non-invasive scanning faces a number of technical challenges, including achieving sufficient resolution, bandwidth, freedom from vibration, and safety. For several reasons, it is easier to scan the brain of someone recently deceased than that of a living person. For one thing, it is easier to get a dead person to sit still. However, non-invasive scanning of the living brain will eventually become possible as MRI, optical, and other scanning technologies continue to improve in resolution and speed.

Scanning from the inside

Although non-invasive methods for scanning the brain from outside the skull are rapidly improving, the most practical approach to capturing every significant neural detail of the brain is to scan it from the inside.

Lloyd Watts (www.lloydwatts.com) has built a model of a significant part of the human auditory processing system. The regions named in the model are:

Cochlea

MC: Multipolar Cells

GBC: Globular Bushy Cells

Olivary Complex (includes LSO and MSO)

SBC: Spherical Bushy Cells

OC: Octopus Cells

DCN: Dorsal Cochlear Nucleus

VNTB: Ventral Nucleus of the Trapezoid Body

VNLL: Ventral Nucleus of the Lateral Lemniscus; PON: Peri-Olivary Nuclei

MSO: Medial Superior Olive

LSO: Lateral Superior Olive

ICC: Central Nucleus of the Inferior Colliculus

ICX: Exterior Nucleus of the Inferior Colliculus

SC: Superior Colliculus

MGB: Medial Geniculate Body

LS: Limbic System

AC: Auditory Cortex

Consider how much of our thought and attention is directed toward our body and its survival, safety, nutrition, and appearance, not to mention affection, sexuality, and reproduction. Many, if not most, of the goals we pursue with our brains concern our bodies: protecting them, fueling them, making them attractive, keeping them comfortable, and satisfying their countless needs and desires. Some philosophers argue that human-level intelligence is impossible without a body. If we are going to transfer a human mind to a new computational medium, we had better provide it with a body. A disembodied mind will quickly become depressed.

We will provide many different bodies for our machines, and they will create many for themselves: bodies built using nanotechnology (i.e., highly complex physical systems built atom by atom), virtual bodies (which exist only in virtual reality), bodies made up of swarms of nanobots, and so on.

A common scenario will be the enhancement of the human biological brain through an intimate connection with non-biological intelligence. In this case, the body remains the good old human body we are familiar with, although it too will improve significantly thanks to biotechnology (enhancement and replacement of genes) and, later, nanotechnology. A detailed study of twenty-first-century bodies is beyond the scope of this essay, but reconstructing and improving our bodies will be (and has been) an easier task than reconstructing our minds.

So who are these people?

Returning to the question of subjectivity, consider: is the reconstituted mind the same consciousness as the person we just scanned? Are these “people” conscious at all? Is this a mind or just a brain?

Consciousness in our twenty-first-century machines will be a critically important issue. But it is not easy to resolve, or even to understand. People tend to hold strong views on the subject, and often they simply cannot understand how anyone else could see the issue from a different perspective. Marvin Minsky remarked that “there is something strange in the description of consciousness. No matter what people want to say, they simply cannot say it clearly.”

We are not worried, at least for now, about causing pain and suffering to our computer programs. But at what point do we consider an entity or a process to be conscious, able to feel pain and discomfort, to have its own intentions and its own free will? How do we determine whether an entity is conscious, whether it has subjective experience? How do we distinguish a process that is conscious from one that merely acts as if it were?

We cannot simply ask it. If it says, “Hey, I'm conscious,” does that settle the issue? No; we already have computer games today that effectively do that, and they are not very convincing.

What if an entity says, very convincingly and realistically, “I'm lonely, please keep me company”? Does that settle the issue?

If we look inside its circuits and see essentially the same kinds of feedback loops and other mechanisms that we see in the human brain (albeit implemented using non-biological equivalents), does that settle the issue?

And anyway, who are these people in the machine? The answer will depend on whom you ask. If you ask the people in the machine, they will insist that they are the original persons. For example, if we scan, say, me, and record the exact state, level, and position of every neurotransmitter, synapse, neural connection, and every other relevant detail, and then reinstate this massive body of information (which I estimate at thousands of trillions of bytes) in a neural computer of sufficient capacity, the person who then emerges in the machine will think that “he” is (and had been) me, or at least he will act that way. He will say: “I grew up in Queens, New York, went to college at the Massachusetts Institute of Technology, stayed in the Boston area, founded and sold several artificial intelligence companies, walked into a scanner there, and woke up in the machine here. Hey, this technology really works.”

Wait a minute!

Is it really me? For one thing, the old biological Ray (that is, me) still exists. I will still be here in my carbon-cell-based brain. Alas, I will have to sit back and watch the new Ray succeed in endeavors that I could only dream of.
