The dumb reason your fancy machine vision application doesn't work: EXIF orientation

I have written a lot about computer vision and machine learning projects, such as object recognition systems and face recognition projects. I also maintain an open source Python face recognition library that somehow made it into the top 10 most popular machine learning libraries on GitHub. As a result, newcomers to Python and machine vision ask me a lot of questions.

In my experience, one specific technical problem trips people up more than any other. No, it is not a difficult theoretical question or a problem with expensive GPUs. It is that almost everyone loads images into memory rotated, without even knowing it. And computers are not very good at detecting objects or recognizing faces in rotated images.

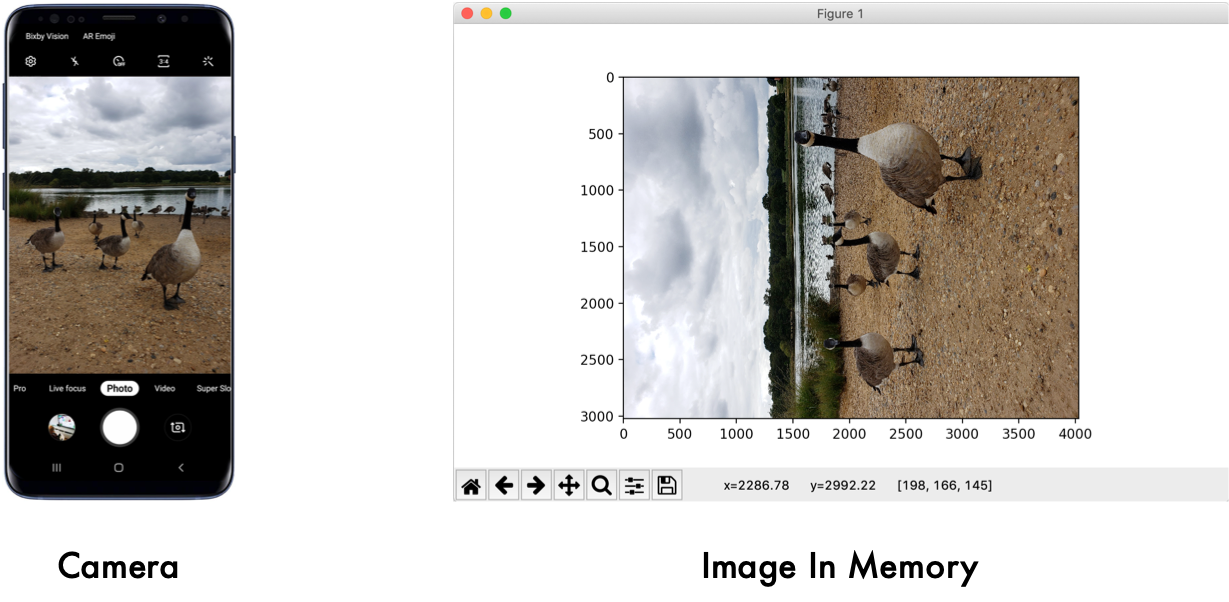

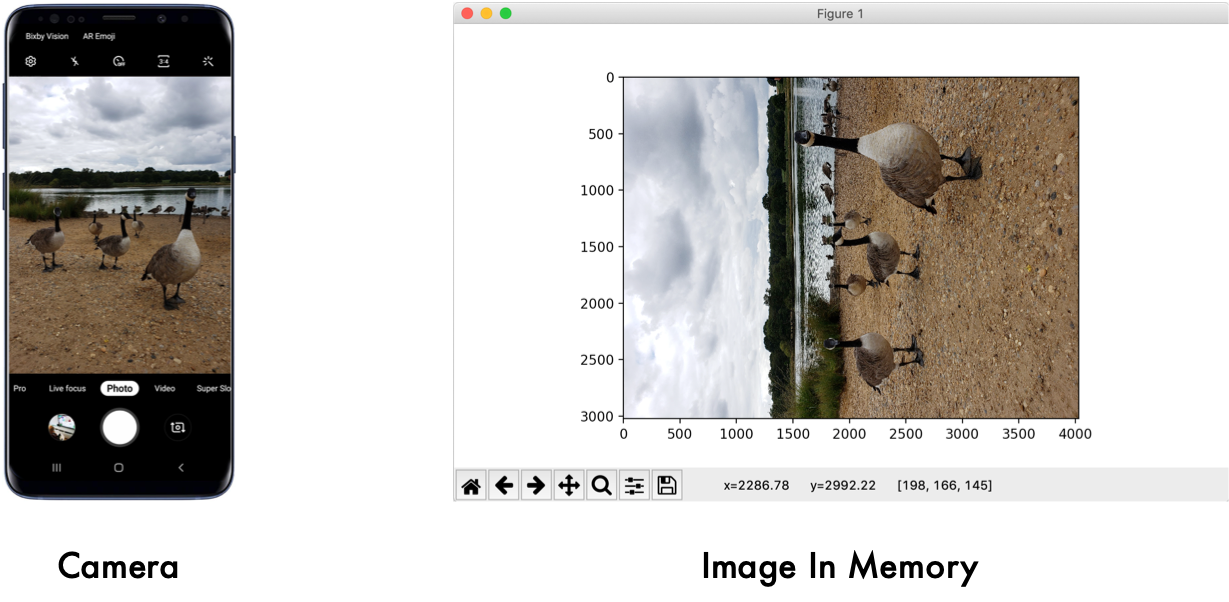

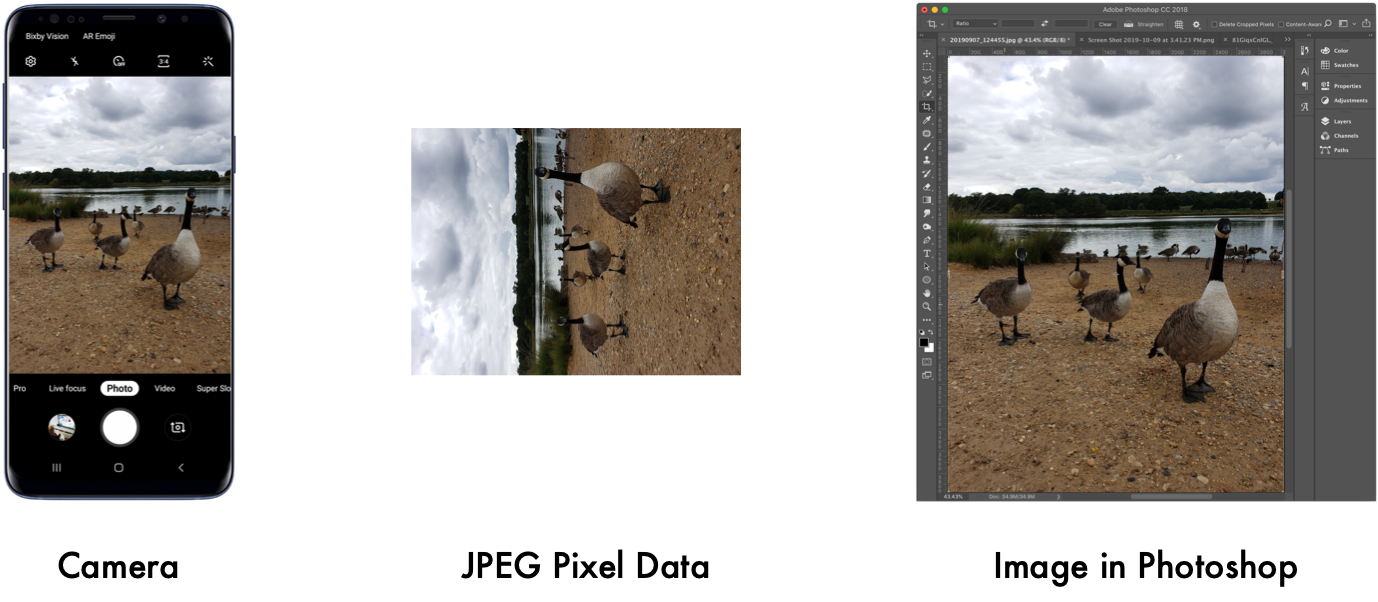

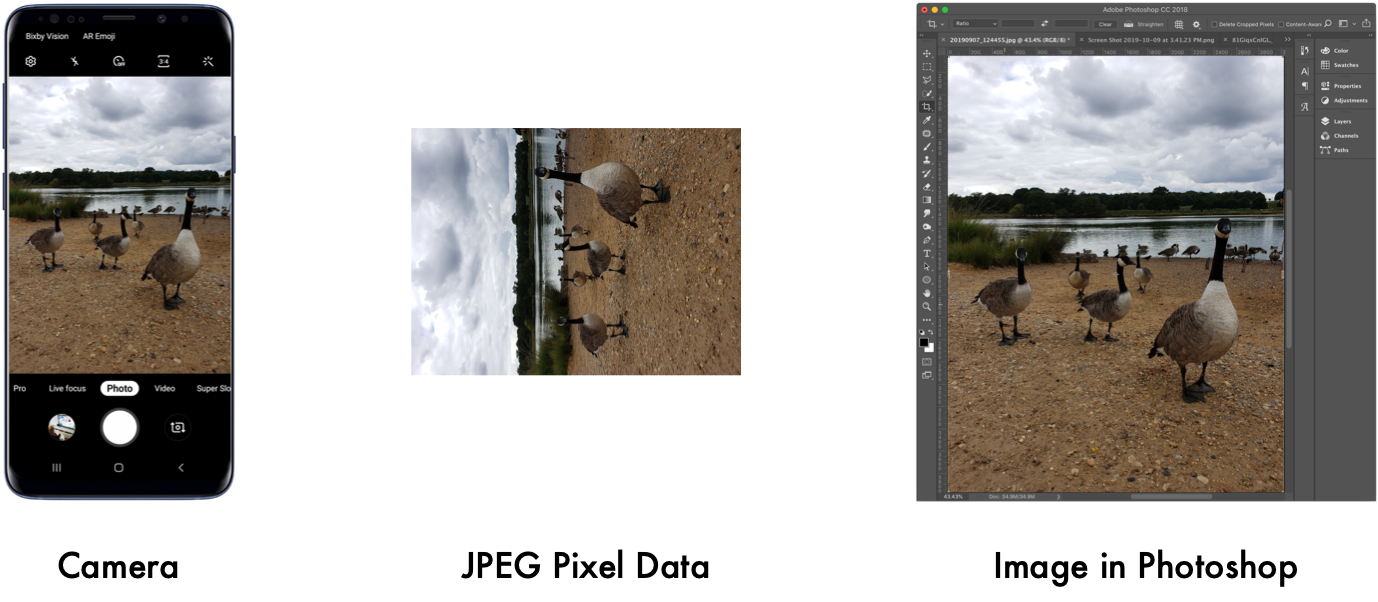

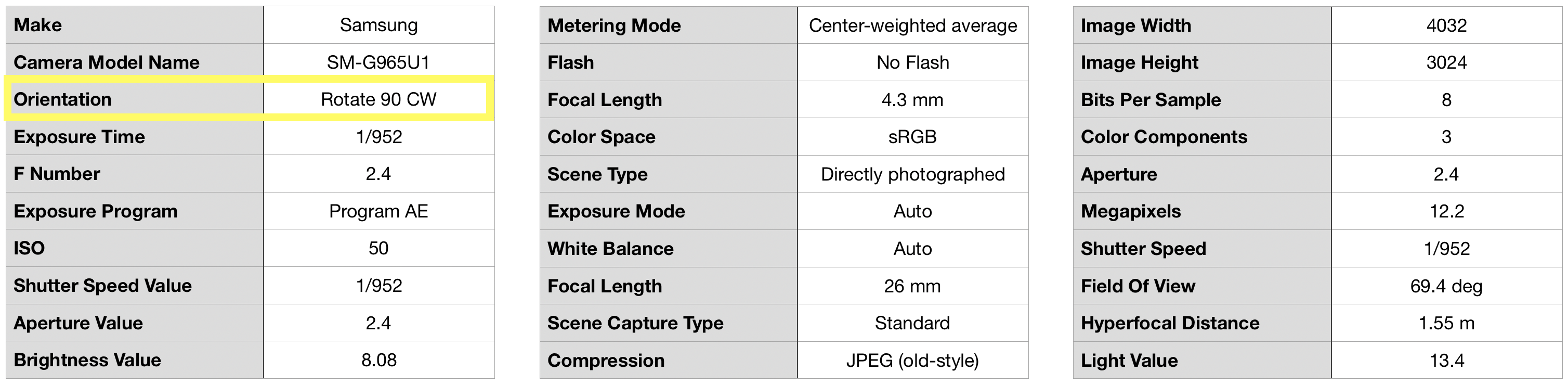

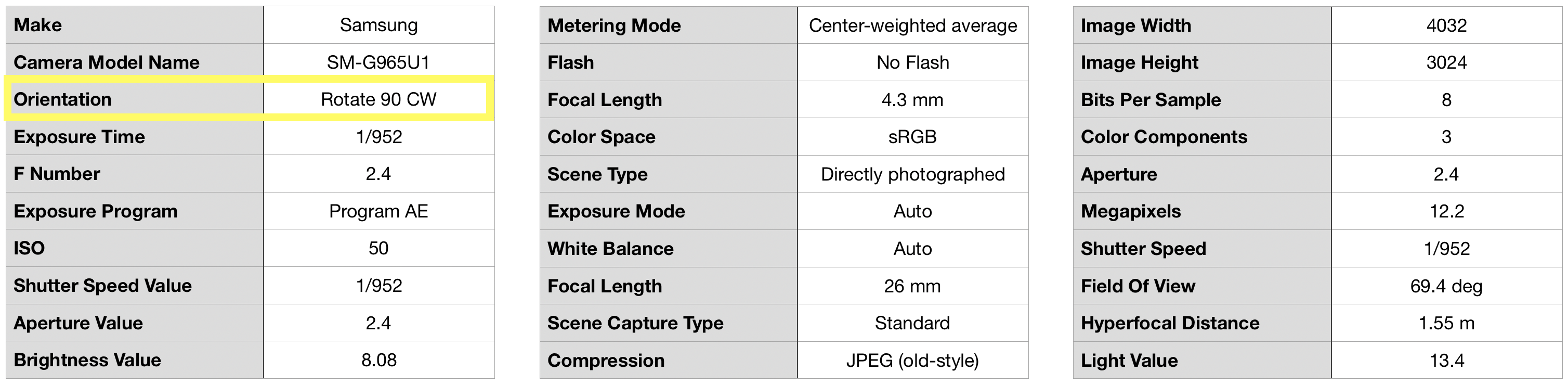

How digital cameras automatically rotate images

When you take a picture, the camera records which way the phone is being held, so that other programs can display the picture in the correct orientation.

But the camera does not actually rotate the pixel data inside the file. Because image sensors in digital cameras are read out line by line as a continuous stream of pixel information, it is easier for the camera to always store pixel data in the same order, no matter how the phone is held.

It is the job of the viewing program to rotate the picture correctly before displaying it on the screen. Along with the image data itself, the camera also saves metadata: lens settings, location data and, of course, the camera's rotation angle. The viewer should use this information to display the image correctly.

The most common image metadata format is called EXIF (short for Exchangeable Image File Format). EXIF metadata is embedded inside the JPEG files that cameras produce. You cannot see it on the screen, but any program that knows where to look can read it.
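To make "knows where to look" a bit more concrete, here is a rough sketch that finds the orientation value by walking the raw bytes of a JPEG with nothing but the standard library. The function names are made up for this illustration, and the sketch assumes a well-formed file whose Orientation tag sits in the first directory of the EXIF block. In real code you would let an image library do this for you.

```python
import struct


def read_exif_orientation(jpeg_bytes):
    """Return the EXIF Orientation value (1-8), or None if it is absent."""
    if jpeg_bytes[:2] != b"\xff\xd8":  # every JPEG starts with this marker
        return None
    pos = 2
    while pos + 4 <= len(jpeg_bytes):
        marker, length = struct.unpack(">HH", jpeg_bytes[pos:pos + 4])
        if marker >> 8 != 0xFF or marker == 0xFFDA:
            # Not a valid segment, or the pixel data has started
            return None
        # The length field counts itself (2 bytes) but not the marker
        segment = jpeg_bytes[pos + 4:pos + 2 + length]
        if marker == 0xFFE1 and segment[:6] == b"Exif\x00\x00":
            return _orientation_from_tiff(segment[6:])
        pos += 2 + length
    return None


def _orientation_from_tiff(tiff):
    # EXIF reuses the TIFF layout: "II" means little-endian, "MM" big-endian
    endian = {b"II": "<", b"MM": ">"}.get(tiff[:2])
    if endian is None:
        return None
    (ifd_offset,) = struct.unpack(endian + "I", tiff[4:8])
    (entry_count,) = struct.unpack(endian + "H", tiff[ifd_offset:ifd_offset + 2])
    for i in range(entry_count):
        start = ifd_offset + 2 + 12 * i  # each directory entry is 12 bytes
        tag, value_type, _count = struct.unpack(endian + "HHI", tiff[start:start + 8])
        if tag == 0x0112 and value_type == 3:  # Orientation, stored as a 16-bit number
            (value,) = struct.unpack(endian + "H", tiff[start + 8:start + 10])
            return value
    return None
```

You would call it as `read_exif_orientation(open("photo.jpg", "rb").read())`, where `photo.jpg` is a placeholder for one of your own files.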

Here is the EXIF metadata inside our goose's JPEG image, as reported by exiftool:

See the 'Orientation' element? It tells the viewer that the picture should be rotated 90 degrees clockwise before being displayed on the screen. If a program forgets to do this, the image will appear on its side!
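If you would rather check the orientation from Python than from exiftool, something along these lines should work with Pillow 6.0 or newer. The helper name `describe_orientation` and the wording of the descriptions are my own for this sketch. The tag number 274 (0x0112) is the EXIF Orientation tag.

```python
import PIL.Image

ORIENTATION_TAG = 0x0112  # decimal 274

# How the stored pixels relate to the picture the photographer intended
ORIENTATION_MEANINGS = {
    1: "normal, nothing to do",
    2: "stored mirrored left to right",
    3: "stored rotated 180 degrees",
    4: "stored mirrored top to bottom",
    5: "stored mirrored along the top-left diagonal",
    6: "needs a 90 degree clockwise rotation",
    7: "stored mirrored along the top-right diagonal",
    8: "needs a 90 degree counterclockwise rotation",
}


def describe_orientation(file):
    """Return (value, meaning) for the EXIF Orientation tag of an image file."""
    with PIL.Image.open(file) as img:
        # A missing tag is treated as "normal"
        value = img.getexif().get(ORIENTATION_TAG, 1)
    return value, ORIENTATION_MEANINGS.get(value, "unknown value")
```

For a photo like the goose, `describe_orientation("goose.jpg")` (a placeholder file name) should report value 6.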

Why does this break so many machine vision applications in Python?

EXIF metadata was not originally part of the JPEG format. It was introduced much later, borrowing the idea from the TIFF format. For backward compatibility, this metadata is optional, and some programs do not bother to parse it.

Most Python libraries that work with image data, such as numpy, scipy, TensorFlow and Keras, see themselves as scientific tools for serious people who work with shared datasets. They do not concern themselves with consumer-level issues such as automatic image rotation, even though practically every photo taken with a modern camera needs it.

This means that when you load an image with almost any Python library, you get the original, unrotated image data. And guess what happens when you feed a sideways or upside-down photo into a face or object detection model? The detector does not fire because you gave it bad data.
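You can see the effect without a camera. The sketch below fakes a phone photo in memory (a blank 40x30 JPEG tagged with Orientation 6, which is an assumption made purely for the demonstration) and loads it twice: once the naive way, and once through `PIL.ImageOps.exif_transpose`, which is available in Pillow 6.0 and newer.

```python
import io

import numpy as np
import PIL.Image
import PIL.ImageOps

# Simulate a phone photo: pixels stored 40 wide by 30 high, tagged Orientation 6
exif = PIL.Image.Exif()
exif[0x0112] = 6
buffer = io.BytesIO()
PIL.Image.new("RGB", (40, 30)).save(buffer, format="JPEG", exif=exif)

# What most loading code does: the EXIF tag is silently ignored
buffer.seek(0)
raw = np.array(PIL.Image.open(buffer))

# The same file with the orientation applied before conversion
buffer.seek(0)
fixed = np.array(PIL.ImageOps.exif_transpose(PIL.Image.open(buffer)))

print(raw.shape)    # (30, 40, 3): height 30, width 40, still sideways
print(fixed.shape)  # (40, 30, 3): height 40, width 30, upright
```

Both arrays hold the same pixels, but only the second one matches what an image viewer would show you.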

You might think that this only happens in programs written by beginners and students, but it does not! Even the demo of Google's flagship Vision API does not handle EXIF orientation correctly:

The Google Vision API demo does not know how to rotate a portrait-oriented image taken with a standard mobile phone

Although Google Vision recognizes that there are animals in the sideways image, it gives them only the generic label “animal”, because it is much harder for a machine vision model to recognize a goose on its side than an upright goose. Here is the result if you rotate the image correctly before submitting it to the model:

With the correct orientation, Google detects the birds with the more specific label “goose” and a higher confidence score. Much better!

The problem is super obvious when you can clearly see that the image is on its side, as in this demo. But this is where things get insidious: usually you cannot see it! Every normal program on your computer displays the image in its correct orientation, not the way it is actually stored on disk. So when you open an image to figure out why your model is not working, it looks fine, and you are left with no idea what is wrong!

Finder on Mac always displays photos with the correct rotation from EXIF. There is no way to see that the image is actually stored on its side.

This inevitably leads to a lot of open issues on GitHub: people complain that open source projects are broken and that models are not very accurate. But the real problem is much simpler: they are feeding rotated or flipped photos into the model!
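Since an image viewer will not reveal the problem, one way to find out whether your own dataset is affected is to count the orientation tags directly. This is only a sketch: `count_orientations` is a name invented here, it looks only at `.jpg` and `.jpeg` files, and it assumes every such file can be opened by Pillow.

```python
from collections import Counter
from pathlib import Path

import PIL.Image


def count_orientations(folder):
    """Count how many JPEGs under `folder` carry each EXIF Orientation value."""
    counts = Counter()
    for path in sorted(Path(folder).rglob("*")):
        if path.suffix.lower() not in {".jpg", ".jpeg"}:
            continue
        with PIL.Image.open(path) as img:
            # Files without the tag are counted as 1 (normal)
            counts[img.getexif().get(0x0112, 1)] += 1
    return counts
```

If `count_orientations("my_dataset")` (a placeholder path) returns anything other than a single entry for 1, some of your images are reaching the model rotated or flipped.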

Fixing the problem

The solution is to check the EXIF orientation metadata every time you load an image in a Python program and rotate the image if necessary. It is pretty easy to do, but it is surprisingly hard to find code examples online that do it correctly for all orientations.

Here is the code to load any image into a numpy array with the correct orientation:

```python
import PIL.Image
import PIL.ImageOps
import numpy as np


def exif_transpose(img):
    if not img:
        return img

    exif_orientation_tag = 274

    # Check for EXIF data (only present on some files)
    if hasattr(img, "_getexif") and isinstance(img._getexif(), dict) and exif_orientation_tag in img._getexif():
        exif_data = img._getexif()
        orientation = exif_data[exif_orientation_tag]

        # Handle EXIF Orientation
        if orientation == 1:
            # Normal image - nothing to do!
            pass
        elif orientation == 2:
            # Mirrored left to right
            img = img.transpose(PIL.Image.FLIP_LEFT_RIGHT)
        elif orientation == 3:
            # Rotated 180 degrees
            img = img.rotate(180)
        elif orientation == 4:
            # Mirrored top to bottom
            img = img.rotate(180).transpose(PIL.Image.FLIP_LEFT_RIGHT)
        elif orientation == 5:
            # Mirrored along top-left diagonal
            img = img.rotate(-90, expand=True).transpose(PIL.Image.FLIP_LEFT_RIGHT)
        elif orientation == 6:
            # Rotated 90 degrees
            img = img.rotate(-90, expand=True)
        elif orientation == 7:
            # Mirrored along top-right diagonal
            img = img.rotate(90, expand=True).transpose(PIL.Image.FLIP_LEFT_RIGHT)
        elif orientation == 8:
            # Rotated 270 degrees
            img = img.rotate(90, expand=True)

    return img


def load_image_file(file, mode='RGB'):
    # Load the image with PIL
    img = PIL.Image.open(file)

    if hasattr(PIL.ImageOps, 'exif_transpose'):
        # Very recent versions of PIL can do the EXIF transpose internally
        img = PIL.ImageOps.exif_transpose(img)
    else:
        # Otherwise, do the EXIF transpose ourselves
        img = exif_transpose(img)

    img = img.convert(mode)

    return np.array(img)
```

From here, you can pass the array of image data to any standard Python machine vision library that expects an array as input, such as Keras or TensorFlow.

Since the problem is so widespread, I published this function as a pip library called image_to_numpy. You can install it like this:

```sh
pip3 install image_to_numpy
```

It works with any Python program and fixes image loading. For example:

```python
import matplotlib.pyplot as plt
import image_to_numpy

# Load your image file
img = image_to_numpy.load_image_file("my_file.jpg")

# Show it on the screen (or whatever you want to do)
plt.imshow(img)
plt.show()
```

See the readme file for more details.

Enjoy it!
