What is the resolution of the human eye (or how many megapixels do we see at any given time)?

Photographers, and sometimes people from other fields, often wonder about the resolution of their own vision.

The question seems simple at first glance: just google it and everything will become clear. But almost every article online either quotes "cosmic" figures, such as 400-600 megapixels, or offers rather weak reasoning.

So I will try to cover the topic briefly but step by step, so that nothing gets missed.

Let's start with the general structure of the visual system:

- Retina.

- Optic nerve.

- Thalamus (the lateral geniculate nucleus, LGN).

- Visual cortex.

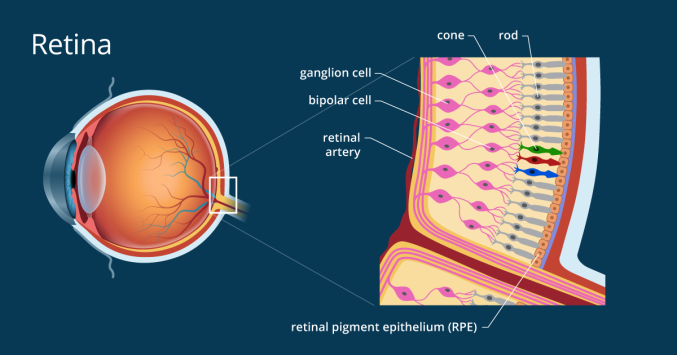

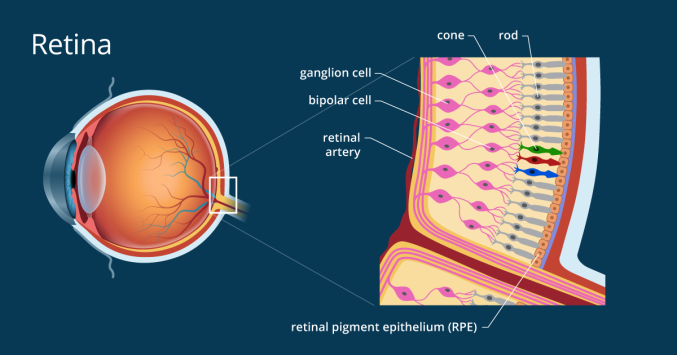

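The retina contains three types of light-sensitive cells: rods, cones, and intrinsically photosensitive retinal ganglion cells (ipRGCs).

We are only interested in the cones and rods, since they are the ones that form the picture.

- Cones perceive color: blue, green, and red.

- Rods provide the luminance component, with peak sensitivity in the blue-green (turquoise) part of the spectrum.

There are about 7 million cones on average, and about 120 million rods.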

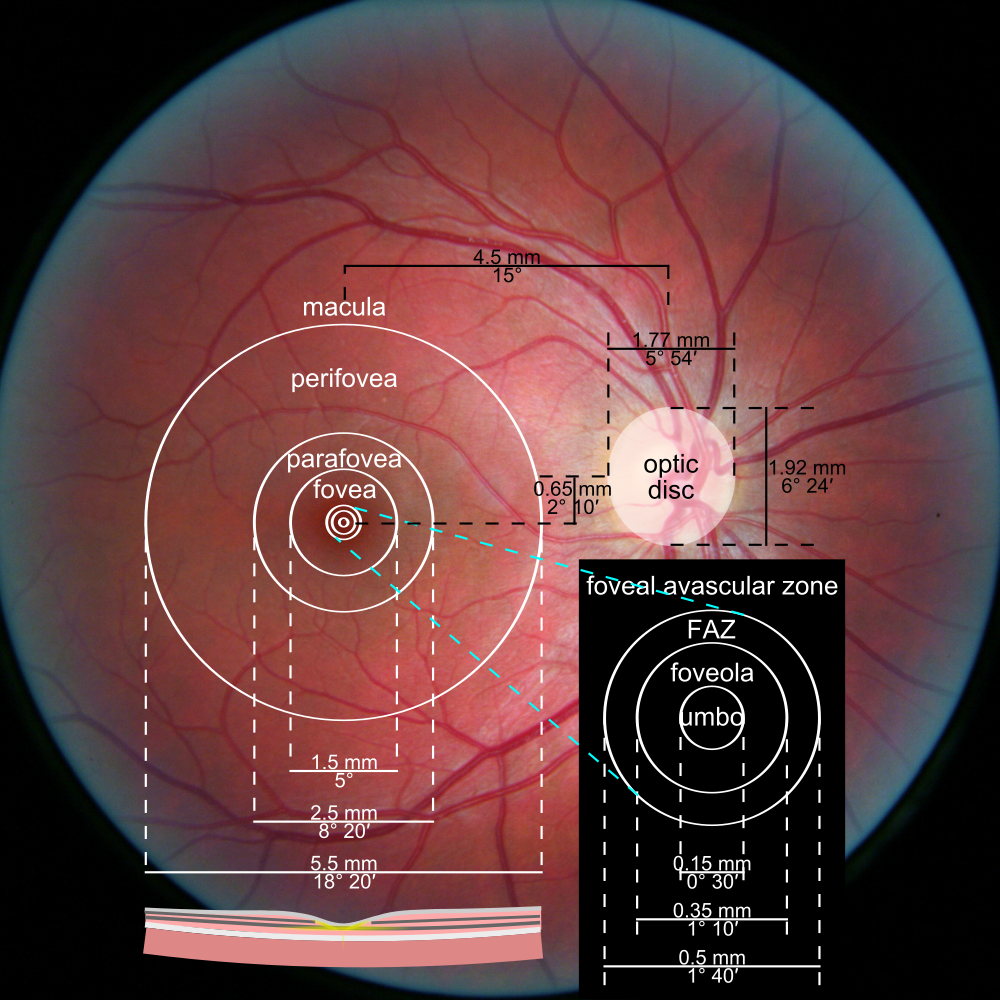

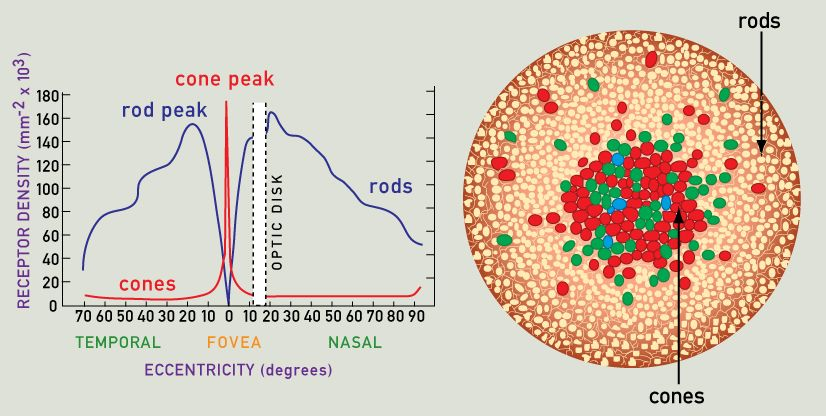

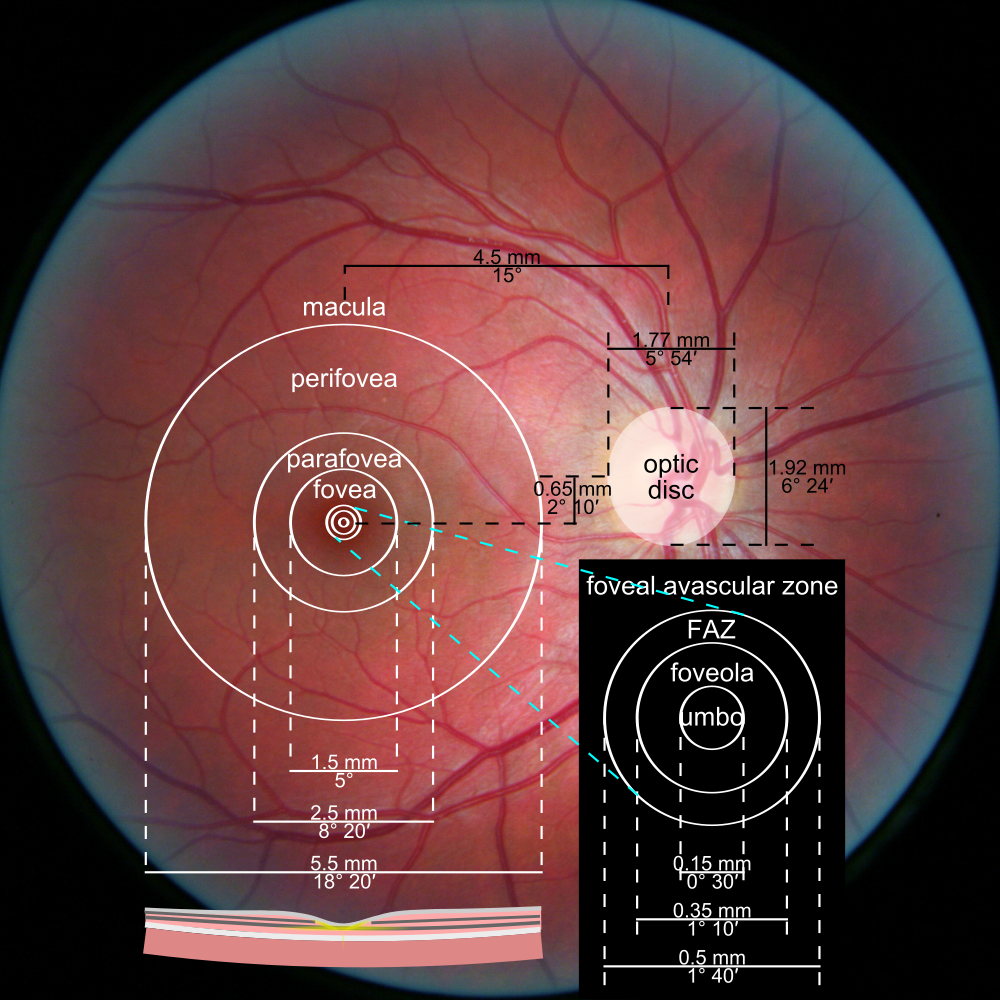

Cones are packed most densely in the fovea (fovea centralis), a small pit at the center of the macula, the "yellow spot" in the middle of the retina. The fovea is responsible for the sharpest part of the visual field.

To give a sense of scale: the fovea covers about as much as the nail of your little finger at arm's length, a visual angle of roughly 1.5 degrees. The farther from the center of the fovea, the blurrier the image we see.
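As a rough check of the little-finger comparison, the sketch below converts a visual angle into the width it spans at a given distance. The 65 cm arm length is an assumed, illustrative value, not a figure from the article, and the function name is hypothetical.

```python
import math

def width_at_distance(angle_deg: float, distance_cm: float) -> float:
    """Width (cm) that a visual angle spans at a given viewing distance."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

FOVEA_ANGLE_DEG = 1.5   # from the text
ARM_LENGTH_CM = 65.0    # assumption: a typical outstretched arm

fovea_patch_cm = width_at_distance(FOVEA_ANGLE_DEG, ARM_LENGTH_CM)
print(f"Sharp patch at arm's length: about {fovea_patch_cm:.1f} cm wide")
```

With these assumed numbers the sharp patch is under 2 cm wide, which is the same order of magnitude as a fingernail.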

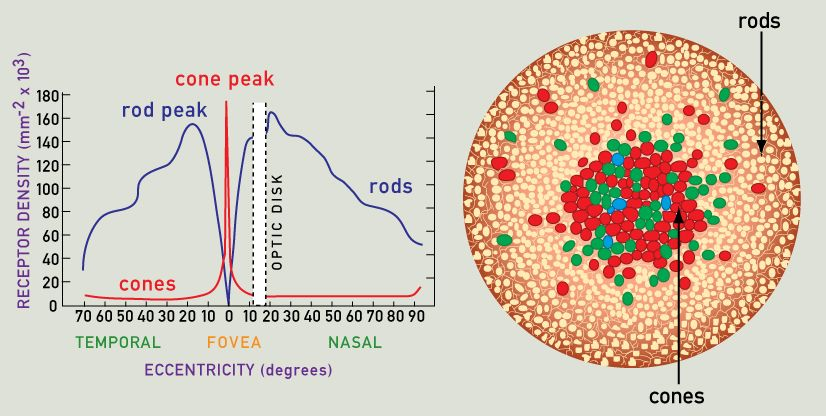

Density distribution of rods and cones across the retina.

The rods are responsible for perceiving brightness and contrast. Rod density is highest roughly midway between the fovea and the edge of the retina.

An interesting fact: many of you have noticed old monitors and TVs flickering when you looked at them with your peripheral vision, while everything looked fine when you looked straight at them. Sound familiar? :)

This is due to the high density of rods in the peripheral part of the retina. Visual acuity there is poor, but sensitivity to changes in brightness is at its highest.

It is exactly this feature that helped our ancestors react quickly to the slightest movement at the edge of their field of view, so that tigers would not bite them in the ass :)

So, what do we have? The retina contains about 130 million receptors in total, i.e. about 130 megapixels. Hooray, there's the answer!

No... this is only the beginning, and that figure is far from the truth.

Let's go back to the fovea.

Each cone in the very center of the fovea (the umbo) has its own axon (nerve fiber).

That is, these receptors have, so to speak, the highest priority: their signal reaches the visual cortex almost directly.

Cones located farther from the center are pooled in groups of several cells; these groups are called “receptive fields”.

For example, 5 cones connect to one axon, and the signal then travels along the optic nerve to the cortex.

The diagram shows exactly this case: several cones grouped into one receptive field.

The rods, in turn, are pooled in groups of several thousand: what matters for them is not the sharpness of the picture but the brightness.
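The grouping described above can be sketched as simple pooling, where every axon carries one value that summarizes its group of receptors. The sketch assumes the axon reports the mean of its group and uses the group size of 5 from the example. Averaging and the function name `pool_receptors` are illustrative assumptions, not a model of real retinal wiring.

```python
from statistics import mean

def pool_receptors(signals: list[float], group_size: int) -> list[float]:
    """Collapse consecutive groups of receptor signals into one value per axon."""
    return [
        mean(signals[i:i + group_size])
        for i in range(0, len(signals), group_size)
    ]

# Ten receptor readings with a sharp edge inside the second group.
receptors = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0]

foveal = pool_receptors(receptors, group_size=1)      # one cone per axon
peripheral = pool_receptors(receptors, group_size=5)  # five cones per axon

print(len(foveal), foveal)          # ten values, edge position preserved
print(len(peripheral), peripheral)  # two values, edge smeared into an average
```

With one receptor per axon the exact position of the edge survives. With five per axon only the average brightness of each group is left, which is the trade-off between sharpness and brightness described in the text.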

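So, an interim summary:

- each cone in the very center of the retina has its own axon,

- cones toward the edges of the fovea are pooled into receptive fields of several cells,

- several thousand rods connect to a single axon.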

Here the fun begins: through this grouping, ~130 million receptors are reduced to about 1 million nerve fibers (axons).

Yes, just one million!
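The arithmetic behind these figures, using only the numbers quoted in the article, looks like this. The per-axon average is only a rough guide, since cones in the center are wired one-to-one and rods by the thousand.

```python
CONES = 7_000_000              # from the text
RODS = 120_000_000             # from the text
OPTIC_NERVE_AXONS = 1_000_000  # from the text, order of magnitude

receptors = CONES + RODS
receptors_per_axon = receptors / OPTIC_NERVE_AXONS

# 127 million, which the text rounds to ~130 million
print(f"{receptors / 1e6:.0f} million receptors")
print(f"about {receptors_per_axon:.0f} receptors per axon on average")
```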

But how can that be?!

Camera sensors have a gazillion megapixels, and yet our eyes are still better!

We are getting to that now :)

So, 130 megapixels have turned into 1 megapixel, and yet every day we look at the world around us... and the graphics are pretty good, right? :)

There are a couple of tools that help us see the world around us as sharp almost all the time:

1. Our eyes make macro- and microsaccades, i.e. constant eye movements.

Macrosaccades are voluntary eye movements made when a person examines something. During them, neighboring images are “buffered” and merged, so the world around us appears sharp.

Microsaccades are involuntary, very fast, and tiny (a few arc minutes) movements.

They keep the image from sitting still on the same receptors, giving them time to regenerate visual pigment; without them the field of view would simply fade to gray.

2. Retinal projection

I'll start with an example: when we read text on a monitor and gradually turn the mouse wheel to scroll it, the text does not blur... although it should :) This is a very interesting trick, and here the visual cortex joins in.

It constantly holds the picture in a buffer, and when the object or text in front of the viewer shifts abruptly, it quickly shifts the buffered picture and superimposes it on the real image.

But how does it know where to shift it?

Very simple: the motor cortex already knows your finger's movement on the wheel down to the millimeter... The visual and motor areas work in sync, so you do not see any smearing.

But when someone else spins the wheel... :)

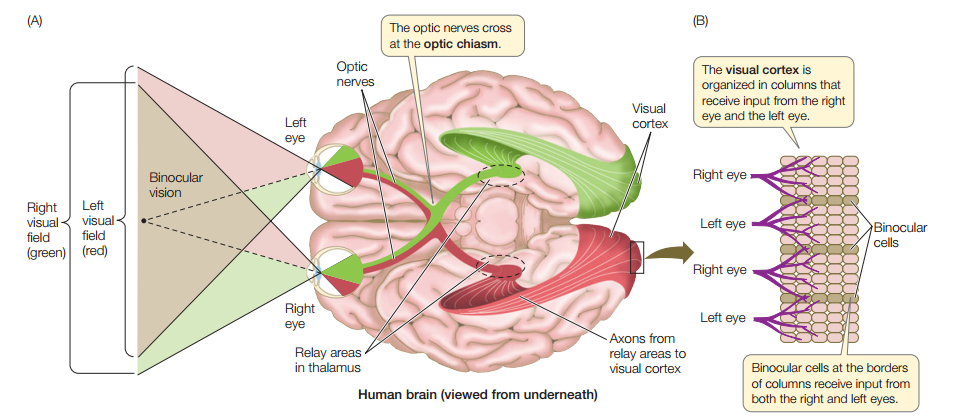

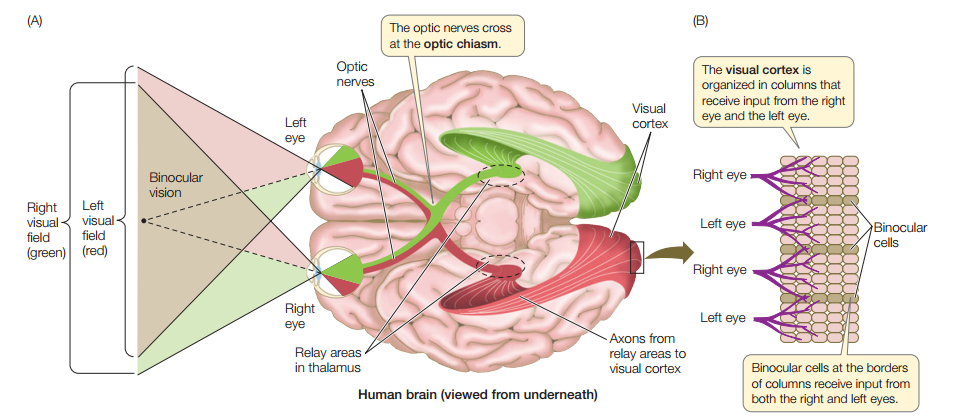

Optic nerve

An optic nerve with a "resolution" of ~1 megapixel leaves each eye (anywhere from 770 thousand to 1.6 million fibers; some people are luckier than others). The nerves from the left and right eyes then meet at the optic chiasm (this can be seen in the first picture), where about 53% of the axons from each eye cross to the opposite side.
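To get a feel for these numbers, the sketch below takes the fiber counts quoted above and shows how many axons would cross at the chiasm, along with the side of a square image with the same number of "pixels". Treating one fiber as one pixel is the article's metaphor, and the function name `split_at_chiasm` is hypothetical.

```python
CROSSING_FRACTION = 0.53  # share of each eye's axons that cross, from the text

def split_at_chiasm(fibers: int) -> tuple[int, int]:
    """Return (crossed, uncrossed) axon counts for one optic nerve."""
    crossed = round(fibers * CROSSING_FRACTION)
    return crossed, fibers - crossed

# Lower bound, round figure, and upper bound quoted in the text.
for fibers in (770_000, 1_000_000, 1_600_000):
    crossed, uncrossed = split_at_chiasm(fibers)
    side = round(fibers ** 0.5)  # side of a square image with as many pixels
    print(f"{fibers:>9,} fibers ~ {side} x {side} px; "
          f"{crossed:,} cross, {uncrossed:,} stay on the same side")
```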

These two bundles then arrive at the left and right halves of the thalamus, a kind of signal “distributor” in the very center of the brain.

In the thalamus, one could say, the picture gets its first “retouching”: contrast is increased.
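One simple way to picture such a contrast boost is a center-surround filter: each sample is pushed away from the average of its neighbors. The code below is a toy one-dimensional illustration under that assumption. It does not describe what the thalamus actually computes, and the function name and `strength` parameter are hypothetical.

```python
def enhance_contrast(signal: list[float], strength: float = 1.0) -> list[float]:
    """Push each sample away from the mean of its two neighbors."""
    out = []
    for i, center in enumerate(signal):
        left = signal[max(i - 1, 0)]                  # clamp at the left border
        right = signal[min(i + 1, len(signal) - 1)]   # clamp at the right border
        surround = (left + right) / 2
        out.append(center + strength * (center - surround))
    return out

# A soft step from dark (0.2) to bright (0.8).
edge = [0.2, 0.2, 0.2, 0.8, 0.8, 0.8]
enhanced = enhance_contrast(edge)
print(enhanced)
```

Flat regions pass through unchanged, while the dark side of the step gets darker and the bright side brighter, so the edge stands out more.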

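Next, the signal from the thalamus enters the visual cortex.

And here an incredible number of processes take place. Here are the main ones:

- merging the pictures from the two eyes into one, something like an overlay (1 MP remains),

- detection of elementary shapes: lines, circles, triangles,

- detection of complex patterns: faces, houses, cars, etc.,

- motion processing,

- coloring the picture. Yes, coloring: up to this point the cortex has simply been receiving analog pulses of different frequencies,

- retouching of the retina's blind spots; without it, we would constantly see two dark gray spots the size of an apple in front of us,

- a lot of "Photoshop",

- and finally, output of the final image, what you call sight: the phenomenon of vision.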

So why, you may ask, don't we see individual pixels? The picture ought to look awful, like on an old game console!

This is the essence of the phenomenology of vision: you have ONE visual system. You cannot look at your own picture from the outside.

If a person had two visual systems and could switch at will from system 1 to system 2 to evaluate how the first one works, then yes, things would look sad :)

But with a single visual system, YOU yourself are the picture that you see!

The visual cortex itself is what is aware of the process of seeing. Reread that a few times.

With damage to the primary visual cortex, a person may not realize that he is blind (this is called anosognosia): he does not see the picture at all, yet he can walk normally down a corridor with obstacles, a phenomenon known as blindsight (see the first link in the references).

To conclude this, I hope, short and clear article, I want to remind you that we all have a ~1 megapixel picture... live with it :)

Literature:

David Hubel - “Eye, Brain, and Vision”

Stephen Palmer - “Vision Science: Photons to Phenomenology”

Bernard Baars, Nicole Gage - “Brain, Cognition, Mind”

John Nicholls, A. Martin, B. Wallace, P. Fuchs - “From Neuron to Brain”

Michael Gazzaniga - “Who's in Charge?”

References:

https://www.cell.com/fulltext/S0960-9822(08)01433-4

https://iovs.arvojournals.org/article.aspx?articleid=2161180

https://en.wikipedia.org/wiki/Fovea_centralis

https://en.wikipedia.org/wiki/Photoreceptor_cell

UPD: I have received quite a few comments and questions about color perception. If this topic interests you, post the tag # color perception and I will get to work on an article.
