.NET multithreading: when performance is lacking

The .NET platform provides many pre-built synchronization primitives and thread-safe collections. If you need to implement, for example, a thread-safe cache or a request queue when developing an application, these ready-made solutions are usually used, sometimes several at once. In some cases, this leads to performance problems: a long wait on locks, excessive memory consumption and long garbage collection.

These problems can be solved if we take into account that standard solutions are made quite general - they may have an overhead in our scenarios that is redundant. Accordingly, you can write, for example, your own effective thread-safe collection for a specific case.

Under the cutscene is a video and a transcript of my report from the DotNext conference, where I analyze a few examples when using tools from the standard .NET library (Task.Delay, SemaphoreSlim, ConcurrentDictionary) led to performance drawdowns, and offer solutions tailored for specific tasks and devoid of these shortcomings.

At the time of the report, he worked in the circuit. Kontur develops various applications for business, and the team I worked in deals with infrastructure and develops various support services and libraries that help developers in other teams create product services.

The Infrastructure team builds its data warehouse, an application hosting system for Windows and various libraries for developing microservices. Our applications are based on a microservice architecture - all services interact with each other over the network, and, of course, they use quite a lot of asynchronous and multi-threaded code. Some of these applications are quite performance critical; they need to be able to handle a lot of requests.

What are we going to talk about today?

- Multithreading and asynchrony in .NET;

- Stuffing synchronization primitives and collections;

- What to do if standard approaches cannot cope with the load?

Let's analyze some features of working with multithreaded and asynchronous code in .NET. Let's look at some synchronization primitives and concurrent collections, see how they are arranged inside. We will discuss what to do if there is not enough performance, if the standard classes can not cope with the load, and whether something can be done in this situation.

I will tell you four stories that happened at our production site.

History 1: Task.Delay & TimerQueue

This story is already quite well-known, including about it at previous DotNext. However, it got a rather interesting sequel, so I added it. So what is the point?

1.1 Polling and long polling

The server performs long operations, the client waits for them.

Polling: the client periodically asks the server about the result.

Long polling: the client sends a request with a long timeout, and the server responds when the operation is completed.

Benefits:

- Less traffic

- The client learns about the result faster

Imagine that we have a server that can handle some long requests, for example, an application that converts XML files to PDF, and there are clients who run these tasks for processing and want to wait for their result asynchronously. How can such an expectation be realized?

The first way is polling . The client starts the task on the server, then periodically checks the status of this task, while the server returns the status of the task ("completed" / "failed" / "completed with an error"). The client periodically sends requests until the result appears.

The second way is long polling . The difference here is that the client sends requests with long timeouts. The server, receiving such a request, will not immediately report that the task has not been completed, but will try to wait a while for the result to appear.

So what is the advantage of long polling over regular polling? Firstly, less traffic is generated. We make fewer network requests - less traffic is being chased across the network. Also, the client will be able to find out about the result faster than with regular polling, because he does not need to wait for the interval between several polling requests. What we want to get is understandable. How will we implement this in code?

Task: timeoutFor example, we have a Task that sends a request to the server, and we want to wait for its result with a timeout, that is, we will either return the result of this Task or send some kind of error. C # code will look like this:

We want to wait for Task with a timeout

await SendAsync ();

var sendTask = SendAsync(); var delayTask = Task.Delay(timeout); var task = await Task.WhenAny(sendTask, delayTask); if (task == delayTask) return Timeout;

This code launches our Task, the result of which we want to wait, and Task.Delay. Next, using Task.WhenAny, we are waiting for either our Task or Task.Delay. If it turns out that Task.Delay is executed first, then the time is up and we have a timeout, we must return an error.

This code, of course, is not perfect and can be improved. For example, it would not hurt to cancel Task.Delay if SendAsync returned earlier, but this is not very interesting for us now. The bottom line is that if we write such a code and apply it for long polling with long timeouts, we will get some performance problems.

1.2 Problems with long polling

- Big timeouts

- Many concurrent queries

- => High CPU utilization

In this case, the problem is the high consumption of processor resources. It may happen that the processor is fully loaded at 100%, and the application generally stops working. It would seem that we do not consume processor resources at all: we do some asynchronous operations, wait for a response from the server, and the processor is still loaded with us.

When we faced this situation, we removed a memory dump from our application:

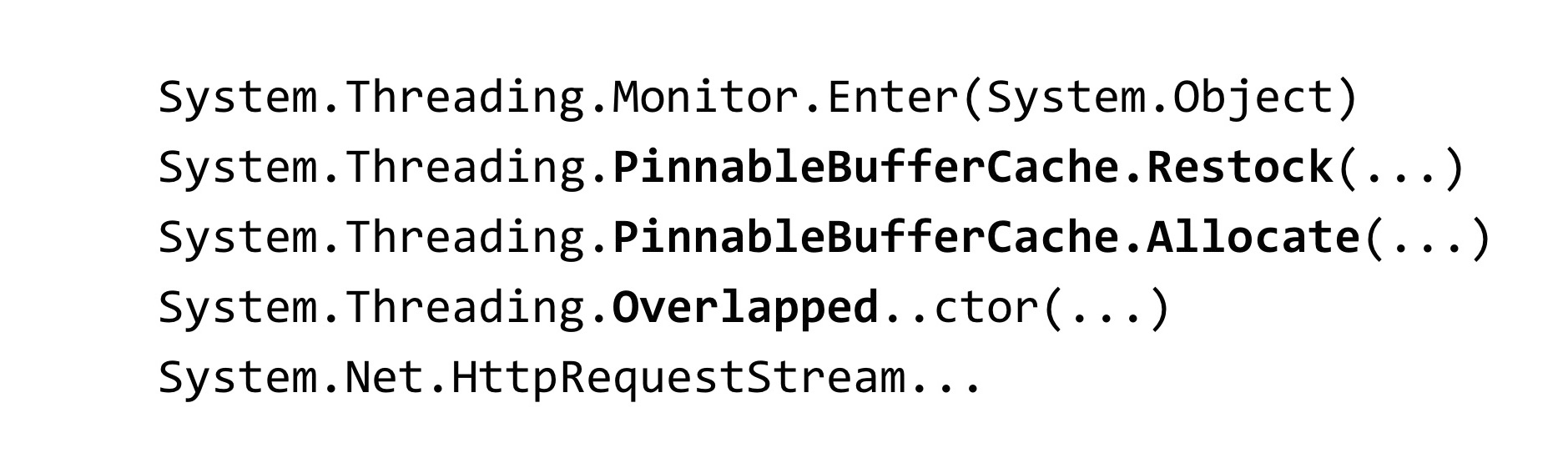

~*e!clrstack System.Threading.Monitor.Enter(System.Object) System.Threading.TimerQueueTimer.Change(…) System.Threading.Timer.TimerSetup(…) System.Threading.Timer..ctor(…) System.Threading.Tasks.Task.Delay(…)

To analyze the dump, we used the WinDbg tool. We entered a command that shows stack traces of all managed threads, and saw such a result. We have so many threads in the process that are waiting on some lock. The Monitor.Enter method is what the lock construct in C # expands into. This lock is captured inside classes called Timer and TimerQueueTimer. In Timer, we came from Task.Delay when we tried to create them. What is it? When Task.Delay starts, the lock inside the TimerQueue is captured.

1.3 Lock convoy

- Many threads try to lock one lock

- Under the lock, little code is executed

- Time is spent on thread synchronization, not code execution.

- Threadblocks are blocked - they are not infinite

We had a lock convoy in the application. Many threads try to capture the same lock. Under this lock, quite a bit of code is executed. Processor resources here are not spent on the application code itself, but on operations to synchronize threads among themselves on this lock. It is also worth noting a feature related to .NET: the threads that participate in lock convoy are threads from the thread pool.

Accordingly, if threads from the thread pool are blocked, they can end - the number of threads in the thread pool is limited. It can be configured, but there is still an upper limit. After it is reached, all threadpool threads will participate in lock convoy, and any code involving the threadpool will cease to be executed in the application. This greatly worsens the situation.

1.4 TimerQueue

- Manages timers in a .NET application.

- Timers are used in:

- Task.Delay

- CancellationTocken.CancelAfter

- HttpClient

TimerQueue is a class that manages all timers in a .NET application. If you once programmed in WinForms, you may have created timers manually. For those who do not know what timers are: they are used in Task.Delay (this is just our case), they are also used inside the CancellationToken, in the CancelAfter method. That is, replacing Task.Delay with CancellationToken.CancelAfter would not help us in any way. In addition, timers are used in many internal .NET classes, for example, in HttpClient.

As far as I know, some implementations of HttpClient handlers have timers. Even if you do not use them explicitly, do not start Task.Delay, most likely, you still use them anyway.

Now let's look at how TimerQueue is arranged inside.

- Global state (per-appdomain):

- Double linked list of TimerQueueTimer

- Lock object - Routine Timer Callbacks

- Timers not ordered by response time

- Adding a timer: O (1) + lock

- Timer Removal: O (1) + lock

- Start timers: O (N) + lock

Inside TimerQueue there is a global state, it is a doubly linked list of objects of type TimerQueueTimer. TimerQueueTimer contains a link to other TimerQueueTimer, to neighboring in a linked list, it also contains the time of the timer and callback, which will be called when the timer fires. This doubly linked list is protected by a lock object, just the one on which lock convoy happened in our application. Also inside TimerQueue there is a Routine that launches callbacks tied to our timers.

Timers are in no way ordered by response time, the whole structure is optimized for adding / removing new timers. When Routine starts, it runs through the entire doubly linked list, selects the timers that should work, and calls them back.

The complexity of the operation here is such. Adding and removing a timer takes place O per unit, and the start of timers takes place per line. Moreover, if everything is acceptable with the algorithmic complexity, there is one problem: all these operations capture the lock, which is not very good.

What situation can happen? We have too many timers accumulated in TimerQueue, so when Routine starts, it locks its long linear operation, at that time those who try to start or remove timers from TimerQueue cannot do anything about it. Because of this, lock convoy occurs. This issue has been fixed in .NET Core.

Reduce Timer lock contention (coreclr # 14527)How was it fixed? They messed up TimerQueue: instead of one TimerQueue, which was static for the entire AppDomain, for the whole application, several TimerQueue were made. When threads arrive there and try to start their timers, these timers will fall into a random TimerQueue, and the threads will have less chance of colliding on one lock.

- Lock sharding

- Environment.ProcessorCount TimerQueue's TimerQueueTimer- Separate queues for short / long-living timers

- Short timer: time <= 1/3 second

https://github.com/dotnet/coreclr/issues/14462

https://github.com/dotnet/coreclr/pull/14527

Also in .NET Core applied some optimizations. Timers were divided into long-lived and short-lived, separate TimerQueue are now used for them. The short-lived timer was selected to be less than 1/3 of a second. I don’t know why such a constant was chosen. In .NET Core, we failed to catch problems with timers.

https://github.com/Microsoft/dotnet-framework-early-access/blob/master/release-notes/NET48/dotnet-48-changes.md

https://github.com/dotnet/coreclr/labels/netfx-port-consider

This fix was backported to the .NET Framework, version 4.8. The netfx-port-consider tag is indicated in the link above, if you go to the .NET Core, CoreCLR, CoreFX repository, by this tag you can search for issue that will be backported to the .NET Framework, now there are about fifty of them. That is, the open source .NET greatly helped, quite a few bugs were fixed. You can read changelog .NET Framework 4.8: a lot of bugs have been fixed, much more than in other .NET releases. Interestingly, this fix is turned off by default in the .NET Framework 4.8. It is included in the entire file you know called App.config

The setting in App.config that enables this fix is called UseNetCoreTimer. Before the .NET Framework 4.8 came out, for our application to work and not go to lock convoy, I had to use my implementation of Task.Delay. In it, we tried to use a binary heap in order to more efficiently understand which timers should be called now.

1.5 Task.Delay: native implementation

- Binaryheap

- Sharding

- It helped, but not in all cases

Using a binary heap allows you to optimize the Routine, which calls callbacks, but worsens the time it takes to remove an arbitrary timer from the queue - for this you need to rebuild the heap. This is most likely why .NET uses a doubly linked list. Of course, just using a binary heap would not help us here, we also had to work out TimerQueue. This solution worked for some time, but then all the same, everything again fell into lock convoy due to the fact that timers are used not only where they are started in the code explicitly, but also in third-party libraries and .NET code. To completely fix this problem, you must upgrade to the .NET Framework version 4.8 and enable the fix from the .NET developers.

1.6 Task.Delay: conclusions

- Pitfalls everywhere - even in the most used things

- Do stress testing

- Switch to Core, get bug fixes (and new bugs) first :)

What are the conclusions from this whole story? Firstly, the pitfalls can be found everywhere, even in the classes that you use every day without thinking, for example, the same Task, Task.Delay.

I recommend carrying out stress testing of your proposals. This problem we just identified at the stage of load testing. We then shot it several times on production in other applications, but, nevertheless, stress testing helped us to delay the time before we encountered this problem in reality.

Switch to .NET Core - you will be the first to receive bug fixes (and new bugs). Where without new bugs?

The story about the timers is over, and we move on to the next.

Story 2: SemaphoreSlim

The following story is about the well-known SemaphoreSlim.

2.1 Server throttling

- It is required to limit the number of concurrently processed requests on the server

We wanted to implement throttling on the server. What it is? You probably all know the throttling on the CPU: when the processor overheats, it lowers its frequency to cool down, and this limits performance. So it is here. We know that our server can process N requests in parallel and not fall. What do we want to do? Limit the number of simultaneously processed requests to this constant and make it so that if more requests come to it, they queue up and wait until those requests that came earlier are executed. How can this problem be solved? It is necessary to use some kind of synchronization primitive.

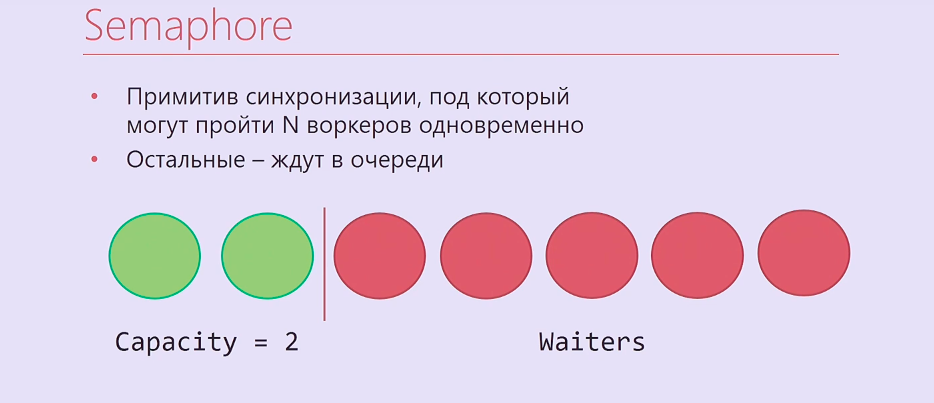

Semaphore is a synchronization primitive on which you can wait N times, after which the one who arrives N + first and so on will wait on it until those who entered it earlier release Semaphore. It turns out something like this: two threads of execution, two workers went under Semaphore, the rest stood in line.

Of course, it’s just that Semaphore is not very suitable for us, it is in .NET synchronous, so we took SemaphoreSlim and wrote this code:

var semaphore = new SemaphoreSlim(N); … await semaphore.WaitAsync(); await HandleRequestAsync(request); semaphore.Release();

We create SemaphoreSlim, wait on it, under Semaphore we process your request, after that we release Semaphore. It would seem that this is an ideal implementation of server throttling, and it could not be better. But everything is much more complicated.

2.2 Server throttling: complication

- Processing requests in LIFO order

- SemaphoreSlim

- Concurrentstack

- TaskCompletionSource

We forgot a little about business logic. The requests that come to throttling are real http requests. As a rule, they have some timeout, which is set by those who sent this request automatically, or a timeout of the user who presses F5 after some time. Accordingly, if you process requests in a queue order, like a regular Semaphore, then first of all those requests from the queue that have timed out may already be processed. If you work in stack order - process first of all those requests that came the very last, such a problem will not arise.

In addition to SemaphoreSlim, we had to use ConcurrentStack, TaskCompletionSource, to wrap a lot of code around all this, so that everything worked in the order we needed. TaskCompletionSource is such a thing, which is similar to CancellationTokenSource, but not for CancellationToken, but for Task. You can create a TaskCompletionSource, pull out a Task from it, give it out and then tell TaskCompletionSource that you need to set the result for this Task, and those who are waiting for this Task will find out about this result.

We have all implemented it. The code is awful. and, worst of all, it turned out to be inoperative.

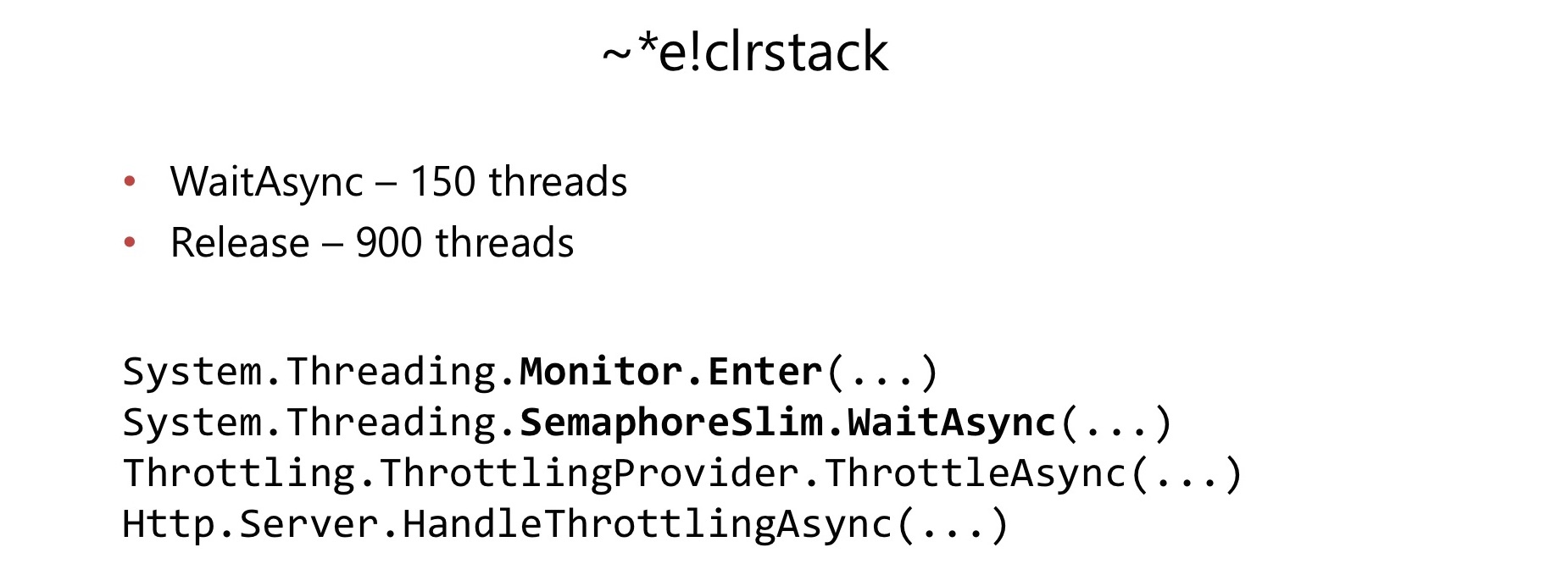

A few months after the start of its use in a rather heavily loaded application, we encountered a problem. In the same way as in the previous case, CPU consumption has increased to 100%. We did the same, removed the dump, looked at it in WinDbg, and again found the lock convoy.

This time Lock convoy occurred inside SemaphoreSlim.WaitAsync and SemaphoreSlim.Release. It turned out that there is a lock inside SemaphoreSlim, it is not lock-free. This turned out to be a rather serious drawback for us.

Inside SemaphoreSlim there is an internal state (a counter of how many workers can still go under it), and a doubly linked list of those who are waiting on this Semaphore. The ideas here are about the same: you can wait at this Semaphore, you can cancel your expectation - to leave this queue. There is a lock that just ruined our lives.

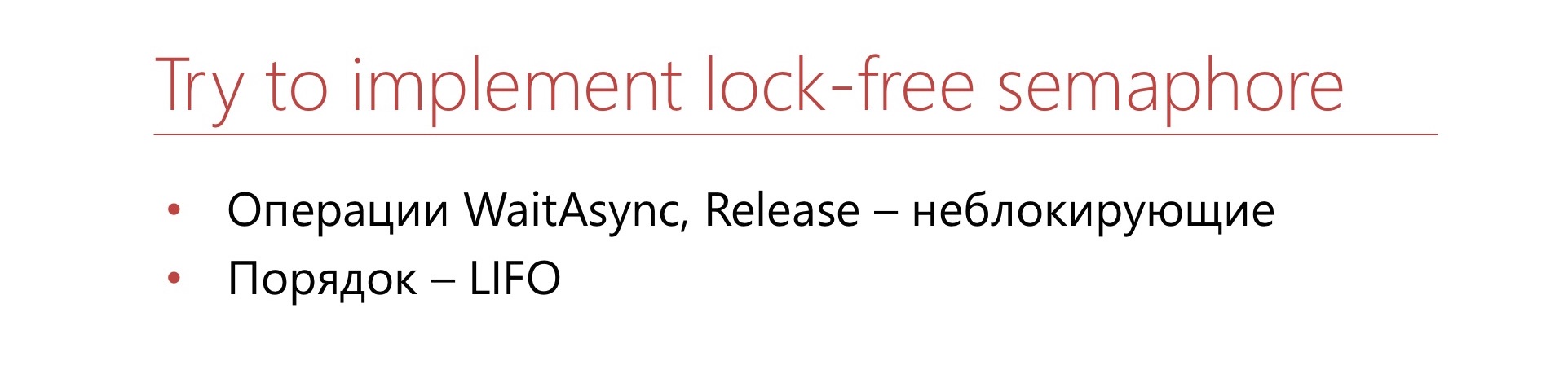

We decided: down with all the terrible code that we had to write.

Let's write our Semaphore, which will immediately be lock-free and which will immediately work in stack order. Canceling the wait is not important to us.

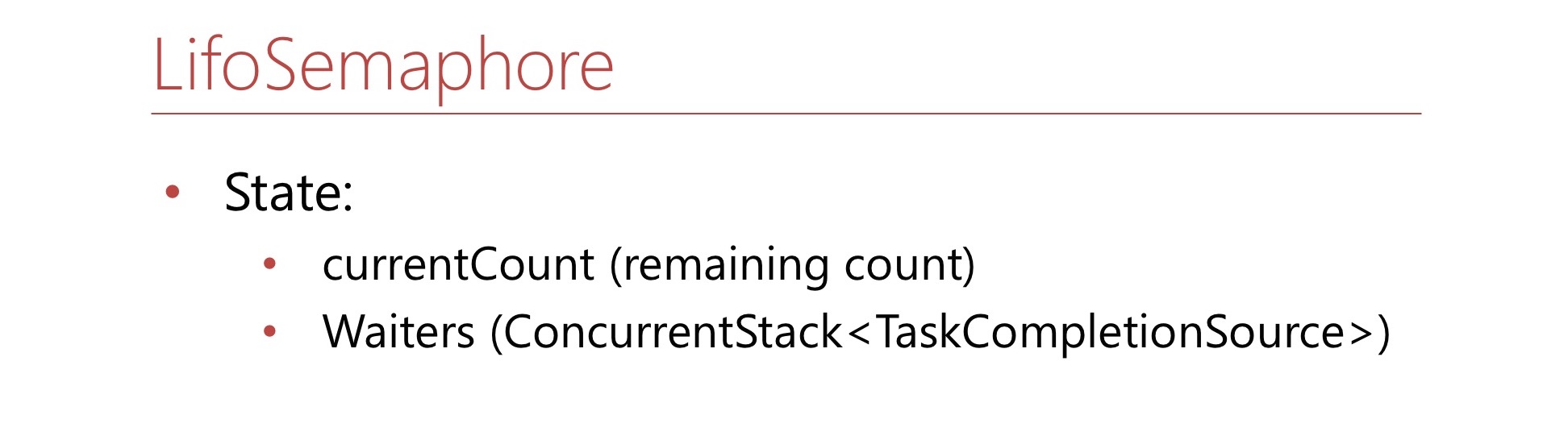

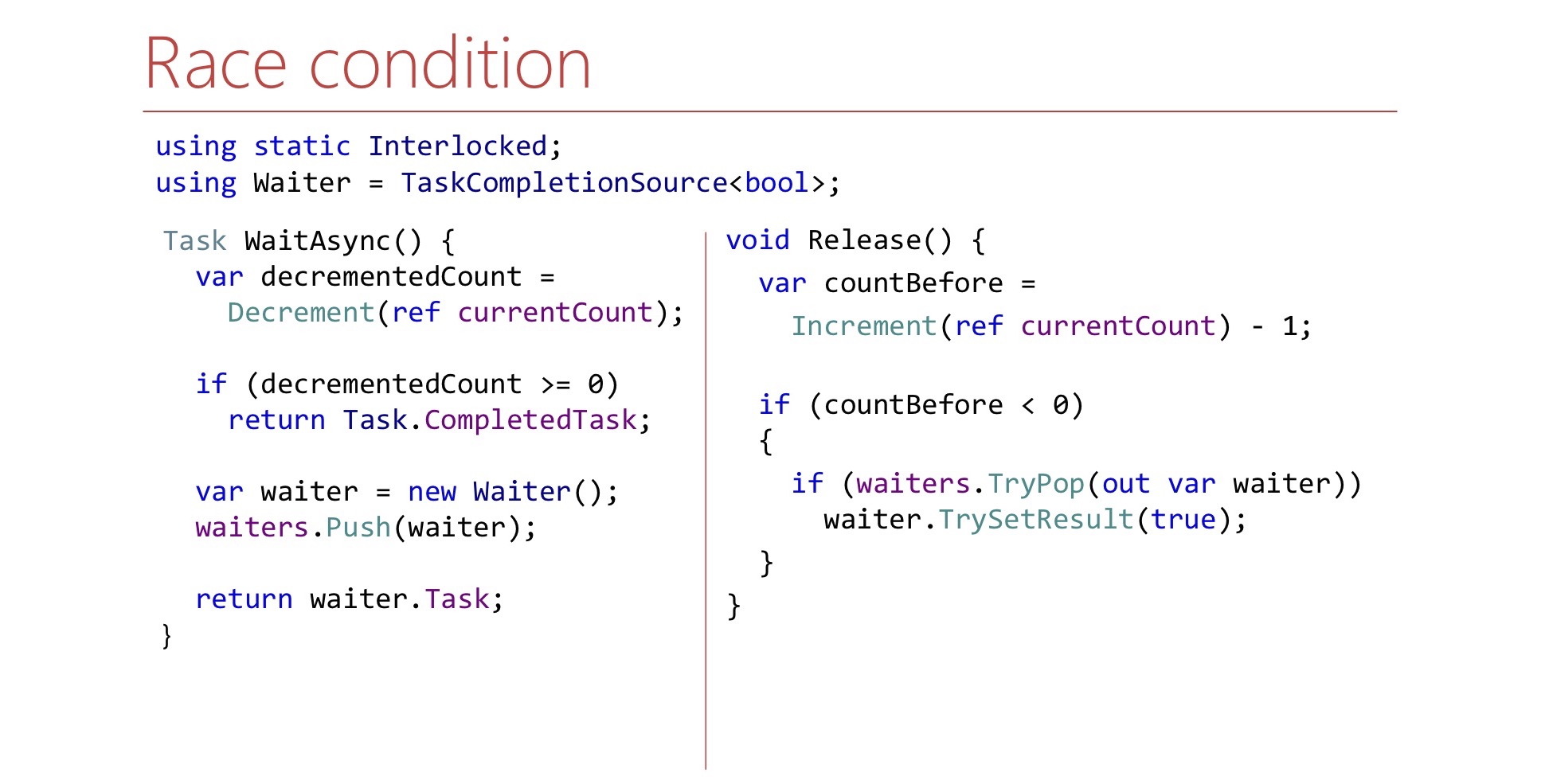

Define this condition. Here will be the number currentCount - this is how many more places are left in the Semaphore. If there are no seats left in Semaphore, this number will be negative and will show how many workers are in the queue. There will also be a ConcurrentStack, consisting of TaskCompletionSource'ov - this is just a stack of waiter'ov from which they will be pulled if necessary. Let's write the WaitAsync method.

var decrementedCount = Interlocked.Decrement(ref currentCount); if (decrementedCount >= 0) return Task.CompletedTask; var waiter = new TaskCompletionSource<bool>(); waiters.Push(waiter); return waiter.Task;

First, we decrease the counter, take one place in the Semaphore for ourselves, if we had free places, and then we say: “That's it, you went under the Semaphore”.

If there were no places in Semaphore, we create a TaskCompletionSource, throw it on the stack of waiter'ov and return Task to the outside world. When the time comes, this Task will work, and the worker will be able to continue his work and will go under Semaphore.

Now let's write the Release method.

var countBefore = Interlocked.Increment(ref currentCount) - 1; if (countBefore < 0) { if (waiters.TryPop(out var waiter)) waiter.TrySetResult(true); }

The Release method is as follows:

- Free One Seat in Semaphore

- Increment currentCount

If according to currentCount it can be said whether there are waiter inside the stack that we need to signal about, we pull such waiter from the stack and signal. Here waiter is a TaskCompletionSource. Question to this code: it seems to be logical, but does it even work? What problems are there? There is a nuance related to where continuation'y and TaskCompletionSource'y are launched.

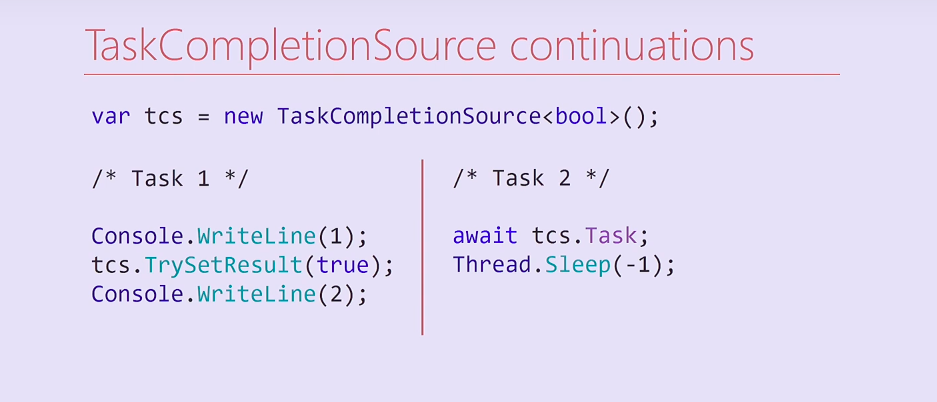

Consider this code. We created a TaskCompletionSource and launched two Task's. The first Task displays a unit, sets the result to a TaskCompletionSource, and then displays a deuce on the console. The second Task waits on this TaskCompletionSource, on its Task, and then forever blocks its thread from the thread pool.

What will happen here? Task 2 at compilation will be divided into two methods, the second of which is a continuation containing Thread.Sleep. After setting the result of the TaskCompletionSource, this continuation will be executed in the same thread in which the first Task was executed. Accordingly, the flow of the first Task will be blocked forever, and the deuce to the console will no longer be printed.

Interestingly, I tried to change this code, and if I removed the output to the console unit, continuation was launched on another thread from the thread pool and the deuce was printed. In which cases the continuation will be executed in the same thread, and in which - will get to the thread pool - a question for readers.

var tcs = new TaskCompletionSource<bool>( TaskCreationOptions.RunContinuationsAsynchronously); /* OR */ Task.Run(() => tcs.TrySetResult(true));

To solve this problem, we can either create a TaskCompletionSource with the corresponding RunContinuationsAsynchronously flag, or call the TrySetResult method inside Task.Run/ThreadPool.QueueUserWorkItem so that it does not run on our thread. If it is executed on our thread, we may have unwanted side effects. In addition, there is a second problem, we will dwell on it in more detail.

Look at the WaitAsync and Release methods and try to find another problem in the Release method.

Most likely, to find her so simply impossible. There is a race here.

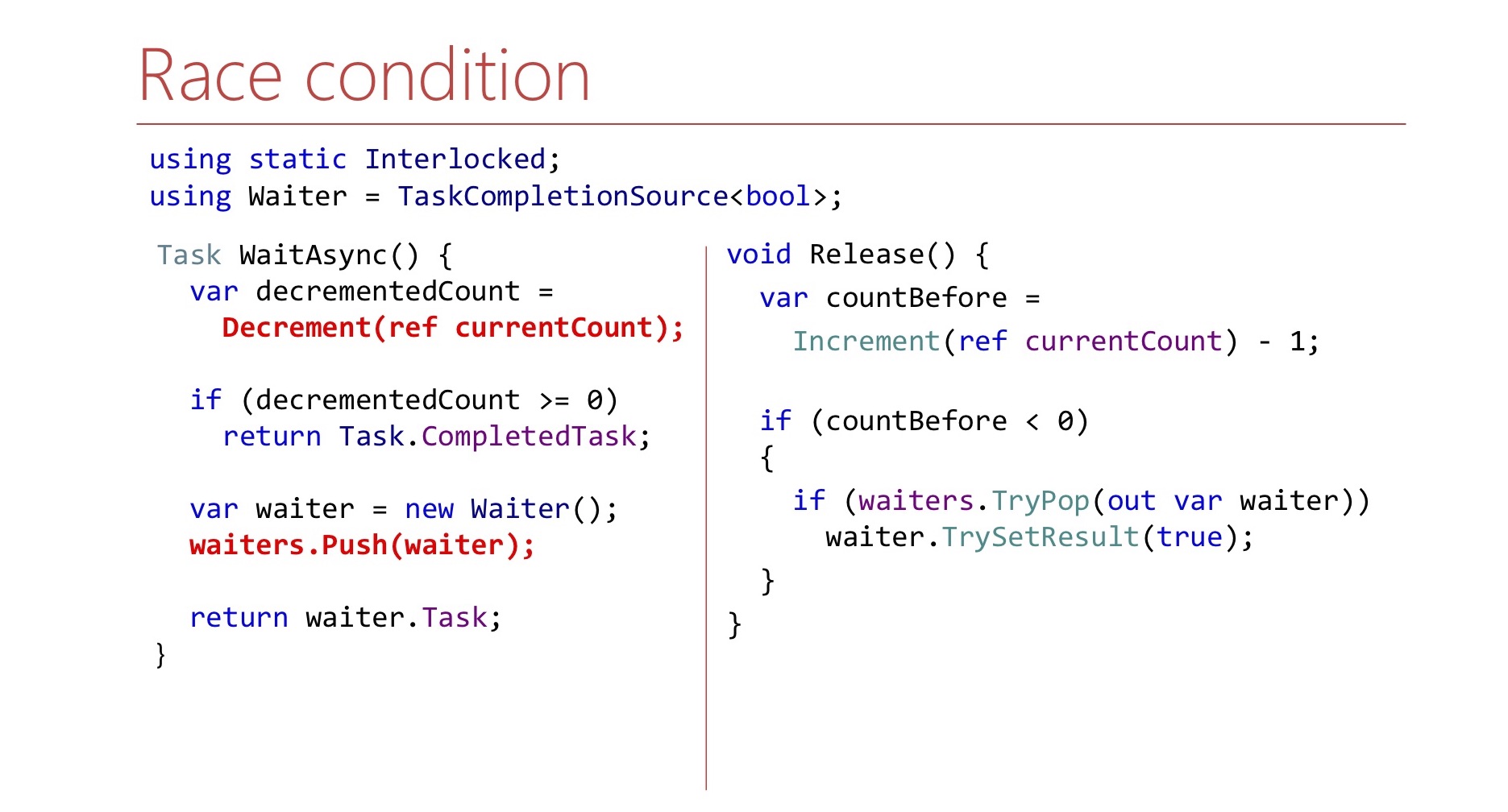

It is due to the fact that in the WaitAsync method the state change is not atomic. First we decrement the counter and only then push the waiter onto the stack. If it so happens that Release is executed between decrement and push, it may exit so that it does not pull anything from the stack. This must be taken into account, and in the Release method, wait for waiter to appear on the stack.

var countBefore = Interlocked.Increment(ref currentCount) - 1; if (countBefore < 0) { Waiter waiter; var spinner = new SpinWait(); while (!waiter.TryPop(out waiter)) spinner.SpinOnce(); waiter.TrySetResult(true); }

Here we do it in a loop until we manage to pull it out. In order not to waste processor cycles once again, we use SpinWait.

In the first few iterations, it will spin in a loop. If it becomes a lot of iterations, waiter will not appear for a long time, then our thread will go to Thread.Sleep, so as not to waste CPU resources once again.

In fact, LIFO-order Semaphore is not only our idea.

LowLevelLifoSemaphoreThere is such a Semaphore in .NET itself, but not in CoreCLR, not in CoreFX, but in CoreRT. It is sometimes quite useful to peek into the .NET repository. There is a Semaphore called LowLevelLifoSemaphore. This Semaphore would not suit us anyway: it is synchronous.

- Synchronous

- On Windows, uses the IO Completion port as a Windows stack

https://github.com/dotnet/corert/blob/master/src/System.Private.CoreLib/src/System/Threading/LowLevelLifoSemaphore.cs

Remarkably, on Windows it works through IO Completion ports. They have the property that threads can wait on them, and these threads will be released just in the LIFO order. This feature is used there, it is really LowLevel.

2.3 Conclusions:

- Do not hope that the filling of the framework will survive under your load

- It is easier to solve a specific problem than the general case.

- Stress testing does not always help

- Beware of blocking

What are the conclusions from this whole story? First of all, do not hope that some classes from the framework that you use from the standard library will cope with your load. I do not want to say that SemaphoreSlim is bad, it just turned out to be unsuitable specifically in this scenario.

It turned out to be much easier for us to write our Semaphore for a specific task. For example, it does not support cancellation of waiting. This feature is available in the usual SemaphoreSlim, we do not have it, but this allowed us to simplify the code.

Load testing, although it helps, may not always help.

.NET , — . lock, : « ?» CPU 100%, lock', , , - .NET. .

.

3: (A)sync IO

/, .

lock convoy, stack trace Overlapped PinnableBufferCache. lock. : Overlapped PinnableBufferCache?

OVERLAPPED — Windows, /. , . , . , lock convoy. , lock convoy, , .

, , .NET 4.5.1 4.5.2. .NET 4.5.2, , .NET 4.5.2. .NET 4.5.1 OverlappedDataCache, Overlapped — , , . , lock-free, ConcurrentStack, . .NET 4.5.2 : OverlappedDataCache PinnableBufferCache.

What's the Difference? PinnableBufferCache , Overlapped , , — . , , . PinnableBufferCache . , lock-free, ConcurrentStack. , . , , - lock-free list lock'.

3.1 PinnableBufferCache

LockConvoy:

lock convoy , - . list , lock , , .

PinnableBufferCache , . :

PinnableBufferCache_System.ThreadingOverlappedData_MinCount

, . : « ! - ». -:

Environment.SetEnvironmentVariable( "PinnableBufferCache_System.Threading.OverlappedData_MinCount", "10000"); new Overlapped().GetHashCode(); for (int i = 0; i < 3; i++) GC.Collect(GC.MaxGeneration, GCCollectionMode.Forced);

? , Overlapped , , . , , , , PinnableBufferCache lock convoy'. , .

.NET Core PinnableBufferCache , OverlappedData . , , Garbage collector , . .NET Core . .NET Framework, , .

3.2 :

- .NET Core

, . , .NET , . , , .NET Core. , , -.

key-value .

4: Concurrent key-value collections

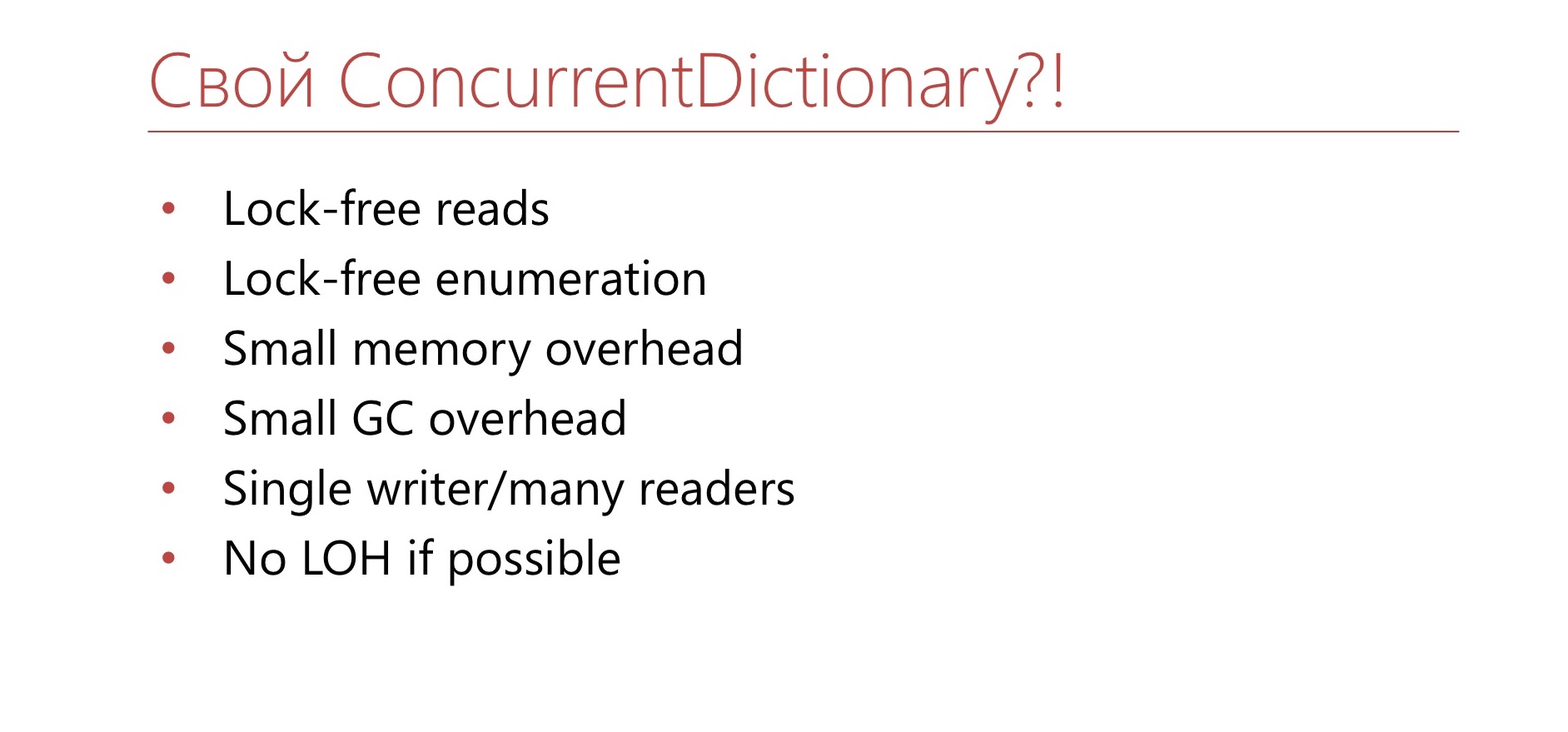

.NET concurrent-. lock-free ConcurrentStack ConcurrentQueu, . ConcurrentDictionary, . lock-free , , . ConcurrentDictionary?

4.1 ConcurrentDictionary

:

- Cache

- Index

Pros:

- (TryAdd/TryUpdate/AddOrUpdate)

- Lock-free

- Lock-free enumeration

, memory-, , . , , .NET Framework. . , , (enumeration) lock-free. , .

, , - .NET. key-value - :

-, bucket'. bucket', . , bucket , .

— , ConcurrentDictionary. ConcurrentDictionary «-» . , , , memory traffic. ConcurrentDictionary, lock'. — .

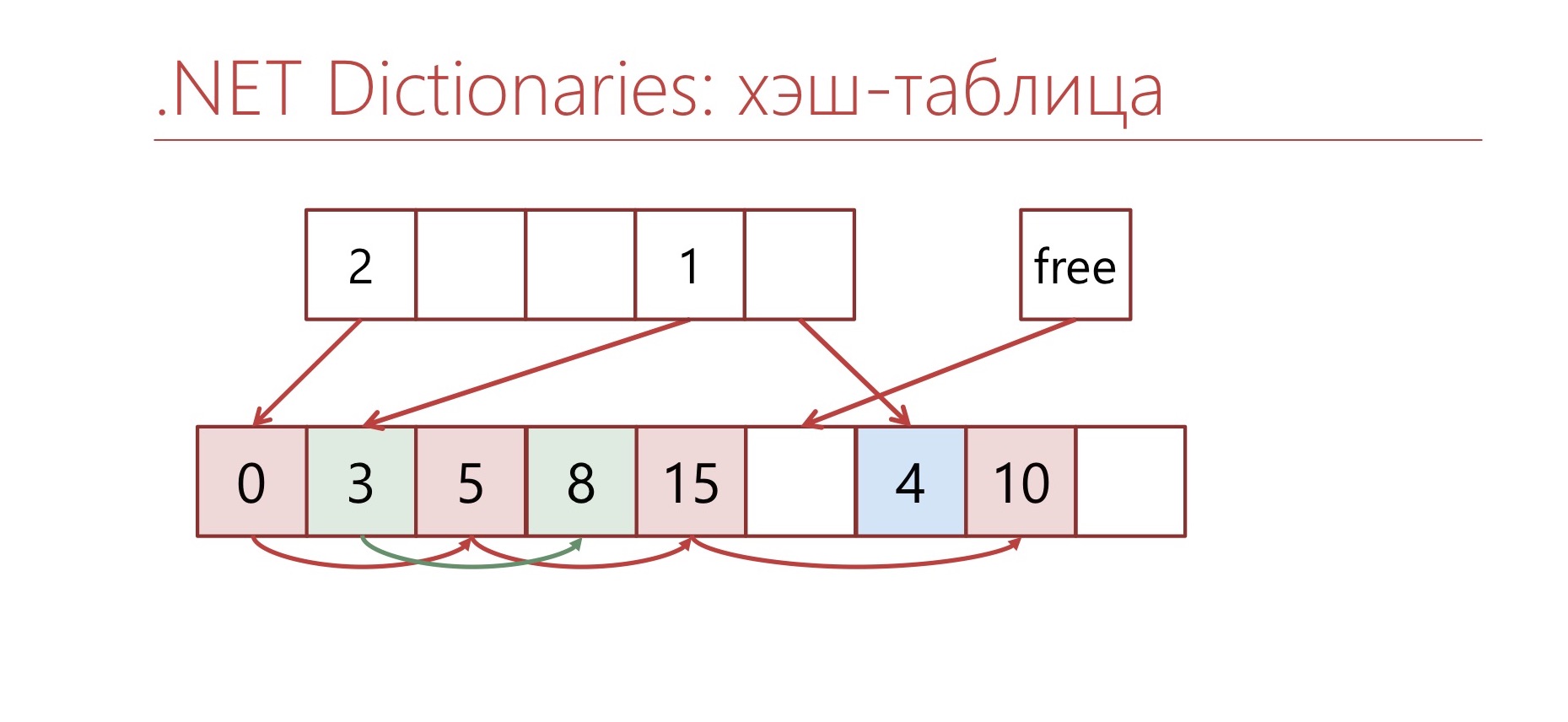

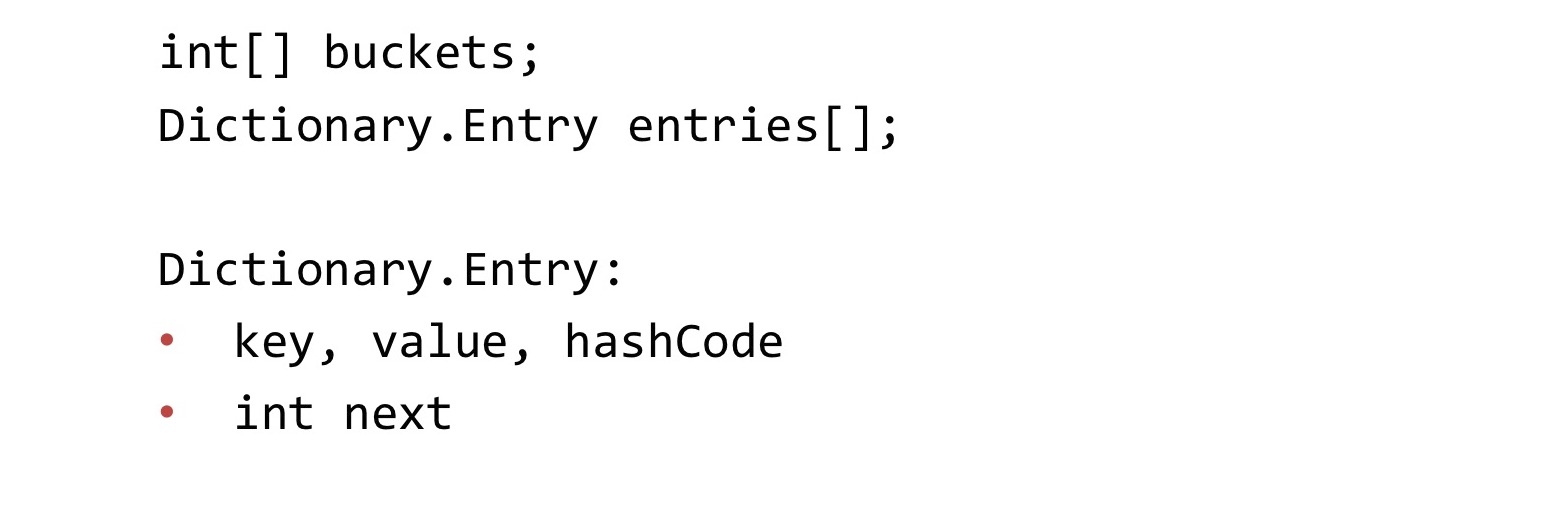

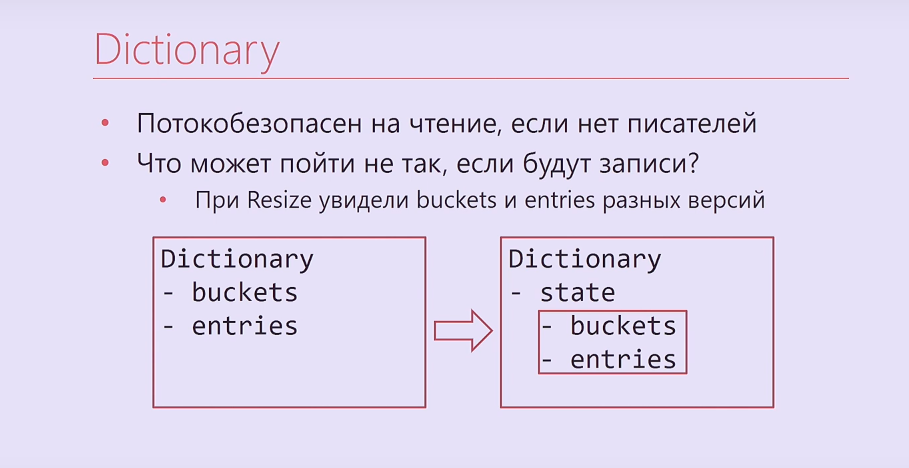

, Dictionary.

Dictionary , Concurrent, . : buckets, entries. buckets bucket' entries. «-» entries. . «-» int, bucket'.

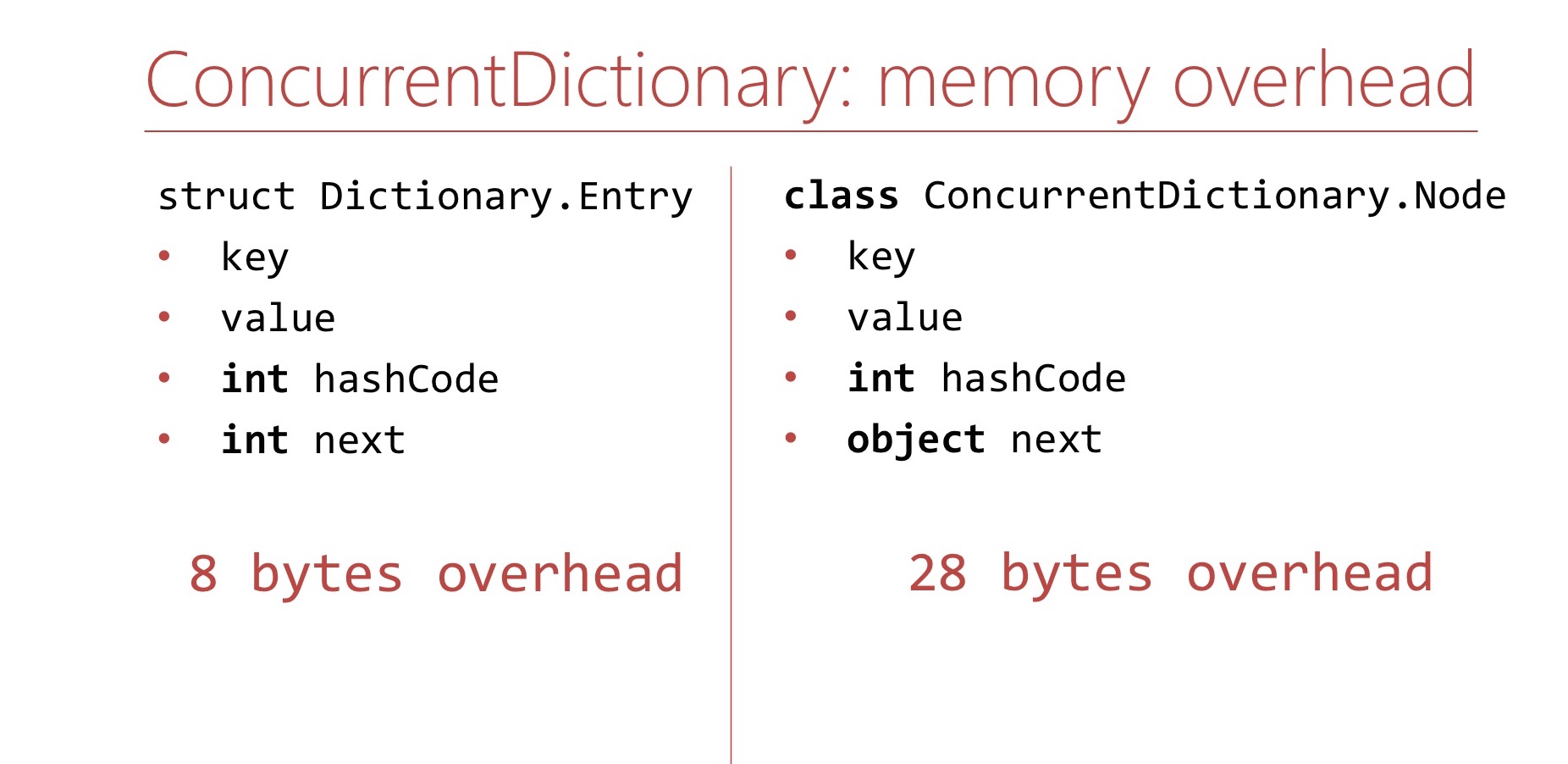

memory overhead, ConcurrentDictionary Dictionary.

Dictionary. Memory overhea' , . Dictionary overhead - , int'. 8 .

ConcurrentDictionary. ConcurrentDictionary ConcurrentDictionary.Node. , . int hashCode . , table ( 16 ), int hashCode . , 64- 28 overhead'. Dictionary.

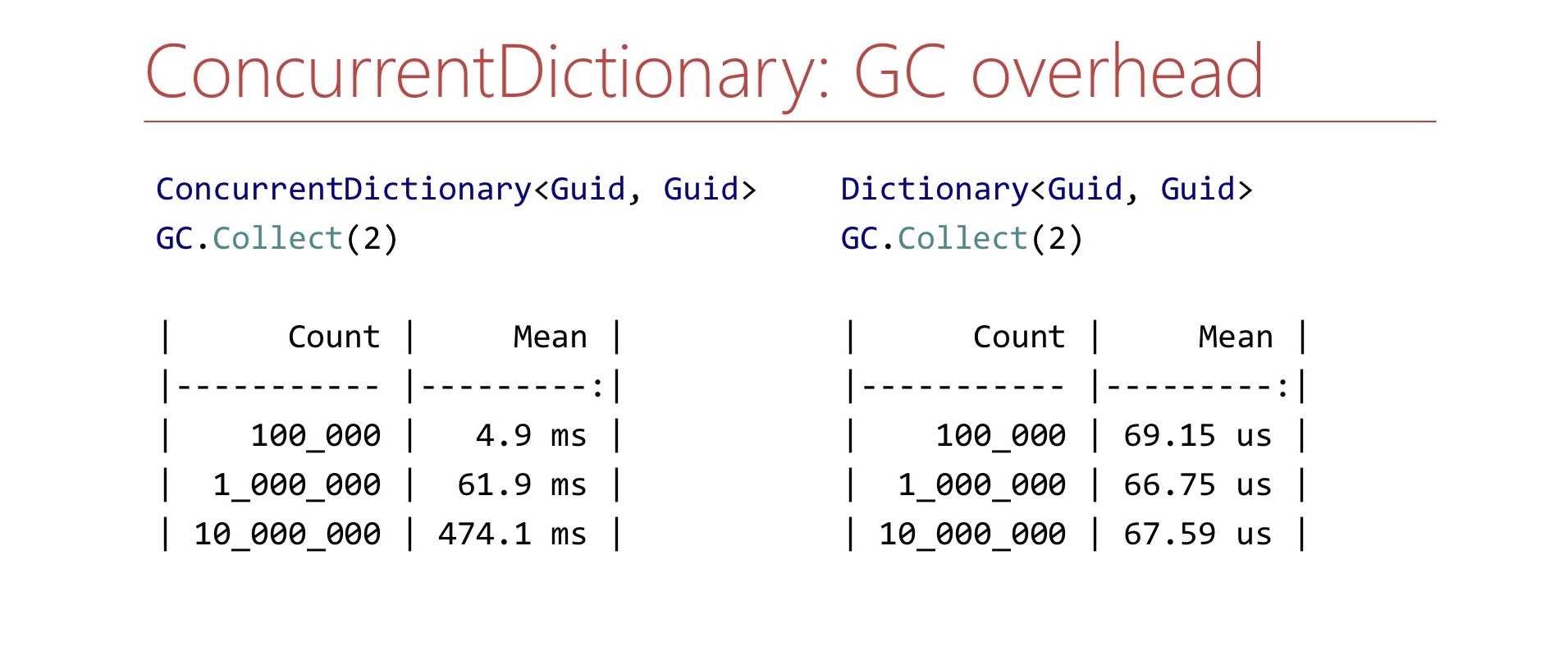

memory overhead', ConcurrentDictionary GC , . Benchmark. ConcurrentDictionary , GC.Collect. ?

. ConcurrentDictionary 10 , , , . Dictionary . , , , . .

, ConcurrentDictionary?

4.2

- TTL

- Dictionary+lock

- Sharding

. ConcurrentDictionary. 10 . , . TTL , . Dictionary lock'. , , lock . Dictionary lock' , - , lock. , .

4.3

- in-memory <Guid,Guid>

- >10 6

. — , in-memory Guid' Guid, . . - - , . , 15 . . Semaphore ConcurrentDictionary.

, lock-free , overhead GC. , . , , , . , - , , . , , Large Object Heap. Why not?

, , Dictionary .

Dictionary bucket', Entry. Entry , , , .

Dictionary , , . , - .

, - ? -, , , , . . Dictionary, , buckets, entries, Interlocked. , .

Dictionary, Dictionary - bucket'. , . , , . , , .

- ,

- , ?

— Resize buckets entries

— -

— Dictionary.Entry

— -

https://blogs.msdn.microsoft.com/tess/2009/12/21/high-cpu-in-net-app-using-a-static-generic-dictionary/

Entry Dictionary. - - . , .

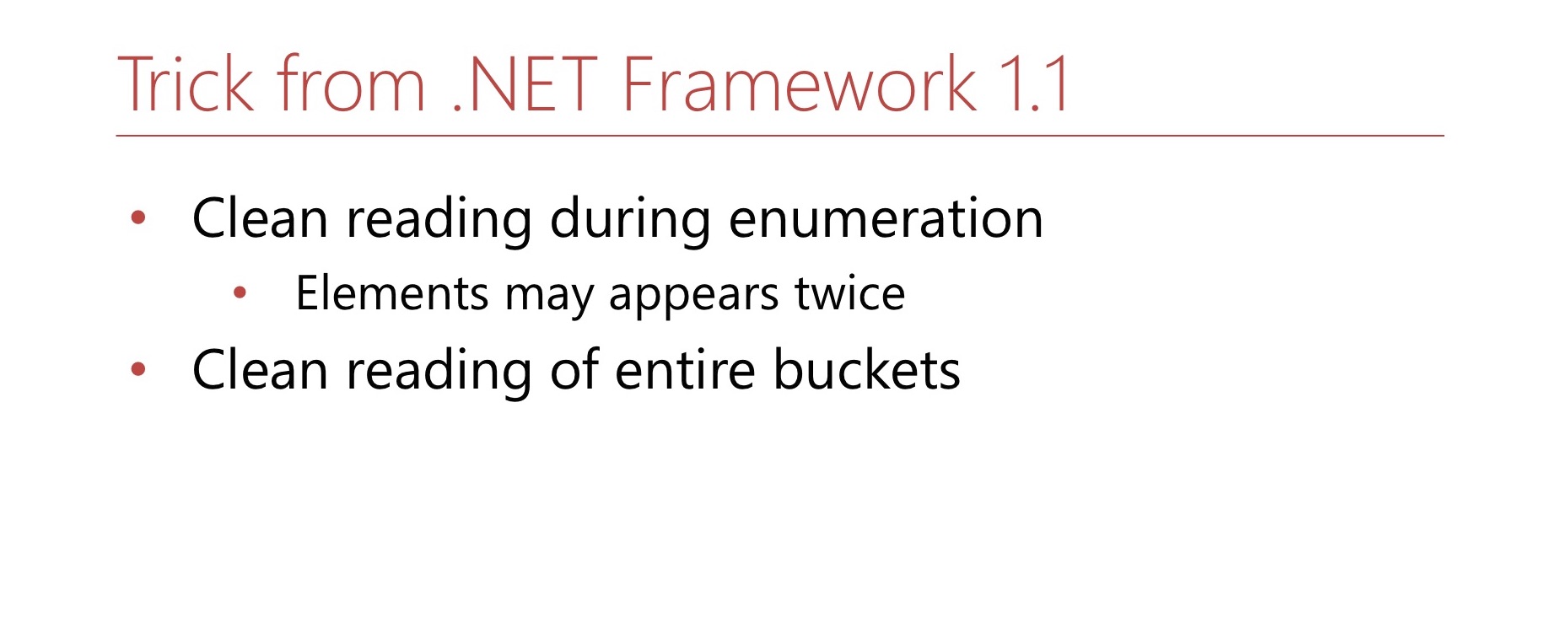

.NET Framework 1.1. Hashtable, Dictionary, object'. MSDN , . , -. . , Hashtable . , .

4.4 Dictionary.Entry

? Dictionary.Entry , , 8 , , , , . How to do it?

bool writing; int version; this.writing = true; buckets[index] = …; this.version++; this.writing = false;

: ( , ) int-. , . , , , , .

bool writing; int version; while (true) { int version = this.version; bucket = bickets[index]; if (this.writing || version != this.version) continue; break; }

, , . , . , 8 .

4.5 -

, .

Dictionary bucket , .

Dictionary, . : 0 2. bucket, 1 2. ? 0. , , 2. . , 2, , , 1. 1 2 — bucket. , , . 1 — , bucket. Hashtable , bucket' -. — double hashing .

4.6

- , resize

Reading

- ,

. , Buckets, Entries ( Buckets, Entries). - , , , , .

. , .

: , , , , . , , .

, , — .

How does he work? , - 2. - Capacity , . — 2. , . 2. ? , , , . - , , 3. , , , , , .

, Hashtable, . , double hashing. , , , .

, , — , . Hashtable. , — — . . , bucket', - , . .

, , lock-free LOH.

lock-free ? MSDN Hashtable , . , , .

, , , bucket'. Dictionary bucket', -, bucket' . - bucket, bucket . , .

, Large Object Heap.

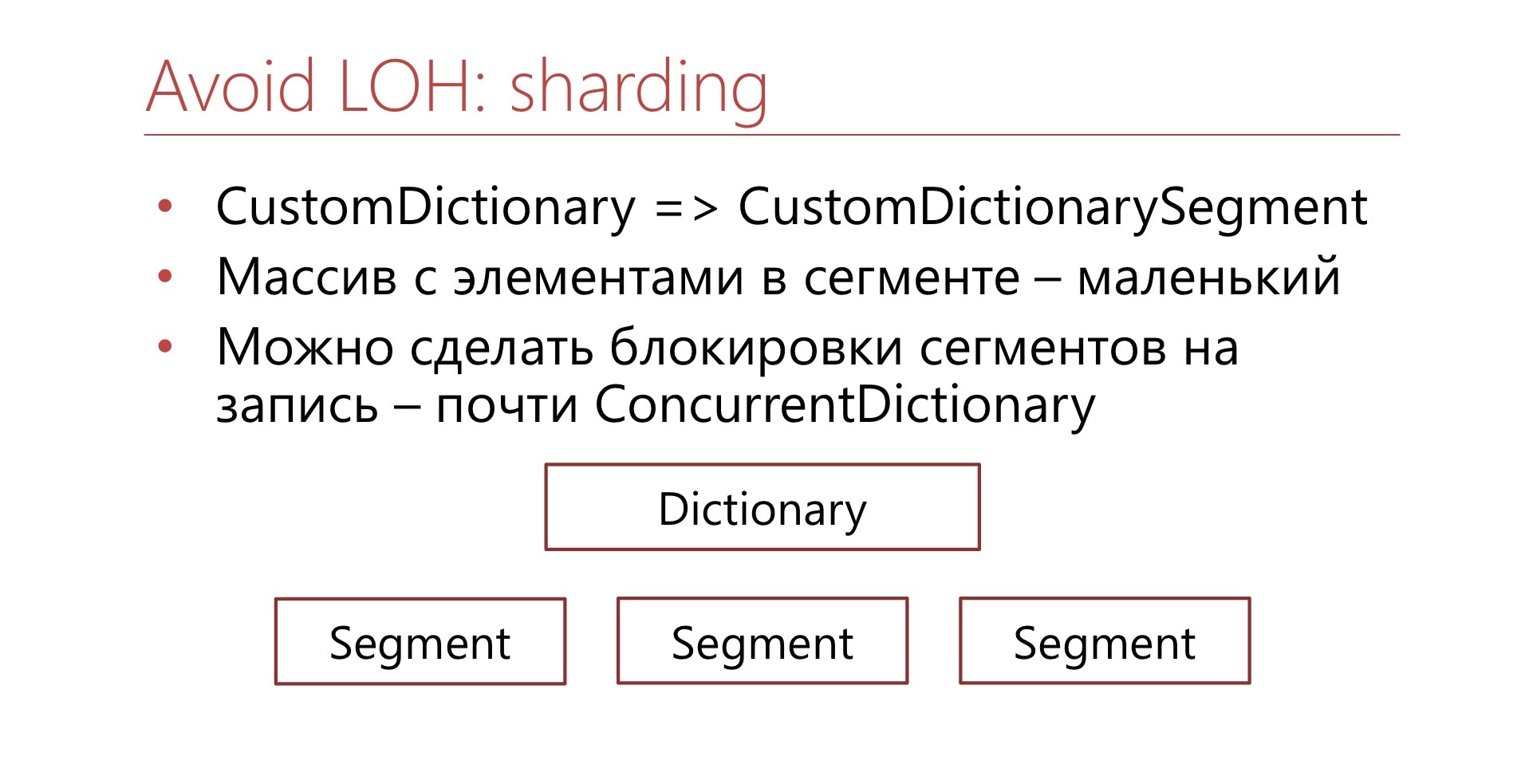

. CustomDictionary CustomDictionarySegment . Dictionary, , . — Dictionary, . , Large Object Heap. , bucket' . , , , bucket, - - .

. ConcurrentDictionary, .NET, , .

4.7

- .NET

- ,

? .NET . . , , . - — - . , , , .

- , , , , . , , , , , . — , , .

useful links

- ConcurrentDictionary: https://habr.com/ru/company/skbkontur/blog/348508/

- https://github.com/vostok/commons.threading

- https://github.com/epeshk/dotnext-2019-threading

— ConcurrentDictionary. , , ( Diafilm ), .

GitHub. — , , LIFO-Semaphore, . , .

6-7 DotNext 2019 Moscow «.NET: » , .NET Framework .NET Core, , .

All Articles