Cisco UCS C240 M5 Rack Server Unboxing

Today we have on our table a new fifth-generation Cisco UCS C240 rack server.

What makes this particular Cisco server interesting for an unboxing, given that it is already in its fifth generation?

First, Cisco servers now support second-generation Intel Xeon Scalable processors. Second, you can now install Intel Optane memory modules and use multiple NVMe drives.

Reasonable questions arise: can't other server vendors do the same? Isn't this just another x86 server? First things first.

Besides working as a stand-alone server, the Cisco C240 M5 can become part of the Cisco UCS architecture. That means connecting it to Fabric Interconnects (FI) and managing the servers entirely through UCS Manager, including Auto Deploy.

So, in front of us is a physical, “bare-metal” Cisco server, in its fifth generation, from a line that has been on the market for more than 10 years.

Now let's go back to basics, recall which components the server consists of, and see what makes the Cisco C240 M5 not just modern but truly advanced.

Let's see what is included in the package.

Box contents: server, KVM dongle, documents, disk, two power cables, mounting kit.

Remove the cover. Push, slide, and that's it. No screwdrivers, no lost bolts.

The green labels catch the eye immediately. Every component that supports hot swapping has one. For example, you can easily replace the fans without powering off the whole server.

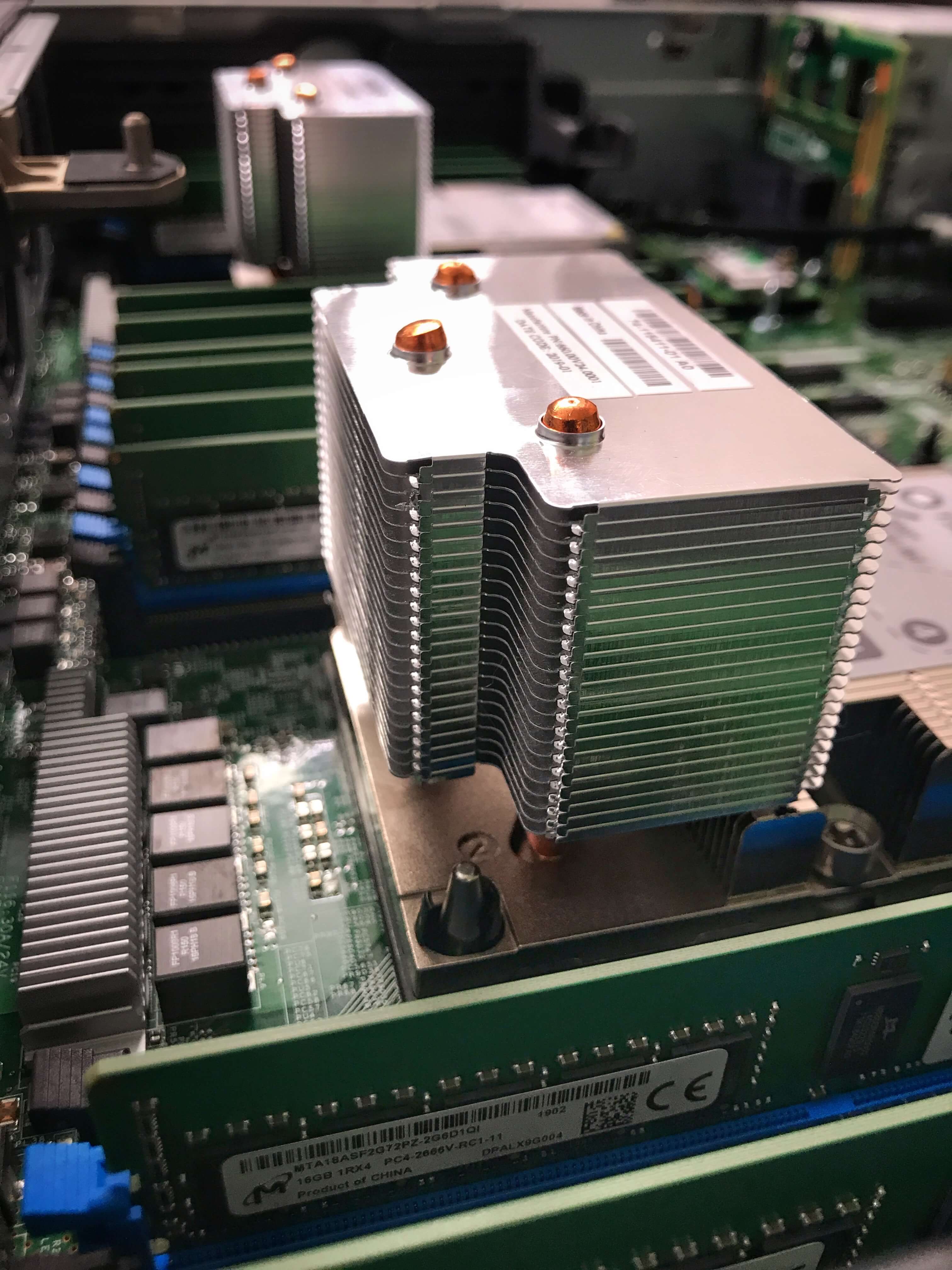

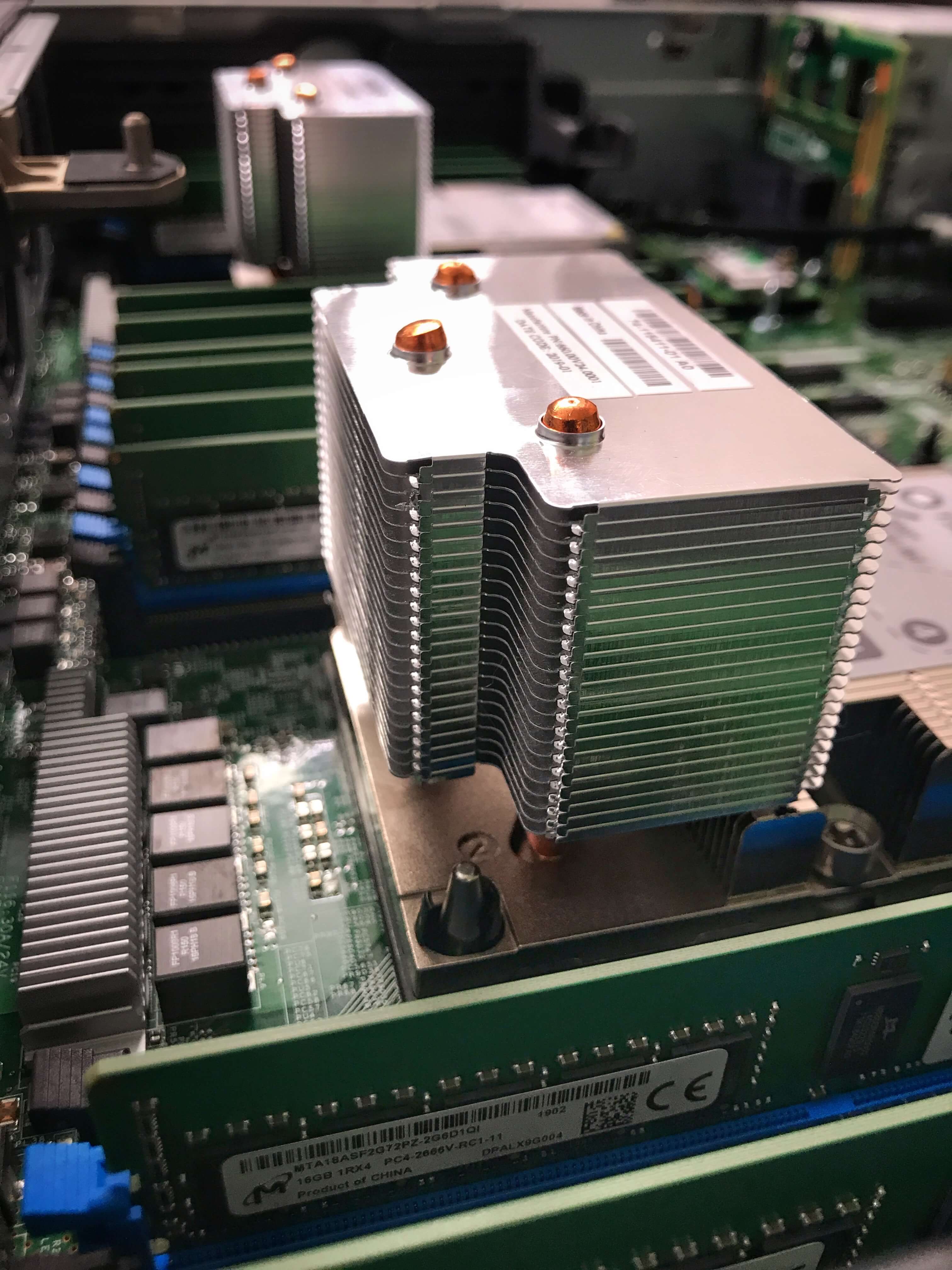

We also see massive heatsinks hiding the new second-generation Intel Xeon Scalable processors. That means up to 56 cores in a 2U server without any cooling problems. More memory is supported as well, up to 1 TB per processor, and the supported memory frequency has increased to 2933 MHz.

Next to the CPUs, we see 24 slots for RAM. You can use memory modules of up to 128 GB each or Intel Optane memory of up to 512 GB per slot.
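
The figures above can be put together with a little arithmetic. The sketch below is only illustrative: it assumes a two-socket server with cores and DIMM slots split evenly between the sockets (the article does not state this explicitly), and it treats the quoted 1 TB per processor as a hard cap, which is also an assumption. The names `per_socket` and `dram_summary` are hypothetical helpers, not part of any Cisco tool.

```python
# Figures quoted in the article.
TOTAL_CORES = 56          # "up to 56 cores per 2U server"
DIMM_SLOTS = 24           # RAM slots next to the CPUs
MAX_DIMM_GB = 128         # largest regular memory module
CPU_MEMORY_CAP_GB = 1024  # "up to 1 TB per processor"

# Assumption (not stated in the article): two CPU sockets,
# with cores and DIMM slots split evenly between them.
SOCKETS = 2


def per_socket(total: int, sockets: int = SOCKETS) -> int:
    """Split a server-wide figure evenly across the sockets."""
    if total % sockets != 0:
        raise ValueError("figure does not divide evenly across sockets")
    return total // sockets


def dram_summary(slots: int = DIMM_SLOTS,
                 dimm_gb: int = MAX_DIMM_GB,
                 cap_per_cpu_gb: int = CPU_MEMORY_CAP_GB,
                 sockets: int = SOCKETS) -> dict:
    """Compare what the slots could physically hold with the per-CPU limit."""
    slots_per_cpu = per_socket(slots, sockets)
    raw_per_cpu = slots_per_cpu * dimm_gb
    # Assumption: the quoted per-processor figure acts as a hard cap.
    capped_per_cpu = min(raw_per_cpu, cap_per_cpu_gb)
    return {
        "slots_per_cpu": slots_per_cpu,
        "raw_per_cpu_gb": raw_per_cpu,
        "capped_per_cpu_gb": capped_per_cpu,
        "raw_total_gb": raw_per_cpu * sockets,
        "capped_total_gb": capped_per_cpu * sockets,
    }


if __name__ == "__main__":
    print("cores per socket:", per_socket(TOTAL_CORES))
    print(dram_summary())
```

Under these assumptions the slots could physically hold more memory than the quoted per-processor figure. Whether that figure actually limits a fully populated server depends on the processor model, so the capped numbers are best read as illustrative.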

Intel Optane delivers very high processing speeds. For example, it can be used as an ultra-fast local SSD.

Now more and more requests from customers begin with the words: “I want more drives, more NVMe drives in one system.”

On the front we see eight 2.5-inch drive bays. A platform variant with the standard 24 front-panel bays is also available to order.

Depending on the configuration, up to eight NVMe drives in the U.2 form factor can be installed in the first bays.

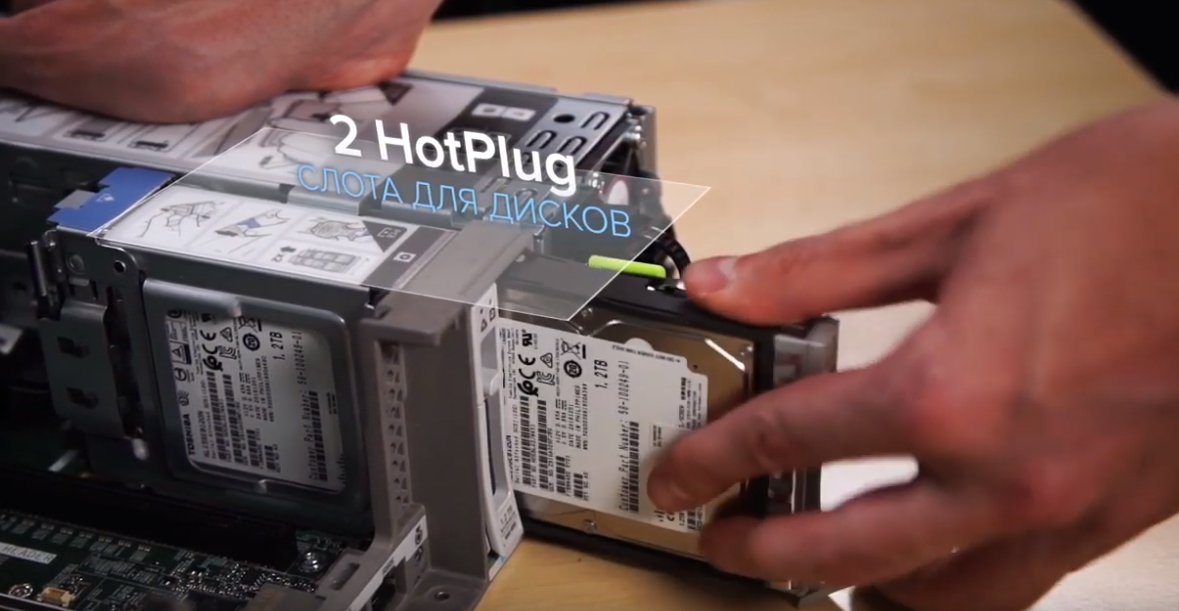

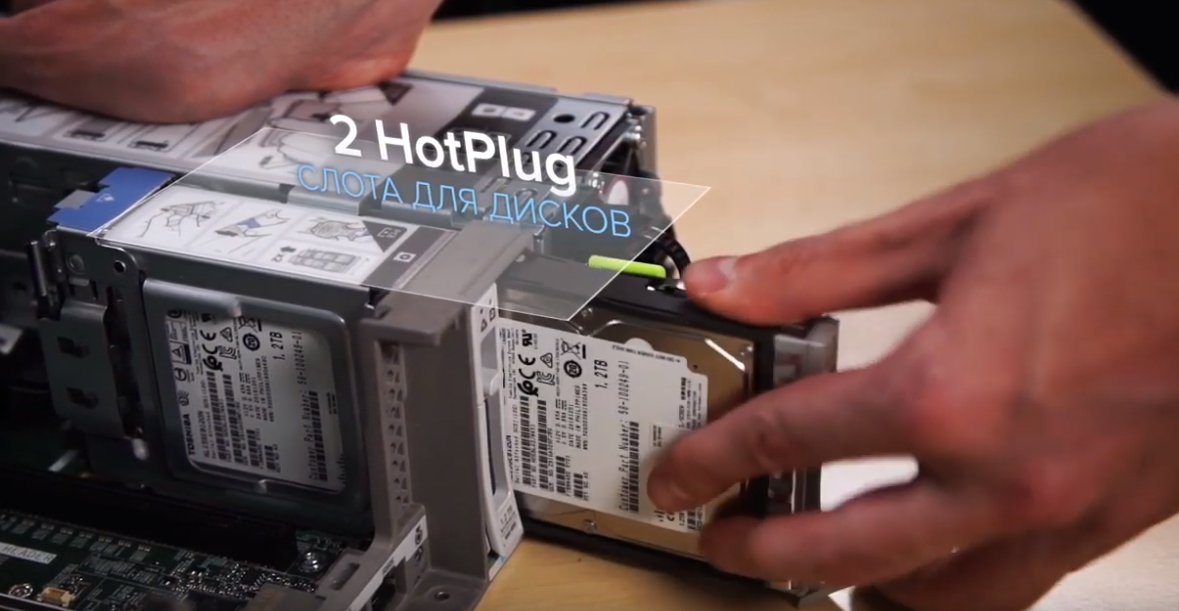

The C240 platform is generally very popular with big data customers. Their main request is to have drives for local boot, preferably hot-pluggable.

Cisco's answer to this request in the C240 M5 was to add two hot-swap drive slots at the rear of the server.

They are located to the right of the expansion slots, next to the power supplies. Any type of drive can be used: SAS, SATA, SSD, NVMe.

Nearby we see the 1600 W power supplies. They are also hot-pluggable and carry green labels.

For the disk subsystem, a dedicated slot accepts either an LSI RAID controller with 2 GB of cache or an HBA card for direct pass-through.

The pass-through approach is used, for example, to build the Cisco HyperFlex hyperconverged solution.

There is another type of customer who doesn't need drives at all. They don't want to dedicate a whole RAID controller to the hypervisor, but they like the 2U chassis for its ease of maintenance.

Cisco also has a solution for them.

Introducing the FlexFlash module:

Two SD cards of up to 128 GB with mirroring support, intended for installing a hypervisor such as VMware ESXi. This is the option that we at ITGLOBAL.COM use when building our own sites around the world.

For customers who need more space to boot the OS, there is a module option for two SATA SSDs in the M.2 format, each with a capacity of 240 or 960 GB. By default, this uses software RAID.

For the 240 GB drives, there is also the option of the Cisco Boot-Optimized M.2 RAID Controller, a separate hardware RAID controller for these two SSDs.

All of this is supported by all operating systems: VMware, Windows, and various Linux distributions.
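
The boot-media choices described above (FlexFlash and the two M.2 sizes) can be summarized as a small lookup. This is a minimal sketch, not a Cisco sizing tool: the `BootOption` type and `pick_boot_option` function are hypothetical, and the code assumes each pair of devices is mirrored so that usable space equals the capacity of one device. The article confirms mirroring only for FlexFlash; for M.2 it mentions software or hardware RAID without naming a level.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BootOption:
    name: str
    capacity_gb: int  # capacity of ONE device in the pair
    protection: str   # how the pair is protected, as described in the article


# Options and maximum sizes as listed in the article.
BOOT_OPTIONS = [
    BootOption("FlexFlash (2 x SD card)", 128, "mirroring"),
    BootOption("M.2 SATA SSD pair, 240 GB", 240,
               "software RAID, or the hardware M.2 RAID controller"),
    BootOption("M.2 SATA SSD pair, 960 GB", 960, "software RAID"),
]


def pick_boot_option(required_gb: int) -> Optional[BootOption]:
    """Return the smallest option that covers the requested boot space.

    Assumption: the pair is mirrored, so usable space is the capacity
    of a single device. Returns None if nothing is large enough.
    """
    for option in sorted(BOOT_OPTIONS, key=lambda o: o.capacity_gb):
        if required_gb <= option.capacity_gb:
            return option
    return None


if __name__ == "__main__":
    for need in (32, 200, 500, 2000):
        choice = pick_boot_option(need)
        print(need, "GB ->", choice.name if choice else "no built-in boot option fits")
```

Picking the smallest option that fits mirrors the article's framing: FlexFlash for a hypervisor, M.2 for anything that needs more room.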

There are six PCIe slots, which is typical for a 2U platform.

There is plenty of space inside. Two full-size NVIDIA graphics accelerators fit easily into the server, for example the Tesla M10 for virtual desktop projects or the latest V100 with 32 GB for artificial intelligence tasks. We will use it in the next unboxing.

With ports, the situation is as follows:

- Console port;

- Dedicated Gigabit management port;

- Two USB 3.0 ports;

- Integrated dual-port Intel X550 10GBASE-T network adapter;

- Optional mLOM card: Cisco VIC 1387 dual-port 40 Gb adapter.

Only Cisco VIC cards can be installed in the mLOM slot, and they handle both LAN and SAN traffic. When the server is used as part of a Cisco UCS fabric, this approach provides a single connection to the LAN and SAN networks without the need for a separate FC adapter.

Let's install the NVIDIA V100 accelerator. We remove the second riser, take out the slot blank, insert the card into the PCIe slot, close the plastic latch and then the blank. Then we connect the additional power: first to the card, then to the riser. Put the riser back in place. The whole procedure needs no screwdrivers or hammers. Quick and straightforward.

In one of our upcoming articles we will show its initial setup.

We are also ready to answer all questions here or in the comments.
