Real-time neural networks for hand tracking

Recently, researchers from GoogleAI presented their approach to tracking hands and recognizing gestures in real time. I had been working on a similar task, so I decided to figure out how they approached the problem, which technologies they used, and how they achieved good accuracy while running in real time on a mobile device. I also ran the model on Android and tested it in real conditions.

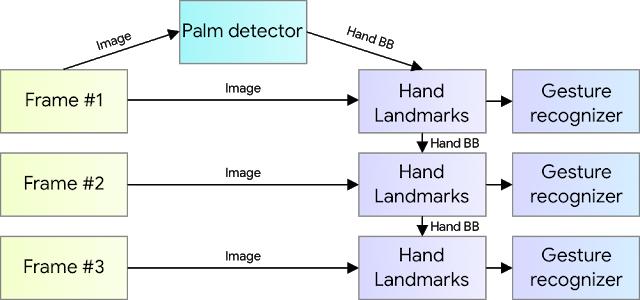

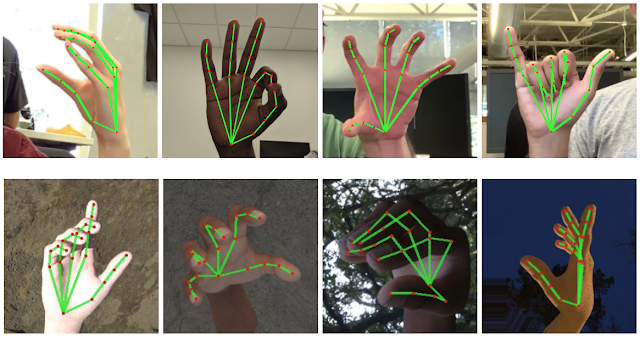

Above: real hand images with annotated key points. Below: synthetic hand images rendered from a 3D model.

Why is it important?

Hand recognition is a non-trivial task that is also in wide demand. The technology can be used in augmented reality applications for interacting with virtual objects. It can also serve as the basis for understanding sign language or for building gesture-based control interfaces.

What is the difficulty?

Robust real-time hand perception is a real challenge for computer vision: hands often occlude themselves or each other (crossed fingers, handshakes). Faces have high-contrast patterns, for example around the eyes and mouth, but hands lack such features, which makes it hard to detect them reliably from visual features alone.

Hands are constantly in motion, change their angle and overlap each other. For an acceptable user experience, recognition has to run at a high frame rate (25+ FPS). On top of that, all of this should work on mobile devices, which tightens both the speed requirements and the resource limits.

What did GoogleAI do?

They implemented a technology for precise tracking of hands and fingers using machine learning (ML). The program determines 21 key points of the hand in 3D space (x, y and depth) and uses this data to classify the gesture the hand is showing. All of this is done from a single video frame, runs in real time on mobile devices, and scales to multiple hands.

How did they do it?

The approach is implemented using MediaPipe, an open-source cross-platform framework for building data processing pipelines (video, audio, time series). It is something like DeepStream from Nvidia, but with a bunch of extra features and cross-platform support.

The solution consists of 3 main components working together:

Palm Detector (BlazePalm)

- takes the full image from the video

- returns an oriented bounding box of the hand

Model for determining key points on the hand

- takes a cropped picture of a hand

- returns 21 key points of the hand in 3D space plus a confidence score (more details later in the article)

Gesture recognition algorithm

- takes the key points of the hand

- returns the name of the gesture that the hand shows

The architecture is similar to the one used for pose estimation. Because the key point model receives a precisely cropped and aligned hand image, the need for data augmentation (rotation, translation and scaling) is significantly reduced, and the model can focus on the accuracy of coordinate prediction instead.

Palm detector

To find the palm, a model called BlazePalm is used: a single-shot detector (SSD) optimized for real-time operation on mobile devices.

The GoogleAI team trained a palm detector instead of a whole-hand detector (the palm here means the base of the hand without the fingers). The advantage of this approach is that a palm or fist is easier to recognize than a whole hand with gesturing fingers. In addition, palms can be described with square bounding boxes (anchors), ignoring other aspect ratios, which reduces the number of anchors required by a factor of 3-5.

A Feature Pyramid Network (FPN) feature extractor was also used to capture more of the image context, even for small objects.

Focal loss was chosen as the loss function, since it copes well with the class imbalance that arises when a large number of anchors is generated.
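The anchor saving can be checked with simple arithmetic. The sketch below is only an illustration: the feature map sizes and the aspect ratio sets are assumptions made for this example, not values taken from BlazePalm. It counts one anchor per grid cell and aspect ratio, as in a typical SSD-style detector.

```python
def count_anchors(grid_sizes, aspect_ratios):
    """Count SSD-style anchors: one per (grid cell, aspect ratio) pair."""
    cells = sum(size * size for size in grid_sizes)
    return cells * len(aspect_ratios)


# Hypothetical feature map resolutions, chosen only for illustration.
GRID_SIZES = (32, 16, 8)

square_only = count_anchors(GRID_SIZES, aspect_ratios=(1.0,))
general = count_anchors(GRID_SIZES, aspect_ratios=(0.5, 1.0, 2.0))

print(square_only, general, general / square_only)
```

With these assumed settings, restricting the detector to square anchors cuts their number by exactly the number of aspect ratios that were dropped, which is where a 3-5 times reduction comes from.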

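Classical cross entropy: $\mathrm{CE}(p_t) = -\log(p_t)$

Focal loss: $\mathrm{FL}(p_t) = -(1 - p_t)^{\gamma} \log(p_t)$, where $p_t$ is the predicted probability of the true class and $\gamma \ge 0$ is the focusing parameter that down-weights easy examples.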

More information about focal loss can be found in the excellent paper from Facebook AI Research (recommended reading).
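The difference between the two losses is easy to see numerically. This is a minimal sketch that assumes the formulation from the focal loss paper, in which the factor (1 - pt) is raised to a focusing exponent gamma. The value gamma = 2 and the function names are assumptions made for this example, not settings reported for BlazePalm.

```python
import math


def cross_entropy(p_t):
    """Cross entropy for the probability p_t assigned to the true class."""
    return -math.log(p_t)


def focal_loss(p_t, gamma=2.0):
    """Focal loss: cross entropy scaled by the modulating factor (1 - p_t) ** gamma."""
    return -((1.0 - p_t) ** gamma) * math.log(p_t)


# Compare an easy, a medium and a hard example.
for p_t in (0.9, 0.5, 0.1):
    print(f"p_t={p_t}: CE={cross_entropy(p_t):.4f}  FL={focal_loss(p_t):.4f}")
```

For a confidently classified example (pt = 0.9) the loss shrinks by a factor of about 100, while a hard example (pt = 0.1) keeps about 81% of its cross-entropy loss. This is why the many easy background anchors stop dominating training.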

Using the above techniques, an average precision of 95.7% was achieved in palm detection. With plain cross entropy and without FPN, the result is only 86.22%.

Defining key points

After the palm detector has found the position of the palm in the full image, the region is shifted up by a certain factor and expanded to cover the entire hand. A regression problem is then solved on the cropped image: the exact positions of 21 points in 3D space are predicted.

For training, 30,000 real images were annotated manually. A realistic 3D model of the hand was also built and used to generate additional synthetic examples on different backgrounds.
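The shift-and-expand step can be sketched as follows. The article does not give the actual factors, so the `shift` and `scale` defaults below are placeholders, and the function name and rotation convention are assumptions made for this example.

```python
import math


def expand_palm_box(cx, cy, size, rotation=0.0, shift=0.5, scale=2.6):
    """Turn a square palm box into a larger square region covering the hand.

    cx, cy   -- center of the palm box in image coordinates (y grows downward)
    size     -- side length of the square palm box
    rotation -- hand orientation in radians; 0 means the fingers point straight
                up in the image, positive values rotate clockwise
    shift    -- placeholder: how far to move the center toward the fingers,
                as a fraction of the box size
    scale    -- placeholder: how much to enlarge the box
    """
    # Unit vector pointing from the palm toward the fingers.
    up_x, up_y = math.sin(rotation), -math.cos(rotation)
    new_cx = cx + up_x * shift * size
    new_cy = cy + up_y * shift * size
    return new_cx, new_cy, size * scale


print(expand_palm_box(100.0, 100.0, 40.0))
```

Moving the center along the hand's own orientation, rather than always along the image's vertical axis, keeps the fingers inside the crop when the hand is rotated.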

Gesture recognition

For gesture recognition, a simple algorithm is used that determines the state of each finger (for example, bent or straight) from the key points of the hand. The resulting set of finger states is then matched against a predefined set of gestures. This simple but effective method recognizes basic gestures with good quality.
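A rough sketch of this idea is shown below. It assumes the usual 21-point layout (wrist at index 0, then four points per finger from thumb to pinky). The straight-or-bent rule and the gesture table are simplifications invented for this example, not the rules used in the original solution.

```python
from math import dist

WRIST = 0

# (middle joint index, fingertip index) for each finger, thumb to pinky.
FINGERS = {
    "thumb": (2, 4),
    "index": (6, 8),
    "middle": (10, 12),
    "ring": (14, 16),
    "pinky": (18, 20),
}

# Hypothetical gesture table: finger states (thumb..pinky) -> gesture name.
GESTURES = {
    (False, False, False, False, False): "fist",
    (False, True, False, False, False): "pointing",
    (False, True, True, False, False): "victory",
    (True, True, True, True, True): "open palm",
}


def finger_states(points):
    """Return one boolean per finger: True if the finger looks straight.

    Assumed heuristic: a finger is straight when its tip is farther
    from the wrist than its middle joint.
    """
    wrist = points[WRIST]
    return tuple(
        dist(points[tip], wrist) > dist(points[joint], wrist)
        for joint, tip in FINGERS.values()
    )


def classify_gesture(points):
    """Match the finger states against the gesture table."""
    return GESTURES.get(finger_states(points), "unknown")
```

The distance heuristic is crude, especially for the thumb. Comparing joint angles would be more robust, but the overall structure (finger states first, table lookup second) stays the same.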

Optimizations

The main secret of fast real-time inference lies in one important optimization. The palm detector, which is the most expensive step, runs only when necessary (quite rarely). This is achieved by deriving the hand region for the next frame from the key points found in the previous frame.

To make this approach robust, another output was added to the key point model: a scalar that shows how confident the model is that a hand is present in the cropped image and that it is correctly aligned. When the confidence falls below a certain threshold, the palm detector is run again on the entire frame.
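The control flow of this optimization can be sketched as a small loop. Everything here is hypothetical: the three callables stand in for the palm detector, the key point model and the step that derives a region from key points, and the threshold value is a placeholder. For simplicity, the sketch re-runs the detector on the frame after the one where tracking was lost.

```python
def track_hand(frames, detect_palm, predict_key_points, region_from_key_points,
               threshold=0.5):
    """Run the expensive palm detector only when the hand region is unknown.

    detect_palm(frame)                 -> region or None   (expensive)
    predict_key_points(frame, region)  -> (points, confidence)
    region_from_key_points(points)     -> region for the next frame
    """
    region = None
    results = []
    for frame in frames:
        if region is None:
            region = detect_palm(frame)
            if region is None:
                results.append(None)  # no hand in this frame
                continue
        points, confidence = predict_key_points(frame, region)
        if confidence < threshold:
            # Tracking lost: forget the region so the detector runs again.
            region = None
            results.append(None)
        else:
            # Reuse the key points to predict the region for the next frame.
            region = region_from_key_points(points)
            results.append(points)
    return results
```

As long as the confidence stays above the threshold, every frame costs only one run of the key point model.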

Reality test

I ran this solution on an Android device (Xiaomi Redmi Note 5) to test it in real conditions. The model behaves well: it maps the hand skeleton correctly and estimates depth at a decent frame rate.

On the downside, accuracy and speed start to drop when the hand keeps moving across the frame. This happens because the model loses the hand position during movement and has to restart the detector again and again. If finding a moving hand quickly matters more to you than recognizing gestures, you should look at other approaches.

Some problems also occur when the hand overlaps the face or a similarly complex background. Otherwise, this is great work from GoogleAI and a big contribution to the future development of the technology.

GoogleAI Blog Article

Github mediapipe hand tracking
