Site statistics and your own small data warehouse

For many years the Webalizer utility and the Google Analytics tool helped me get an idea of what was happening on my websites. Now I understand that they provide very little useful information. If you have access to your own access.log file, it is easy to work out the statistics yourself with quite basic tools: sqlite, HTML, SQL, and any scripting language.

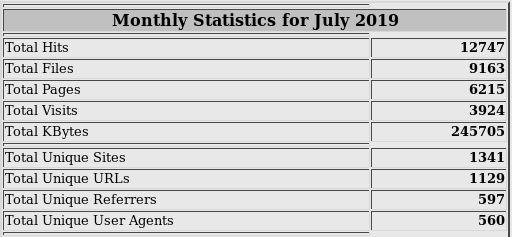

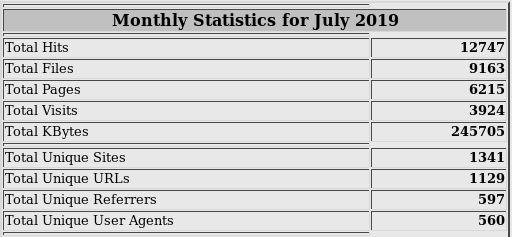

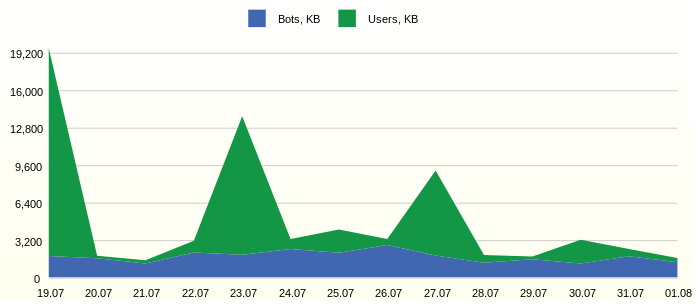

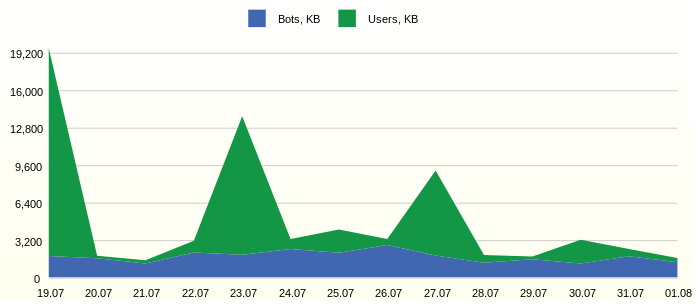

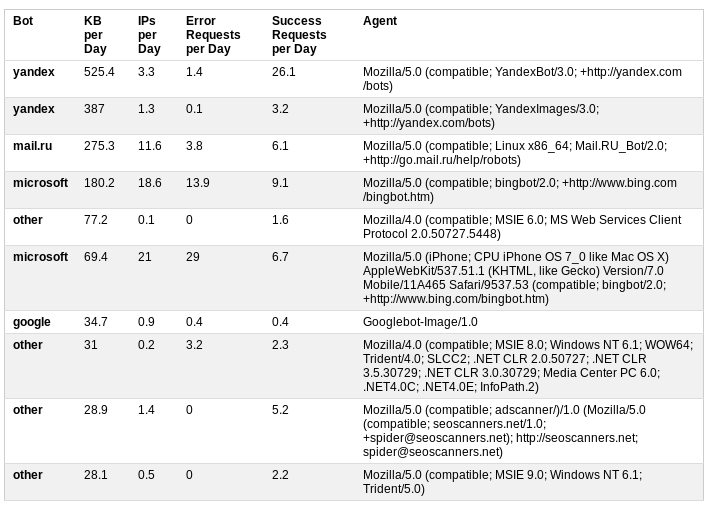

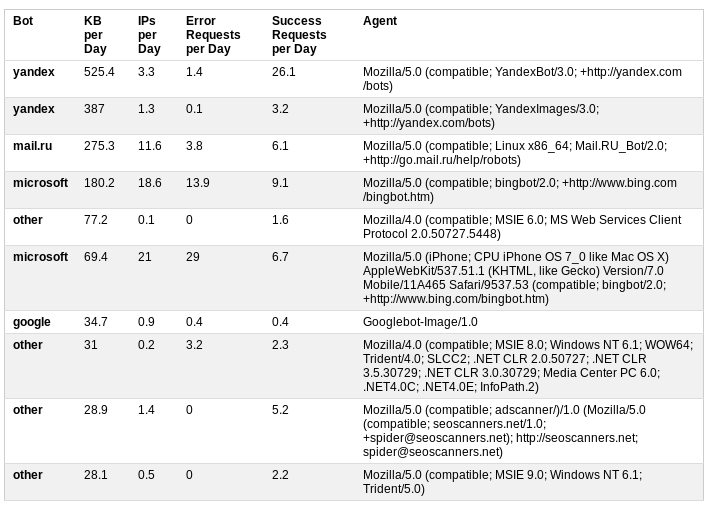

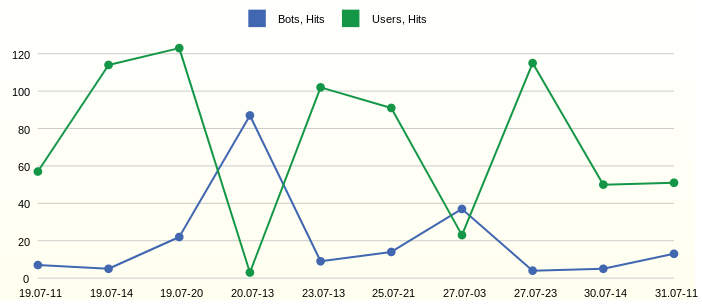

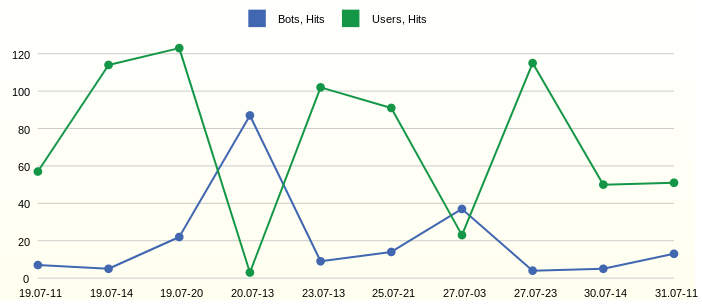

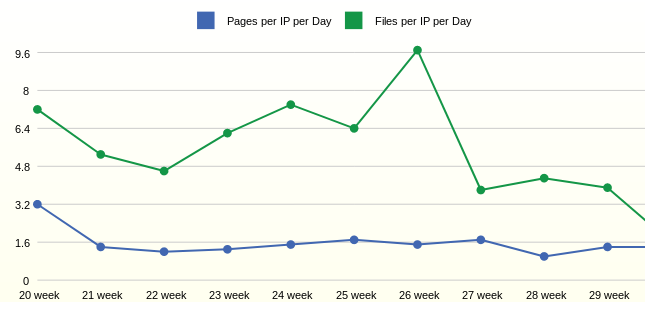

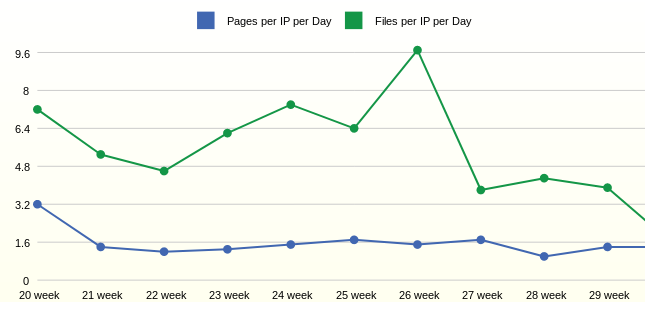

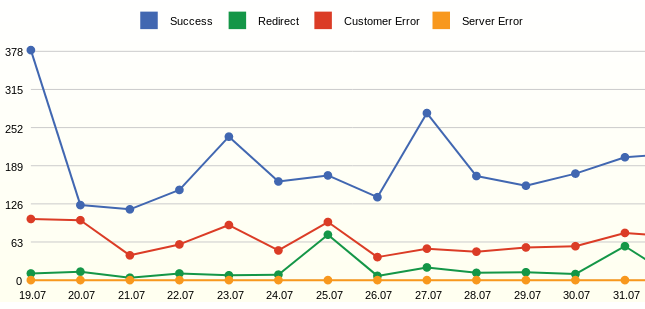

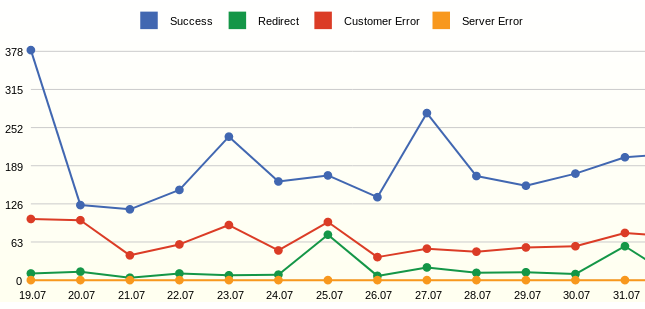

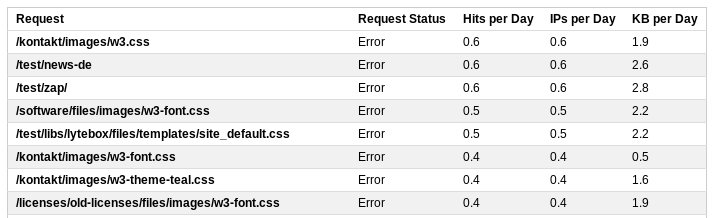

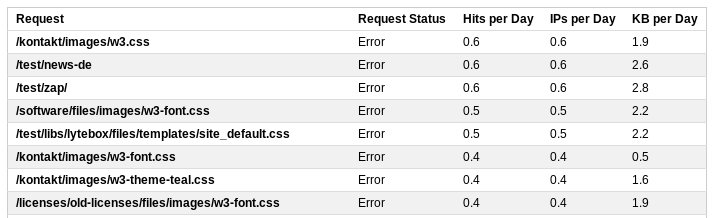

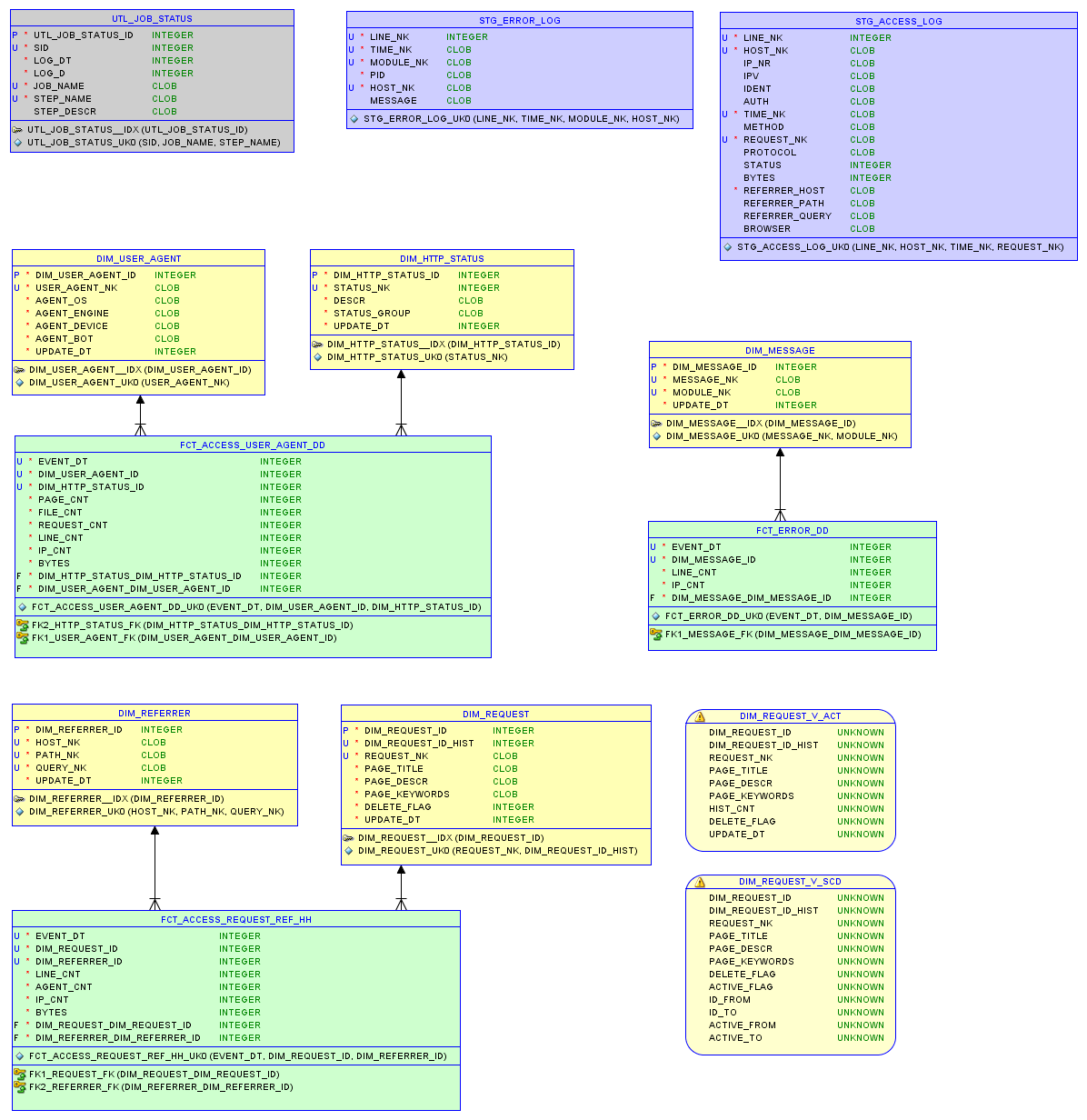

The data source for Webalizer is the server's access.log file. This is what its bars and numbers look like; only the total amount of traffic is clear from them:

Tools like Google Analytics collect their data from the loaded page themselves. They show a couple of diagrams and lines from which it is often hard to draw the right conclusions. Perhaps I should have put in more effort? I do not know.

So, what did I want to see in the site visit statistics?

User and bot traffic

Site traffic is often capped, so you need to see how much of it is useful traffic. For example, like this:

SQL report query

    SELECT
      1 AS 'StackedArea: Traffic generated by Users and Bots',
      strftime('%d.%m', datetime(FCT.EVENT_DT, 'unixepoch')) AS 'Day',
      SUM(CASE WHEN USG.AGENT_BOT != 'na' THEN FCT.BYTES ELSE 0 END) / 1000 AS 'Bots, KB',
      SUM(CASE WHEN USG.AGENT_BOT = 'na' THEN FCT.BYTES ELSE 0 END) / 1000 AS 'Users, KB'
    FROM FCT_ACCESS_USER_AGENT_DD FCT,
         DIM_USER_AGENT USG
    WHERE FCT.DIM_USER_AGENT_ID = USG.DIM_USER_AGENT_ID
      AND datetime(FCT.EVENT_DT, 'unixepoch') >= date('now', '-14 day')
    GROUP BY strftime('%d.%m', datetime(FCT.EVENT_DT, 'unixepoch'))
    ORDER BY FCT.EVENT_DT

The graph shows constant bot activity. It would be interesting to study the most active bots in detail.

Annoying bots

We classify bots based on the user agent string. Additional statistics on daily traffic and on the number of successful and failed requests give a good picture of bot activity.

SQL report query

    SELECT
      1 AS 'Table: Annoying Bots',
      MAX(USG.AGENT_BOT) AS 'Bot',
      ROUND(SUM(FCT.BYTES)/1000 / 14.0, 1) AS 'KB per Day',
      ROUND(SUM(FCT.IP_CNT) / 14.0, 1) AS 'IPs per Day',
      ROUND(SUM(CASE WHEN STS.STATUS_GROUP IN ('Client Error', 'Server Error')
                     THEN FCT.REQUEST_CNT / 14.0 ELSE 0 END), 1) AS 'Error Requests per Day',
      ROUND(SUM(CASE WHEN STS.STATUS_GROUP IN ('Successful', 'Redirection')
                     THEN FCT.REQUEST_CNT / 14.0 ELSE 0 END), 1) AS 'Success Requests per Day',
      USG.USER_AGENT_NK AS 'Agent'
    FROM FCT_ACCESS_USER_AGENT_DD FCT,
         DIM_USER_AGENT USG,
         DIM_HTTP_STATUS STS
    WHERE FCT.DIM_USER_AGENT_ID = USG.DIM_USER_AGENT_ID
      AND FCT.DIM_HTTP_STATUS_ID = STS.DIM_HTTP_STATUS_ID
      AND USG.AGENT_BOT != 'na'
      AND datetime(FCT.EVENT_DT, 'unixepoch') >= date('now', '-14 day')
    GROUP BY USG.USER_AGENT_NK
    ORDER BY 3 DESC
    LIMIT 10

In this case, the analysis led to a decision to restrict access to the site by adding the following to the robots.txt file:

    User-agent: AhrefsBot
    Disallow: /

    User-agent: dotbot
    Disallow: /

    User-agent: bingbot
    Crawl-delay: 5

The first two bots disappeared from the table, and the Microsoft bots dropped from the top rows.
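Rules like these can be checked locally before they are deployed. The sketch below uses Python's standard urllib.robotparser module; Python is my choice for illustration (the article's own scripts are PHP), and SomeBrowser is a made-up agent name that matches none of the groups.

```python
from urllib.robotparser import RobotFileParser

# The rules from the article, one group per bot.
ROBOTS_TXT = """\
User-agent: AhrefsBot
Disallow: /

User-agent: dotbot
Disallow: /

User-agent: bingbot
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The two blocked bots may not fetch anything; bingbot is only slowed down.
ahrefs_allowed = parser.can_fetch("AhrefsBot", "/")
dotbot_allowed = parser.can_fetch("dotbot", "/any/page")
bing_allowed = parser.can_fetch("bingbot", "/")
bing_delay = parser.crawl_delay("bingbot")

# "SomeBrowser" is a hypothetical agent that matches no group.
other_allowed = parser.can_fetch("SomeBrowser", "/")

print(ahrefs_allowed, dotbot_allowed, bing_allowed, bing_delay, other_allowed)
```

Keep in mind that robots.txt is advisory: it only affects bots that choose to honor it.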

Day and time of the most activity

The traffic shows spikes. To study them in detail you need to pinpoint when they occurred, and there is no need to display every hour and day of the time dimension. This makes it easier to find the individual requests in the log file if a detailed analysis is needed.

SQL report query

    SELECT
      1 AS 'Line: Day and Hour of Hits from Users and Bots',
      strftime('%d.%m-%H', datetime(EVENT_DT, 'unixepoch')) AS 'Date Time',
      HIB AS 'Bots, Hits',
      HIU AS 'Users, Hits'
    FROM (
      SELECT
        EVENT_DT,
        SUM(CASE WHEN AGENT_BOT != 'na' THEN LINE_CNT ELSE 0 END) AS HIB,
        SUM(CASE WHEN AGENT_BOT = 'na' THEN LINE_CNT ELSE 0 END) AS HIU
      FROM FCT_ACCESS_REQUEST_REF_HH
      WHERE datetime(EVENT_DT, 'unixepoch') >= date('now', '-14 day')
      GROUP BY EVENT_DT
      ORDER BY SUM(LINE_CNT) DESC
      LIMIT 10
    )
    ORDER BY EVENT_DT

On the chart we see that the most active hours of the first day were 11:00, 14:00, and 20:00. On the next day, however, the bots were active at 13:00.

Weekly average daily user activity

With activity and traffic more or less figured out, the next question was the activity of the users themselves. Statistics like these call for longer aggregation periods, for example a week.

SQL report query

    SELECT
      1 AS 'Line: Average Daily User Activity by Week',
      strftime('%W week', datetime(FCT.EVENT_DT, 'unixepoch')) AS 'Week',
      ROUND(1.0*SUM(FCT.PAGE_CNT)/SUM(FCT.IP_CNT), 1) AS 'Pages per IP per Day',
      ROUND(1.0*SUM(FCT.FILE_CNT)/SUM(FCT.IP_CNT), 1) AS 'Files per IP per Day'
    FROM FCT_ACCESS_USER_AGENT_DD FCT,
         DIM_USER_AGENT USG,
         DIM_HTTP_STATUS HST
    WHERE FCT.DIM_USER_AGENT_ID = USG.DIM_USER_AGENT_ID
      AND FCT.DIM_HTTP_STATUS_ID = HST.DIM_HTTP_STATUS_ID
      AND USG.AGENT_BOT = 'na'                  /* users only */
      AND HST.STATUS_GROUP IN ('Successful')    /* good pages */
      AND datetime(FCT.EVENT_DT, 'unixepoch') > date('now', '-3 month')
    GROUP BY strftime('%W week', datetime(FCT.EVENT_DT, 'unixepoch'))
    ORDER BY FCT.EVENT_DT

The weekly statistics show that on average one user opens 1.6 pages per day. The number of files requested per user in this case depends on when new files are added to the site.

All requests and their statuses

Webalizer always showed the individual status codes, whereas I always wanted to see just the number of successful requests and the number of errors.

SQL report query

    SELECT
      1 AS 'Line: All Requests by Status',
      strftime('%d.%m', datetime(FCT.EVENT_DT, 'unixepoch')) AS 'Day',
      SUM(CASE WHEN STS.STATUS_GROUP = 'Successful' THEN FCT.REQUEST_CNT ELSE 0 END) AS 'Success',
      SUM(CASE WHEN STS.STATUS_GROUP = 'Redirection' THEN FCT.REQUEST_CNT ELSE 0 END) AS 'Redirect',
      SUM(CASE WHEN STS.STATUS_GROUP = 'Client Error' THEN FCT.REQUEST_CNT ELSE 0 END) AS 'Client Error',
      SUM(CASE WHEN STS.STATUS_GROUP = 'Server Error' THEN FCT.REQUEST_CNT ELSE 0 END) AS 'Server Error'
    FROM FCT_ACCESS_USER_AGENT_DD FCT,
         DIM_HTTP_STATUS STS
    WHERE FCT.DIM_HTTP_STATUS_ID = STS.DIM_HTTP_STATUS_ID
      AND datetime(FCT.EVENT_DT, 'unixepoch') >= date('now', '-14 day')
    GROUP BY strftime('%d.%m', datetime(FCT.EVENT_DT, 'unixepoch'))
    ORDER BY FCT.EVENT_DT

The report counts requests, not hits: unlike LINE_CNT, the REQUEST_CNT metric is calculated as COUNT(DISTINCT STG.REQUEST_NK). The goal is to show meaningful events. For example, the Microsoft bots poll the robots.txt file hundreds of times a day, and here such polls are counted once. This smooths out the spikes on the chart.
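The difference between the two metrics is easy to see on a toy example. This is a minimal sketch in Python with an in-memory sqlite database; the two-column stage table is a simplified stand-in for the real one, and the sample rows are invented.

```python
import sqlite3

con = sqlite3.connect(":memory:")
# Simplified stand-in for the stage table: one row per log line.
con.execute("CREATE TABLE STG_ACCESS_LOG (LINE_NK INTEGER, REQUEST_NK TEXT)")

rows = [(i, "/robots.txt") for i in range(1, 101)]  # 100 polls of one file
rows += [(101, "/index"), (102, "/about")]
con.executemany("INSERT INTO STG_ACCESS_LOG VALUES (?, ?)", rows)

line_cnt, request_cnt = con.execute(
    """
    SELECT COUNT(DISTINCT LINE_NK)    AS LINE_CNT,
           COUNT(DISTINCT REQUEST_NK) AS REQUEST_CNT
    FROM STG_ACCESS_LOG
    """
).fetchone()

print(line_cnt, request_cnt)
```

The hundred robots.txt polls contribute 100 to LINE_CNT but only 1 to REQUEST_CNT.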

The graph shows many errors; these are requests for nonexistent pages. As a result of the analysis, redirects were added for the deleted pages.

Erroneous requests

To review the requests more closely, you can display per-request statistics.

SQL report query

    SELECT
      1 AS 'Table: Top Error Requests',
      REQ.REQUEST_NK AS 'Request',
      'Error' AS 'Request Status',
      ROUND(SUM(FCT.LINE_CNT) / 14.0, 1) AS 'Hits per Day',
      ROUND(SUM(FCT.IP_CNT) / 14.0, 1) AS 'IPs per Day',
      ROUND(SUM(FCT.BYTES)/1000 / 14.0, 1) AS 'KB per Day'
    FROM FCT_ACCESS_REQUEST_REF_HH FCT,
         DIM_REQUEST_V_ACT REQ
    WHERE FCT.DIM_REQUEST_ID = REQ.DIM_REQUEST_ID
      AND FCT.STATUS_GROUP IN ('Client Error', 'Server Error')
      AND datetime(FCT.EVENT_DT, 'unixepoch') >= date('now', '-14 day')
    GROUP BY REQ.REQUEST_NK
    ORDER BY 4 DESC
    LIMIT 20

All automated probes will also show up in this list, for example requests to /wp-login.php. By adjusting the server's rewrite rules you can change its response to such requests and send them to the start page.

So, a few simple reports based on the server log file give a fairly complete picture of what is happening on the site.

How to get information?

A sqlite database is quite enough. Let's create the tables: an auxiliary table for logging the ETL processes; a stage table into which we will write the log files using PHP; two aggregate tables, a daily one with statistics by user agent and request status and an hourly one with statistics by request, status group, and agent; and four tables for the corresponding dimensions.

The result is the following relational model:

Data model

A script that creates one of the objects in the sqlite database:

DDL for creating an object

    DROP TABLE IF EXISTS DIM_USER_AGENT;
    CREATE TABLE DIM_USER_AGENT (
      DIM_USER_AGENT_ID INTEGER NOT NULL PRIMARY KEY AUTOINCREMENT,
      USER_AGENT_NK     TEXT NOT NULL DEFAULT 'na',
      AGENT_OS          TEXT NOT NULL DEFAULT 'na',
      AGENT_ENGINE      TEXT NOT NULL DEFAULT 'na',
      AGENT_DEVICE      TEXT NOT NULL DEFAULT 'na',
      AGENT_BOT         TEXT NOT NULL DEFAULT 'na',
      UPDATE_DT         INTEGER NOT NULL DEFAULT 0,
      UNIQUE (USER_AGENT_NK)
    );
    INSERT INTO DIM_USER_AGENT (DIM_USER_AGENT_ID) VALUES (-1);

Stage

For the access.log file, every request has to be read, parsed, and written to the database. This can be done either directly in a scripting language or by means of sqlite.

Log file format:

    //67.221.59.195 - - [28/Dec/2012:01:47:47 +0100] "GET /files/default.css HTTP/1.1" 200 1512 "https://project.edu/" "Mozilla/4.0"
    //host ident auth time method request_nk protocol status bytes ref browser
    $log_pattern = '/^([^ ]+) ([^ ]+) ([^ ]+) (\[[^\]]+\]) "(.*) (.*) (.*)" ([0-9\-]+) ([0-9\-]+) "(.*)" "(.*)"$/';
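The read, parse, and write step can be sketched as follows. This is an illustration in Python rather than the article's PHP: the pattern is the same one, the field names follow the comment line above, and the stage table is a reduced, hypothetical version whose column names are taken from the queries later in the article (the real DDL is not shown).

```python
import re
import sqlite3
from urllib.parse import urlsplit

# Same pattern as the PHP $log_pattern, written as a Python raw string.
LOG_PATTERN = re.compile(
    r'^([^ ]+) ([^ ]+) ([^ ]+) (\[[^\]]+\]) "(.*) (.*) (.*)" '
    r'([0-9\-]+) ([0-9\-]+) "(.*)" "(.*)"$'
)
FIELDS = ("host", "ident", "auth", "time", "method", "request_nk",
          "protocol", "status", "bytes", "ref", "browser")


def parse_line(line):
    """Return a dict of log fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line.rstrip("\n"))
    return dict(zip(FIELDS, match.groups())) if match else None


sample = ('67.221.59.195 - - [28/Dec/2012:01:47:47 +0100] '
          '"GET /files/default.css HTTP/1.1" 200 1512 '
          '"https://project.edu/" "Mozilla/4.0"')
rec = parse_line(sample)
ref = urlsplit(rec["ref"])  # split the referrer into host, path, query

con = sqlite3.connect(":memory:")
# Hypothetical, reduced stage table (assumption, not the author's DDL).
con.execute("""CREATE TABLE STG_ACCESS_LOG (
    LINE_NK INTEGER, IP_NR TEXT, TIME_NK TEXT, REQUEST_NK TEXT, STATUS TEXT,
    BYTES INTEGER, REFERRER_HOST TEXT, REFERRER_PATH TEXT,
    REFERRER_QUERY TEXT, BROWSER TEXT)""")
con.execute(
    "INSERT INTO STG_ACCESS_LOG VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?, ?)",
    (1, rec["host"], rec["time"], rec["request_nk"], rec["status"],
     0 if rec["bytes"] == "-" else int(rec["bytes"]),  # "-" means no body
     ref.netloc, ref.path, ref.query, rec["browser"]),
)

stored = con.execute(
    "SELECT IP_NR, REQUEST_NK, STATUS, BYTES, REFERRER_HOST FROM STG_ACCESS_LOG"
).fetchone()
print(stored)
```

Lines that do not match the pattern return None, so a loader can skip or log them instead of failing.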

Key propagation

Once the raw data is in the database, the keys that are still missing from the dimension tables have to be written there. After that it is possible to build references to the dimensions. For example, in the DIM_REFERRER table the key is a combination of three fields.

SQL key propagation query

    /* Propagate the referrer from access log */
    INSERT INTO DIM_REFERRER (HOST_NK, PATH_NK, QUERY_NK, UPDATE_DT)
    SELECT
      CLS.HOST_NK,
      CLS.PATH_NK,
      CLS.QUERY_NK,
      STRFTIME('%s','now') AS UPDATE_DT
    FROM (
      SELECT DISTINCT
        REFERRER_HOST AS HOST_NK,
        REFERRER_PATH AS PATH_NK,
        CASE
          WHEN INSTR(REFERRER_QUERY,'&sid')>0
          THEN SUBSTR(REFERRER_QUERY, 1, INSTR(REFERRER_QUERY,'&sid')-1) /* sid - */
          ELSE REFERRER_QUERY
        END AS QUERY_NK
      FROM STG_ACCESS_LOG
    ) CLS
    LEFT OUTER JOIN DIM_REFERRER TRG
      ON (CLS.HOST_NK = TRG.HOST_NK AND CLS.PATH_NK = TRG.PATH_NK AND CLS.QUERY_NK = TRG.QUERY_NK)
    WHERE TRG.DIM_REFERRER_ID IS NULL
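To see that this query only adds keys that are not yet in the dimension, it can be run twice against a small in-memory database. The sketch below is in Python; the table definitions are reduced to the columns the query touches and the sample referrers are invented, so treat both as assumptions.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE STG_ACCESS_LOG (
    REFERRER_HOST TEXT, REFERRER_PATH TEXT, REFERRER_QUERY TEXT);
CREATE TABLE DIM_REFERRER (
    DIM_REFERRER_ID INTEGER PRIMARY KEY AUTOINCREMENT,
    HOST_NK TEXT, PATH_NK TEXT, QUERY_NK TEXT, UPDATE_DT INTEGER);
INSERT INTO STG_ACCESS_LOG VALUES
    ('project.edu', '/', ''),
    ('project.edu', '/', ''),
    ('example.org', '/forum', 'topic=7&sid=abc123'),
    ('example.org', '/forum', 'topic=7&sid=zzz999');
""")

PROPAGATE = """
INSERT INTO DIM_REFERRER (HOST_NK, PATH_NK, QUERY_NK, UPDATE_DT)
SELECT CLS.HOST_NK, CLS.PATH_NK, CLS.QUERY_NK, STRFTIME('%s','now')
FROM (
    SELECT DISTINCT
        REFERRER_HOST AS HOST_NK,
        REFERRER_PATH AS PATH_NK,
        CASE WHEN INSTR(REFERRER_QUERY,'&sid')>0
             THEN SUBSTR(REFERRER_QUERY, 1, INSTR(REFERRER_QUERY,'&sid')-1)
             ELSE REFERRER_QUERY END AS QUERY_NK
    FROM STG_ACCESS_LOG
) CLS
LEFT OUTER JOIN DIM_REFERRER TRG
    ON (CLS.HOST_NK = TRG.HOST_NK AND CLS.PATH_NK = TRG.PATH_NK
        AND CLS.QUERY_NK = TRG.QUERY_NK)
WHERE TRG.DIM_REFERRER_ID IS NULL
"""


def dim_count():
    return con.execute("SELECT COUNT(*) FROM DIM_REFERRER").fetchone()[0]


con.execute(PROPAGATE)
first_count = dim_count()   # new keys inserted
con.execute(PROPAGATE)
second_count = dim_count()  # second run finds nothing new

queries = sorted(r[0] for r in con.execute("SELECT QUERY_NK FROM DIM_REFERRER"))
print(first_count, second_count, queries)
```

The two session-specific query strings collapse into one key because everything from &sid onward is cut off. Note that the equality join never matches NULL values, so if any of the three fields could be NULL in the stage table, such rows would be inserted again on every run.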

The propagation into the user agent table can contain the bot-detection logic, for example this SQL excerpt:

    CASE
      WHEN INSTR(LOWER(CLS.BROWSER),'yandex.com')>0 THEN 'yandex'
      WHEN INSTR(LOWER(CLS.BROWSER),'googlebot')>0 THEN 'google'
      WHEN INSTR(LOWER(CLS.BROWSER),'bingbot')>0 THEN 'microsoft'
      WHEN INSTR(LOWER(CLS.BROWSER),'ahrefsbot')>0 THEN 'ahrefs'
      WHEN INSTR(LOWER(CLS.BROWSER),'mj12bot')>0 THEN 'majestic-12'
      WHEN INSTR(LOWER(CLS.BROWSER),'compatible')>0
        OR INSTR(LOWER(CLS.BROWSER),'http')>0
        OR INSTR(LOWER(CLS.BROWSER),'libwww')>0
        OR INSTR(LOWER(CLS.BROWSER),'spider')>0
        OR INSTR(LOWER(CLS.BROWSER),'java')>0
        OR INSTR(LOWER(CLS.BROWSER),'python')>0
        OR INSTR(LOWER(CLS.BROWSER),'robot')>0
        OR INSTR(LOWER(CLS.BROWSER),'curl')>0
        OR INSTR(LOWER(CLS.BROWSER),'wget')>0
      THEN 'other'
      ELSE 'na'
    END AS AGENT_BOT
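The same rule chain can be tried out on a few user agent strings before it goes into the load query. Below is a Python sketch that mirrors the CASE expression; the function name classify_agent and the sample strings are illustrative assumptions.

```python
# Named bots are checked first, in the same order as the CASE branches.
NAMED_BOTS = (
    ("yandex.com", "yandex"),
    ("googlebot", "google"),
    ("bingbot", "microsoft"),
    ("ahrefsbot", "ahrefs"),
    ("mj12bot", "majestic-12"),
)
# Generic markers that put an agent into the 'other' bot group.
GENERIC_MARKERS = ("compatible", "http", "libwww", "spider", "java",
                   "python", "robot", "curl", "wget")


def classify_agent(browser):
    """Return the bot group for a user agent string, or 'na' for users."""
    agent = browser.lower()
    for marker, name in NAMED_BOTS:
        if marker in agent:
            return name
    if any(marker in agent for marker in GENERIC_MARKERS):
        return "other"
    return "na"


samples = {
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)": None,
    "curl/7.68.0": None,
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:109.0) Gecko/20100101 Firefox/115.0": None,
    "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1)": None,
}
for agent in samples:
    samples[agent] = classify_agent(agent)
    print(samples[agent], "<-", agent)
```

The order of the rules matters: the Googlebot string also contains "compatible" and "http", so it is recognized only because the named bots are checked first. The last sample shows a limitation of the generic markers: old Internet Explorer strings contain "compatible" and therefore land in the 'other' bot group.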

Aggregate tables

Finally, we load the aggregate tables. For example, the daily table can be loaded as follows:

SQL query for loading the aggregate

    /* Load fact from access log */
    INSERT INTO FCT_ACCESS_USER_AGENT_DD (EVENT_DT, DIM_USER_AGENT_ID, DIM_HTTP_STATUS_ID, PAGE_CNT, FILE_CNT, REQUEST_CNT, LINE_CNT, IP_CNT, BYTES)
    WITH STG AS (
      SELECT
        STRFTIME( '%s', SUBSTR(TIME_NK,9,4) || '-' ||
          CASE SUBSTR(TIME_NK,5,3)
            WHEN 'Jan' THEN '01' WHEN 'Feb' THEN '02' WHEN 'Mar' THEN '03' WHEN 'Apr' THEN '04'
            WHEN 'May' THEN '05' WHEN 'Jun' THEN '06' WHEN 'Jul' THEN '07' WHEN 'Aug' THEN '08'
            WHEN 'Sep' THEN '09' WHEN 'Oct' THEN '10' WHEN 'Nov' THEN '11' ELSE '12'
          END || '-' || SUBSTR(TIME_NK,2,2) || ' 00:00:00' ) AS EVENT_DT,
        BROWSER AS USER_AGENT_NK,
        REQUEST_NK,
        IP_NR,
        STATUS,
        LINE_NK,
        BYTES
      FROM STG_ACCESS_LOG
    )
    SELECT
      CAST(STG.EVENT_DT AS INTEGER) AS EVENT_DT,
      USG.DIM_USER_AGENT_ID,
      HST.DIM_HTTP_STATUS_ID,
      COUNT(DISTINCT (CASE WHEN INSTR(STG.REQUEST_NK,'.')=0 THEN STG.REQUEST_NK END) ) AS PAGE_CNT,
      COUNT(DISTINCT (CASE WHEN INSTR(STG.REQUEST_NK,'.')>0 THEN STG.REQUEST_NK END) ) AS FILE_CNT,
      COUNT(DISTINCT STG.REQUEST_NK) AS REQUEST_CNT,
      COUNT(DISTINCT STG.LINE_NK) AS LINE_CNT,
      COUNT(DISTINCT STG.IP_NR) AS IP_CNT,
      SUM(BYTES) AS BYTES
    FROM STG,
         DIM_HTTP_STATUS HST,
         DIM_USER_AGENT USG
    WHERE STG.STATUS = HST.STATUS_NK
      AND STG.USER_AGENT_NK = USG.USER_AGENT_NK
      AND CAST(STG.EVENT_DT AS INTEGER) > $param_epoch_from /* load epoch date */
      AND CAST(STG.EVENT_DT AS INTEGER) < strftime('%s', date('now', 'start of day'))
    GROUP BY STG.EVENT_DT, HST.DIM_HTTP_STATUS_ID, USG.DIM_USER_AGENT_ID

sqlite lets you write complex queries. The WITH clause prepares the data and the keys. The main query collects all the references to the dimensions.
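The date handling in the WITH clause is the least obvious part, so here it is applied to a single timestamp. This is a small Python sketch with an in-memory sqlite database; the bound parameter :t stands in for the TIME_NK column.

```python
import sqlite3
from datetime import datetime, timezone

# The date expression from the WITH clause, applied to one bound value (:t).
DAY_EPOCH_SQL = """
SELECT CAST(STRFTIME('%s',
    SUBSTR(:t, 9, 4) || '-' ||
    CASE SUBSTR(:t, 5, 3)
        WHEN 'Jan' THEN '01' WHEN 'Feb' THEN '02' WHEN 'Mar' THEN '03'
        WHEN 'Apr' THEN '04' WHEN 'May' THEN '05' WHEN 'Jun' THEN '06'
        WHEN 'Jul' THEN '07' WHEN 'Aug' THEN '08' WHEN 'Sep' THEN '09'
        WHEN 'Oct' THEN '10' WHEN 'Nov' THEN '11' ELSE '12'
    END || '-' ||
    SUBSTR(:t, 2, 2) || ' 00:00:00'
) AS INTEGER)
"""

con = sqlite3.connect(":memory:")
time_nk = "[28/Dec/2012:01:47:47 +0100]"
day_epoch = con.execute(DAY_EPOCH_SQL, {"t": time_nk}).fetchone()[0]

# Midnight of the same calendar date, interpreted as UTC.
expected = int(datetime(2012, 12, 28, tzinfo=timezone.utc).timestamp())
print(day_epoch, expected)
```

The expression cuts the year, month name, and day out of the bracketed timestamp and rebuilds them as YYYY-MM-DD 00:00:00. The time of day and the +0100 offset are discarded, and sqlite treats the resulting date as UTC, so every request is assigned to the calendar day written in the log.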

The following condition prevents the history from being loaded again: CAST(STG.EVENT_DT AS INTEGER) > $param_epoch_from, where the parameter is the result of the query

    'SELECT COALESCE(MAX(EVENT_DT), \'3600\') AS LAST_EVENT_EPOCH FROM FCT_ACCESS_USER_AGENT_DD'

The following condition loads only complete days: CAST(STG.EVENT_DT AS INTEGER) < strftime('%s', date('now', 'start of day'))
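Together the two conditions form a load window: later than the last day already in the fact table, earlier than the start of today. A minimal Python sketch of that window, with the fact table reduced to its EVENT_DT column and a hypothetical one-column stage table named STG:

```python
import sqlite3

DAY = 86400  # seconds in one UTC day
LAST_EPOCH_SQL = ("SELECT COALESCE(MAX(EVENT_DT), '3600') AS LAST_EVENT_EPOCH "
                  "FROM FCT_ACCESS_USER_AGENT_DD")

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE FCT_ACCESS_USER_AGENT_DD (EVENT_DT INTEGER)")
con.execute("CREATE TABLE STG (EVENT_DT TEXT)")  # reduced stand-in

# With an empty fact table the query falls back to the default 3600.
empty_from = int(con.execute(LAST_EPOCH_SQL).fetchone()[0])

today = int(con.execute(
    "SELECT strftime('%s', date('now', 'start of day'))").fetchone()[0])

# The day before yesterday is already loaded; the stage holds three days.
con.execute("INSERT INTO FCT_ACCESS_USER_AGENT_DD VALUES (?)", (today - 2 * DAY,))
con.executemany("INSERT INTO STG VALUES (?)",
                [(str(today - 2 * DAY),), (str(today - DAY),), (str(today),)])

param_epoch_from = int(con.execute(LAST_EPOCH_SQL).fetchone()[0])
loaded = [row[0] for row in con.execute(
    """
    SELECT CAST(EVENT_DT AS INTEGER) FROM STG
    WHERE CAST(EVENT_DT AS INTEGER) > ?
      AND CAST(EVENT_DT AS INTEGER) < strftime('%s', date('now', 'start of day'))
    """,
    (param_epoch_from,),
)]
print(empty_from, param_epoch_from, loaded)
```

Only yesterday passes both conditions: the day before is already in the fact table, and today is not complete yet.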

Pages and files are told apart in a primitive way, by searching for a dot in the request: a request without a dot counts as a page, and a request with a dot counts as a file.
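A short Python sketch shows how this heuristic behaves, including one case where it misfires. The one-column stage table and the sample requests are invented for illustration.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE STG (REQUEST_NK TEXT)")  # reduced stand-in
requests = ["/", "/about", "/about", "/files/default.css",
            "/img/logo.png", "/news?v=1.2"]
con.executemany("INSERT INTO STG VALUES (?)", [(r,) for r in requests])

page_cnt, file_cnt, request_cnt = con.execute(
    """
    SELECT
      COUNT(DISTINCT CASE WHEN INSTR(REQUEST_NK, '.') = 0 THEN REQUEST_NK END),
      COUNT(DISTINCT CASE WHEN INSTR(REQUEST_NK, '.') > 0 THEN REQUEST_NK END),
      COUNT(DISTINCT REQUEST_NK)
    FROM STG
    """
).fetchone()
print(page_cnt, file_cnt, request_cnt)
```

The request /news?v=1.2 is a page, but the dot in its query string makes it count as a file. For a small site this is usually an acceptable price for the simplicity of the rule.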

Reports

In complex visualization systems you can build a meta-model on top of the database objects and manage filters and aggregation rules dynamically. Ultimately, every decent tool generates an SQL query.

In this example we write ready-made SQL queries and save them as views in the database; these are the reports.
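Saving a report as a view might look like the sketch below (Python with an in-memory sqlite database). The view name RPT_ACCESS_USER_VS_BOT comes from the article; its body here is a reduced version of the traffic report without the 14-day filter so that the fixed sample data stays visible, and the two tables are cut down to the columns the view needs.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE DIM_USER_AGENT (
    DIM_USER_AGENT_ID INTEGER PRIMARY KEY, AGENT_BOT TEXT);
CREATE TABLE FCT_ACCESS_USER_AGENT_DD (
    EVENT_DT INTEGER, DIM_USER_AGENT_ID INTEGER, BYTES INTEGER);

INSERT INTO DIM_USER_AGENT VALUES (1, 'na'), (2, 'google');
INSERT INTO FCT_ACCESS_USER_AGENT_DD VALUES
    (1356652800, 1, 40000),   -- 28 Dec 2012, user
    (1356652800, 2, 15000),   -- 28 Dec 2012, bot
    (1356739200, 1, 25000);   -- 29 Dec 2012, user

-- The report is stored once and queried like a table afterwards.
CREATE VIEW RPT_ACCESS_USER_VS_BOT AS
SELECT strftime('%d.%m', datetime(FCT.EVENT_DT, 'unixepoch')) AS Day,
       SUM(CASE WHEN USG.AGENT_BOT != 'na' THEN FCT.BYTES ELSE 0 END) / 1000 AS Bots_KB,
       SUM(CASE WHEN USG.AGENT_BOT = 'na' THEN FCT.BYTES ELSE 0 END) / 1000 AS Users_KB
FROM FCT_ACCESS_USER_AGENT_DD FCT
JOIN DIM_USER_AGENT USG ON FCT.DIM_USER_AGENT_ID = USG.DIM_USER_AGENT_ID
GROUP BY FCT.EVENT_DT;
""")

report = sorted(con.execute("SELECT * FROM RPT_ACCESS_USER_VS_BOT").fetchall())
print(report)
```

Because the logic lives in the view, the code that renders the reports only ever needs a SELECT * against the view name.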

Visualization

Bluff (Beautiful graphs in JavaScript) was used as the visualization tool.

To do this, PHP was used to loop over all the reports and generate an HTML file with tables.

    $sqls = array(
      'SELECT * FROM RPT_ACCESS_USER_VS_BOT',
      'SELECT * FROM RPT_ACCESS_ANNOYING_BOT',
      'SELECT * FROM RPT_ACCESS_TOP_HOUR_HIT',
      'SELECT * FROM RPT_ACCESS_USER_ACTIVE',
      'SELECT * FROM RPT_ACCESS_REQUEST_STATUS',
      'SELECT * FROM RPT_ACCESS_TOP_REQUEST_PAGE',
      'SELECT * FROM RPT_ACCESS_TOP_REQUEST_REFERRER',
      'SELECT * FROM RPT_ACCESS_NEW_REQUEST',
      'SELECT * FROM RPT_ACCESS_TOP_REQUEST_SUCCESS',
      'SELECT * FROM RPT_ACCESS_TOP_REQUEST_ERROR'
    );

The tool simply visualizes the result tables.
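The loop over the reports can be sketched as follows. The article does this in PHP; the version below is an illustrative Python equivalent, and the function render_report as well as the demo view RPT_DEMO are assumptions made for the example.

```python
import sqlite3
from html import escape


def render_report(con, sql):
    """Render the result of one report query as an HTML table."""
    cur = con.execute(sql)
    header = "".join(f"<th>{escape(col[0])}</th>" for col in cur.description)
    rows = [
        "<tr>" + "".join(f"<td>{escape(str(value))}</td>" for value in row) + "</tr>"
        for row in cur
    ]
    return "\n".join(["<table>", f"<tr>{header}</tr>", *rows, "</table>"])


con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE DEMO (Day TEXT, Hits INTEGER);
INSERT INTO DEMO VALUES ('28.12', 120), ('29.12', 95);
CREATE VIEW RPT_DEMO AS SELECT Day, Hits FROM DEMO;
""")

# One entry per report view, as in the $sqls array above.
sqls = ["SELECT * FROM RPT_DEMO"]
page = ("<html><body>\n"
        + "\n".join(render_report(con, sql) for sql in sqls)
        + "\n</body></html>")
print(page)
```

The column names of each view become the table headers, so a report can be renamed or extended in SQL without touching the rendering code.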

Conclusion

Using web analytics as an example, this article has described the mechanisms needed to build a data warehouse. As the results show, the simplest tools are enough for in-depth analysis and visualization of data.

In the future, using this warehouse as an example, we will try to implement structures such as slowly changing dimensions, master data, aggregation levels, and the integration of data from different sources.

We will also take a closer look at the simplest tool for managing ETL processes, one based on a single table.

We will return to the topic of measuring data quality and automating that process.

And we will study the problems of the technical environment and the maintenance of data warehouses, for which we will set up a warehouse server with minimal resources, for example one based on a Raspberry Pi.
