Four strange new ways to compute

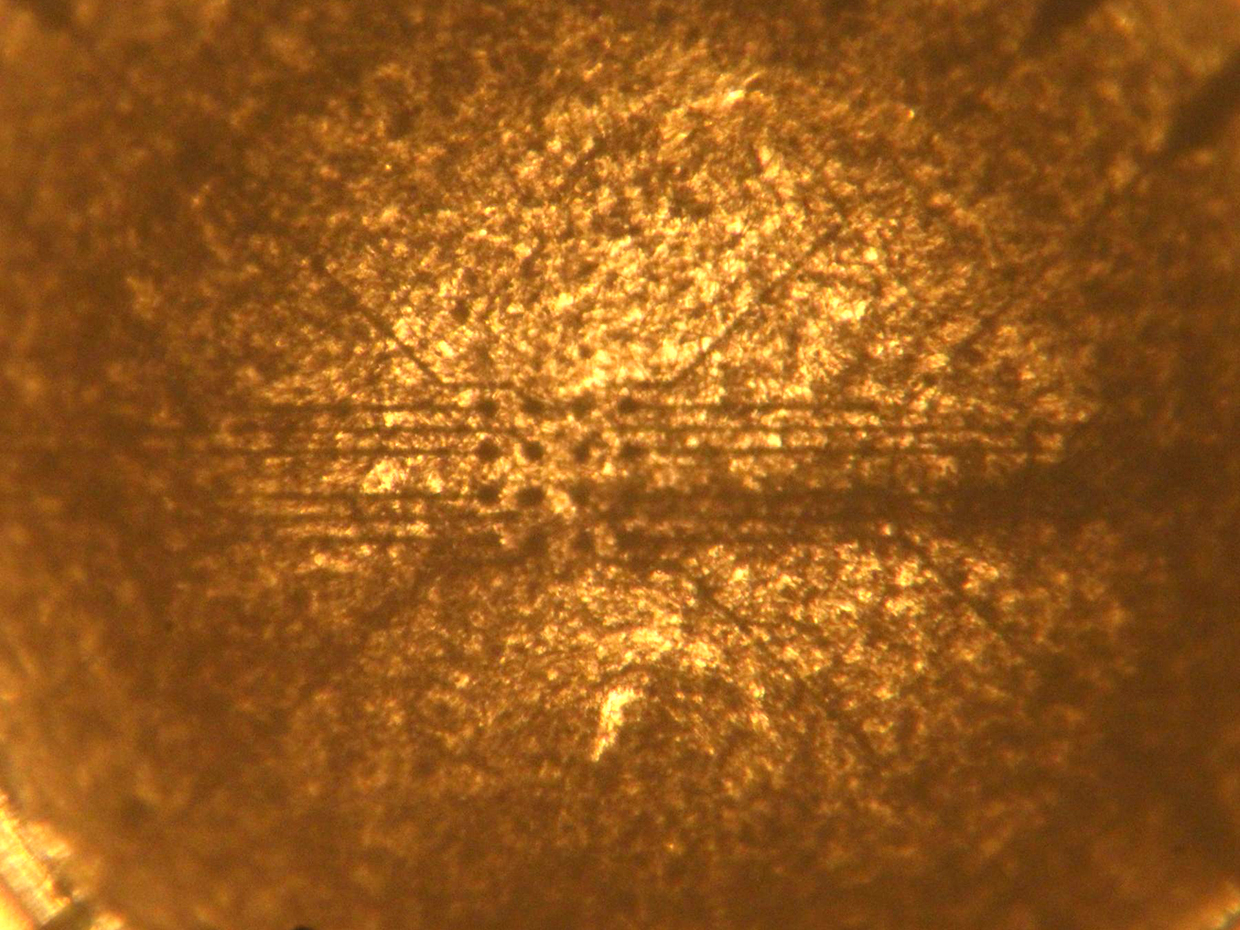

Optical micrograph of a microelectrode array covered by a mixture of single-walled carbon nanotubes and liquid crystals

As Moore’s law slows, engineers are taking a hard look at technologies that could keep computing moving forward once the law runs out. Artificial intelligence will certainly play a role. So, perhaps, will quantum computers. But the universe of computing holds stranger things, and some of them were on display at the IEEE International Conference on Rebooting Computing in November 2017.

There were also some cool variations on classical computing, such as reversible computing and neuromorphic chips. Less familiar approaches were presented as well, such as photonic chips that accelerate AI, nanomechanical comb logic, and a “hyperdimensional” speech recognition system. Below are a few of the ideas, some strange and some potentially effective.

Cold quantum neurons

Engineers have long envied the brain's remarkable energy efficiency. A single neuron expends about 10 femtojoules (1 fJ = 10⁻¹⁵ J) per spike. Michael Schneider and colleagues at the US National Institute of Standards and Technology (NIST) believe they can reach this figure using artificial neurons built from two different kinds of Josephson junctions. These are superconducting devices that rely on pairs of electrons tunneling through a barrier, and they are the basis of the most advanced quantum computers being built in industrial labs today. One variant, the magnetic Josephson junction, has properties that can be adjusted on the fly by changing currents and magnetic fields. Both kinds of junction can be operated so that they produce voltage spikes with energies on the order of zeptojoules (1 zJ = 10⁻²¹ J), some five to six orders of magnitude below a femtojoule.

The NIST researchers have worked out how to connect these devices into a neural network. In a simulation, they trained the network to recognize three letters (z, v, and n, a basic neural-network test). In the ideal case, the network could recognize the letters using just 2 attojoules (2 × 10⁻¹⁸ J), or about 2 fJ once the energy needed to cool the system to the required 4 K is included. Of course, in some respects things work far less than ideally. But if engineering effort can eliminate those shortcomings, the result could be a neural network whose energy consumption is comparable to that of the human brain.
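To put the energy figures above side by side, here is a small worked calculation. It uses only the numbers quoted in the text plus one labeled assumption: a Josephson-junction spike of exactly 1 zJ stands in for "on the order of zeptojoules". The cooling factor it computes is just the ratio of the two quoted network figures, not an independently measured value.

```python
import math

# Unit prefixes, in joules
FEMTO = 1e-15
ATTO = 1e-18
ZEPTO = 1e-21

# Figures quoted in the text
neuron_spike_j = 10 * FEMTO    # biological neuron, per spike
jj_spike_j = 1 * ZEPTO         # ASSUMPTION: exactly 1 zJ for "order of zeptojoules"
network_ideal_j = 2 * ATTO     # simulated letter-recognition network, ideal case
network_cooled_j = 2 * FEMTO   # same network, including cooling to 4 K


def orders_of_magnitude(larger: float, smaller: float) -> int:
    """Return log10(larger / smaller), rounded to the nearest integer."""
    return round(math.log10(larger / smaller))


def cooling_overhead(total_j: float, computation_j: float) -> int:
    """Factor by which cooling multiplies the energy of the computation itself."""
    return round(total_j / computation_j)


if __name__ == "__main__":
    print(orders_of_magnitude(FEMTO, ZEPTO))                     # → 6
    print(orders_of_magnitude(neuron_spike_j, jj_spike_j))       # → 7
    print(cooling_overhead(network_cooled_j, network_ideal_j))   # → 1000
```

The last line shows why the cooling term matters: under these figures, keeping the circuit at 4 K costs roughly a thousand times more energy than the computation it supports.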

Computing with wires

In advanced processors, transistors are packed very tightly, and the interconnects that wire them into circuits are closer together than ever before. This leads to crosstalk, where a signal on one line leaks onto its neighbor through parasitic coupling. Instead of redesigning circuits to avoid crosstalk, Naveen Kumar Macha and colleagues at the University of Missouri-Kansas City decided to put it to use. “Today, the interfering signal is considered a glitch,” Macha told the engineers at the conference. “Now we want to use it for logic.”

They found that certain arrangements of coupled interconnects can mimic logic gates and circuits. Imagine three interconnect lines running in parallel. Applying a voltage to one or both of the outer lines induces a parasitic voltage on the middle one. That gives you a two-input OR gate. By carefully adding a transistor here and there, the team built AND, OR, and XOR gates, as well as a circuit that performs the carry function. The advantage shows up when you compare transistor count and area with CMOS. For example, crosstalk logic needs only three transistors to implement XOR, while the CMOS version uses 14 and takes up a third more area.
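The three-line OR gate described above can be sketched with a simple capacitive-divider model. This is an idealized illustration, not the UMKC circuit: the capacitance values and thresholds are hypothetical, the middle (victim) line is assumed to float, and the outer (aggressor) lines are assumed to step from 0 to VDD. Charge conservation on the floating line gives ΔV = VDD · k · C_couple / (2 · C_couple + C_ground), where k is the number of rising outer lines. Choosing the detection threshold then selects OR or AND behavior.

```python
# Hypothetical, illustrative parameters (arbitrary units), not values from the UMKC work.
VDD = 1.0        # voltage step on each aggressor (outer) line
C_COUPLE = 1.0   # coupling capacitance between each outer line and the middle line
C_GROUND = 0.5   # middle line's capacitance to ground


def victim_voltage(a: int, b: int) -> float:
    """Voltage induced on the floating middle line when inputs a, b (0 or 1) rise.

    Charge conservation on the floating node yields a capacitive divider:
    dV = VDD * (active aggressors * C_COUPLE) / (2 * C_COUPLE + C_GROUND).
    """
    active = a + b
    return VDD * active * C_COUPLE / (2 * C_COUPLE + C_GROUND)


def crosstalk_gate(a: int, b: int, threshold: float) -> int:
    """Read the middle line as logic 1 if the induced voltage exceeds the threshold."""
    return int(victim_voltage(a, b) > threshold)


def crosstalk_or(a: int, b: int) -> int:
    # One rising aggressor already induces 0.4 here, above a low threshold.
    return crosstalk_gate(a, b, threshold=0.2)


def crosstalk_and(a: int, b: int) -> int:
    # Only both aggressors together (0.8 here) exceed a high threshold.
    return crosstalk_gate(a, b, threshold=0.6)


def xor_from_or_and(a: int, b: int) -> int:
    # Composed in software for illustration only; the team's three-transistor
    # XOR is a different physical arrangement that this sketch does not model.
    return crosstalk_or(a, b) & (1 - crosstalk_and(a, b))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, crosstalk_or(a, b), crosstalk_and(a, b), xor_from_or_and(a, b))
```

The point of the model is that a single physical structure, the coupled wire, yields different gates depending only on where the threshold sits. Real designs must also cope with noise margins and the victim line not truly floating, which this sketch ignores.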

Attack of the nanoblob!

Scientists and engineers at Durham University in England taught a thin film of nanomaterial to solve classification problems, such as finding a cancerous lesion on a mammogram. Using evolutionary algorithms and a specially designed electronic circuit, they sent electrical pulses through an array of electrodes into a mixture of carbon nanotubes dispersed in liquid crystals. Over time, the nanotubes, a mix of conducting and semiconducting ones, self-organized into a complex network that covered the electrodes.
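The evolutionary loop described above treats the material as a black box: propose electrode settings, measure how well the output classifies, and keep the better settings. Here is a minimal sketch of that idea as a (1 + λ) evolution strategy. Everything material-specific is hypothetical: `material_output` is a stand-in weighted sum, not a model of nanotubes in liquid crystal, and the toy dataset replaces real mammogram features. The Durham team's actual algorithm and hardware interface are not reproduced here.

```python
import random


def make_toy_dataset(n=40, seed=1):
    """Hypothetical two-class data: label is 1 when x + y > 0 (third input is a bias)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x, y = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(((x, y, 1.0), int(x + y > 0)))
    return data


def material_output(config, sample):
    """Stand-in for the physical film (hypothetical).

    The real material is a black box; here its response is just a weighted
    sum of the input signals, with weights set by the configuration voltages.
    """
    return sum(v * s for v, s in zip(config, sample))


def fitness(config, data):
    """Fraction of samples classified correctly by thresholding the output at 0."""
    hits = sum(int(material_output(config, s) > 0) == label for s, label in data)
    return hits / len(data)


def evolve(data, n_electrodes=3, generations=100, offspring=8, sigma=0.3, seed=0):
    """(1 + lambda) evolution strategy: mutate the best configuration, keep the best child."""
    rng = random.Random(seed)
    parent = [rng.uniform(-1, 1) for _ in range(n_electrodes)]
    parent_fit = fitness(parent, data)
    history = [parent_fit]
    for _ in range(generations):
        # Gaussian mutation of every electrode voltage
        children = [[v + rng.gauss(0, sigma) for v in parent] for _ in range(offspring)]
        best = max(children, key=lambda c: fitness(c, data))
        best_fit = fitness(best, data)
        if best_fit >= parent_fit:  # elitism: never accept a worse configuration
            parent, parent_fit = best, best_fit
        history.append(parent_fit)
    return parent, parent_fit, history


if __name__ == "__main__":
    data = make_toy_dataset()
    config, score, history = evolve(data)
    print(f"accuracy: {history[0]:.2f} -> {score:.2f}")
```

Because the parent is replaced only by an equal or better child, fitness never decreases from one generation to the next. That property matters when each evaluation is a physical measurement on a noisy material, where a real system would likely need repeated measurements as well.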

This network was able to carry out a key part of the optimization task. Moreover, it could learn to solve a second task, provided that task was less complex than the first.

How well did it solve these problems? In one case, its results were comparable to a human's; in the other, they were slightly worse. Still, it is surprising that it works at all. “We need to remember that we are training a blob of carbon nanotubes in liquid crystals,” says Eléonore Vissol-Gaudin, who helped develop the system at Durham.

Silicon circuit boards

Computer designers have long struggled with the mismatch between how quickly and efficiently data moves within processors and how slowly and wastefully it moves between them. According to engineers at the University of California, Los Angeles, the problem lies in chip packages and the printed circuit boards that connect them. Packages and circuit boards are poor at conducting heat, so they limit power consumption; they also increase the energy required to move a bit from one chip to another, and they slow computers down by adding latency. The industry recognizes these shortcomings and is increasingly focusing on placing several chips in a single package.

Puneet Gupta and his colleagues at UCLA believe computers would be much better if we got rid of packages and circuit boards altogether. They propose replacing the circuit board with a piece of silicon wafer. On such a “silicon interconnect fabric”, bare, unpackaged chips can be placed as close as 100 μm apart and connected with the same kind of fine wiring used inside integrated circuits. This would reduce latency and energy consumption and allow more compact systems.

If the industry goes in this direction, it will change how integrated circuits are made, Gupta says. The silicon interconnect fabric would encourage splitting systems-on-chip into smaller chips, each performing the function of one of the system-on-chip's cores. After all, placing cores close together on a single die would no longer offer a significant advantage in latency or efficiency, and smaller chips are cheaper to produce. Moreover, silicon conducts heat better than circuit boards, so such processors could run at higher clock frequencies with less worry about heat dissipation.
