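In this article we describe how we use Apache Spark in practice, and a tool that lets us build remarketing audiences.

Thanks to this tool, once you have looked at a jigsaw puzzle, you will see jigsaw puzzles in every corner of the internet.

This is also where we ran into our first problems with Apache Spark processing.

Below: the architecture and the Spark code.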

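Introduction

To make the goal clear, let us first explain the terminology and the source data.

What is remarketing? A detailed answer can be found on Wikipedia. In short, remarketing (also known as retargeting) is an advertising mechanism that brings users back to the advertiser's site so that they complete a target action.

This requires data from the advertiser itself, so-called first-party data. It is collected automatically by SmartPixel, a code snippet the advertiser installs on the site. The data describes the user (user agent), the pages visited, and the actions performed. We then process this data with Apache Spark and build the audiences to which ads are shown.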

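Solution

A bit of history

Initially we planned to write everything in pure Hadoop using MapReduce jobs, and that worked. However, applications of this kind require a lot of code that is very hard to understand and debug.

To compare the three approaches, here is code that groups audience_id values by visitor_id in each of them.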
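All three examples below compute the same result. As a reference for that result, here is the grouping over plain Java collections. It is only a sketch and assumes, as the examples do, that each input line is "visitor_id audience_id" separated by a single space; the class name is ours.

```java
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Reference semantics of the grouping job (hypothetical helper):
// "visitor_id audience_id" lines -> visitor_id mapped to its distinct audience_ids.
final class AudienceGrouping {
    static Map<String, Set<String>> group(List<String> lines) {
        Map<String, Set<String>> result = new TreeMap<>();
        for (String line : lines) {
            String[] split = line.split(" ");
            // The Set drops duplicate (visitor, audience) pairs, like DISTINCT / distinct()
            result.computeIfAbsent(split[0], k -> new TreeSet<>()).add(split[1]);
        }
        return result;
    }
}
```

For example, the lines "v1 a1", "v1 a2", "v1 a1", "v2 a1" give v1 → {a1, a2} and v2 → {a1}.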

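MapReduce code example:

```java
import java.io.IOException;
import java.util.TreeSet;

import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class GroupAudiences {

    // Input lines look like "visitor_id audience_id"; emit (visitor_id, audience_id)
    public static class Map extends Mapper<LongWritable, Text, Text, Text> {
        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String[] split = value.toString().split(" ");
            context.write(new Text(split[0]), new Text(split[1]));
        }
    }

    // Collect the distinct audience_ids of one visitor into a comma-separated list
    public static class Reduce extends Reducer<Text, Text, Text, Text> {
        @Override
        protected void reduce(Text key, Iterable<Text> values, Context context)
                throws IOException, InterruptedException {
            // Hadoop reuses the same Text instance while iterating, so copy each value
            TreeSet<String> set = new TreeSet<>();
            for (Text t : values) {
                set.add(t.toString());
            }
            context.write(key, new Text(String.join(",", set)));
        }
    }

    public static void main(String[] args)
            throws IOException, ClassNotFoundException, InterruptedException {
        Job job = Job.getInstance();
        job.setJarByClass(GroupAudiences.class);
        job.setMapperClass(Map.class);
        job.setReducerClass(Reduce.class);
        // Map and reduce both emit (Text, Text), so these also cover the map output types
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);
        FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```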

Then we looked at Pig, a platform whose language, Pig Latin, is translated into MapReduce jobs. It required far less code and was much nicer from an aesthetic point of view.

Pig code example:

```pig
A = LOAD '/data/input' USING PigStorage(' ') AS (visitor_id:chararray, audience_id:chararray);
B = DISTINCT A;
C = GROUP B BY visitor_id;
D = FOREACH C GENERATE group AS visitor_id, B.audience_id AS audience_id;
STORE D INTO '/data/output' USING PigStorage();
```

However, saving the results was a problem: we had to write our own storage modules, because most database vendors do not support Pig.

This is where Spark came to the rescue.

Spark code example:

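```java
import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaSparkContext;
import scala.Tuple2;

SparkConf sparkConf = new SparkConf().setAppName("Test");
JavaSparkContext jsc = new JavaSparkContext(sparkConf);
jsc.textFile(args[0])
    // "visitor_id audience_id" -> (visitor_id, audience_id)
    .mapToPair(str -> {
        String[] split = str.split(" ");
        return new Tuple2<>(split[0], split[1]);
    })
    .distinct()      // drop duplicate pairs
    .groupByKey()    // visitor_id -> all of its audience_ids
    .saveAsTextFile(args[1]);
```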

Here we get both brevity and convenience, plus many OutputFormats that make writing to databases easy. We were also interested in the tool's stream-processing capabilities.

Current implementation

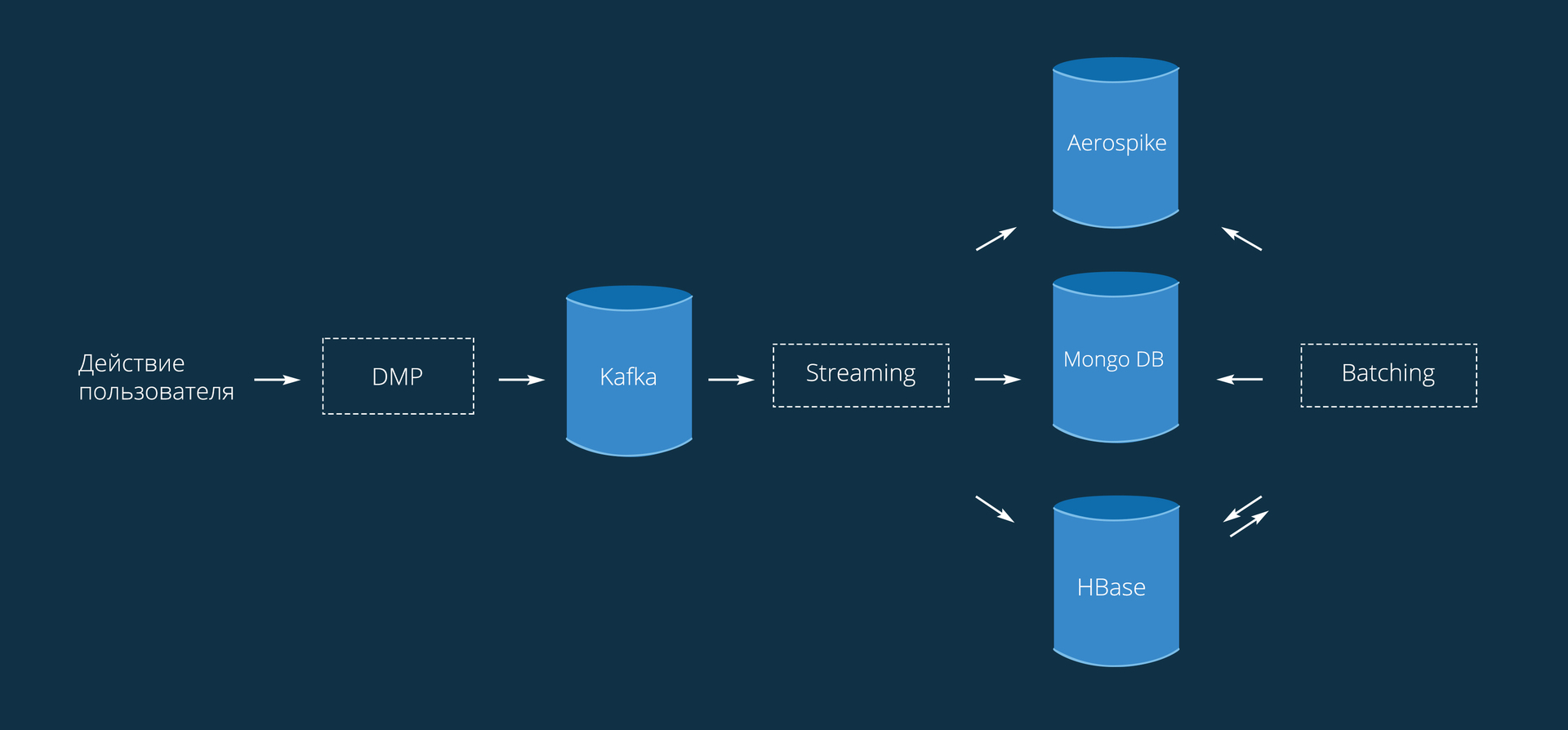

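The overall process is as follows.

Data arrives from the SmartPixels installed on sites. We won't show that code: it is very simple and similar to external analytics counters. From there, the data has the form {Visitor_Id:Action}. An action here means a target action such as viewing a page or product, adding to the basket, making a purchase, or a custom action configured by the advertiser.

Remarketing processing consists of two main modules:

- Stream processing (streaming);

- Batch processing.

Stream processing

This module adds users to audiences in real time. We use Spark Streaming with a 10-second processing interval, so a user is added to an audience almost immediately (within those 10 seconds) after completing an action. Note that in streaming mode we tolerate the loss of a small amount of data, caused by database latency ("ping") or other reasons.

The key issue is the balance between response time and throughput. The smaller the batchInterval, the faster data is processed, but more time goes into initializing connections and other overhead, so less work gets done per batch. A larger interval lets us process a lot of data at once, but more valuable time passes between the action and the moment the user is added to the target audience.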
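One way to make this trade-off concrete is a deliberately simplified model (our assumption, not a measurement of this system): every batch pays a fixed overhead o seconds (connections, scheduling) plus c seconds per event, and events arrive at r per second. A batch covering an interval of B seconds holds r·B events, so the stream keeps up only if o + c·r·B ≤ B, that is B ≥ o / (1 − c·r). The hypothetical helpers below compute that lower bound and the resulting worst-case delay.

```java
// Simplified micro-batch model (assumption: cost per batch = fixed overhead
// + linear per-event cost). All names are hypothetical.
final class BatchIntervalModel {
    /**
     * Smallest batch interval (seconds) at which processing keeps up with input,
     * or +infinity if the per-event work alone exceeds real time (c * r >= 1).
     */
    static double minStableIntervalSec(double overheadSec, double perEventSec, double eventsPerSec) {
        double load = perEventSec * eventsPerSec;   // seconds of work per second of input
        if (load >= 1.0) {
            return Double.POSITIVE_INFINITY;        // no interval can keep up
        }
        return overheadSec / (1.0 - load);
    }

    /**
     * Worst-case delay from an action to audience membership: an event arriving
     * just after a batch boundary waits a full interval, then the batch's
     * processing time (overhead + per-event work for interval * rate events).
     */
    static double worstCaseLatencySec(double intervalSec, double overheadSec,
                                      double perEventSec, double eventsPerSec) {
        return intervalSec + overheadSec + perEventSec * eventsPerSec * intervalSec;
    }
}
```

With made-up numbers o = 2 s, c = 1 ms and r = 500 events/s, the stream needs B ≥ 4 s, and with B = 10 s the worst-case delay is about 17 s. Real constants would have to be measured on the cluster.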

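Reading events from Kafka:

To create a message stream, you pass the context, the required topics, and the name of the consumer group (jssc, topics, and groupId respectively). Each group keeps its own offset in the message queue for each topic. You can also create several receivers to spread the load across servers. All data transformations are specified in transformFunction and run in the same stream as the receiver.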

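```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Map;

import kafka.serializer.DefaultDecoder;
import kafka.serializer.StringDecoder;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.function.Function;
import org.apache.spark.storage.StorageLevel;
import org.apache.spark.streaming.api.java.JavaDStream;
import org.apache.spark.streaming.api.java.JavaPairReceiverInputDStream;
import org.apache.spark.streaming.api.java.JavaStreamingContext;
import org.apache.spark.streaming.kafka.KafkaUtils;

public class StreamUtil {
    // Decode MsgPack payloads into Event objects and drop the ones that fail to parse
    private static final Function<JavaPairRDD<String, byte[]>, JavaRDD<Event>> eventTransformFunction =
            rdd -> rdd.map(t -> Event.parseFromMsgPack(t._2())).filter(e -> e != null);

    // One receiver-based Kafka stream; the consumer group is identified by group.id
    public static JavaPairReceiverInputDStream<String, byte[]> createStream(
            JavaStreamingContext jsc, String groupId, Map<String, Integer> topics) {
        Map<String, String> prop = new HashMap<>();
        prop.put("zookeeper.connect", BaseUtil.KAFKA_ZK_QUORUM);
        prop.put("group.id", groupId);
        return KafkaUtils.createStream(jsc, String.class, byte[].class,
                StringDecoder.class, DefaultDecoder.class, prop, topics,
                StorageLevel.MEMORY_ONLY_SER());
    }

    public static JavaDStream<Event> getEventsStream(JavaStreamingContext jssc, String groupName,
                                                     Map<String, Integer> map, int count) {
        return getStream(jssc, groupName, map, count, eventTransformFunction);
    }

    public static <T> JavaDStream<T> getStream(JavaStreamingContext jssc, String groupName,
            Map<String, Integer> map,
            Function<JavaPairRDD<String, byte[]>, JavaRDD<T>> transformFunction) {
        return createStream(jssc, groupName, map).transform(transformFunction);
    }

    // Create `count` receivers in the same group and union their streams
    public static <T> JavaDStream<T> getStream(JavaStreamingContext jssc, String groupName,
            Map<String, Integer> map, int count,
            Function<JavaPairRDD<String, byte[]>, JavaRDD<T>> transformFunction) {
        if (count < 2) return getStream(jssc, groupName, map, transformFunction);
        ArrayList<JavaDStream<T>> list = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            list.add(getStream(jssc, groupName, map, transformFunction));
        }
        return jssc.union(list.get(0), list.subList(1, count));
    }
}
```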

Event processing:

Creating the context

```java
// Fields of the streaming builder; sparkConf, batchInterval, topicName, groupName,
// numReceivers and numWorkCores are configured elsewhere.
public volatile JavaPairRDD<String, Condition> conditions; // replaced by the update timer
private JavaStreamingContext jssc;
private Map<Object, HyperLogLog> hlls; // also read by flushHlls() on a timer thread

public JavaStreamingContext create() {
    sparkConf.setAppName("UniversalStreamingBuilder");
    sparkConf.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer");
    sparkConf.set("spark.storage.memoryFraction", "0.125");
    jssc = new JavaStreamingContext(sparkConf, batchInterval);

    Map<String, Integer> map = new HashMap<>();
    map.put(topicName, 1); // Kafka topic name -> number of consumer threads
    JavaDStream<Event> events = StreamUtil.getEventsStream(jssc, groupName, map, numReceivers)
            .repartition(numWorkCores);

    updateConditions();
    events.foreachRDD(ev -> {
        // Compute audiences: join on pixel_id, keep events that satisfy the
        // condition, emit distinct (visitorId, audienceId) pairs
        JavaPairRDD<String, Object> rawva = conditions.join(ev.keyBy(t -> t.pixelId))
                .mapToPair(t -> t._2())
                .filter(t -> EventActionUtil.checkEvent(t._2(), t._1().condition))
                .mapToPair(t -> new Tuple2<>(t._2().visitorId, t._1().id))
                .distinct()
                .persist(StorageLevel.MEMORY_ONLY_SER())
                .setName("RawVisitorAudience");

        // Update HyperLogLogs: one sketch per audience, merged into the driver-side map
        rawva.mapToPair(t -> t.swap())
                .groupByKey()
                .mapToPair(t -> {
                    HyperLogLog hll = new HyperLogLog();
                    t._2().forEach(v -> hll.offer(v));
                    return new Tuple2<>(t._1(), hll);
                })
                .collectAsMap()
                .forEach((k, v) -> hlls.merge(k, v, (h1, h2) -> HyperLogLog.merge(h1, h2)));

        // Save to Aerospike and HBase
        save(rawva);
        rawva.unpersist(); // release this batch's cached RDD
        return null;       // this foreachRDD form takes a Function<..., Void>
    });
    return jssc;
}
```

Here a join on pixel_id combines the two RDDs (Resilient Distributed Datasets), events and conditions. The save method is a stub, to keep the published code short; in reality it performs several transformations and writes the results to storage.
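To show what the join, filter and distinct chain computes, here is the same pipeline over plain Java collections as a hash join. This is a sketch: the SimpleEvent and SimpleCondition classes and matching by a single required action string are our simplifying assumptions, standing in for the article's Event, Condition and EventActionUtil.checkEvent, whose code is not shown.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical, simplified stand-ins for the article's Event and Condition types
final class SimpleEvent {
    final String pixelId, visitorId, action;
    SimpleEvent(String pixelId, String visitorId, String action) {
        this.pixelId = pixelId; this.visitorId = visitorId; this.action = action;
    }
}

final class SimpleCondition {
    final String pixelId, audienceId, requiredAction;
    SimpleCondition(String pixelId, String audienceId, String requiredAction) {
        this.pixelId = pixelId; this.audienceId = audienceId; this.requiredAction = requiredAction;
    }
}

final class PixelJoin {
    // Inner join on pixel_id, keep matching events, emit distinct [visitorId, audienceId]
    static Set<List<String>> visitorAudiences(List<SimpleCondition> conditions, List<SimpleEvent> events) {
        // Build side: pixel_id -> all conditions registered for that pixel
        Map<String, List<SimpleCondition>> byPixel = new HashMap<>();
        for (SimpleCondition c : conditions) {
            byPixel.computeIfAbsent(c.pixelId, k -> new ArrayList<>()).add(c);
        }
        Set<List<String>> out = new LinkedHashSet<>(); // a set plays the role of distinct()
        for (SimpleEvent e : events) {
            for (SimpleCondition c : byPixel.getOrDefault(e.pixelId, List.of())) {
                if (c.requiredAction.equals(e.action)) { // stand-in for checkEvent
                    out.add(List.of(e.visitorId, c.audienceId));
                }
            }
        }
        return out;
    }
}
```

Events whose pixel has no conditions simply produce no pairs, which is the inner-join behaviour of `conditions.join(...)`.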

Launch

```java
public void run() {
    create();
    jssc.start();

    // Periodically reload the audience conditions (daemon timer)
    long millis = TimeUnit.MINUTES.toMillis(CONDITION_UPDATE_PERIOD_MINUTES);
    new Timer(true).schedule(new TimerTask() {
        @Override
        public void run() {
            updateConditions();
        }
    }, millis, millis);

    // Periodically flush the accumulated HyperLogLogs, starting at saveHllsStartTime
    new Timer(false).scheduleAtFixedRate(new TimerTask() {
        @Override
        public void run() {
            flushHlls();
        }
    }, new Date(saveHllsStartTime), TimeUnit.MINUTES.toMillis(HLLS_UPDATE_PERIOD_MINUTES));

    // Block until the streaming context is stopped
    jssc.awaitTermination();
}
```

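First the context is created and started. In parallel, two timers are started: one updates the conditions and the other saves the HyperLogLogs. Be sure to call awaitTermination() at the end; otherwise the application finishes without ever processing anything.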
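The article does not show its HyperLogLog class. To illustrate what the per-batch sketches and `hlls.merge(...)` do, here is a minimal self-contained HyperLogLog; it is our own sketch, not the class used in the project. It uses 1,024 registers, a 64-bit FNV-1a hash followed by the MurmurHash3 fmix64 finalizer, linear counting for small sets, and merge as the register-wise maximum.

```java
import java.nio.charset.StandardCharsets;

// Minimal HyperLogLog for approximate distinct visitor counts per audience (illustrative)
final class SimpleHll {
    private static final int P = 10;     // 2^10 = 1024 registers
    private static final int M = 1 << P;
    private final byte[] registers = new byte[M];

    // 64-bit FNV-1a over UTF-8 bytes, then MurmurHash3's fmix64 to spread the bits
    private static long hash(String s) {
        long h = 0xcbf29ce484222325L;
        for (byte b : s.getBytes(StandardCharsets.UTF_8)) {
            h ^= (b & 0xff);
            h *= 0x100000001b3L;
        }
        h ^= h >>> 33;
        h *= 0xff51afd7ed558ccdL;
        h ^= h >>> 33;
        h *= 0xc4ceb9fe1a85ec53L;
        h ^= h >>> 33;
        return h;
    }

    void offer(String value) {
        long h = hash(value);
        int idx = (int) (h >>> (64 - P));   // top P bits choose the register
        long rest = h << P;                 // remaining 64 - P bits
        int rank = Math.min(Long.numberOfLeadingZeros(rest), 64 - P) + 1;
        if (rank > registers[idx]) {
            registers[idx] = (byte) rank;   // keep the longest run of leading zeros
        }
    }

    long cardinality() {
        double sum = 0;
        int zeros = 0;
        for (byte r : registers) {
            sum += Math.pow(2, -r);
            if (r == 0) zeros++;
        }
        double alpha = 0.7213 / (1 + 1.079 / M);
        double estimate = alpha * M * M / sum;
        if (estimate <= 2.5 * M && zeros > 0) {
            estimate = M * Math.log((double) M / zeros); // linear counting for small sets
        }
        return Math.round(estimate);
    }

    // Union of two sketches: register-wise maximum
    static SimpleHll merge(SimpleHll a, SimpleHll b) {
        SimpleHll out = new SimpleHll();
        for (int i = 0; i < M; i++) {
            out.registers[i] = (byte) Math.max(a.registers[i], b.registers[i]);
        }
        return out;
    }
}
```

Because merging is a maximum, folding each batch's sketch into the running map is order-independent and idempotent: a visitor seen in many batches still counts once, at a fixed memory cost per audience (about 3% standard error with 1,024 registers).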

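Batch processing

We rebuild all audiences once a day, which solves the problem of stale and lost data. Remarketing has one feature users find unpleasant: ads that follow them obsessively. This is where the lookback window comes in. For each user we store the date they were added to an audience, which lets us control how relevant the information still is for that user.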

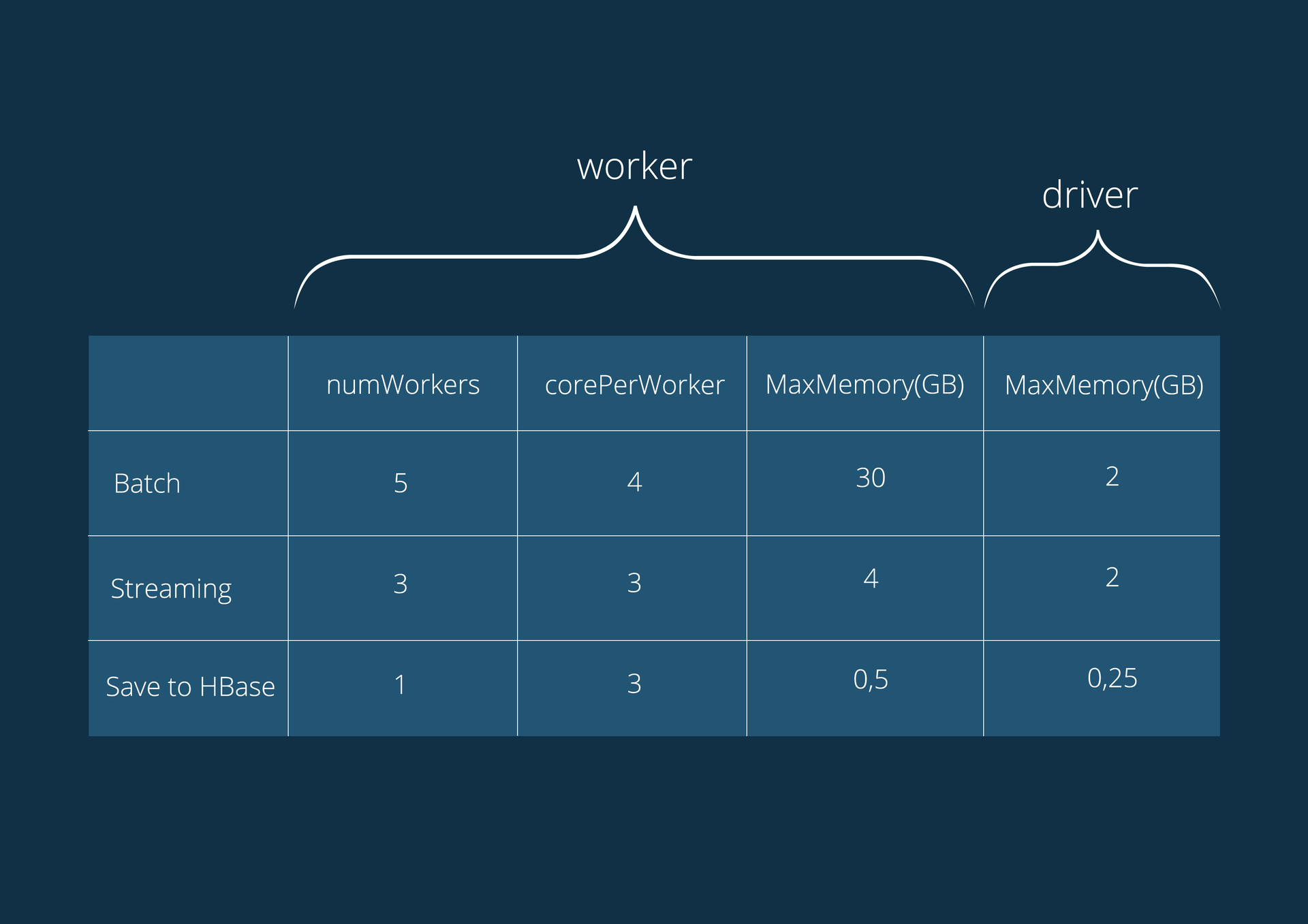

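A rebuild takes 1.5 to 2 hours, depending on network load. Most of that time goes into saving to databases: loading, processing and writing to Aerospike takes 75 minutes (run as a single pipeline), and the rest is spent saving to HBase and Mongo (35 minutes).

Batch processing code:

This is almost the same as the stream processing, but no join is used. Instead, each event is checked against the list of conditions for the same pixel_id. As it turned out, this design needs less memory and is faster.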

```java
// Scan all events from HBase; for each event, look up the conditions of its pixel
// (no join) and keep (audienceId, visitorId, eventTime) for matching events that
// fall inside the condition's lookback window.
JavaRDD<Tuple3<Object, String, Long>> av = HbaseUtil.getEventsHbaseScanRdd(jsc, hbaseConf, new Scan())
        .mapPartitions(it -> {
            ArrayList<Tuple3<Object, String, Long>> list = new ArrayList<>();
            it.forEachRemaining(e -> {
                String pixelId = e.pixelId;
                String vid = e.visitorId;
                long dt = e.date.getTime();
                List<Condition> cond = conditions.get(pixelId);
                if (cond != null) {
                    cond.stream()
                        .filter(condition -> dt > beginTime - TimeUnit.DAYS.toMillis(condition.daysInterval)
                                && EventActionUtil.checkEvent(e, condition.condition))
                        .forEach(condition -> list.add(new Tuple3<>(condition.id, vid, dt)));
                }
            });
            return list;
        })
        .persist(StorageLevel.DISK_ONLY())
        .setName("RawVisitorAudience");
```
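The per-event lookup and the lookback test can be checked without a cluster. The sketch below restates them over plain Java collections; the LookbackCondition, StoredEvent and AudienceHit classes, and matching on a single required action, are hypothetical simplifications of the article's types and of EventActionUtil.checkEvent.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.TimeUnit;

// Hypothetical, simplified types; the article's Condition and Event classes are not shown
final class LookbackCondition {
    final String audienceId;
    final int daysInterval;       // lookback window in days
    final String requiredAction;  // stand-in for EventActionUtil.checkEvent
    LookbackCondition(String audienceId, int daysInterval, String requiredAction) {
        this.audienceId = audienceId; this.daysInterval = daysInterval; this.requiredAction = requiredAction;
    }
}

final class StoredEvent {
    final String pixelId, visitorId, action;
    final long timeMillis;
    StoredEvent(String pixelId, String visitorId, String action, long timeMillis) {
        this.pixelId = pixelId; this.visitorId = visitorId; this.action = action; this.timeMillis = timeMillis;
    }
}

final class AudienceHit {
    final String audienceId, visitorId;
    final long timeMillis;
    AudienceHit(String audienceId, String visitorId, long timeMillis) {
        this.audienceId = audienceId; this.visitorId = visitorId; this.timeMillis = timeMillis;
    }
}

final class BatchMatcher {
    // Map-side lookup instead of a join: each event is checked only against
    // the conditions registered for its own pixel_id
    static List<AudienceHit> match(Map<String, List<LookbackCondition>> conditionsByPixel,
                                   List<StoredEvent> events, long beginTime) {
        List<AudienceHit> hits = new ArrayList<>();
        for (StoredEvent e : events) {
            List<LookbackCondition> conds = conditionsByPixel.get(e.pixelId);
            if (conds == null) continue; // pixel has no audiences
            for (LookbackCondition c : conds) {
                // Same window test as the batch job: newer than beginTime minus the lookback
                boolean inWindow = e.timeMillis > beginTime - TimeUnit.DAYS.toMillis(c.daysInterval);
                if (inWindow && c.requiredAction.equals(e.action)) {
                    hits.add(new AudienceHit(c.audienceId, e.visitorId, e.timeMillis));
                }
            }
        }
        return hits;
    }
}
```

With beginTime at day 100 and a 30-day window, an event from day 80 is kept and one from day 60 is dropped.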

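Saving to databases

Saving from Kafka to HBase was originally part of the streaming service, but because of possible failures and crashes we moved it into a separate application. For fault tolerance we used the Kafka Reliable Receiver, which lets us avoid losing data. Checkpoints store the metadata and the current data.

HBase currently holds about 400 million records. All events are stored in the database for 180 days and then removed by TTL.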
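HBase column-family TTLs are expressed in seconds, so 180 days corresponds to 180 × 86,400 = 15,552,000 s. A tiny hypothetical helper for that conversion is below; the commented-out call shows where such a value would typically go in the HBase 1.x client API (`HColumnDescriptor.setTimeToLive(int)`), with a made-up column family name "e". It is not the authors' code.

```java
import java.util.concurrent.TimeUnit;

// Converts a retention period in days into the seconds-based TTL HBase expects
final class TtlUtil {
    static int ttlSeconds(int days) {
        // toIntExact fails loudly on overflow, since the HBase setter takes an int
        return Math.toIntExact(TimeUnit.DAYS.toSeconds(days));
    }

    // Illustrative use (hypothetical family name "e"):
    // new HColumnDescriptor("e").setTimeToLive(TtlUtil.ttlSeconds(180));
}
```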

Using the Reliable Receiver:

First, implement the create() method of the JavaStreamingContextFactory interface and add the following lines when creating the context:

```java
sparkConf.set("spark.streaming.receiver.writeAheadLog.enable", "true");
jssc.checkpoint(checkpointDir);
```

Then, instead of:

```java
JavaStreamingContext jssc = new JavaStreamingContext(sparkConf, batchInterval);
```

use:

```java
JavaStreamingContext jssc = JavaStreamingContext.getOrCreate(checkpointDir, new ());
```

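Saving to Aerospike is done with a native OutputFormat and Lua scripts. To use the asynchronous client, we had to add two classes to the official connector (fork).

Running a Lua script:

This asynchronously executes a function from the specified package.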

```java
public class UpdateListOutputFormat
        extends com.aerospike.hadoop.mapreduce.AerospikeOutputFormat<String, Bin> {

    private static final Log LOG = LogFactory.getLog(UpdateListOutputFormat.class);

    public static class LuaUdfRecordWriter extends AsyncRecordWriter<String, Bin> {

        public LuaUdfRecordWriter(Configuration cfg, Progressable progressable) {
            super(cfg, progressable);
        }

        @Override
        public void writeAerospike(String key, Bin bin, AsyncClient client, WritePolicy policy,
                                   String ns, String sn) throws IOException {
            try {
                policy.sendKey = true; // store the user key together with the record
                Key k = new Key(ns, sn, key);
                Value name = Value.get(bin.name);
                Value value = bin.value;
                // UDF arguments: bin name, new values, current time in seconds
                Value[] args = new Value[]{name, value, Value.get(System.currentTimeMillis() / 1000)};
                String packName = AeroUtil.getPackage(cfg);
                String funcName = AeroUtil.getFunction(cfg);
                // Execute the Lua UDF asynchronously (the listener argument is null)
                client.execute(policy, null, k, packName, funcName, args);
            } catch (Exception e) {
                LOG.error("Wrong put operation", e);
            }
        }
    }

    @Override
    public RecordWriter<String, Bin> getAerospikeRecordWriter(Configuration entries,
                                                              Progressable progressable) {
        return new LuaUdfRecordWriter(entries, progressable);
    }
}
```

As an example, here is a function that adds new values to a list.

Lua script:

```lua
-- Split "audience_id,timestamp" at the last comma into {audience_id, timestamp}
local split = function(str)
  local tbl = list()
  local start, fin = string.find(str, ",[^,]+$")
  list.append(tbl, string.sub(str, 1, start - 1))
  list.append(tbl, string.sub(str, start + 1, fin))
  return tbl
end

-- Write the map back to the bin as a list of "key,value" strings
local save_record = function(rec, name, mp)
  local res = list()
  for k, v in map.pairs(mp) do
    list.append(res, k .. "," .. v)
  end
  rec[name] = res
  if aerospike:exists(rec) then
    return aerospike:update(rec)
  else
    return aerospike:create(rec)
  end
end

-- Add new ids with the given timestamp; ids already stored keep their old timestamp
function put_in_list_first_ts(rec, name, value, timestamp)
  local lst = rec[name]
  local mp = map()
  if value ~= nil then
    if list.size(value) > 0 then
      for i in list.iterator(value) do
        mp[i] = timestamp
      end
    end
  end
  if lst ~= nil then
    if list.size(lst) > 0 then
      for i in list.iterator(lst) do
        local sp = split(i)
        mp[sp[1]] = sp[2]
      end
    end
  end
  return save_record(rec, name, mp)
end
```

This script adds new entries of the form "audience_id,timestamp" to the audience list. If an entry for an audience already exists, its timestamp stays the same.
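To unit-test this merge rule without an Aerospike server, the same first-timestamp-wins logic can be restated in plain Java. This is a sketch that mirrors the Lua code above, not part of the authors' project; the class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java restatement of put_in_list_first_ts: merge new audience ids into a
// stored "audience_id,timestamp" list, keeping the first timestamp seen for each id
final class FirstTimestampList {
    static List<String> putFirstTs(List<String> stored, List<String> newIds, long timestamp) {
        Map<String, String> byId = new LinkedHashMap<>();
        for (String id : newIds) {
            byId.put(id, Long.toString(timestamp));
        }
        // Existing entries overwrite the new timestamp, exactly as in the Lua script.
        // Assumes every stored entry contains a comma.
        for (String entry : stored) {
            int comma = entry.lastIndexOf(','); // split at the last comma, like split()
            byId.put(entry.substring(0, comma), entry.substring(comma + 1));
        }
        List<String> result = new ArrayList<>();
        byId.forEach((id, ts) -> result.add(id + "," + ts));
        return result;
    }
}
```

For a stored list ["a1,100"], new ids [a1, a2] and timestamp 500, the result is ["a1,100", "a2,500"]: a2 is added, while a1 keeps its original timestamp.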

Specifications of the servers the application runs on:

- Intel Xeon E5-1650, 6 cores, 3.50 GHz (HT), 64 GB DDR3-1600;

- Operating system: CentOS 6;

- CDH version 5.4.0.

Application configuration:

Conclusion

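On the way to this implementation we tried several options (C#, Hadoop MapReduce, Spark) and ended up with a tool that handles both stream processing and the recomputation of huge data sets equally well. Partially implementing the Lambda Architecture increased code reuse. The time for a full rebuild of the audiences dropped from tens of hours to a dozen or so minutes. And horizontal scaling has become easier than ever.

You can try our technology at any time on the Hybrid platform.

PS

Many thanks to DanilaPerepechin for invaluable help in writing this article.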