RTSP is a simple signaling protocol that nothing has replaced for many years, and, admittedly, nobody is trying very hard to replace it.

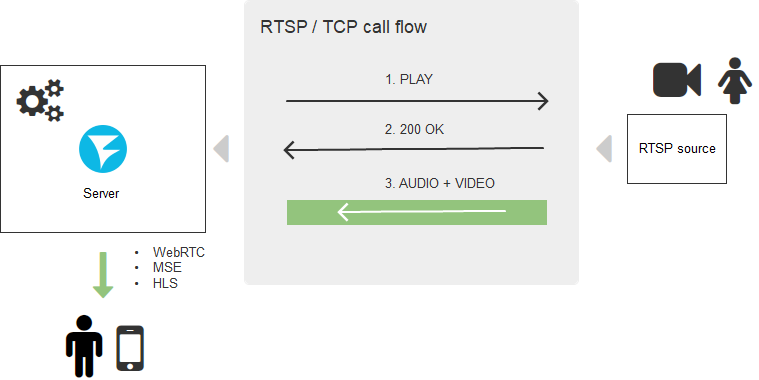

Let's say we have an IP camera with RTSP support. Anyone who has sniffed its traffic with Wireshark will tell you that DESCRIBE goes first (followed by SETUP for each track), then PLAY, after which the media pours in either directly over RTP or interleaved in the same TCP connection.

A typical RTSP connection setup looks like this:
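RTSP requests are plain text, much like HTTP/1.1. The sketch below only formats the requests of a typical playback exchange (RFC 2326); it does not open a socket or parse responses. The camera URL, the `trackID=0` path and the session ID are hypothetical placeholders: a real client takes the track URL from the SDP returned by DESCRIBE and the `Session` value from the SETUP reply.

```javascript
// Format one RTSP/1.0 request. Lines end with CRLF and the header block
// is terminated by an empty line, as in HTTP/1.1.
function rtspRequest(method, url, cseq, headers = {}) {
  const lines = [`${method} ${url} RTSP/1.0`, `CSeq: ${cseq}`];
  for (const [name, value] of Object.entries(headers)) {
    lines.push(`${name}: ${value}`);
  }
  return lines.join("\r\n") + "\r\n\r\n";
}

// Typical playback sequence. URL, track path and session ID are
// hypothetical placeholders (see the note above).
function playbackSequence(url, sessionId) {
  return [
    rtspRequest("OPTIONS", url, 1),
    rtspRequest("DESCRIBE", url, 2, { Accept: "application/sdp" }),
    // Ask for RTP/RTCP interleaved in the same TCP connection (channels 0 and 1)
    rtspRequest("SETUP", `${url}/trackID=0`, 3, {
      Transport: "RTP/AVP/TCP;unicast;interleaved=0-1",
    }),
    rtspRequest("PLAY", url, 4, { Session: sessionId, Range: "npt=0.000-" }),
  ];
}
```

Each string returned by `playbackSequence("rtsp://camera.example/stream", "12345678")` would be written to the camera's RTSP port in turn, waiting for the reply to each one before sending the next.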

Clearly, browsers need RTSP support about as much as a dog needs a fifth leg, so browser vendors are in no hurry to implement it at scale and are unlikely ever to do so. On the other hand, browsers could open direct TCP connections, which would solve the problem, but here everything runs into security: have you ever seen a browser that lets a script use transport protocols directly?

But people want a stream that plays “in any browser without installing additional software”, and when startups want to show a stream from an IP camera, they write on their sites “you don’t need to install anything: it works in all browsers out of the box”.

In this article, we will figure out how to achieve this. And since this is a round-number year, we'll stay topical and put a 2020 label on the article, especially since it really is 2020.

So, which technology for displaying video on a web page should be forgotten in 2020? Flash in the browser. It died. It is no more; cross it out.

Three good ways

The tools that will allow you to watch the video stream in the browser today are:

- WebRTC

- HLS

- WebSocket + MSE

What's wrong with WebRTC

Two words: resource-intensive and complex.

“Resource-intensive? So what?” you may say, waving it off. “Processors are powerful today and memory is cheap, what's the problem?” Firstly, all traffic is forcibly encrypted, even if you don't need it. Secondly, WebRTC involves complex two-way communication and a constant exchange of feedback about channel quality between the peers (in this case, between the peer and the server): the bitrate is recalculated at every moment, packet losses are tracked, decisions are made about retransmitting lost packets, and on top of all that, audio and video are synchronized (so-called lip sync) so that the speaker's lips stay in step with his words. All these calculations, together with the incoming traffic, allocate and free gigabytes of RAM in real time, and if something goes wrong, a 256 GB server with 48 CPU cores can easily go into a tailspin despite all its gigahertz, nanometers and DDR 10 on board.
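To give a feel for just one of these calculations, here is a rough sketch of how a receiver can estimate packet loss from 16-bit RTP sequence numbers, in the spirit of the receiver statistics in RFC 3550. It is a simplified illustration that assumes packets arrive in order without duplicates; it is not WebRTC's actual implementation, which does this continuously for every stream alongside bitrate estimation, jitter tracking and lip sync.

```javascript
// Estimate loss from 16-bit RTP sequence numbers received in order.
// Assumption: no reordering and no duplicates.
function lossStats(seqNumbers) {
  if (seqNumbers.length === 0) {
    return { expected: 0, received: 0, lost: 0, fractionLost: 0 };
  }
  let extended = 0; // distance of the newest packet from the first one
  let prev = seqNumbers[0];
  for (const seq of seqNumbers.slice(1)) {
    // Forward distance modulo 2^16 handles the 65535 -> 0 wraparound.
    extended += (seq - prev + 0x10000) % 0x10000;
    prev = seq;
  }
  const expected = extended + 1;
  const received = seqNumbers.length;
  const lost = expected - received;
  return { expected, received, lost, fractionLost: lost / expected };
}
```

For example, `lossStats([65533, 65534, 65535, 0, 2, 3])` reports 7 expected, 6 received and 1 lost packet (sequence number 1), despite the counter wrapping around.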

It amounts to shooting at sparrows with an Iskander missile. We just need to pull the RTSP stream and show it, and WebRTC says: sure, go ahead, but you'll have to pay for it.

What is good about WebRTC

Latency. It really is low. If you are willing to pay for low latency with performance and complexity, WebRTC is the best option.

What is good about HLS

Two words: works everywhere

HLS is the slowest all-terrain vehicle in the world of live content delivery. It works everywhere thanks to two things: HTTP transport and Apple's patronage. Indeed, HTTP is ubiquitous; I can feel its presence even as I write these lines. So wherever you are and however ancient the tablet you browse on, HLS (HTTP Live Streaming) will reach you and deliver the video to your screen, rest assured.

And all would be well, but...

What is bad about HLS

Latency. Take, for example, video surveillance projects for construction sites. The building goes up for years, and all that time an unfortunate camera films the site day and night, 24 hours a day. This is an example where low latency is not needed.

Another example is wild boars. Real boars. Ohio farmers suffer from an onslaught of wild boars which, like locusts, eat and trample the crops and threaten the farms' finances. Enterprising startups launched a video surveillance system with RTSP cameras that monitors the land in real time and drops a trap when uninvited guests invade. In this case low latency is critical: with HLS (and its 15-second delay), the boars will run away before the trap is triggered.

Another example: video presentations where you demonstrate a product and expect a prompt response. With a long delay, the presenter shows the product to the camera and asks “How do you like it?”, and the question reaches the viewer only 15 seconds later. In 15 seconds, a radio signal could travel to the Moon and back almost six times. No, we don't need that kind of delay. It looks more like a pre-recorded video than a live broadcast. But there is nothing surprising about it; this is how HLS works: it writes pieces of video to disk or to server memory, and the player downloads the recorded pieces. The result is HTTP Live that is not live at all.
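To make the segment mechanics concrete, here is a sketch of the kind of sliding-window live media playlist (RFC 8216) that an HLS server keeps rewriting as new segments are recorded; the player re-downloads it and then fetches the listed segments over plain HTTP. The segment file names and durations are hypothetical.

```javascript
// Build a live HLS media playlist for a non-empty sliding window of segments.
// Each segment is { uri, duration } with the duration in seconds.
// No #EXT-X-ENDLIST tag is written, which tells the player the stream is live.
function liveMediaPlaylist(segments, firstSequence) {
  // The target duration must be at least every segment's rounded duration.
  const targetDuration = Math.ceil(Math.max(...segments.map((s) => s.duration)));
  const lines = [
    "#EXTM3U",
    "#EXT-X-VERSION:3", // version 3 allows fractional EXTINF durations
    `#EXT-X-TARGETDURATION:${targetDuration}`,
    `#EXT-X-MEDIA-SEQUENCE:${firstSequence}`, // sequence number of the first listed segment
  ];
  for (const seg of segments) {
    lines.push(`#EXTINF:${seg.duration.toFixed(3)},`, seg.uri);
  }
  return lines.join("\n") + "\n";
}
```

Every time a new segment is finished, the oldest one drops out of the window and the media sequence number goes up by one; a segment only becomes visible to players once it has been completely written.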

Why does this happen? The ubiquity and simplicity of HTTP ultimately turn into sluggishness: HTTP was never designed for quickly downloading and playing thousands of large pieces of video (HLS segments). They do download and play in good quality, but slowly: by the time a segment is downloaded, buffered and passed to the decoder, those same 15 seconds or more have gone by.
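A back-of-the-envelope calculation shows where much of that time comes from. RFC 8216 advises that a client should not start playback from a segment that begins less than three target durations from the end of the playlist. The sketch below applies that rule to a playlist of segment durations; the 5-second segments in the example are an assumption, and download, buffering and decoding time come on top of the result.

```javascript
// Given the segment durations currently in a live playlist (oldest first)
// and its target duration, find the latest segment that starts at least
// three target durations before the end of the playlist.
function hlsStartPoint(durations, targetDuration) {
  const total = durations.reduce((a, b) => a + b, 0);
  let start = 0;      // start time of segment i within the playlist
  let chosen = 0;     // index of the segment to start playback from
  let behind = total; // how far that segment starts before the playlist end
  for (let i = 0; i < durations.length; i++) {
    const fromEnd = total - start;
    if (fromEnd >= 3 * targetDuration) {
      chosen = i;
      behind = fromEnd;
    }
    start += durations[i];
  }
  return { index: chosen, secondsBehindLiveEdge: behind };
}
```

With six 5-second segments, `hlsStartPoint([5, 5, 5, 5, 5, 5], 5)` returns index 3, which starts 15 seconds before the newest complete segment ends, before a single byte has been downloaded.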

It should be noted that in 2019 Apple announced Low-Latency HLS, but that's another story. We'll look at how that turned out in more detail later. And we still have MSE in stock.

What is good about MSE

Media Source Extensions (MSE) is native browser support for playing back video delivered in pieces. You could call it a native H.264 and AAC player that you feed video segments to and that, unlike HLS, is not tied to a transport protocol. This means the transport can be WebSocket. In other words, segments are no longer downloaded with the ancient request-response technique of HTTP but flow continuously through a WebSocket connection, which is almost a direct TCP channel. This helps a lot with latency, which can be brought down to 3-5 seconds. That is not super low, but it suits most projects that do not need hard real time. Complexity and resource consumption are also relatively low: a TCP channel opens, and segments much like HLS ones flow through it, to be collected by the player and queued for playback.
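Roughly, the browser side of WebSocket + MSE could look like the sketch below. It assumes a hypothetical server at `wss://example.com/stream` that sends fragmented MP4 as binary WebSocket messages (initialization segment first, then media segments) with H.264 Baseline video and AAC-LC audio; the URL, message format and codec string are assumptions, not part of WCS or any specific server. The part that bites in practice is the queue: `SourceBuffer.appendBuffer()` must not be called while a previous append is still in progress, so segments that arrive early have to wait for the `updateend` event.

```javascript
// Append segments to a SourceBuffer one at a time. appendBuffer() throws if
// it is called while sourceBuffer.updating is true, so extra segments are
// queued and appended from the 'updateend' handler.
function createSegmentQueue(sourceBuffer) {
  const pending = [];
  function pump() {
    if (sourceBuffer.updating || pending.length === 0) return;
    sourceBuffer.appendBuffer(pending.shift());
  }
  sourceBuffer.addEventListener("updateend", pump);
  return function enqueue(segment) {
    pending.push(segment);
    pump();
  };
}

// Browser-only wiring (hypothetical URL and stream format, see above).
function playOverWebSocket(videoElement, wsUrl) {
  const mime = 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"';
  if (!window.MediaSource || !MediaSource.isTypeSupported(mime)) {
    throw new Error("MSE or this codec combination is not supported");
  }
  const mediaSource = new MediaSource();
  videoElement.src = URL.createObjectURL(mediaSource);
  mediaSource.addEventListener("sourceopen", () => {
    const enqueue = createSegmentQueue(mediaSource.addSourceBuffer(mime));
    const ws = new WebSocket(wsUrl);
    ws.binaryType = "arraybuffer"; // receive segments as ArrayBuffer
    ws.onmessage = (event) => enqueue(event.data);
  });
}
```

Usage would be something like `playOverWebSocket(document.querySelector("video"), "wss://example.com/stream")`. A long-running live player would also need to trim old data with `sourceBuffer.remove()` so the buffer does not grow without bound.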

What is bad about MSE

It does not work everywhere. As with WebRTC, its browser coverage is narrower. iPhones (iOS) are particularly notorious for not playing MSE, which makes MSE unsuitable as the only solution for any startup.

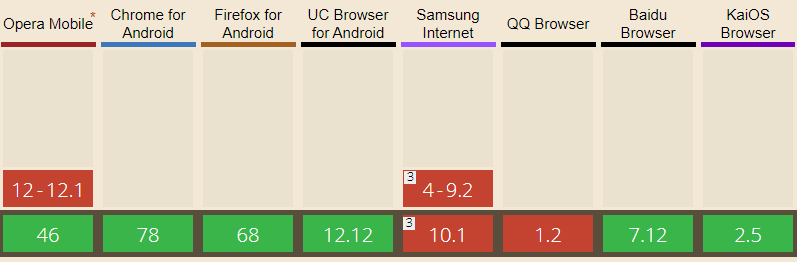

Fully supported in: Edge, Firefox, Chrome, Safari, Android Browser, Opera Mobile, Chrome for Android, Firefox for Android, UC Browser for Android.

iOS Safari gained limited MSE support only recently, starting with iOS 13.
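Given this patchy support, a player usually checks capabilities at runtime and falls back to HLS where MSE is missing. A minimal sketch, assuming the preference order WebRTC (only when low latency is required), then MSE, then native HLS; `env` (normally `window`) and `video` (an `HTMLVideoElement`) are passed in only so the function can be exercised outside a browser, and the codec string is an example.

```javascript
// Pick a playback technology from what the browser supports.
// env is normally `window`; video is an HTMLVideoElement.
function choosePlayback(env, video, needLowLatency) {
  const mime = 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"';
  if (needLowLatency && typeof env.RTCPeerConnection === "function") {
    return "webrtc";
  }
  if (env.MediaSource && env.MediaSource.isTypeSupported(mime)) {
    return "mse";
  }
  // canPlayType() returns "", "maybe" or "probably"; Safari, including on
  // iPhone, plays HLS natively in a <video> element.
  if (video.canPlayType("application/vnd.apple.mpegurl") !== "") {
    return "hls";
  }
  return "unsupported";
}
```

In a page this would be called as `choosePlayback(window, document.querySelector("video"), false)`, and the result decides which of the three delivery paths the player requests from the server.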

The RTSP leg

We have discussed delivery in the video server → browser direction. In addition, two more things are required:

1) Deliver video from the IP camera to the server.

2) Convert the received video to one of the formats/protocols above.

Here the server side enters the scene.

Ta-da... Web Call Server 5 (WCS to its friends). Someone has to receive the RTSP traffic, properly depacketize the video, convert it to WebRTC, HLS or MSE, preferably without squeezing it through a transcoder, and hand it to the browser side in decent shape, unspoiled by artifacts and freezes.
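To illustrate what “properly depacketize” involves for H.264, here is a minimal sketch of the RTP payload handling defined in RFC 6184: single NAL unit packets pass through, STAP-A aggregates are split, and FU-A fragments are reassembled into one NAL unit. It is a generic illustration, not WCS code; it ignores sequence-number gaps (a real depacketizer must discard a fragmented NAL unit when a fragment is lost), and `onNalUnit` is a hypothetical callback.

```javascript
// Minimal H.264 RTP depacketizer following RFC 6184 (packetization modes 0/1).
// handlePayload() takes the RTP payload (RTP header already stripped) as a
// Uint8Array; onNalUnit receives each complete NAL unit without a start code.
function createH264Depacketizer(onNalUnit) {
  let fuParts = null; // fragments of the FU-A NAL unit being reassembled

  return function handlePayload(payload) {
    const type = payload[0] & 0x1f; // low 5 bits of the payload header

    if (type >= 1 && type <= 23) {
      // Single NAL unit packet: the payload is the NAL unit itself.
      onNalUnit(payload);
    } else if (type === 24) {
      // STAP-A: a 16-bit big-endian size followed by a NAL unit, repeated.
      let offset = 1;
      while (offset + 2 <= payload.length) {
        const size = (payload[offset] << 8) | payload[offset + 1];
        offset += 2;
        onNalUnit(payload.slice(offset, offset + size));
        offset += size;
      }
    } else if (type === 28) {
      // FU-A: FU indicator, FU header (S and E bits, original type), data.
      const fuHeader = payload[1];
      if (fuHeader & 0x80) {
        // Start bit: rebuild the original NAL header from the F/NRI bits of
        // the indicator and the type bits of the FU header.
        fuParts = [Uint8Array.of((payload[0] & 0xe0) | (fuHeader & 0x1f))];
      }
      if (fuParts === null) return; // start fragment missed: drop the rest
      fuParts.push(payload.slice(2)); // copy, in case the caller reuses buffers
      if (fuHeader & 0x40) {
        // End bit: concatenate the pieces into one NAL unit.
        const total = fuParts.reduce((n, part) => n + part.length, 0);
        const nal = new Uint8Array(total);
        let pos = 0;
        for (const part of fuParts) {
          nal.set(part, pos);
          pos += part.length;
        }
        onNalUnit(nal);
        fuParts = null;
      }
    }
    // Other types (STAP-B, MTAP, FU-B) are not handled in this sketch.
  };
}
```

The reassembled NAL units would then be packaged into whatever the output needs, for example fragmented MP4 for MSE or MPEG-TS segments for HLS.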

At first glance the task is not difficult, but there are so many pitfalls, Chinese cameras and conversion nuances that it's scary. All in all, it took some hacks, but it works, and works well. In production.

Delivery Scheme

The result is a complete scheme for delivering RTSP content with conversion on an intermediate server.

One of the most frequent questions from our Indian colleagues is “Is it possible? Directly, without a server?” No, it's impossible: you need a server side to do this job. In the cloud, on bare metal, on a Core i7 on your balcony, but there is no way around it.

Back to our 2020

So, the recipe for RTSP in the browser:

- Get a fresh WCS (Web Call Server).

- Add to taste: WebRTC, HLS or MSE.

- Put it on a web page.

Enjoy your meal!

No, that's not all

Inquisitive minds will surely ask: “How? How exactly do you do it? What will it look like in the browser?” For your consideration, here is a minimal WebRTC player, thrown together in a hurry:

1) Include on the web page the main API script, flashphoner.js, and the my_player.js script, which we will create a little later:

```html
<script type="text/javascript" src="../../../../flashphoner.js"></script>
<script type="text/javascript" src="my_player.js"></script>
```

2) Initialize the API in the body of the web page:

```html
<body onload="init_api()">
```

3) Add a div to the page to serve as the video container, and give it dimensions and a border:

```html
<div id="myVideo" style="width:320px;height:240px;border: solid 1px"></div>
```

4) Add a Play button; clicking it will connect to the server and start playback:

```html
<input type="button" onclick="connect()" value="PLAY"/>
```

5) Now create the my_player.js script, which will contain the main code of our player. Declare the constants and variables:

```javascript
var SESSION_STATUS = Flashphoner.constants.SESSION_STATUS;
var STREAM_STATUS = Flashphoner.constants.STREAM_STATUS;
var session;
```

6) Initialize the API when the HTML page loads:

```javascript
function init_api() {
    console.log("init api");
    Flashphoner.init({});
}
```

7) Connect to the WCS server via WebSocket. For everything to work correctly, replace "wss://demo.flashphoner.com" with the address of your WCS:

```javascript
function connect() {
    session = Flashphoner.createSession({urlServer: "wss://demo.flashphoner.com"}).on(SESSION_STATUS.ESTABLISHED, function (session) {
        console.log("connection established");
        playStream(session);
    });
}
```

8) Next, play the stream, passing two parameters: name is the RTSP URL of the stream to play, and display is the myVideo element in which our player will be mounted. Here, too, specify the URL of the stream you need instead of ours:

```javascript
function playStream(session) {
    var options = {
        name: "rtsp://b1.dnsdojo.com:1935/live/sys2.stream",
        display: document.getElementById("myVideo")
    };
    var stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function (stream) {
        console.log("playing");
    });
    stream.play();
}
```

Save the files and try starting the player. Is your RTSP stream playing?

When we tested it, we played this one: rtsp://b1.dnsdojo.com:1935/live/sys2.stream.

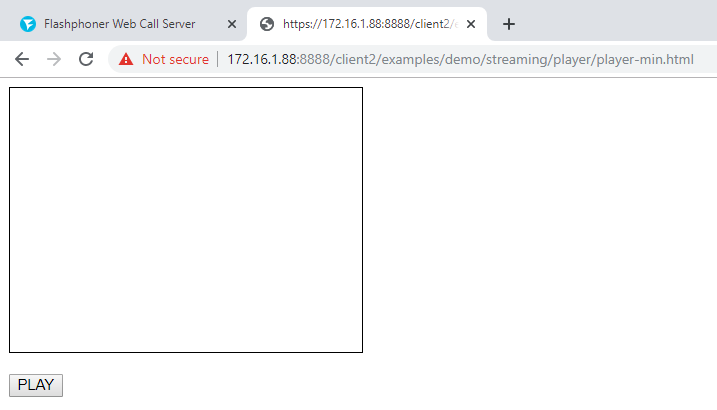

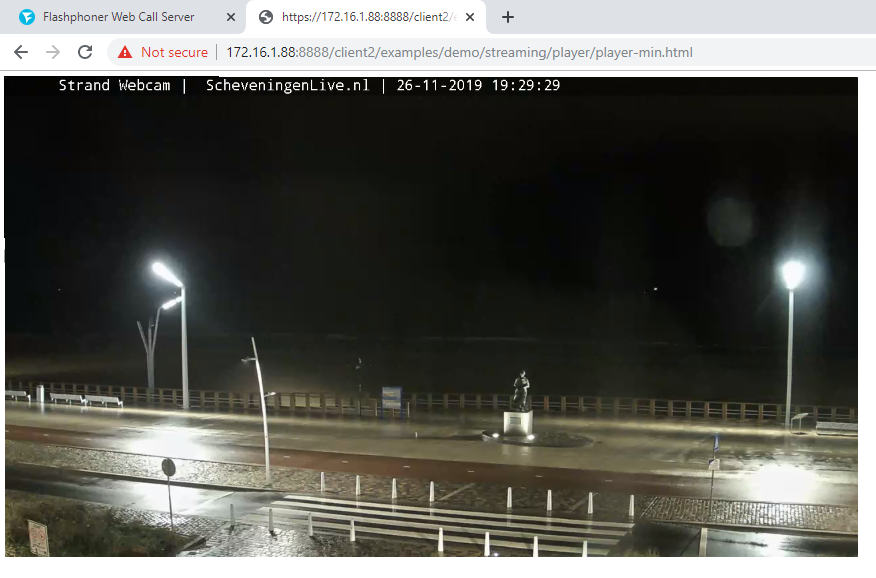

And it looks like this:

Player before pressing the "Play" button

Player with running video

There is very little code:

HTML

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <script type="text/javascript" src="../../../../flashphoner.js"></script>
    <script type="text/javascript" src="my_player.js"></script>
</head>
<body onload="init_api()">
    <div id="myVideo" style="width:320px;height:240px;border: solid 1px"></div>
    <br/><input type="button" onclick="connect()" value="PLAY"/>
</body>
</html>
```

JavaScript

```javascript
// Status constants
var SESSION_STATUS = Flashphoner.constants.SESSION_STATUS;
var STREAM_STATUS = Flashphoner.constants.STREAM_STATUS;

// WebSocket session
var session;

// Init Flashphoner API on page load
function init_api() {
    console.log("init api");
    Flashphoner.init({});
}

// Connect to WCS server over WebSockets
function connect() {
    session = Flashphoner.createSession({urlServer: "wss://demo.flashphoner.com"}).on(SESSION_STATUS.ESTABLISHED, function (session) {
        console.log("connection established");
        playStream(session);
    });
}

// Play the stream with the given name and mount it into the myVideo div element
function playStream(session) {
    var options = {
        name: "rtsp://b1.dnsdojo.com:1935/live/sys2.stream",
        display: document.getElementById("myVideo")
    };
    var stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function (stream) {
        console.log("playing");
    });
    stream.play();
}
```

The server side is demo.flashphoner.com. The full sample code is available via the links at the bottom of the page. Happy streaming!

References

Integration of an RTSP player into a web page or mobile application