Solving radiation problems was a “turning point in the history of space electronics”

Phobos-Grunt, one of the most ambitious space projects of modern Russia, fell into the ocean at the beginning of 2012. The spacecraft was supposed to land on the surface of the battered Martian moon Phobos, collect soil samples, and bring them back to Earth. Instead, it drifted helplessly in low Earth orbit (LEO) for several weeks because its on-board computer failed just before it was due to fire the engines that would have sent it toward Mars.

In a subsequent report, Russian authorities blamed heavy charged particles in galactic cosmic rays, which struck the SRAM chips and caused them to fail due to excessive current flowing through them. To deal with this problem, the two processors in the on-board computer initiated a reboot. After that, the probe went into safe mode and waited for commands from Earth. Unfortunately, no instructions ever arrived.

The communication antennas were supposed to become fully operational only after the spacecraft left LEO. However, no one had planned for a failure that would prevent the probe from reaching that stage. After the particle strike, Phobos-Grunt found itself in a strange stalemate. Firing the on-board engines was supposed to trigger the deployment of the antennas. The engines could only be fired on a command from Earth. And that command could not get through, because the antennas were not deployed. A computer error doomed a mission that had been in preparation for several decades. Part of the blame lay with the team at NPO Lavochkin, the manufacturer of the spacecraft. During development, it was easier to list what worked in their computer than what did not. Every little mistake they made, however, became a brutal reminder that developing space-grade computers is terribly hard. One misstep, and billions of dollars go up in flames.

The developers had simply underestimated how difficult it is to run computers in space.

Why so slow?

Curiosity, the beloved Mars rover, runs on two BAE RAD750 processors clocked at up to 200 MHz. It has 256 MB of RAM and a 2 GB SSD. On the eve of 2020, the RAD750 is the most advanced single-core space-grade processor, the best we can send into deep space today.

Unfortunately, compared to the smartphone in your pocket, the RAD750's performance is pitiful. Its design is based on the PowerPC 750, a processor that IBM and Motorola introduced in 1997 as a rival to the Intel Pentium II. This means that the most advanced space hardware available today could run the original StarCraft (1998) without trouble, but it would struggle with anything more computationally demanding. Forget about playing Crysis on Mars.

And yet the RAD750 costs about $200,000. Why not just throw in an iPhone and be done with it? In terms of speed, iPhones are several generations ahead of the RAD750, and they cost only about $1,000 apiece. In effect, the Phobos-Grunt team tried to do something similar. They tried to boost performance and cut costs, but they ended up cutting too many corners.

The SRAM chip in Phobos-Grunt that was damaged by heavy charged particles carried the marking WS512K32V20G24M. It was well known in the space industry, because in 2005 T. Page and J. Benedetto had tested these chips in the particle accelerator at Brookhaven National Laboratory to see how they behave when exposed to radiation. The researchers described the chips as “extremely vulnerable”: they failed even at the lowest energy exposure available at Brookhaven. The result was not surprising, since the WS512K32V20G24M was never intended for space. It was developed for military aviation. However, these chips were easier to find and cheaper than space-grade memory, so the Phobos-Grunt developers decided to use them.

“The discovery of the various types of radiation present in space was one of the most important turning points in the history of space electronics, along with the understanding of how this radiation affects electronics and the development of hardening and mitigation techniques,” says Tyler Lovelly, a researcher at the US Air Force Research Laboratory. The main sources of this radiation are cosmic rays, solar activity, and the belts of protons and electrons trapped at the edge of Earth's magnetic field, known as the Van Allen radiation belts. Of the particles striking Earth's atmosphere, 89% are protons, 9% are alpha particles, 1% are heavier nuclei, and 1% are free electrons. Their energies can reach 10¹⁹ eV. Using chips that are not qualified for space in a probe that has to travel through space for several years is asking for trouble. The newspaper Krasnaya Zvezda reported that 62% of the chips used on Phobos-Grunt were not intended for use in space. In other words, 62% of the probe's design came down to a “let's stick an iPhone in there” mindset.

Radiation becomes a problem

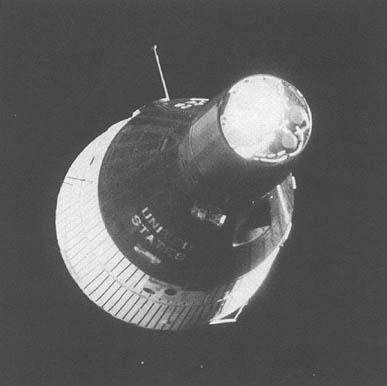

Today, cosmic rays are one of the key factors taken into account when designing space-grade computers. But it was not always so. The first computer went into space aboard one of the Gemini spacecraft in the 1960s. To get flight clearance, the machine had to pass more than a hundred different tests. Engineers checked how it responded to vibration, vacuum, extreme temperatures, and so on. None of these tests took the effects of radiation into account. And yet the Gemini on-board computer worked very well, without any problems. That is because it was too big to fail. Literally. A whole 19.5 KB of memory was housed in an 11-liter box weighing 12 kg. The entire computer weighed 26 kg.

In the computer industry, processor progress has typically meant shrinking components and raising clock speeds. We made transistors smaller and smaller, going from 240 nm to 65 nm, then to 14 nm, and now down to 7 nm in modern smartphones. The smaller the transistor, the lower the voltage needed to switch it on and off. This is why older processors with large components were practically immune to radiation, or more precisely to so-called single event upsets (SEUs). The voltage created by a particle strike was too low to affect a computer built from sufficiently large components. But when space engineers began shrinking components to pack more transistors onto a chip, the voltages created by particle strikes became large enough to cause problems.

Another thing engineers commonly do to improve processor performance is raise the clock speed. The Intel 386SX that ran the automation in the space shuttle's cockpit was clocked at 20 MHz. Modern processors can peak at up to 5 GHz. The clock frequency determines how many processing cycles a processor can complete per unit of time. The catch with radiation is that a particle strike can corrupt data in the processor's on-chip memory (the L1 or L2 cache) only during a very brief window of time. This means that in any given second, a charged particle has a limited number of opportunities to cause trouble. In processors with low clock speeds, that number was quite small. But as frequencies rose, the number of such windows per second rose with them, making processors more sensitive to radiation. This is why radiation-hardened processors are almost always slower than their commercial counterparts. The main reason space processors are so slow is that almost every method of speeding them up also makes them more vulnerable.
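
The link between clock speed and exposure can be put into rough numbers. The following is a back-of-the-envelope sketch, not a real upset-rate model: it assumes, purely for illustration, that every clock cycle opens exactly one brief window in which a strike can corrupt data. The function name and the `windows_per_cycle` parameter are hypothetical.

```python
def vulnerable_windows_per_second(clock_hz, windows_per_cycle=1):
    """Count the brief vulnerable windows that open each second.

    Assumption (illustrative only): every clock cycle opens a fixed
    number of windows in which a particle strike can corrupt data.
    """
    return clock_hz * windows_per_cycle


shuttle_386sx = vulnerable_windows_per_second(20e6)  # 20 MHz Intel 386SX
modern_peak = vulnerable_windows_per_second(5e9)     # 5 GHz modern CPU at peak

# Under this assumption, exposure grows linearly with clock frequency.
exposure_ratio = modern_peak / shuttle_386sx
print(f"{exposure_ratio:.0f}x more windows per second")
```

Under that simplifying assumption, a 5 GHz part gives a particle 250 times as many chances per second as the shuttle's 386SX did.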

Fortunately, this problem can be circumvented.

Dealing with radiation

“In the past, radiation effects were mitigated with a modified semiconductor process,” says Roland Weigand, a VLSI/ASIC engineer at the European Space Agency. “It was enough to take a commercial processor core and apply a process that increased its radiation resistance.” This technique, radiation hardening by process, used materials such as sapphire or gallium arsenide, which respond to radiation far less than silicon does. Processors made this way worked well in high-radiation environments such as space, but producing them required retooling an entire factory.

“To increase performance, we had to use more and more advanced processors. Given the cost of a modern semiconductor factory, special changes to the manufacturing process stopped being practical for a niche market such as space,” says Weigand. In the end, this forced engineers to use commercial processors that were prone to single event upsets. “To reduce this effect, we had to switch to other techniques for increasing radiation resistance, what we call radiation hardening by design,” adds Weigand.

Hardening by design allowed manufacturers to use the standard CMOS manufacturing process. Such space-grade processors could be produced in commercial factories, which brought their cost down to a reasonable level and let space mission developers catch up with commercial offerings. Radiation was now handled through engineering ingenuity rather than the physical properties of the material alone. “For example, triple modular redundancy (TMR) is one of the most popular ways to protect an otherwise completely standard chip from radiation,” Weigand explains. “Three identical copies of each bit of information are kept in memory at all times. At the read stage, all three are read and the correct version is chosen by majority vote.”

If all three copies are the same, the information is considered correct. The same goes when two copies match and one differs: the correct value is selected by majority vote. But when all three copies differ, the system logs an error. The idea is to store the same information at three different memory addresses located in three different places on the chip. To corrupt the data, two particles would have to strike, at the same time, the locations where two copies of the same bit of information are stored, which is extremely unlikely. The downside of this approach is the redundant work it creates for the processor. It has to perform each operation three times, which means it reaches only a third of its potential speed.
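
The voting rule described above can be sketched in a few lines. This is a minimal software illustration of triple modular redundancy, not how any particular chip implements it in hardware; the names `tmr_read` and `flip_bit` are hypothetical, and raising `ValueError` stands in for the system logging an error.

```python
from collections import Counter


def tmr_read(copies):
    """Return the majority value among three stored copies.

    Raises ValueError when all three copies disagree, the case in
    which the system can only report an error.
    """
    if len(copies) != 3:
        raise ValueError("TMR expects exactly three copies")
    value, votes = Counter(copies).most_common(1)[0]
    if votes < 2:
        raise ValueError("all three copies differ: uncorrectable error")
    return value


def flip_bit(word, bit):
    """Simulate a single event upset by flipping one bit of a word."""
    return word ^ (1 << bit)


# Three copies of the same word, stored in three separate places.
stored = [0b1011, 0b1011, 0b1011]

# A particle strike corrupts one copy; the other two outvote it.
stored[1] = flip_bit(stored[1], 2)
recovered = tmr_read(stored)
print(bin(recovered))
```

A single upset is outvoted and the original word is recovered. Only two simultaneous hits on copies of the same data can produce a wrong answer or an error.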

This led to the newest idea for bringing the performance of space-grade processors even closer to that of their commercial counterparts. Instead of protecting the entire system on a chip from radiation, engineers decide where protection matters most and where they can do without it. This changes design priorities significantly. Older space processors were immune to radiation. New processors are sensitive to it, but they are designed to cope automatically with all the errors that radiation can cause.

The LEON GR740, for example, is the latest European space-grade processor. It is expected to experience nine single event upsets per day in geostationary Earth orbit. The trick is that the system will contain all of them, so they will not lead to functional errors. The GR740 is designed to suffer no more than one functional error every 300 years. And even then, it can simply reboot.

Europe chooses openness

The LEON line of space processors, built on the SPARC architecture, is Europe's most popular choice for space applications. “In the 90s, when the SPARC specification was chosen, it was very deeply embedded in the industry,” says Weigand. “Sun Microsystems used it in its successful workstations.” According to him, the key reasons for adopting SPARC at the time were software support and the openness of the platform. “Open architecture meant that everyone could use it without licensing issues. This was important because in a niche as narrow as space, the cost of licenses is spread across a small number of devices, which seriously increases their price,” he explains.

In the end, ESA learned about licensing issues the hard way. The first European SPARC space processor, the ERC32, which is still in use today, was built around commercial processors. It was based on an open architecture, but the processor design itself was proprietary. “This led to problems. There is usually no access to the source code of proprietary designs, so it's hard to make the design changes needed to improve radiation hardening,” says Weigand. So in the next step, ESA began working on its own processor, LEON. “Its design was completely under our control, and we finally got the opportunity to use all the radiation-hardening techniques we wanted.”

The latest development in the LEON line is the quad-core GR740, clocked at about 250 MHz. (Weigand says he expects the first shipments of hardware by the end of 2019.) The GR740 is manufactured on a 65 nm process. It is a system on a chip designed for general-purpose, high-performance computing and based on the SPARC32 architecture. “The goal of creating the GR740 was to achieve higher performance and the ability to add more devices to the integrated circuit, while keeping compatibility with previous European space-grade processors,” says Weigand. Another feature of the GR740 is its advanced fault tolerance. The processor can cope with a significant number of radiation-induced errors and still keep the software running without interruption. Each block and function of the GR740 is optimized for the highest speed. This means that components sensitive to single event upsets sit next to others that can easily withstand them. And all sensitive components are used in circuits that reduce the effect of errors through redundancy.

For example, some of the flip-flops in the GR740 are ordinary commercial CORELIB FFs. They were chosen for this chip because they take up less area, which increases computational density. The downside is that they are prone to single event upsets, but this was handled with triple modular redundancy (TMR) blocks. Every bit read from these flip-flops is confirmed by a vote among modules placed far enough apart that a single event cannot affect several bits. Similar schemes protect the processor's L1 and L2 caches, which are built from SRAM cells that are also prone to such upsets. Where these schemes began to cost too much performance, ESA engineers switched to upset-resistant SKYROB flip-flops. These, however, take up twice as much area as CORELIB ones. When you try to increase computing power in space, there are always trade-offs to make.

So far, the GR740 has passed several radiation tests very well. The chip was bombarded with heavy ions with a linear energy transfer (LET) of up to 125 MeV·cm²/mg, and it worked without a single failure. For comparison, the very SRAM chips that brought down Phobos-Grunt failed when hit by particles with an LET of only about 0.375 MeV·cm²/mg. The GR740 withstood an LET more than 300 times higher. In addition to being almost completely immune to single event upsets, the GR740 can absorb up to 300 krad of radiation over its lifetime. During testing, Weigand's team even irradiated one of the processors to 293 krad, and the chip still worked as usual, showing no signs of degradation.
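
As a quick check of the comparison above, the two LET figures quoted in the text can be divided directly. This is plain arithmetic on the article's numbers; the variable names are illustrative.

```python
# LET values quoted in the text, in MeV·cm²/mg.
gr740_tested_let = 125.0   # highest LET the GR740 survived in testing
sram_failure_let = 0.375   # LET at which the Phobos-Grunt SRAM failed

let_ratio = gr740_tested_let / sram_failure_let
print(f"GR740 tolerated an LET about {let_ratio:.0f} times higher")
```

The ratio comes out at roughly 333, so “300 times” is a slightly conservative rounding.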

Still, tests to establish the true maximum ionizing dose the GR740 can absorb have yet to be done. Taken together, these figures indicate that the processor should produce one functional error every 350 years in geostationary Earth orbit. In low Earth orbit, that period grows to 1,310 years. And even such errors will not kill the GR740. It will only have to reboot.

America chooses proprietary solutions

“Space-grade processors developed in the United States have traditionally been based on proprietary technologies such as PowerPC, because people had more experience working with them and they were supported by all kinds of software,” says Lovelly of the US Air Force Research Laboratory. After all, the history of space computing began with the digital processors IBM developed for the Gemini missions in the 1960s. And IBM worked with proprietary technology.

To this day, BAE's RAD processors are based on PowerPC, which came out of the work of a consortium of IBM, Apple, and Motorola. The processors in the space shuttle's cockpit computers and in the Hubble telescope were based on Intel's x86 architecture. Both PowerPC and x86 were proprietary technologies. Continuing this tradition, the latest project in this area is also based on closed technology. High Performance Spaceflight Computing (HPSC) differs from PowerPC and x86 in that those two were best known as desktop processor architectures, while the HPSC is based on the ARM architecture that today runs in most smartphones and tablets.

The HPSC is being developed by NASA and the US Air Force Research Laboratory, with Boeing responsible for manufacturing. It is built from quad-core ARM Cortex-A53 processors. It will have two of them connected by an AMBA bus, which makes for an eight-core system. Its speed should therefore land somewhere around that of 2018 mid-range smartphones such as the Samsung Galaxy J8, or of development boards like the HiKey Lemaker or the Raspberry Pi. Admittedly, those figures are before radiation hardening, which will cut performance by more than half. Still, we will no longer have to read gloomy headlines saying that 200 of the Curiosity rover's processors could not keep up with a single iPhone. Once the HPSC launches, it will take only three or four of these chips to match an iPhone.

“Since we don't have a real HPSC for testing yet, we can only make reasonable assumptions about its performance,” says Lovelly. The first parameter to be studied carefully was the clock frequency. Commercial eight-core Cortex-A53 processors typically run at frequencies from 1.2 GHz (in the HiKey Lemaker) to 1.8 GHz (in the Snapdragon 450). To work out what the HPSC's clock frequency would be after radiation hardening, Lovelly compared various space-grade processors with their commercial counterparts. “We decided that it would be reasonable to expect a similar decrease in performance,” he says. Lovelly estimated the HPSC's clock speed at 500 MHz. That would still be exceptionally fast for a space-grade chip. If the estimate holds, the HPSC will be the clock-speed champion among space-grade processors. However, increases in computing power and clock frequency usually turn into serious problems in space.

Today, the most powerful radiation-hardened processor is the BAE RAD5545. It is a 64-bit quad-core chip built on a 45 nm process, clocked at 466 MHz and dissipating up to 20 W, and 20 W is a lot. The quad-core i5 in the 2018 13-inch MacBook Pro dissipates 28 W. It can heat the aluminum case to very high temperatures, high enough to start bothering users. Under heavy computing load, fans kick in immediately to cool the whole system. Except that fans are no help in space, because there is no air to blow across a hot chip. The only way to get heat off a spacecraft is to radiate it away, and that takes time. Heat pipes can carry heat away from the processor, of course, but that heat still has to end up somewhere.

Moreover, some missions have a very tight power budget and simply cannot afford processors as power-hungry as the RAD5545. This is why the European GR740 dissipates only 1.5 W. It is not the fastest chip available, but it is the most efficient: it delivers the most computation per watt. The HPSC, dissipating 10 W, comes a close second, but not always.

Each HPSC core has its own Single Instruction, Multiple Data (SIMD) unit. SIMD technology has been widely used in commercial desktop and mobile computers since the 90s. It helps processors handle image and sound processing in video games. Say we need to brighten a picture. It has many pixels, and each of them has a brightness value that needs to be increased by two. Without SIMD, the processor has to perform all these additions sequentially, one pixel after another. With SIMD, the task can be parallelized. The processor takes several data points, the brightness values of many pixels in the image, and executes the same instruction on them, adding two to all of them at once. And since the Cortex-A53 was designed for smartphones and tablets, which handle a lot of media content, the HPSC can do this too.
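
The brightening example can be modeled in code. Note that plain Python does not issue real SIMD instructions, so this is only a conceptual sketch that counts how many instruction steps each approach needs. The lane width of 4, the clamp at 255 (assuming 8-bit brightness values), and both function names are assumptions made for illustration.

```python
def brighten_scalar(pixels, delta=2):
    """Brighten one pixel per step, the way a core without SIMD would."""
    out = []
    steps = 0
    for value in pixels:
        out.append(min(value + delta, 255))  # clamp to the 8-bit maximum
        steps += 1
    return out, steps


def brighten_simd_model(pixels, delta=2, lanes=4):
    """Model a SIMD unit: one instruction updates `lanes` pixels per step."""
    out = []
    steps = 0
    for start in range(0, len(pixels), lanes):
        group = pixels[start:start + lanes]
        # Conceptually a single instruction applied to every lane at once.
        out.extend(min(value + delta, 255) for value in group)
        steps += 1
    return out, steps


image_row = [10, 20, 253, 255, 0, 7, 8, 9]
scalar_result, scalar_steps = brighten_scalar(image_row)
simd_result, simd_steps = brighten_simd_model(image_row)
print(scalar_steps, simd_steps)
```

Both versions produce the same pixels, but in this model the four-lane version needs a quarter of the steps for the eight-pixel row.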

“This is especially beneficial in tasks such as image compression, image processing, or stereo vision,” Lovelly says. “In applications that do not use this feature, the HPSC performs only a little better than the GR740 and other fast space processors. But when it can be used, the chip is well ahead of its rivals.”

Bringing science fiction back to space exploration

US chip developers tend to build more powerful but also more power-hungry processors, since NASA missions, both robotic and crewed, are usually larger in scale than their European counterparts. Europe has no plans in the foreseeable future to send people or car-sized rovers to the Moon or Mars. Today, ESA focuses on probes and satellites, which usually work with a limited power budget, so choosing something lighter and more energy efficient, such as the GR740, makes more sense. The HPSC, by contrast, was designed from the start to turn NASA's science-fiction ambitions into reality.

For example, in 2011 NASA's Game Changing Development Program commissioned a study of what computing needs in space would look like in 15 to 20 years. A team of experts from various agency centers compiled a list of tasks that advanced processors could handle in crewed and robotic missions. One of the first tasks they identified was continuous monitoring of equipment health. This comes down to having sensors that constantly track the state of critical components. Acquiring high-frequency data from all of these sensors requires fast processors. A slow computer would probably do the job if the data came in once every 10 minutes or so, but if you need to check all the equipment several times per second to get something resembling real-time monitoring, your processor has to be very fast. All of this is needed so that astronauts can sit in front of a control panel that displays the actual state of the ship, complete with voice alerts and polished graphics. Supporting such graphics also requires fast computers.

However, science fiction goals do not end with cockpits. Astronauts exploring other worlds are likely to have an augmented reality system built into their helmets. Their environment will be supplemented by computer-generated video, sounds and GPS data. In theory, augmented reality will improve the effectiveness of researchers, mark areas worth exploring and warn of potentially dangerous situations. Of course, embedding augmented reality in a helmet is just one of several possibilities. Among the other options mentioned in the study are portable devices, such as smartphones, and something vaguely described as “other possibilities for displaying information.” Such computing breakthroughs will require faster space-class processors.

Such processors should also improve robotic missions. One of the main examples is landing on difficult terrain. Choosing a landing site is always a compromise between safety and scientific value. The safest place is a flat plain without stones, hills, valleys, or rock outcrops. From a scientific point of view, the most interesting place is geologically diverse, which means an abundance of stones, hills, valleys, and rock outcrops. One way to solve this problem is so-called Terrain Relative Navigation (TRN). Rovers equipped with a TRN system will be able to recognize important landmarks, spot potential hazards, and steer around them, which can narrow the landing radius to 100 m. The problem is that today's space-grade processors are too slow to process images at that rate. A NASA team ran a TRN performance test on the RAD750 and found that processing an update from a single camera took about 10 seconds. Unfortunately, when you are falling toward the Martian surface, 10 seconds is a long time. To land a rover within a 100 m radius, camera updates must be processed every second. For a precise landing to within a meter, you need 10 updates per second.
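
The gap between what terrain navigation needs and what the current processor delivers follows directly from the figures above. The sketch below simply restates that arithmetic, assuming processing time scales inversely with processor speed; the function name is hypothetical.

```python
def required_speedup(seconds_per_update, target_updates_per_second):
    """How many times faster processing must become to hit the target rate.

    Assumption (illustrative only): time per update shrinks in direct
    proportion to processor speed.
    """
    return seconds_per_update * target_updates_per_second


rad750_seconds_per_update = 10.0  # measured on the current processor, per the text

speedup_100m = required_speedup(rad750_seconds_per_update, 1.0)   # 1 update/s
speedup_1m = required_speedup(rad750_seconds_per_update, 10.0)    # 10 updates/s
print(speedup_100m, speedup_1m)
```

Under that assumption, landing within 100 m calls for roughly a tenfold speedup, and landing to within a meter for roughly a hundredfold one.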

Other items on NASA's computing wish list include algorithms that can predict impending failures from sensor readings, intelligent scheduling, advanced autonomy, and so on. All of this is beyond the capabilities of current space-grade processors. So in the study, NASA engineers give their estimates of the computing power needed to support such tasks. They found that monitoring the ship's health and landing in difficult conditions would require 10 to 50 GOPS (giga-operations per second). Futuristic sci-fi flight consoles with fancy displays and advanced graphics would require 50 to 100 GOPS. The same goes for augmented reality helmets and similar devices, which would also need 50 to 100 GOPS.

Ideally, future space processors will support all of these projects with ease. Today, the HPSC, dissipating between 7 and 10 W, can deliver 9 to 15 GOPS. That could already make extreme landings possible, but the HPSC is designed so that this figure can grow significantly. First, those 15 GOPS do not include the performance gains from SIMD. Second, the processor can work together with other HPSC chips and with external devices, such as specialized FPGAs or GPUs. The spacecraft of the future may therefore carry several distributed processors working in parallel, with specialized chips handling particular tasks such as image or signal processing.
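
To get a feel for how far the quoted 9 to 15 GOPS goes, the requirements from the NASA study can be divided by the per-chip figure. This is a rough sketch that assumes perfect linear scaling across chips, which real multi-processor systems do not achieve, and ignores any SIMD gains; `chips_needed` is a hypothetical helper.

```python
import math


def chips_needed(required_gops, gops_per_chip):
    """Whole number of chips needed, assuming perfect linear scaling."""
    return math.ceil(required_gops / gops_per_chip)


# Figures quoted in the text.
hpsc_low, hpsc_high = 9, 15          # GOPS delivered by one chip
landing_low, landing_high = 10, 50   # health monitoring and hard landings
console_low, console_high = 50, 100  # flight consoles and AR helmets

best_case_landing = chips_needed(landing_low, hpsc_high)
worst_case_landing = chips_needed(landing_high, hpsc_low)
best_case_console = chips_needed(console_low, hpsc_high)
worst_case_console = chips_needed(console_high, hpsc_low)
print(best_case_landing, worst_case_landing, best_case_console, worst_case_console)
```

Under these idealized assumptions, landing tasks would take between 1 and 6 chips, and consoles or helmets between 4 and 12, which is why pairing the processor with other chips and accelerators matters.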

Wherever humanity's deep-space dreams lead, engineers already know where current computing power stands. The LEON GR740 should become available to ESA later this year, and after a few additional tests it should be ready to fly in 2020. The HPSC, in turn, should enter its production phase in 2021 and complete it in 2022. Testing should then take several months in 2022.

NASA should receive flight-ready HPSC chips by the end of 2022. This means that, other complicating factors aside, space silicon at least is moving into the future fast enough to be ready for the return of humans to the Moon by 2024.