Below is a small trick I use when searching for and choosing solutions on GitHub.

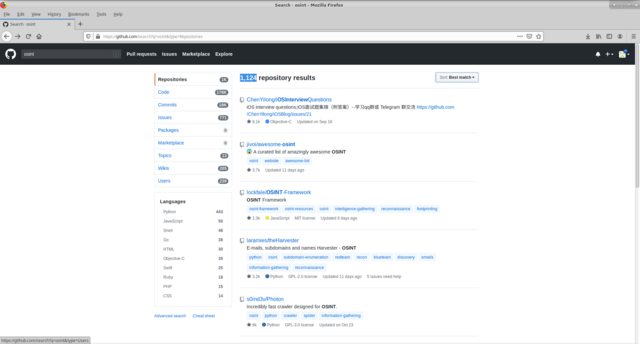

Imagine we are developing a large OSINT system and want to review every relevant solution available on GitHub. We run the standard global GitHub search for the keyword osint and get 1124 repositories. The results can be filtered by where the keyword was found (code, commits, issues, etc.) and by programming language, and sorted by various attributes (most/fewest stars, forks, etc.).
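The same kind of query can be sent to the GitHub REST API. Its `/search/repositories` endpoint takes the keyword plus qualifiers such as `language:` and `in:` (which restricts matching to, e.g., the repository name or description), along with `sort`, `order`, `per_page` and `page` parameters; code, commits and issues are searched through separate endpoints. A minimal sketch that only builds the request URL; the helper name `build_search_url` and the example qualifier values are illustrative assumptions.

```python
from urllib.parse import urlencode

SEARCH_URL = 'https://api.github.com/search/repositories'


def build_search_url(keyword, language=None, in_fields=None,
                     sort='stars', order='desc', per_page=100, page=1):
    """Build a repository search URL (hypothetical helper for illustration)."""
    terms = [keyword]
    if in_fields:
        # e.g. in:name,description restricts where the keyword is matched
        terms.append('in:' + ','.join(in_fields))
    if language:
        # e.g. language:python filters by the repository's main language
        terms.append('language:' + language)
    params = {
        'q': ' '.join(terms),
        'sort': sort,        # stars, forks, help-wanted-issues or updated
        'order': order,      # desc or asc
        'per_page': per_page,
        'page': page,
    }
    # urlencode escapes ':' and ',' and turns spaces into '+'
    return SEARCH_URL + '?' + urlencode(params)


print(build_search_url('osint', language='python', in_fields=['name', 'description']))
```

Building the URL separately from the HTTP call makes it easy to check the exact query before spending one of the rate-limited search requests on it.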

I choose a solution based on several criteria: functionality, number of stars, how actively the project is maintained, and programming language.

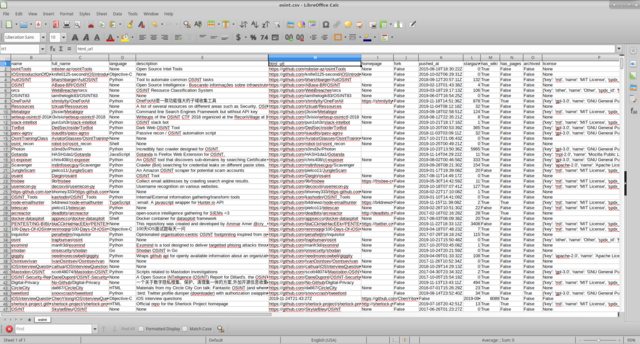

The solutions that interested me were collected in a table with the fields above filled in, plus notes based on the results of testing each one.

The drawback of GitHub's search view, in my opinion, is that you cannot sort and filter by several fields at the same time.
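Once the results are in a table of your own, filtering and sorting by several fields at once becomes straightforward. A minimal sketch on in-memory rows shaped like the search API items; the sample repositories are made-up placeholders, not real search results, and the `select` helper and its thresholds are illustrative.

```python
from datetime import datetime, timezone

# Made-up sample rows with a few of the fields the search API returns
repos = [
    {'full_name': 'alice/tool-a', 'language': 'Python', 'stargazers_count': 120,
     'archived': False, 'pushed_at': '2023-05-01T10:00:00Z'},
    {'full_name': 'bob/tool-b', 'language': 'Go', 'stargazers_count': 900,
     'archived': False, 'pushed_at': '2023-06-01T10:00:00Z'},
    {'full_name': 'carol/tool-c', 'language': 'Python', 'stargazers_count': 450,
     'archived': False, 'pushed_at': '2019-01-01T10:00:00Z'},
    {'full_name': 'dave/tool-d', 'language': 'Python', 'stargazers_count': 450,
     'archived': False, 'pushed_at': '2023-07-01T10:00:00Z'},
    {'full_name': 'eve/tool-e', 'language': 'Python', 'stargazers_count': 800,
     'archived': True, 'pushed_at': '2022-01-01T10:00:00Z'},
]


def parse_time(value):
    # GitHub timestamps look like 2023-05-01T10:00:00Z
    return datetime.strptime(value, '%Y-%m-%dT%H:%M:%SZ').replace(tzinfo=timezone.utc)


def select(rows, language, min_stars):
    # Filter on several fields at once...
    matching = [r for r in rows
                if r['language'] == language
                and not r['archived']
                and r['stargazers_count'] >= min_stars]
    # ...then sort by stars, breaking ties by the most recent push
    return sorted(matching,
                  key=lambda r: (r['stargazers_count'], parse_time(r['pushed_at'])),
                  reverse=True)


for repo in select(repos, 'Python', 100):
    print(repo['full_name'], repo['stargazers_count'])
```

Here the archived repository and the Go one are filtered out, and the two repositories with equal stars are ordered by their last push.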

Using the GitHub API and Python 3, we put together a simple script that builds a CSV file with the fields we are interested in.

```python
#!/usr/bin/env python3
import csv
from math import ceil
from sys import argv

from requests import get

SEARCH_URL = 'https://api.github.com/search/repositories'
# Repository fields exported to the CSV, in column order
KEYS = ['id', 'name', 'full_name', 'language', 'description', 'created_at',
        'updated_at', 'html_url', 'homepage', 'fork', 'pushed_at',
        'stargazers_count', 'has_wiki', 'has_pages', 'archived', 'license',
        'score']


def print_to_csv(out_file, rows):
    # csv.writer quotes fields that contain ';' or line breaks (e.g. descriptions)
    with open(out_file, 'w', newline='', encoding='utf-8') as f:
        writer = csv.writer(f, delimiter=';')
        writer.writerow(KEYS)
        writer.writerows(rows)


def dict_to_row(repo):
    # Pick the fields of interest from one search result item
    return tuple(str(repo[key]) for key in KEYS)


def dicts_to_rows(repos):
    # A set drops duplicates if the same repository shows up on two pages
    return {dict_to_row(repo) for repo in repos}


def search_to_git(keyword):
    params = {'q': keyword, 'per_page': 100, 'sort': 'stars'}
    data = get(SEARCH_URL, params=params).json()
    rows = dicts_to_rows(data['items'])
    # The search API returns at most 1000 results, i.e. 10 pages of 100
    pages = min(ceil(data['total_count'] / 100), 10)
    for page in range(2, pages + 1):
        data = get(SEARCH_URL, params={**params, 'page': page}).json()
        try:
            rows |= dicts_to_rows(data['items'])
        except KeyError:
            # No 'items' key, e.g. after hitting the rate limit: keep what we have
            return rows
    return rows


if __name__ == '__main__':
    try:
        keyword = argv[1]
    except IndexError:
        print('example: python3 git_repo_search.py keyword_for_search')
    else:
        print_to_csv(keyword + '.csv', search_to_git(keyword))
```
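Two limits of the search API shape the pagination loop: results come back at most 100 per page, and only the first 1000 results of a query are reachable, hence the cap of 10 pages. A minimal sketch of the page-count calculation on its own; the helper name `pages_to_fetch` is hypothetical. Rounding up matters: with plain truncation (`int(total_count / 100)`), 250 results would give 2 pages and the last 50 results would be skipped.

```python
from math import ceil

PER_PAGE = 100       # maximum page size for the search API
MAX_RESULTS = 1000   # the search API only exposes the first 1000 results


def pages_to_fetch(total_count, per_page=PER_PAGE, max_results=MAX_RESULTS):
    """Number of pages needed to cover total_count results (hypothetical helper)."""
    reachable = min(total_count, max_results)
    # Round up so a partial last page is still requested
    return ceil(reachable / per_page)


for total in (0, 250, 1124, 5000):
    print(total, pages_to_fetch(total))
```

For the 1124 osint repositories this gives 10 pages, so roughly the last hundred results stay out of reach unless the query is narrowed, for example with a `language:` qualifier.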

Run the script:

```shell
python3 git_repo_search.py osint
```

The result is an `osint.csv` file in the current directory with one row per repository.

In my opinion, the data is easier to work with once the unnecessary columns are hidden.
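If you would rather drop the unneeded columns before opening the file in a spreadsheet, the CSV can be trimmed in a few lines. A minimal sketch, assuming a `;`-separated file with a header row like the one the script writes; the `keep_columns` name and the chosen columns are illustrative.

```python
import csv
import io


def keep_columns(src, dst, columns, delimiter=';'):
    """Copy a delimited CSV, keeping only the named columns (hypothetical helper)."""
    reader = csv.DictReader(src, delimiter=delimiter)
    # extrasaction='ignore' silently skips every column not listed in `columns`
    writer = csv.DictWriter(dst, fieldnames=columns, delimiter=delimiter,
                            extrasaction='ignore')
    writer.writeheader()
    for row in reader:
        writer.writerow(row)


# In-memory example; with real files pass open(..., newline='') handles instead
source = io.StringIO('id;name;language;stargazers_count;archived\n'
                     '1;tool-a;Python;120;False\n')
target = io.StringIO()
keep_columns(source, target, ['name', 'language', 'stargazers_count'])
print(target.getvalue())
```

Note that `csv.writer` ends rows with `\r\n` by default, which spreadsheet programs handle fine.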

Code here

I hope someone finds this useful.