Hello, habrozhiteli! Natural Language Processing (NLP) is an extremely important task in the field of artificial intelligence. Successful implementations enable products such as Amazon's Amazon and Google Translate. This book will help you learn PyTorch, a deep learning library for the Python language, one of the leading tools for data scientists and NLP software developers. Delip Rao and Brian McMahan will get you up to speed with NLP and deep learning algorithms. And show how PyTorch allows you to implement applications that use text analysis.

Hello, habrozhiteli! Natural Language Processing (NLP) is an extremely important task in the field of artificial intelligence. Successful implementations enable products such as Amazon's Amazon and Google Translate. This book will help you learn PyTorch, a deep learning library for the Python language, one of the leading tools for data scientists and NLP software developers. Delip Rao and Brian McMahan will get you up to speed with NLP and deep learning algorithms. And show how PyTorch allows you to implement applications that use text analysis.

In this book • Computational graphs and the paradigm of learning with a teacher. • The basics of the optimized PyTorch library for working with tensors. • An overview of traditional NLP concepts and methods. • Proactive neural networks (multilayer perceptron and others). • Improvement of RNN with long-term short-term memory (LSTM) and controlled recurrence blocks. • Prediction and sequence transformation models. • Design patterns of NLP systems used in production.

Excerpt. Nesting words and other types

When solving problems of processing texts in natural languages, one has to deal with various types of discrete data types. The most obvious example is words. A lot of words (dictionary) of course. Among other examples, symbols, labels of parts of speech, named entities, named types of entities, features associated with parsing, positions in the product catalog, etc. In fact, any input feature taken from a finite (or infinite, but countable) set.

The basis of the successful application of deep learning in NLP is the representation of discrete data types (for example, words) in the form of dense vectors. The terms “representation learning” and “embedding” mean learning to display / represent from a discrete data type to a point in a vector space. If discrete types are words, then a dense vector representation is called a word embedding. We have already seen examples of nesting methods based on the number of occurrences, for example TF-IDF (“term frequency is the inverse frequency of a document”) in Chapter 2. In this chapter we will focus on training-based nesting methods and prediction-based nesting methods (see article by Baroni et al. (Baroni et al., 2014]), in which performance training is performed by maximizing the objective function for a specific learning task; for example, predicting a word by context. Training-based investment methods are currently the standard due to their wide applicability and high efficiency. In fact, the embedding of words in NLP problems is so widespread that they are called the “sriracha of NLP”, since it can be expected that their use in any task will increase the efficiency of the solution. But this nickname is a little misleading, because, unlike syraci, attachments are usually not added to the model after the fact, but are its basic component.

In this chapter, we will discuss vector representations in connection with word embeddings: word embedding methods, word embedding optimization methods for teaching tasks with and without a teacher, visual embedding visualization methods, and also word embedding combination methods for sentences and documents. However, do not forget that the methods described here apply to any discrete type.

Why investment training

In the previous chapters, we have shown you the usual methods for creating vector representations of words. Namely, you learned how to use unitary representations - vectors with a length matching the size of the dictionary, with zeros at all positions, except for one containing the value 1 corresponding to a specific word. In addition, you met with representations of the number of occurrences - vectors of length equal to the number of unique words in the model containing the number of occurrences of words in the sentence at the corresponding positions. Such representations are also called distributional representations, since their meaningful content / meaning is reflected by several dimensions of the vector. The history of distributive representations has been going on for many decades (see Firth's article [Firth, 1935]); they are excellent for many models of machine learning and neural networks. These representations are constructed heuristically1, and are not trained on data.

Distributed representation got its name because the words in them are represented by a dense vector of a much smaller dimension (for example, d = 100 instead of the size of the entire dictionary, which can be of the order

), and the meaning and other properties of the word are distributed over several dimensions of this dense vector.

), and the meaning and other properties of the word are distributed over several dimensions of this dense vector.

The low-dimensional dense representations obtained as a result of training have several advantages over the unitary vectors containing the number of occurrences that we encountered in the previous chapters. First, dimensionality reduction is computationally efficient. Secondly, representations based on the number of occurrences lead to high-dimensional vectors with excessive coding of the same information in different dimensions, and their statistical power is not too large. Thirdly, too much dimensionality of the input data can lead to problems in machine learning and optimization - a phenomenon often called the curse of dimensionality ( http://bit.ly/2CrhQXm ). To solve this problem with dimension, various methods of reducing the dimension are used, for example, singular-value decomposition (SVD) and the principal component analysis (PCA), but, ironically, these approaches do not scale well on dimensions of the order of millions ( typical case in NLP). Fourthly, the representations learned from (or fitted on the basis of) data specific to a problem are optimally suited for this particular task. In the case of heuristic algorithms like TF-IDF and dimensional reduction methods like SVD, it is unclear whether the target optimization function is suitable for a particular task with this embedding method.

Investment efficiency

To understand how embeddings work, consider an example of a unitary vector by which the weight matrix in a linear layer is multiplied, as shown in Fig. 5.1. In chapters 3 and 4, the size of unitary vectors coincided with the size of the dictionary. A vector is called unitary because it contains 1 at the position corresponding to a particular word, thus indicating its presence.

Fig. 5.1. An example of matrix multiplication for the case of a unitary vector and a matrix of weights of a linear layer. Since the unitary vector contains all zeros and only one unit, the position of this unit plays the role of the choice operator when multiplying the matrix. This is shown in the figure as a darkening of the cells of the weight matrix and the resulting vector. A similar search method, although it works, requires a large consumption of computing resources and is inefficient, since the unitary vector is multiplied by each of the numbers of the weight matrix and the sum is calculated in rows

By definition, the number of rows of the weight matrix of a linear layer receiving a unitary vector at the input should be equal to the size of this unitary vector. When multiplying the matrix, as shown in Fig. 5.1, the resulting vector is actually a string corresponding to a nonzero element of a unitary vector. Based on this observation, you can skip the multiplication step and use an integer value as an index to extract the desired row.

One last note regarding investment performance: despite the example in Figure 5.1, where the dimension of the weight matrix coincides with the dimension of the input unitary vector, this is far from always the case. In fact, attachments are often used to represent words from a space of lower dimension than would be necessary if using a unitary vector or representing the number of occurrences. A typical investment size in scientific articles is from 25 to 500 measurements, and the choice of a specific value is reduced to the amount of available GPU memory.

Attachment Learning Approaches

The purpose of this chapter is not to teach you specific techniques for investing words, but to help you figure out what investments are, how and where they can be applied, how best to use them in models, and what are their limitations. The fact is that in practice it is rarely necessary to write new learning algorithms for word embeddings. However, in this subsection we will give a brief overview of modern approaches to such training. Training in all methods of nesting words is done using only words (i.e. unmarked data), but with a teacher. This is possible due to the creation of auxiliary teaching tasks with the teacher, in which the data is marked implicitly, for the reason that the representation optimized for solving the auxiliary problem should capture many statistical and linguistic properties of the text corpus in order to bring at least some benefit. Here are some examples of such helper tasks.

- Predict the next word in a given sequence of words. It also bears the name of the language modeling problem.

- Predict the missing word by words located before and after it.

- Predict words within a specific window, regardless of position, for a given word.

Of course, this list is not complete and the choice of an auxiliary problem depends on the intuition of the algorithm developer and the computational costs. Examples include GloVe, Continuous Bag-of-Words (CBOW), Skipgrams, etc. Details can be found in Chapter 10 of Goldberg's book (Goldberg, 2017), but we will briefly discuss the CBOW model here. However, in most cases, it is quite enough to use pre-trained word attachments and fit them to the existing task.

Practical application of pre-trained word attachments

The bulk of this chapter, as well as the rest of the book, is about using pre-trained word attachments. Pre-trained using one of the many methods described above on a large body - for example, the full body of Google News, Wikipedia or Common Crawl1 - attachments of words can be freely downloaded and used. Further in the chapter we will show how to correctly find and load these attachments, study some properties of word embeddings and give examples of using pre-trained word embeddings in NLP tasks.

Download attachments

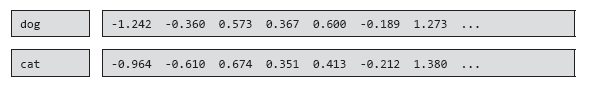

Attachments of words have become so popular and widespread that many different options are available for download, from the original Word2Vec2 to Stanford GloVe ( https://stanford.io/2PSIvPZ ), including Facebook’s FastText3 ( https://fasttext.cc / ) and many others. Usually, attachments are delivered in the following format: each line starts with a word / type followed by a sequence of numbers (i.e. a vector representation). The length of this sequence is equal to the dimension of the presentation (dimension of the attachment). The dimension of investments is usually of the order of hundreds. The number of types of tokens is most often equal to the size of the dictionary and amounts to about a million. For example, here are the first seven dimensions of the dog and cat vectors from GloVe.

For efficient loading and handling of attachments, we describe the helper class PreTrainedEmbeddings (Example 5.1). It creates an index of all the word vectors stored in RAM to simplify the quick search and queries of nearest neighbors with the help of the approximate nearest neighbor calculation package, annoy.

Example 5.1. Using Pre-trained Word Attachments

In these examples, we use the embedding of the words GloVe. You need to download them and create an instance of the PreTrainedEmbeddings class, as shown in Input [1] from Example 5.1.

Relationships between Word Attachments

The key property of word embeddings is the encoding of syntactic and semantic relationships, manifested in the form of patterns of use of words. For example, cats and dogs are usually spoken about very similarly (they discuss their pets, feeding habits, etc.). As a result, attachments for the words cats and dogs are much closer to each other than to attachments for the names of other animals, say ducks and elephants.

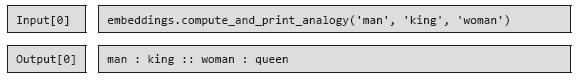

There are many ways to study semantic relationships encoded in word embeddings. One of the most popular methods is to use an analogy task (one of the common types of logical thinking tasks in exams such as SAT):

Word1: Word2 :: Word3: ______

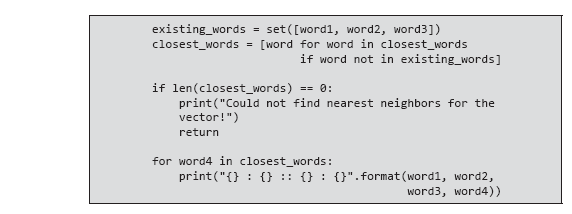

In this task, it is necessary to determine the fourth, given the connection, between the first two by given three words. With the help of nesting words, this problem can be encoded spatially. First, subtract Word2 from Word1. The difference vector between them encodes the relationship between Word1 and Word2. This difference can then be added to Slovo3 and the result is the vector closest to the fourth missing word. To solve the problem of analogy, it is sufficient to query the nearest neighbors by index using this obtained vector. The corresponding function shown in Example 5.2 does exactly the above: it uses vector arithmetic and an approximate index of nearest neighbors to find the missing element in the analogy.

Example 5.2. Solving an analogy problem using word embeddings

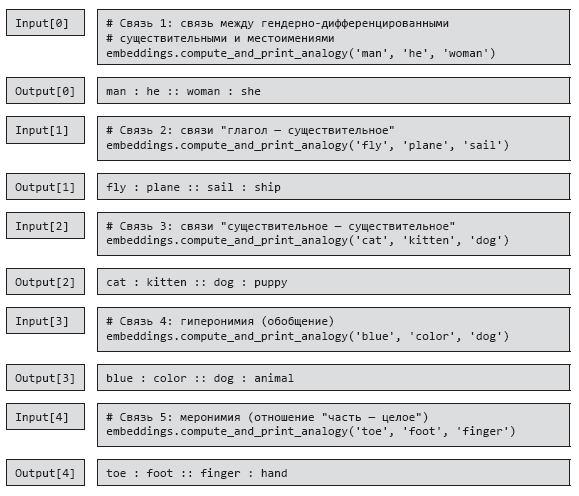

Interestingly, using a simple verbal analogy, one can demonstrate how word embeddings are able to capture a variety of semantic and syntactic relationships (Example 5.3).

Example 5.3 Coding with the help of nesting words of a lot of linguistic connections on the example of tasks on the analogy of SAT

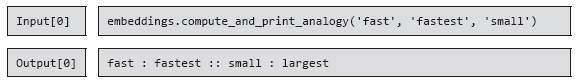

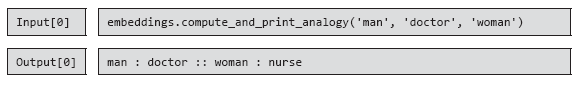

Although it might seem that connections clearly reflect the functioning of the language, not everything is so simple. As Example 5.4 demonstrates, connections can be incorrectly defined, because the vectors of words are determined based on their joint occurrence.

Example 5.4. An example illustrating the danger of coding the meaning of words based on co-occurrence - sometimes it does not work!

Example 5.5 illustrates one of the most common combinations when encoding gender roles.

Example 5.5 Be careful with protected attributes, such as gender, encoded by word attachments. They can lead to unwanted bias in future models.

It turns out that it’s quite difficult to distinguish between patterns of language and deep-rooted cultural biases. For example, doctors are by no means always men, and nurses are not always women, but such prejudices are so persistent that they are reflected in the language, and as a result, in the word vectors, as shown in Example 5.6.

Example 5.6. Cultural prejudices “sewn” into the vectors of words

We should not forget about possible systematic errors in investments, taking into account the growth of their popularity and prevalence in NLP applications. The eradication of systematic errors in word embedding is a new and very interesting field of scientific research (see the article by Bolukbashi et al. [Bolukbasi et al., 2016]). We recommend that you look at ethicsinnlp.org , where you can find up-to-date information on cross-sectional ethics and NLP.

About the authors

Delip Rao is the founder of the San Francisco-based consulting firm Joostware specializing in machine learning and NLP research. One of the co-founders of the Fake News Challenge - an initiative designed to bring together hackers and researchers in the field of AI over the tasks of fact-checking in the media. Delip previously worked on NLP-related research and software products on Twitter and Amazon (Alexa).

Brian McMahan is a research fellow at Wells Fargo, focusing primarily on NLP. Previously worked at Joostware.

»More details on the book can be found on the publisher’s website

» Contents

» Excerpt

25% off coupon for hawkers - PyTorch

Upon payment of the paper version of the book, an electronic book is sent by e-mail.