98 people participated in the competition - there are so few people because this is a sound processing competition on a Russian platform, and even in a docker. I was in a team with Dmitry Danevsky, Kaggle Master, whom we met and agreed to participate while discussing approaches in another competition.

Task

We were given 5 GB of audio files, divided into spoof / human classes, and we had to predict the probability of the class, wrap it in docker and send it to the server. The solution was supposed to work in 30 minutes and weigh less than 100 MB. According to official information, it was necessary to distinguish between a human voice and an automatically generated one - although it seemed to me personally that the spoof class also included cases where the sound was generated by bringing the speaker to the microphone (as attackers do by stealing a recording of someone else's voice for identification).

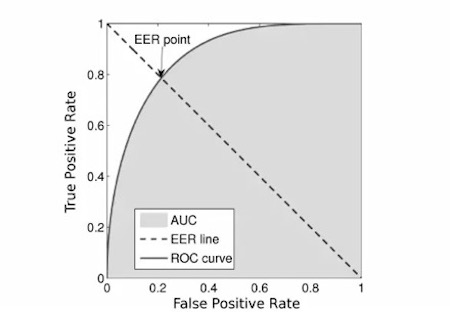

The metric was EER :

We took the first code that came across from the network, because the code of the organizers seemed overloaded.

Competition

The organizers provided the base line and concurrently the main riddle of the competition. It was as simple as a stick: we take audio files, count chalk spectrograms , train MobileNetV2 and find ourselves somewhere in the region of 12th place or lower. Because of this, many would have thought that a dozen people participated in the competition, but this was not so. The whole first stage of the competition, our team could not break this baseline. The ideally identical code gave the result much worse, and any improvements (such as replacing with heavier grids and OOF predictions) helped, but did not bring it closer to the base line.

And then the unexpected happened: about a week before the end of the competition it turned out that the implementation of the metrics of the organizers contained a bug and depended on the order of predictions. Around the same time, it was found that in the docker containers the organizers did not kindly turn off the Internet, so many downloaded the test sample. Then the contest was frozen for 4 days, corrected the metric, updated the data, turned off the Internet and started again for another 2 weeks. After recounting, we were in 7th place with one of our first submissions. This served as a powerful motivation for continuing to participate in the competition.

Speaking of model

We used a resnet-like convolutional grid trained over chalk spectrograms.

- There were 5 such blocks in total. After each such block, we did deep supervision and increased the number of filters by one and a half times.

- During the competition, we moved from a binary classification to a multiclass one in order to more efficiently utilize the mixup technique, in which we mix two sounds and summarize their class labels. In addition, after such a transition, we were able to artificially increase the probability of the spoof class by multiplying it by 1.3. This helped us, since there was an assumption that the balance of classes in the test sample may be different from the training one, and thus we improved the quality of the models.

- Fold models were trained, and the predictions of several models were averaged.

- Frequency encoding technique was also useful. The bottom line is: 2D convolutions are position-invariant, and in the spectrograms the values along the vertical axis have very different physical meanings, so we would like to transfer this information to the model. To do this, we concatenated the spectrogram and the matrix, consisting of numbers in a segment from -1 to 1 from bottom to top.

For clarity, I will give the code:

n, d, h, w = x.size() vertical = torch.linspace(-1, 1, h).view(1, 1, -1, 1) vertical = vertical.repeat(n, 1, 1, w) x = torch.cat([x, vertical], dim=1)

- We trained all this, including on pseudo-labeled data from the leaked test sample of the first stage.

Validation

From the very beginning of the competition, all the participants were tormented by the question: why does local validation give EER 0.01 and lower, and the leaderboard 0.1 and does not particularly correlate? We had 2 hypotheses: either there were duplicates in the data, or training data was collected on one set of speakers, and test data on another.

The truth was somewhere in between. In the training data, about 5% of the data turned out to be duplicates, and this is counting only full duplicates of the hashes (by the way, they could also contain different crop of the same file, but this is not so easy to check - that's why we did not).

To test the second hypothesis, we trained the speaker-id grid, received embeddings for each speaker, clustered it all with k-means, and folded stratified them. Namely, we trained on speakers from one cluster, and predicted speakers from others. This method of validation has already begun to correlate with the leaderboard, although it showed a score 3-4 times better. As an alternative, we tried to validate only on predictions in which the model was at least a little unsure, that is, the difference between the prediction and the class label was> 10 ** - 4 (0.0001), but such a scheme did not bring results.

And what did not work?

On the Internet, simply finding thousands of hours of human speech is enough. In addition, a similar competition was already held several years ago. Therefore, it seemed an obvious idea to download a lot of data (we downloaded ~ 300 GB) and teach a classifier on this. In some cases, training on such data proved a little bit if we taught on additional and on train data before reaching a plateau, and then we only trained on training data. But with this scheme, the model converged in about 2 days, which meant 10 days for all folds. Therefore, we abandoned this idea.

In addition, many participants noticed a correlation between the file length and the class; this correlation was not noticed in the test sample. Ordinary picture grids like resnext, nasnet-mobile, mobileNetV3 did not show themselves very well.

Afterword

It was not easy and sometimes strange, but still we got a cool experience and came out on top. Through trial and error, I realized which approaches are quite working and which are not very good. Now I will use these insights with us when processing sound. I work hard to bring conversational AI to a level indistinguishable from the human, and therefore always in the search for interesting tasks and chips. I hope you also learned something new.

Well, finally, I post our code .