Serverless computing is one of the most visible trends in cloud computing. The basic principle is that infrastructure is no longer the concern of DevOps but of the service provider. Resources scale automatically with the load and can change very quickly.

Another common trait is the tendency to keep code small and focused, which is why serverless computing is sometimes called "function as a service" (FaaS).

Historically, Amazon was the first cloud provider to offer FaaS, with AWS Lambda. Other cloud providers offer equivalents:

- Google Cloud Functions

- Azure Functions from Microsoft

All these offerings provide serverless computing, automatic scaling, and billing only for the resources actually used, but they also lock customers into a proprietary product. There are, however, free and open source alternatives for serverless computing. Worth noting:

- Apache OpenWhisk, originally developed by IBM and incubated at Apache,

- Spring Cloud Function, part of the rich Spring ecosystem, which can also be used as a facade over AWS Lambda, Azure Functions, and OpenWhisk,

- the Fn Project, backed by Oracle.

All of them are completely independent of cloud providers: they can be installed on any cloud, public or private, including your own, and of course on Exoscale.

How the Fn project works

Fn is based entirely on Docker and consists of two main components:

- a CLI program that manages all aspects of the Fn infrastructure and interacts with the Fn server,

- the Fn server itself, a regular application packaged as a Docker container.

Functions deployed to Fn also run in separate containers, which makes it possible to support many programming languages, for example... Clojure!

Function arguments are passed on standard input (STDIN), and results are written to standard output (STDOUT). When the arguments or return values are not simple values (for example, a JSON object), they can be converted by an abstraction layer that Fn provides, the Function Development Kit (FDK).
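To make the STDIN/STDOUT contract concrete, here is a minimal sketch in plain Node.js, without the FDK, of what a JSON-converting layer has to do. It reads the raw input, parses it as JSON, calls the handler, and serializes the result. The names greet, handleRaw and runOnStreams are hypothetical. This is a simplified model of the idea described above and not the actual wire protocol of @fnproject/fdk.

```javascript
// Simplified model of what an FDK does for JSON functions:
// raw text in (STDIN), parsed object to the handler, serialized result out (STDOUT).
// Hypothetical sketch; it does not reproduce the real @fnproject/fdk protocol.

// Business logic: receives a parsed object, returns a plain object.
function greet(input) {
  const name = input && input.name ? input.name : "World";
  return { message: "Hello " + name };
}

// Parses raw input text as JSON (empty input becomes {}),
// calls the handler, and serializes its result back to JSON text.
function handleRaw(rawInput, handler) {
  const input = rawInput.trim() === "" ? {} : JSON.parse(rawInput);
  return JSON.stringify(handler(input));
}

// Connects handleRaw to streams: buffers the whole input, then writes one result.
function runOnStreams(handler, input = process.stdin, output = process.stdout) {
  let raw = "";
  input.setEncoding("utf8");
  input.on("data", (chunk) => {
    raw += chunk;
  });
  input.on("end", () => {
    output.write(handleRaw(raw, handler) + "\n");
  });
}
```

Appending runOnStreams(greet); to the file would let you try it with echo '{"name":"Bob"}' | node greet.js (the file name is arbitrary). The handler itself never touches the streams, which is the separation the FDK provides.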

For convenience, built-in templates make it easy to create functions in a wide range of languages and language versions (Go, several versions of Java, Python, etc.).

Creating FaaS is easy by following this pattern:

- We deploy the function using the CLI Fn: an application configuration file for Fn is created, based on the selected template.

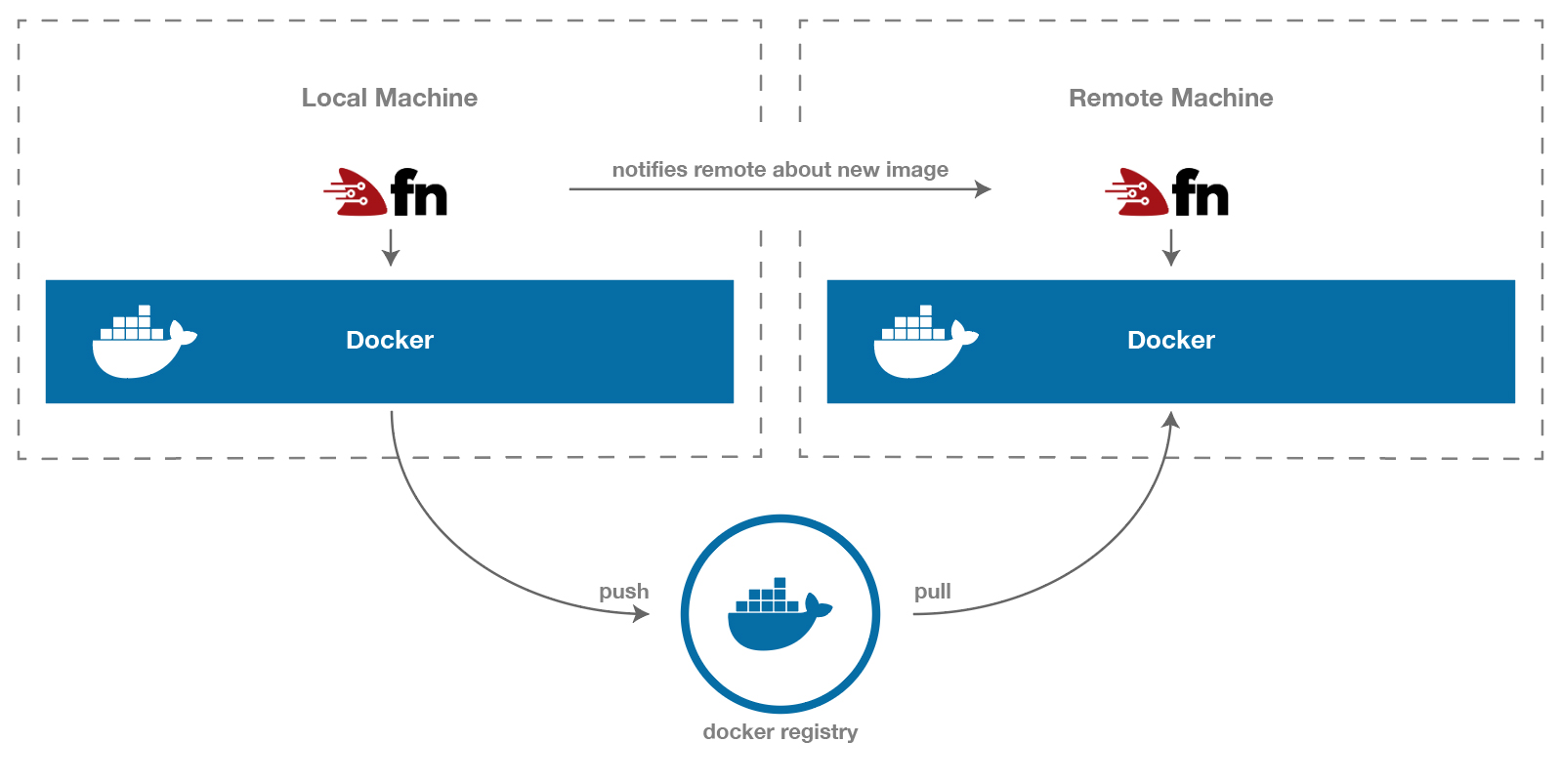

- We roll out our own function, again using the CLI Fn: the container image is placed in a certain repository, after which the server is notified of the existence and placement of this image.

Function Delivery Principle in Fn

Local installation and testing of serverless functions

Let's install Fn on the local machine. Fn requires Docker, so Docker is installed first. We assume Debian/Ubuntu:

    $ sudo apt-get update
    $ sudo apt-get install docker.io

Alternatively, use the package manager or Docker build suited to your system. Then you can go straight to installing the Fn CLI, for example with curl:

    $ curl -LSs https://raw.githubusercontent.com/fnproject/cli/master/install | sh

If you are running OS X with Homebrew installed, you can take the other route:

    $ brew install fn
    ==> Downloading https://homebrew.bintray.com/bottles/fn-0.5.8.high_sierra.bottle.tar.gz
    ==> Downloading from https://akamai.bintray.com/b1/b1767fb00e2e69fd9da73427d0926b1d1d0003622f7ddc0dd3a899b2894781ff?__gda__=exp=1538038849~hmac=c702c9335e7785fcbacad1f29afa61244d02f2eebb
    ######################################################################## 100.0%
    ==> Pouring fn-0.5.8.high_sierra.bottle.tar.gz
    /usr/local/Cellar/fn/0.5.8: 5 files, 16.7MB

Now everything is ready to create our first function with the CLI. For simplicity, we will use a built-in runtime, for example Node:

    $ fn init --runtime node --trigger http hellonode
    Creating function at: /hellonode
    Function boilerplate generated.
    func.yaml created.

A new hellonode directory is created, with some basic configuration files, for further development of our Fn function. Inside it, you can build your application following the conventions of your chosen language or runtime:

    # node:
    hellonode
    ├── func.js
    ├── func.yaml
    └── package.json

    # Java11:
    hellojava11
    ├── func.yaml
    ├── pom.xml
    └── src
        ├── main
        │   └── java
        │       └── com
        │           └── example
        │               └── fn
        │                   └── HelloFunction.java
        └── test
            └── java
                └── com
                    └── example
                        └── fn
                            └── HelloFunctionTest.java

Fn creates the initial project structure, including a func.yaml file containing the settings Fn needs, and installs a code template in the language you selected.

In the case of the Node runtime, this means:

    $ cat hellonode/func.js
    const fdk=require('@fnproject/fdk');

    fdk.handle(function(input){
      let name = 'World';
      if (input.name) {
        name = input.name;
      }
      return {'message': 'Hello ' + name}
    })
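Since the FDK only wraps a plain function, one way to test the greeting logic without a running Fn server or the @fnproject/fdk package is to keep the logic in a named function and call it directly. The sketch below assumes this small refactoring. The name helloHandler is ours and is not part of the generated template. It also adds a guard for a missing input object.

```javascript
// Same logic as the generated func.js, extracted into a plain function
// (plus a guard for a missing input) so it can be tested without the FDK.
function helloHandler(input) {
  let name = "World";
  if (input && input.name) {
    name = input.name;
  }
  return { message: "Hello " + name };
}

// In func.js the handler would then be registered with the FDK:
//   const fdk = require('@fnproject/fdk');
//   fdk.handle(helloHandler);

// Quick local checks with plain Node.js.
console.log(helloHandler({}));             // { message: 'Hello World' }
console.log(helloHandler({ name: "Fn" })); // { message: 'Hello Fn' }
```

Keeping the logic free of FDK calls means unit tests can be run with plain node, while func.js stays a one-line registration.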

Now we will quickly check our function locally to see how everything works.

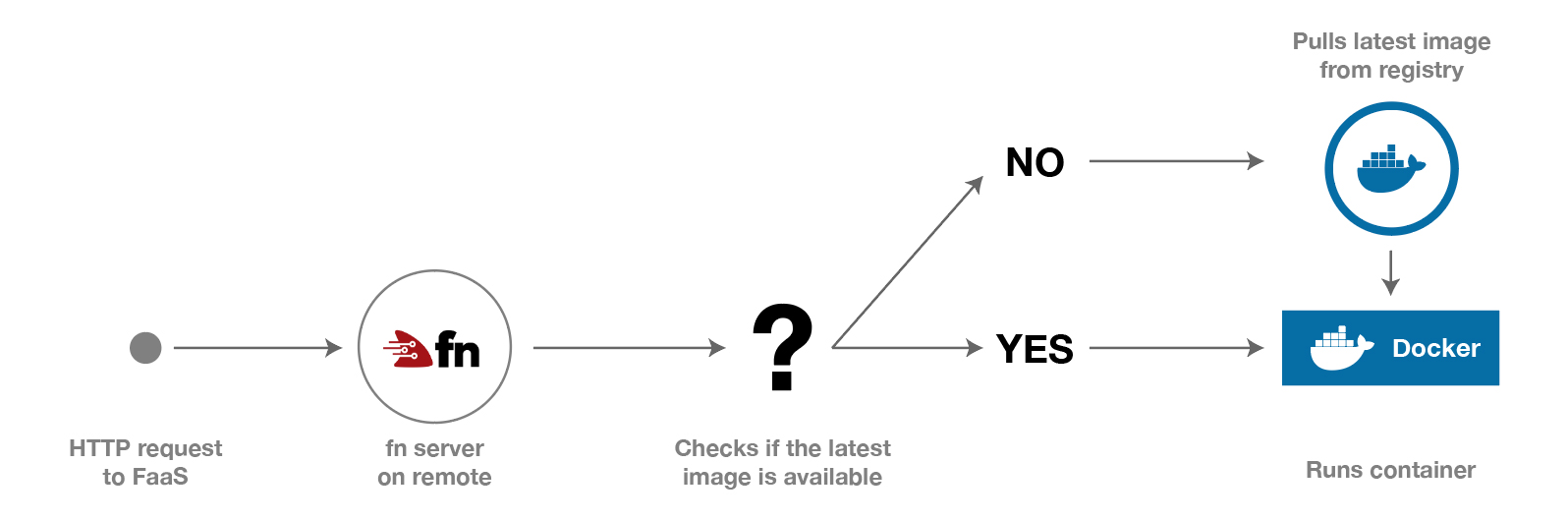

First, we start the Fn server. As already mentioned, the Fn server is a Docker container, so on startup it pulls its image from the Docker registry.

    $ fn start -d   # -d: run the server container in the background
    Unable to find image 'fnproject/fnserver:latest' locally
    latest: Pulling from fnproject/fnserver
    ff3a5c916c92: Pull complete
    1a649ea86bca: Pull complete
    ce35f4d5f86a: Pull complete
    ...
    Status: Downloaded newer image for fnproject/fnserver:latest
    668ce9ac0ed8d7cd59da49228bda62464e01bff2c0c60079542d24ac6070f8e5

To run our function, we need to deploy it. This requires an application: in Fn, applications act as namespaces that group related functions.

The Fn CLI looks for the func.yaml file in the current directory and uses it to configure the function. So first we need to go to our hellonode directory.

    $ cd hellonode
    # --app: the application to deploy into, here fnexo
    # --local: do not push the built image to a registry (local development)
    $ fn deploy --app fnexo --local
    Deploying hellonode to app: fnexo
    Bumped to version 0.0.2
    Building image nfrankel/hellonode:0.0.3 .
    Updating function hellonode using image nfrankel/hellonode:0.0.3...
    Successfully created app: fnexo
    Successfully created function: hellonode with nfrankel/hellonode:0.0.3
    Successfully created trigger: hellonode-trigger

As the command output shows, a new Docker image containing our function is created. The function is ready to be called, and there are two ways to do it:

- using the fn invoke command,

- calling it directly over HTTP.

Invoking through fn invoke simply emulates an HTTP call, which is convenient for quick checks:

    $ fn invoke fnexo hellonode   # invoke hellonode in the fnexo app
    {"message":"Hello World"}

In order to call the function directly, you need to know the full URL:

    $ curl http://localhost:8080/t/fnexo/hellonode-trigger
    {"message":"Hello World"}

The Fn server exposes its functions on port 8080, and the function URL seems to follow the t/app/function scheme, but not quite. Over HTTP, the function is not called directly but through a so-called trigger, which, as its name suggests, "triggers" the function call. Triggers are defined in the project's func.yaml file:

    schema_version: 20180708
    name: hellonode
    version: 0.0.3
    runtime: node
    entrypoint: node func.js
    format: json
    triggers:
    - name: hellonode-trigger
      type: http
      source: /hellonode-trigger   # trigger URL path
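The URL rule can be summarized in a few lines. The public URL is the server address, then /t/, then the application name, then the trigger's source path from func.yaml. The helper below is a hypothetical illustration of that scheme, checked against the URLs used in this article. It is not part of Fn.

```javascript
// Builds the HTTP URL of an Fn trigger: <server>/t/<app><trigger source>.
// Hypothetical helper illustrating the URL scheme shown in this article.
function triggerUrl(serverUrl, appName, triggerSource) {
  const base = serverUrl.replace(/\/+$/, ""); // drop trailing slashes
  const source = triggerSource.startsWith("/") ? triggerSource : "/" + triggerSource;
  return `${base}/t/${encodeURIComponent(appName)}${source}`;
}

console.log(triggerUrl("http://localhost:8080", "fnexo", "/hellonode-trigger"));
// http://localhost:8080/t/fnexo/hellonode-trigger
console.log(triggerUrl("http://localhost:8080/", "fnexo", "/hellonode"));
// http://localhost:8080/t/fnexo/hellonode
```

The function name never appears in the URL. Only the trigger's source does, which is why renaming the source path changes the address.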

We can change the trigger's source path so that it matches the function name, which simplifies things:

    triggers:
    - name: hellonode-trigger
      type: http
      source: /hellonode   # now matches the function name

Then we deploy the function again and call it through the new trigger:

    $ fn deploy --app fnexo hellonode --local
    $ curl http://localhost:8080/t/fnexo/hellonode
    {"message":"Hello World"}

Everything works! Time to move on to full-scale experiments and publish our function on a server!

Installing serverless function services on your own infrastructure

Let's quickly create a virtual machine using the Exoscale CLI. If you haven't set it up yet, you can follow our quick-start guide. It's a handy tool that will further boost your productivity. Don't forget to add a rule opening port 8080 in the security group! The following commands launch a clean virtual machine, ready to host our functions:

    $ exo firewall create fn-securitygroup
    $ exo firewall add fn-securitygroup ssh --my-ip
    $ exo firewall add fn-securitygroup -p tcp -P 8080-8080 -c 0.0.0.0/0
    $ exo vm create fn-server -s fn-securitygroup

Then you can ssh into the virtual machine and install the remote Fn server:

    $ exo ssh fn-server
    The authenticity of host '185.19.30.175 (185.19.30.175)' can't be established.
    ECDSA key fingerprint is SHA256:uaCKRYeX4cvim+Gr8StdPvIQ7eQgPuOKdnj5WI3gI9Q.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added '185.19.30.175' (ECDSA) to the list of known hosts.
    Welcome to Ubuntu 18.04 LTS (GNU/Linux 4.15.0-20-generic x86_64)

Then install Docker and Fn the same way as on the local machine, and start the server:

    $ sudo apt-get update
    $ sudo apt-get install docker.io
    $ sudo systemctl start docker
    $ curl -LSs https://raw.githubusercontent.com/fnproject/cli/master/install | sh
    $ sudo fn start
    ...
            ______
           / ____/___
          / /_  / __ \
         / __/ / / / /
        /_/   /_/ /_/
            v0.3.643

Fn is ready for functions! To deploy functions to the remote server, we will use the deploy command from the local computer, omitting the --local flag.

In addition, Fn needs to know the location of the Fn server and of the Docker registry. These can be set through the FN_API_URL and FN_REGISTRY environment variables respectively, but Fn offers a more convenient way to create and manage deployment configurations.

In Fn terms, a deployment configuration is called a context. The following command creates one:

    $ fn create context exoscale --provider default --api-url http://185.19.30.175:8080 --registry nfrankel

You can view the available contexts like this:

    $ fn list contexts
    CURRENT  NAME      PROVIDER  API URL                    REGISTRY
             default   default   http://localhost:8080/
             exoscale  default   http://185.19.30.175:8080  nfrankel

And switch to the context that was just created, like this:

    $ fn use context exoscale
    Now using context: exoscale

From this point on, deploying Fn functions pushes Docker images to the selected Docker Hub account (nfrankel in my case) and then notifies the remote server (here, http://185.19.30.175:8080) of the location and version of the latest image containing your function.

    $ fn deploy --app fnexo .   # run from the hellonode directory
    Deploying function at: /.
    Deploying hellonode to app: fnexo
    Bumped to version 0.0.5
    Building image nfrankel/hellonode:0.0.5 .
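The output shows that each deployment bumps the function version and tags the image as registry/function:version, with the registry taken from the active context (nfrankel here). The sketch below reproduces only that naming pattern as observed in this output, using a patch-level bump. The function names are hypothetical, and Fn's own versioning logic may handle more cases.

```javascript
// Increments the patch component of a "major.minor.patch" version string.
function bumpPatch(version) {
  const parts = version.split(".").map(Number);
  if (parts.length !== 3 || parts.some((n) => !Number.isInteger(n) || n < 0)) {
    throw new Error(`unsupported version: ${version}`);
  }
  parts[2] += 1;
  return parts.join(".");
}

// Composes an image reference such as nfrankel/hellonode:0.0.5.
function imageReference(registry, functionName, version) {
  return `${registry}/${functionName}:${version}`;
}

// Example input version; the registry comes from the active context.
const next = bumpPatch("0.0.4");
console.log(imageReference("nfrankel", "hellonode", next)); // nfrankel/hellonode:0.0.5
```

Because the registry is part of the image name, switching contexts changes where the image is pushed without touching func.yaml.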

Finally:

    $ curl http://185.19.30.175:8080/t/fnexo/hellonode
    {"message":"Hello World"}
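The same call can be made from a program instead of curl. This is a minimal sketch with several assumptions. It needs Node.js 18 or later for the built-in fetch. It also assumes the function accepts a JSON body sent with POST, since that is where the generated handler looks for input.name. The server address is the one from this example, and callHello is a hypothetical name. The fetch implementation is a parameter so the logic can be exercised without a network.

```javascript
// Calls the hellonode function through its HTTP trigger.
// Assumes Node.js 18+ (global fetch); fetchImpl can be replaced for testing.
async function callHello(url, name, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    // The generated func.js reads input.name from the JSON payload.
    body: JSON.stringify(name === undefined ? {} : { name }),
  });
  if (!response.ok) {
    throw new Error(`HTTP ${response.status} from ${url}`);
  }
  return response.json();
}

// Example (requires the remote Fn server from this section to be reachable):
// callHello("http://185.19.30.175:8080/t/fnexo/hellonode", "Exoscale")
//   .then((result) => console.log(result.message));
```

Checking response.ok turns HTTP errors from the Fn server into rejected promises instead of silently parsing an error body.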

Function life cycle in serverless computing based on Fn

The advantages of serverless computing on your own infrastructure

Serverless computing is a convenient solution for quickly implementing independent parts of an application that interact with more complex applications or microservices.

However, this often comes with the hidden cost of lock-in to the chosen provider, which, depending on the use case and volume, can lead to higher costs and reduced flexibility in the future.

Multi-cloud and hybrid cloud architectures also suffer, because you can easily find yourself wanting to use serverless computing where corporate policy does not allow it.

Fn is easy to work with and offers almost the same FaaS interface at low cost. It removes any lock-in to a provider: you can install it locally or with any cloud provider of your choice. You are also free to choose your programming language.

This article covers only the basics of Fn, but creating your own runtime is fairly simple, and the overall architecture can be extended further with the Fn load balancer, or by placing Fn behind a proxy for protection.
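Putting Fn behind a proxy can be illustrated with a minimal Node.js sketch. The proxy only forwards requests that target trigger URLs (/t/...) and carry a shared bearer token, which keeps the rest of the Fn API off the public port. Everything here is an assumption for illustration: the ports, the header scheme and the token handling. None of it is an Fn feature. A real deployment would more likely use an established proxy such as nginx with TLS.

```javascript
const http = require("http");

// Hypothetical settings: address of the Fn server and port of the proxy.
const FN_HOST = "127.0.0.1";
const FN_PORT = 8080;
const PROXY_PORT = 8000;

// Lets a request through only if it carries "Authorization: Bearer <token>"
// and targets a trigger URL (/t/...), so the management API stays hidden.
// A production setup would also use a constant-time comparison and TLS.
function isAllowed(req, token) {
  const auth = req.headers["authorization"];
  return auth === `Bearer ${token}` && req.url.startsWith("/t/");
}

// Creates (but does not start) a proxy server forwarding allowed requests to Fn.
function createProxy(token) {
  if (!token) {
    throw new Error("a non-empty token is required");
  }
  return http.createServer((req, res) => {
    if (!isAllowed(req, token)) {
      res.writeHead(401, { "Content-Type": "text/plain" });
      res.end("unauthorized\n");
      return;
    }
    const headers = { ...req.headers };
    delete headers.authorization; // the proxy credential is not forwarded to Fn
    const upstream = http.request(
      { host: FN_HOST, port: FN_PORT, method: req.method, path: req.url, headers },
      (upstreamRes) => {
        res.writeHead(upstreamRes.statusCode, upstreamRes.headers);
        upstreamRes.pipe(res);
      }
    );
    upstream.on("error", () => {
      if (res.headersSent) {
        res.destroy(); // response already started: just abort it
        return;
      }
      res.writeHead(502, { "Content-Type": "text/plain" });
      res.end("bad gateway\n");
    });
    req.pipe(upstream);
  });
}

// Usage (reading the token from PROXY_TOKEN is an assumption):
// createProxy(process.env.PROXY_TOKEN).listen(PROXY_PORT);
```

With such a proxy in place, only its port would need to be opened in the security group, and port 8080 could stay closed to the outside.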