We regularly encounter the Apache Cassandra database and the need to operate it within the framework of the Kubernetes-based infrastructure. In this article, we will share our vision of the necessary steps, criteria and existing solutions (including an overview of operators) for the migration of Cassandra to K8s.

“Who can control a woman will cope with the state”

Who is Cassandra? This is a distributed storage system designed to manage large amounts of data, while providing high availability without a single point of failure. The project hardly needs a long introduction, so I will give only the main features of Cassandra, which will be relevant in the context of a specific article:

- Cassandra is written in Java.

- The Cassandra topology includes several levels:

- Node - one deployed instance of Cassandra;

- Rack - a group of Cassandra instances, united by any attribute, located in one data center;

- Datacenter - the totality of all groups of Cassandra instances located in one data center;

- Cluster - a collection of all data centers.

- Cassandra uses an IP address to identify the host.

- For the speed of read and write operations, Cassandra stores part of the data in RAM.

Now for the actual potential move to Kubernetes.

Check-list for migration

Speaking about the migration of Cassandra to Kubernetes, we hope that it will become more convenient to manage it with the move. What will be required for this, what will help in this?

1. Data storage

As already specified, part of the data Cassanda stores in RAM - in Memtable . But there is another part of the data that is saved to disk - in the form of SSTable . To this data is added the entity Log Log - records of all transactions that are also saved to disk.

Cassandra Write Transaction Scheme

In Kubernetes, we can use PersistentVolume to store data. Thanks to well-developed mechanisms, working with data in Kubernetes becomes easier every year.

For each pod with Cassandra we will allocate our PersistentVolume

It is important to note that Cassandra itself implies data replication, offering built-in mechanisms for this. Therefore, if you are building a Cassandra cluster from a large number of nodes, then there is no need to use distributed systems like Ceph or GlusterFS to store data. In this case, it will be logical to store data on the host disk using local persistent disks or mounting

hostPath

.

Another question is if you want to create a separate development environment for each feature branch. In this case, the correct approach would be to raise one Cassandra node, and store the data in distributed storage, i.e. Ceph and GlusterFS mentioned will be your option. Then the developer will be sure that he will not lose test data even if one of the nodes of the Kuberntes cluster is lost.

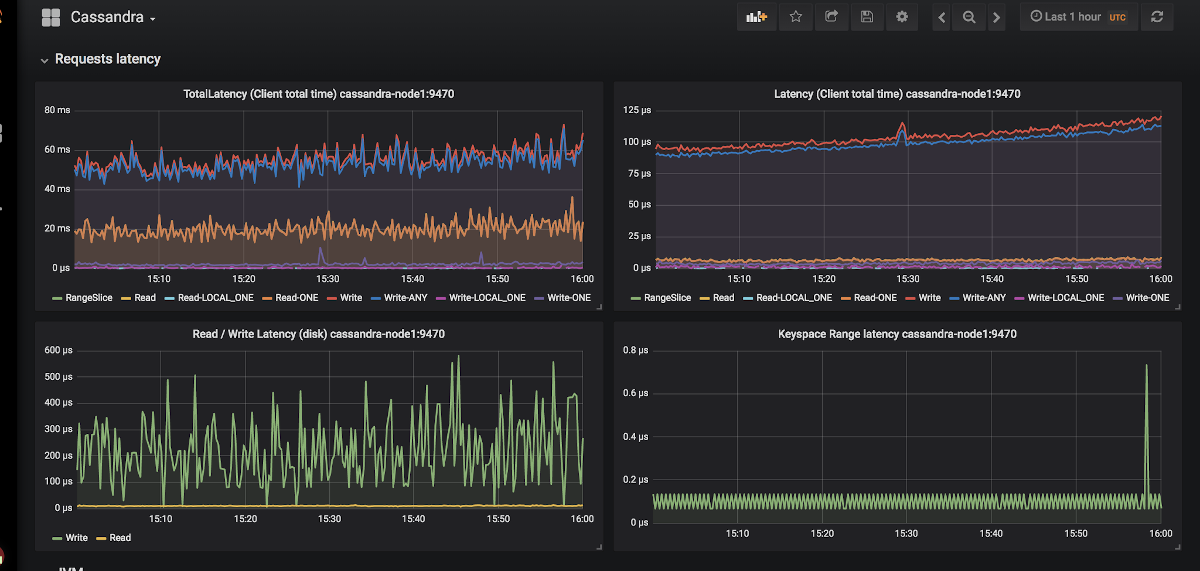

2. Monitoring

A virtually non-alternative choice for implementing monitoring in Kubernetes is Prometheus (we talked about this in detail in the corresponding report ) . How's Cassandra doing with metric exporters for Prometheus? And, what is even more important in some way, with dashboards suitable for them for Grafana?

An example of the appearance of graphs in Grafana for Cassandra

There are only two exporters: jmx_exporter and cassandra_exporter .

We chose the first one for ourselves, because:

- JMX Exporter is growing and developing, while Cassandra Exporter has not been able to get the proper community support. Cassandra Exporter still does not support most versions of Cassandra.

- You can run it as javaagent by adding the

-javaagent:<plugin-dir-name>/cassandra-exporter.jar=--listen=:9180

flag-javaagent:<plugin-dir-name>/cassandra-exporter.jar=--listen=:9180

. - For him there is an adequate dashboad that is incompatible with Cassandra Exporter.

3. Selection of Kubernetes primitives

According to the above structure of the Cassandra cluster, let's try to translate everything that is described there into Kubernetes terminology:

- Cassandra Node → Pod

- Cassandra Rack → StatefulSet

- Cassandra Datacenter → pool from StatefulSets

- Cassandra Cluster → ???

It turns out that some additional entity is missing to manage the entire Cassandra cluster at once. But if something is not there, we can create it! In Kubernetes, this is the mechanism for determining your own resources - Custom Resource Definitions .

Announcement of additional resources for logs and alerts

But Custom Resource alone does not mean anything: you need a controller for it. You may have to resort to the help of a Kubernetes operator ...

4. Identification of pods

The point above, we agreed that one Cassandra node would equal one pod in Kubernetes. But the pod's IP addresses will be different each time. And the identification of the node in Cassandra is based on the IP address ... It turns out that after each removal of the pod, the Cassandra cluster will add a new node.

There is a way out, and not even one:

- We can keep records by host identifiers (UUIDs that uniquely identify Cassandra instances) or by IP addresses and save all this in some structures / tables. The method has two main disadvantages:

- The risk of a race condition when two nodes fall at once. After the upgrade, the Cassandra nodes will go to request an IP address from the table for themselves and compete for the same resource.

- If the Cassandra node has lost its data, we will no longer be able to identify it.

- The second solution seems like a small hack, but nonetheless: we can create a Service with ClusterIP for each Cassandra node. Problems with this implementation:

- If there are a lot of nodes in the Cassandra cluster, we will have to create a lot of Services.

- ClusterIP feature is implemented through iptables. This can be a problem if the Cassandra cluster has many (1000 ... or even 100?) Nodes. Although balancing based on IPVS can solve this problem.

- The third solution is to use a network of nodes for Cassandra nodes instead of a dedicated pod network by turning on

hostNetwork: true

. This method imposes certain restrictions:

- To replace the nodes. It is necessary that the new host must have the same IP address as the previous one (in clouds like AWS, GCP, this is almost impossible);

- Using the network of cluster nodes, we begin to compete for network resources. Therefore, putting on one cluster node more than one pod with Cassandra will be problematic.

5. Backups

We want to keep the full version of the data for one Cassandra node on a schedule. Kubernetes provides a convenient opportunity using CronJob , but here Cassandra inserts the sticks into the wheels.

Let me remind you that part of the Cassandra data is stored in memory. To make a full backup, you need to transfer data from memory ( Memtables ) to disk ( SSTables ). At this point, the Cassandra node stops accepting connections, completely shutting down from the cluster.

After that, a backup ( snapshot ) is removed and the scheme ( keyspace ) is saved . And then it turns out that just a backup does not give us anything: you need to save the data identifiers for which the Cassandra node was responsible - these are special tokens.

Token distribution to identify which data the Cassandra nodes are responsible for

An example script for removing Cassandra from Google in Kubernetes can be found at this link . The only point that the script does not take into account is dumping data to the node before removing the snapshot. That is, backup is performed not for the current state, but for the state a little earlier. But this helps not to get the node out of work, which seems very logical.

set -eu if [[ -z "$1" ]]; then info "Please provide a keyspace" exit 1 fi KEYSPACE="$1" result=$(nodetool snapshot "${KEYSPACE}") if [[ $? -ne 0 ]]; then echo "Error while making snapshot" exit 1 fi timestamp=$(echo "$result" | awk '/Snapshot directory: / { print $3 }') mkdir -p /tmp/backup for path in $(find "/var/lib/cassandra/data/${KEYSPACE}" -name $timestamp); do table=$(echo "${path}" | awk -F "[/-]" '{print $7}') mkdir /tmp/backup/$table mv $path /tmp/backup/$table done tar -zcf /tmp/backup.tar.gz -C /tmp/backup . nodetool clearsnapshot "${KEYSPACE}"

Example bash script to remove backup from a single Cassandra node

Ready-made solutions for Cassandra in Kubernetes

What are they currently using to deploy Cassandra in Kubernetes, and which of these is most suitable for the given requirements?

1. StatefulSet or Helm Chart Solutions

Using the basic StatefulSets to start a Cassandra cluster is a good option. Using the Helm chart and Go templates, you can provide the user with a flexible interface for deploying Cassandra.

Usually this works fine ... until something unexpected happens - for example, a node goes down. The standard Kubernetes tools simply cannot take into account all the above features. In addition, this approach is very limited in how much it can be expanded for more complex use: node replacement, backup, restore, monitoring, etc.

Representatives:

Both charts are equally good, but are prone to the problems described above.

2. Solutions based on Kubernetes Operator

Such options are more interesting because they provide extensive cluster management capabilities. To design a Cassandra statement, like any other database, a good pattern looks like Sidecar <-> Controller <-> CRD:

Node management diagram in a correctly designed Cassandra statement

Consider the existing operators.

1. Cassandra-operator by instaclustr

- Github

- Willingness: Alpha

- License: Apache 2.0

- Implemented in: Java

This is indeed a very promising and rapidly developing project from a company that offers Cassandra managed deployments. It, as described above, uses a sidecar container that accepts commands via HTTP. It is written in Java, so sometimes it lacks the more advanced client-go library functionality. Also, the operator does not support different Racks for one Datacenter.

But the operator has such advantages as monitoring support, high-level cluster management using CRD, and even documentation on removing backups.

2. Navigator by Jetstack

- Github

- Willingness: Alpha

- License: Apache 2.0

- Implemented in: Golang

An operator for deploying DB-as-a-Service. Currently supports two databases: Elasticsearch and Cassandra. It has such interesting solutions as access control to the database via RBAC (for this, its own separate navigator-apiserver is raised). An interesting project, which would be worth a closer look, but the last commit was made a year and a half ago, which clearly reduces its potential.

3. Cassandra-operator from vgkowski

- Github

- Willingness: Alpha

- License: Apache 2.0

- Implemented in: Golang

They did not consider it “seriously”, since the last commit to the repository was more than a year ago. Operator development is abandoned: the latest version of Kubernetes, declared as supported, is 1.9.

4. Cassandra-operator from Rook

- Github

- Willingness: Alpha

- License: Apache 2.0

- Implemented in: Golang

An operator whose development is not going as fast as we would like. It has a well-thought-out CRD structure for managing the cluster, solves the problem of identifying nodes using Service with ClusterIP (the same "hack") ... but for now that's all. There is no monitoring and backups out of the box right now (by the way, we started monitoring ourselves ). An interesting point is that using this operator you can also deploy ScyllaDB.

NB: We used this operator with minor modifications in one of our projects. There were no problems in the operator’s work during the entire operation (~ 4 months of operation).

5. CassKop by Orange

- Github

- Willingness: Alpha

- License: Apache 2.0

- Implemented in: Golang

The youngest operator on the list: the first commit was made on May 23, 2019. Already, he has in his arsenal a large number of features from our list, more details of which can be found in the project repository. The operator is based on the popular operator-sdk. Supports out-of-box monitoring. The main difference from other operators is the use of the CassKop plugin , implemented in Python and used for communication between Cassandra nodes.

conclusions

The number of approaches and possible options for porting Cassandra to Kubernetes speaks for itself: the topic is in demand.

At this stage, you can try one of the above at your own peril and risk: none of the developers guarantees 100% work of their solution in the production environment. But now, many products look promising to try using them in development stands.

I think in the future this woman on the ship will have to go!

PS

Read also in our blog: