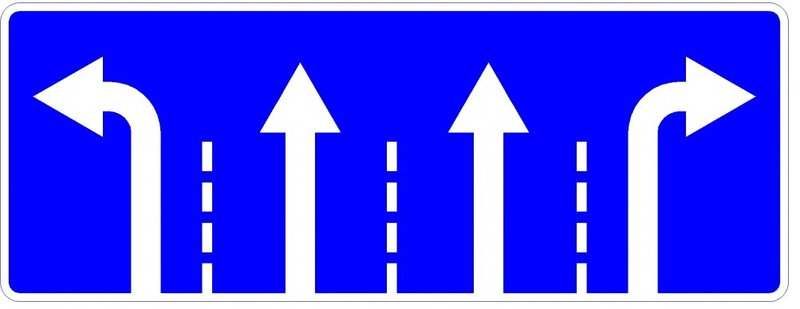

If we picture today's machine learning algorithms as movement from ignorance (bottom) to awareness (top), then current algorithms resemble a jump. After the jump, development (learning ability) slows down, and a turn and fall (retraining) are inevitable. All effort goes into putting as much power as possible into the jump, which raises its height but does not fundamentally change the outcome. By pumping up the jump we gain height, but we do not learn to fly. Mastering the technique of "controlled flight" will require rethinking some basic principles.

Neural networks use a static structure, which does not allow them to go beyond the learning capacity fixed for the structure as a whole. By fixing the network at a set number of neurons, we cap its learning capacity at a limit the network can never exceed. Creating the network with more neurons raises that capacity, but slows down training. Changing the network structure dynamically during training and using binary data will give the network unique properties and will get around these limitations.

1. Dynamic network structure

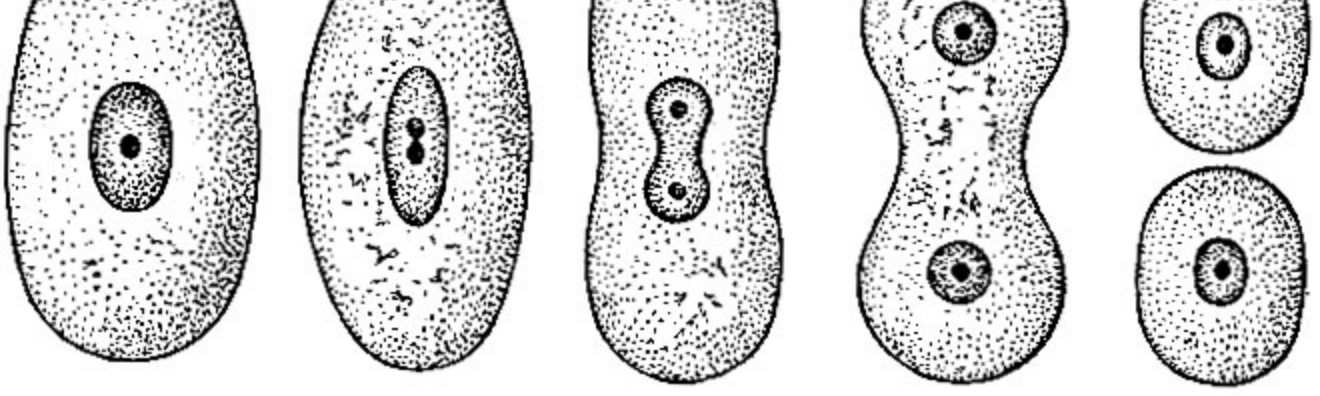

The network itself must decide on its size, growing in the required direction as needed. When we create a network of one neuron, or of one or two layers, we cannot know in advance what learning capacity, and therefore what size, it will need. Our network should start from scratch (with no structure at all) and expand on its own in the required direction as new training data arrives.

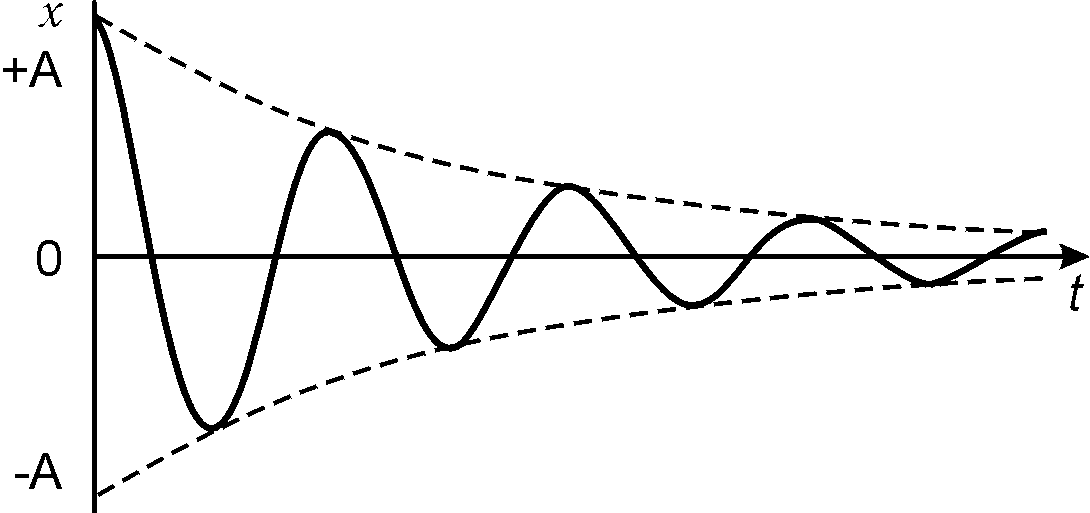
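
One way to picture a structure that starts empty and grows only where new data requires is a binary trie, in which each node routes on one input bit. This is a stand-in chosen for illustration, not the author's algorithm; the classes `Node` and `GrowingNetwork`, the methods `train`/`predict`, and the one-bit-per-level routing are all hypothetical.

```python
from typing import Dict, Optional, Sequence


class Node:
    """A hypothetical 'neuron' that routes on one input bit."""

    def __init__(self) -> None:
        self.children: Dict[int, "Node"] = {}  # created only when a bit value is first seen
        self.label: Optional[int] = None       # output stored at the end of a path


class GrowingNetwork:
    """Starts with no structure and adds nodes only along paths that
    new training examples actually use."""

    def __init__(self) -> None:
        self.root: Optional[Node] = None
        self.size = 0  # number of nodes created so far

    def _new_node(self) -> Node:
        self.size += 1
        return Node()

    def train(self, bits: Sequence[int], label: int) -> None:
        if self.root is None:
            self.root = self._new_node()
        node = self.root
        for b in bits:
            if b not in node.children:
                node.children[b] = self._new_node()  # grow only where this example needs it
            node = node.children[b]
        node.label = label

    def predict(self, bits: Sequence[int]) -> Optional[int]:
        node = self.root
        for b in bits:
            if node is None:
                return None  # path was never grown: unknown input
            node = node.children.get(b)
        return None if node is None else node.label


net = GrowingNetwork()
net.train([0, 1, 1], label=1)  # creates the root plus 3 nodes
net.train([0, 1, 0], label=0)  # reuses the shared prefix, adds 1 node
print(net.size)                # 5
print(net.predict([0, 1, 0]))  # 0
print(net.predict([1, 1, 1]))  # None: never seen
```

The second example reuses the first three nodes and adds only one, and an input the network has never seen returns `None` rather than a guess. The trie keeps growing as distinct patterns accumulate, so available memory, not a preset size, becomes the limit.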

2. Signal attenuation

Neural networks that sum signals take an "analog" approach to computation. Repeated transformations attenuate the signal and cause it to be lost in transmission. In large networks, "analog" computation inevitably leads to such losses. Recurrent networks are used to address signal attenuation, but in effect they are a crutch that only mitigates the problem without solving it completely. The solution is to use a binary signal: no number of transformations attenuates such a signal or causes it to be lost.
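
The attenuation argument can be checked with a toy chain of stages. In this sketch, which is an assumption rather than a model of any real architecture, every stage multiplies the signal by the same weight of 0.9; the analog version passes the product along, while the binary version re-quantizes it to 0 or 1 with a threshold of 0.5. The functions `analog_chain` and `binary_chain` and both constants are hypothetical.

```python
def analog_chain(x: float, weight: float, layers: int) -> float:
    """Pass a signal through `layers` stages that each scale it by `weight`."""
    for _ in range(layers):
        x = x * weight
    return x


def binary_chain(x: int, weight: float, layers: int, threshold: float = 0.5) -> int:
    """Same stages, but each output is re-quantized to 0 or 1 by a threshold."""
    for _ in range(layers):
        x = 1 if x * weight >= threshold else 0
    return x


analog = analog_chain(1.0, 0.9, 50)
binary = binary_chain(1, 0.9, 50)
print(f"analog after 50 stages: {analog:.4f}")
print(f"binary after 50 stages: {binary}")
```

After 50 stages the analog signal has shrunk to 0.9^50, roughly 0.005 of its starting value, while the binary signal is still 1. The re-quantization only preserves the signal while each stage's product stays at or above the threshold; with a weight below 0.5 the binary chain drops to 0 after the first stage.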

3. Network learning ability

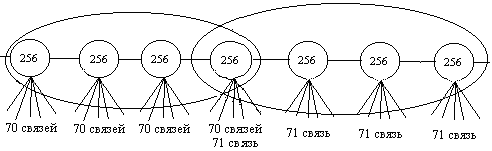

By expanding its structure as it learns, the network increases its learning capacity. The network itself determines, from the task, in which direction to develop and how many neurons the problem requires. Network size is limited only by the computing power of the servers and the capacity of their hard disks.

4. Network training

Switching the network from analog to binary signals makes accelerated learning possible. Training sets the output signal unambiguously. Each training step changes only a small fraction of the neurons and leaves most of them untouched. Confining changes to a few network elements cuts training time many times over.
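
To make the claim that training touches only a few elements concrete, the sketch below swaps in a known weightless (RAM-based) design in the style of WiSARD, which the text does not name: the input bits are cut into 4-bit tuples, each tuple addresses one cell of a 16-cell lookup table, and training simply sets the addressed cells to 1. The class `RamDiscriminator`, its parameters and the example pattern are assumptions for illustration.

```python
from typing import List, Sequence


class RamDiscriminator:
    """WiSARD-style weightless discriminator: each fixed-size tuple of input
    bits addresses one cell of a small lookup table (a 'RAM node')."""

    def __init__(self, input_bits: int, tuple_size: int) -> None:
        if input_bits % tuple_size != 0:
            raise ValueError("input_bits must be a multiple of tuple_size")
        self.tuple_size = tuple_size
        n_rams = input_bits // tuple_size
        self.rams: List[List[int]] = [[0] * (2 ** tuple_size) for _ in range(n_rams)]

    def _addresses(self, bits: Sequence[int]) -> List[int]:
        """Read each tuple of bits as a binary number: the cell it addresses."""
        addrs = []
        for i in range(len(self.rams)):
            addr = 0
            for b in bits[i * self.tuple_size:(i + 1) * self.tuple_size]:
                addr = (addr << 1) | b
            addrs.append(addr)
        return addrs

    def train(self, bits: Sequence[int]) -> int:
        """Set the addressed cell in every RAM; return how many cells changed."""
        changed = 0
        for ram, addr in zip(self.rams, self._addresses(bits)):
            if ram[addr] == 0:
                ram[addr] = 1
                changed += 1
        return changed

    def response(self, bits: Sequence[int]) -> int:
        """Number of RAM nodes that recognise their part of the input."""
        return sum(ram[addr] for ram, addr in zip(self.rams, self._addresses(bits)))

    def total_cells(self) -> int:
        return sum(len(ram) for ram in self.rams)


d = RamDiscriminator(input_bits=16, tuple_size=4)
x = [1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 0]
print(d.total_cells())  # 64
print(d.train(x))       # 4: one cell per RAM node
print(d.response(x))    # 4: every node recognises its tuple
print(d.train(x))       # 0: nothing left to change
```

Training on one 16-bit example changes 4 of the 64 cells and leaves the other 60 untouched, and the stored response to that example is set exactly rather than nudged toward a target. Repeating the same example changes nothing.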

5. Network calculation

To compute a neural network's output, we must compute every element. Skipping even some of the neurons can trigger a butterfly effect, in which an insignificant signal changes the result of the entire calculation. Binary signals behave differently: at each stage, part of the data can be excluded from the calculation without consequences. The larger the network, the more elements we can ignore. Computing the network's result comes down to evaluating a small number of elements, down to a fraction of a percent of the network: 3.2% (32 neurons) suffices for a network of a thousand neurons, 0.1% (1 thousand) for a network of a million, and 0.003% (32 thousand) for a network of a billion. As the network grows, the percentage of neurons needed for the calculation decreases.

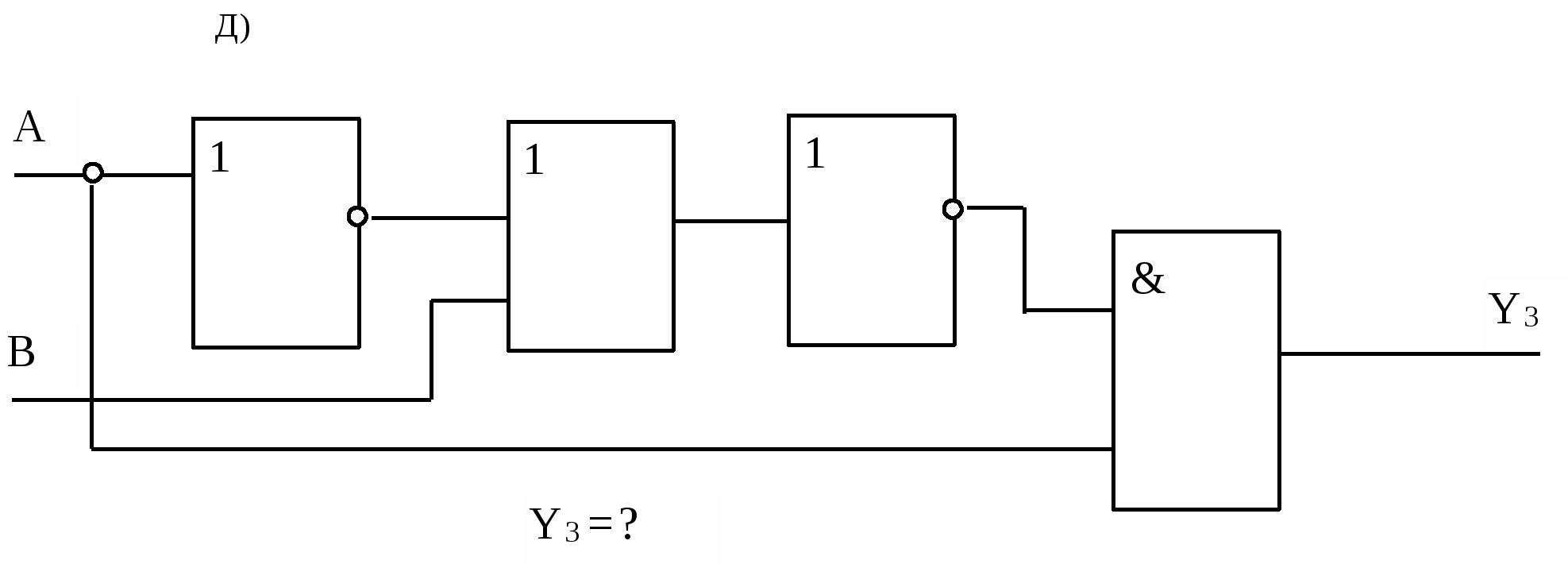
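
The quoted percentages can be checked with a few lines of arithmetic. The text does not say how the number of evaluated neurons scales, but the three figures it gives sit within about 1.2% of √N, so the share evaluated is roughly 100/√N percent; that reading is an observation about these numbers, not a rule stated by the author. `QUOTED` and `share_percent` are names introduced only for this check.

```python
import math

# (network size N, neurons evaluated k) exactly as quoted in the text above
QUOTED = [(1_000, 32), (1_000_000, 1_000), (1_000_000_000, 32_000)]


def share_percent(n: int, k: int) -> float:
    """Percentage of the network that has to be evaluated."""
    return 100.0 * k / n


for n, k in QUOTED:
    print(f"N={n:>13,}  k={k:>6,}  share={share_percent(n, k):.4f}%  sqrt(N)={math.sqrt(n):,.0f}")
```

The shares come out as 3.2000%, 0.1000% and 0.0032%; the last is the exact value behind the rounded 0.003% in the text. Under the √N reading, each thousandfold increase in network size cuts the evaluated share by a factor of about 31.6.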

6. Interpretation of results

In neural networks, signals multiplied by weight coefficients make it impossible to pinpoint where a signal came from. Every incoming signal, without exception, affects the result, which makes it hard to understand why the result came out as it did. The influence of a binary signal on the result can be zero, partial or complete, which shows clearly whether a given input took part in the calculation. Binary signal computation makes it possible to trace how the input data relate to the result and to determine how strongly each input shaped it.
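
A standard way to quantify the zero, partial or complete influence of a binary input is the influence measure from the analysis of Boolean functions: the fraction of all inputs for which flipping bit i changes the output. The sketch below computes it by exhaustive enumeration, so it only suits small n; the function `influence` and the 4-input `example`, whose last bit is deliberately unused, are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, Tuple

BoolFn = Callable[[Tuple[int, ...]], int]


def influence(f: BoolFn, n: int) -> Dict[int, float]:
    """For each input bit i, the fraction of all 2**n inputs for which
    flipping bit i changes f's output (0.0 = no effect, 1.0 = always)."""
    result: Dict[int, float] = {}
    for i in range(n):
        flips = 0
        for x in product((0, 1), repeat=n):
            y = list(x)
            y[i] ^= 1  # flip only bit i
            if f(x) != f(tuple(y)):
                flips += 1
        result[i] = flips / 2 ** n
    return result


def example(x: Tuple[int, ...]) -> int:
    # hypothetical 4-input function: x0 XOR (x1 AND x2); x3 is never read
    return x[0] ^ (x[1] & x[2])


print(influence(example, 4))  # {0: 1.0, 1: 0.5, 2: 0.5, 3: 0.0}
```

Bit 0 has complete influence (1.0), bits 1 and 2 partial influence (0.5), and bit 3 none (0.0), so the unused input is identified exactly, with no small residual weight to explain away.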

All of the above points to the need to rethink approaches to machine learning algorithms. Classical methods give neither full control over the network nor an unambiguous understanding of the processes taking place inside it. Achieving “controlled flight” requires reliable signal conversion within the network and dynamic formation of its structure on the fly.