That is why DNS was designed from the start as a heavily cached protocol. Zone administrators set a time to live (TTL) for individual records, and resolvers use this value when storing records in memory to avoid unnecessary traffic.

Is caching efficient? A couple of years ago, a small study of mine showed that it was far from perfect. Let's take a look at the current state of affairs.

To collect the data, I patched Encrypted DNS Server to store the TTL of each response. For every incoming query, this value is defined as the minimum TTL of the records in the response. This gives a good overview of the TTL distribution in real traffic, and also takes into account the popularity of individual queries. The patched version of the server ran for several hours.
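The measurement rule described above can be sketched as follows. This is only an illustration, not the actual patch applied to the server; the `Record` type, the `response_ttl` function, and the sample records are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    name: str
    rtype: str
    ttl: int  # seconds


def response_ttl(records):
    """Return the TTL of a response: the minimum TTL among its records."""
    if not records:
        raise ValueError("response contains no records")
    return min(record.ttl for record in records)


# Made-up response: a CNAME with a long TTL pointing at a short-lived A record.
answer = [
    Record("www.example.com.", "CNAME", 300),
    Record("edge.example.net.", "A", 20),
]
print(response_ttl(answer))  # 20
```

The shortest-lived record decides how long the whole response can be reused, which is why the minimum is the value worth logging.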

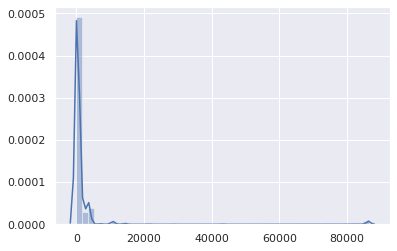

The resulting data set consists of 1,583,579 records (name, qtype, TTL, timestamp). Here is the general distribution of TTL (X axis is TTL in seconds):

Apart from a minor bump at 86,400 seconds (mainly SOA records), it is pretty obvious that TTLs are in the low range. Let's take a closer look:

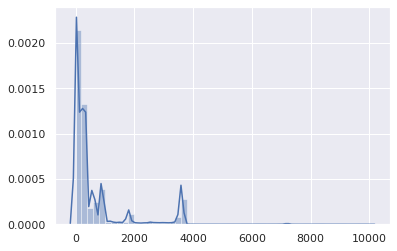

Well, TTLs over 1 hour are not statistically significant. So let's focus on the range 0–3600 seconds:

Most TTLs fall between 0 and 15 minutes:

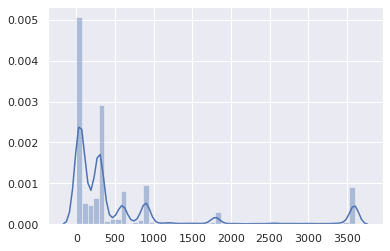

The vast majority fall between 0 and 5 minutes:

This is not good.

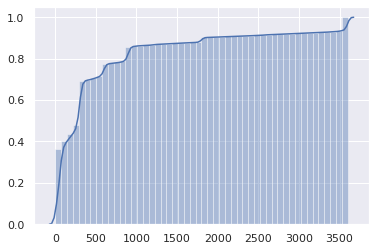

Cumulative distribution makes the problem even more obvious:

In half of the DNS answers, the TTL is 1 minute or less, and in three quarters it is 5 minutes or less.
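For readers who want to compute the same kind of cumulative figure on their own logs, here is a minimal sketch. The function name and the sample TTLs are made up for illustration; they are not taken from the data set described above.

```python
from bisect import bisect_right


def fraction_at_or_below(ttls, threshold):
    """Share of observed TTLs that are <= threshold (both in seconds)."""
    if not ttls:
        raise ValueError("no TTLs observed")
    ordered = sorted(ttls)
    return bisect_right(ordered, threshold) / len(ordered)


# Hypothetical sample, one TTL per logged response.
sample_ttls = [1, 20, 30, 60, 60, 120, 300, 300, 3600, 86400]

for threshold in (60, 300, 3600):
    print(threshold, fraction_at_or_below(sample_ttls, threshold))
```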

But wait, it's actually worse. These are the TTLs as set by authoritative servers. However, client resolvers (for example, routers and local caches) receive TTLs from upstream resolvers, where the value decreases every second.

Thus, the client can actually use each record, on average, for half the original TTL, after which it will send a new request.
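The "half the original TTL" figure can be checked with a small calculation. The sketch below assumes that the upstream resolver always holds the record and that the client's query arrives at a uniformly random second of the cached entry's lifetime; both are simplifying assumptions, and `mean_remaining_ttl` is a hypothetical helper.

```python
def mean_remaining_ttl(original_ttl):
    """Average TTL handed to a client that queries an upstream cache at a
    uniformly random age (0 .. original_ttl - 1 seconds) of the entry."""
    if original_ttl <= 0:
        raise ValueError("original_ttl must be positive")
    remaining = [original_ttl - age for age in range(original_ttl)]
    return sum(remaining) / len(remaining)


print(mean_remaining_ttl(60))   # 30.5
print(mean_remaining_ttl(300))  # 150.5
```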

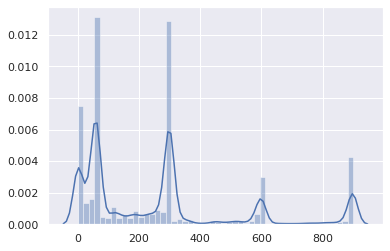

Maybe these very low TTLs only affect unusual queries, not popular websites and APIs? Let's take a look:

The X axis is TTL, the Y axis is query popularity.

Unfortunately, the most popular queries are also the ones that are cached worst.

Zoom in:

Verdict: everything is really bad. It was bad before, but it got worse. DNS caching has become virtually useless. As fewer people use their ISP's DNS resolver (for good reason), the increase in latency is becoming more noticeable.

DNS caching has become useful only for content that no one visits.

Also note that software may interpret low TTLs differently.

Why is that?

Why is such a small TTL set for DNS records?

- Outdated load balancers are left with default settings.

- There are myths that DNS load balancing depends on TTLs. This is not the case: ever since the days of Netscape Navigator, clients have chosen a random IP address from the RR set and transparently tried another one if they could not connect.

- Administrators want their changes to take effect immediately, because that is easier to plan around.

- The administrator of the DNS server or load balancer sees his task as effectively deploying the configuration that users request, rather than speeding up sites and services.

- Low TTLs give peace of mind.

- People initially set low TTLs for testing and then forget to change them.
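The client behaviour mentioned in the load-balancing item above (pick a random address from the RR set, fall back to another on failure) can be sketched like this. It is an illustration of the idea rather than the logic of any particular browser; `connect_any` and `fake_connect` are hypothetical, and the addresses come from the documentation range 192.0.2.0/24.

```python
import random


def connect_any(addresses, connect, rng=random):
    """Try the addresses of an RR set in random order and return
    (address, connection) for the first one that accepts a connection."""
    candidates = list(addresses)
    rng.shuffle(candidates)
    last_error = None
    for address in candidates:
        try:
            return address, connect(address)
        except OSError as exc:  # unreachable host: move on to the next address
            last_error = exc
    raise ConnectionError("no address in the RR set was reachable") from last_error


def fake_connect(address):
    """Stand-in for a real socket connection; one address is 'down'."""
    if address == "192.0.2.1":
        raise OSError("unreachable")
    return "connected"


print(connect_any(["192.0.2.1", "192.0.2.2"], fake_connect))
```

Note that nothing here depends on the TTL: the fallback happens on the client, using addresses it already has.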

I did not include "failover" in the list, since it is less and less relevant. If you need to redirect users to another network only to display an error page when absolutely everything else has broken, a delay of more than 1 minute is probably acceptable.

In addition, a one-minute TTL means that if the authoritative DNS servers are unavailable for more than 1 minute, no one will be able to reach the dependent services any more. And redundancy will not help if the cause is a configuration error or a hack. With reasonable TTLs, on the other hand, many clients will keep using the previous configuration and never notice anything.

CDN services and load balancers are largely to blame for low TTLs, especially when they combine CNAME records that have small TTLs with address records that have equally small (but independent) TTLs:

    $ drill raw.githubusercontent.com
    raw.githubusercontent.com. 9 IN CNAME github.map.fastly.net.
    github.map.fastly.net. 20 IN A 151.101.128.133
    github.map.fastly.net. 20 IN A 151.101.192.133
    github.map.fastly.net. 20 IN A 151.101.0.133
    github.map.fastly.net. 20 IN A 151.101.64.133

Whenever the CNAME or any of the A records expires, a new query has to be sent. Both start with a 30-second TTL, but they are not in phase. The actual average TTL will be 15 seconds.

But wait, it gets worse. Some resolvers behave very badly in this situation with two chained low TTLs:

    $ drill raw.githubusercontent.com @4.2.2.2
    raw.githubusercontent.com. 1 IN CNAME github.map.fastly.net.
    github.map.fastly.net. 1 IN A 151.101.16.133

The Level3 resolver probably runs BIND. If you keep sending this query, a TTL of 1 will always be returned. Essentially, `raw.githubusercontent.com` is never cached.

Here is another example of such a situation with a very popular domain:

    $ drill detectportal.firefox.com @1.1.1.1
    detectportal.firefox.com. 25 IN CNAME detectportal.prod.mozaws.net.
    detectportal.prod.mozaws.net. 26 IN CNAME detectportal.firefox.com-v2.edgesuite.net.
    detectportal.firefox.com-v2.edgesuite.net. 10668 IN CNAME a1089.dscd.akamai.net.
    a1089.dscd.akamai.net. 10 IN A 104.123.50.106
    a1089.dscd.akamai.net. 10 IN A 104.123.50.88

No fewer than three CNAME records. Ouch. One of them has a decent TTL, but it is completely useless. The other CNAMEs have an initial TTL of 60 seconds, the `akamai.net` names have a maximum TTL of 20 seconds, and none of them are in phase.

What about the domains that Apple devices constantly poll?

    $ drill 1-courier.push.apple.com @4.2.2.2
    1-courier.push.apple.com. 1253 IN CNAME 1.courier-push-apple.com.akadns.net.
    1.courier-push-apple.com.akadns.net. 1 IN CNAME gb-courier-4.push-apple.com.akadns.net.
    gb-courier-4.push-apple.com.akadns.net. 1 IN A 17.57.146.84
    gb-courier-4.push-apple.com.akadns.net. 1 IN A 17.57.146.85

This is the same problem as in the Firefox case, and the TTL will be stuck at 1 second most of the time when using the Level3 resolver.

Dropbox?

    $ drill client.dropbox.com @8.8.8.8
    client.dropbox.com. 7 IN CNAME client.dropbox-dns.com.
    client.dropbox-dns.com. 59 IN A 162.125.67.3

    $ drill client.dropbox.com @4.2.2.2
    client.dropbox.com. 1 IN CNAME client.dropbox-dns.com.
    client.dropbox-dns.com. 1 IN A 162.125.64.3

`safebrowsing.googleapis.com` has a TTL of 60 seconds, just like Facebook domains. And, again, from the client's point of view, these values are halved.

How about setting a minimum TTL?

Using the name, query type, TTL, and timestamp originally stored for each record, I wrote a script that simulates the 1.5 million queries passing through a caching resolver, in order to estimate the number of extra queries sent because a cache entry had expired.

47.4% of requests were made after the expiration of the existing record. This is unreasonably high.
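A simulation of this kind could look roughly like the sketch below. It is not the author's script: the function name, the input layout, and the sample queries are assumptions, and the logged TTL is treated as the full lifetime of the entry from the moment it is fetched.

```python
def count_expired_refetches(queries):
    """Replay queries through a simulated cache.

    `queries` is an iterable of (name, qtype, ttl, timestamp) tuples sorted
    by timestamp. Returns (expired_refetches, total_queries), where an
    expired refetch is a query for a name that was cached before but whose
    entry had already expired.
    """
    expires_at = {}
    expired = 0
    total = 0
    for name, qtype, ttl, timestamp in queries:
        total += 1
        key = (name, qtype)
        expiry = expires_at.get(key)
        if expiry is None:
            # First time this name is seen: an unavoidable cache miss.
            expires_at[key] = timestamp + ttl
        elif timestamp >= expiry:
            # Entry existed but has expired: an "extra" upstream query.
            expired += 1
            expires_at[key] = timestamp + ttl
        # Otherwise the entry is still valid: a cache hit.
    return expired, total


# Made-up log entries for illustration.
sample = [
    ("example.com.", "A", 60, 0),
    ("example.com.", "A", 60, 30),
    ("example.com.", "A", 60, 90),
    ("example.org.", "AAAA", 300, 100),
    ("example.com.", "A", 60, 120),
    ("example.org.", "AAAA", 300, 500),
]
expired, total = count_expired_refetches(sample)
print(f"{expired} of {total} queries hit an expired entry")
```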

What will be the effect on caching if the minimum TTL is set?

The X axis is the minimum TTL value. Records with source TTLs above this value are not affected.

The Y axis is the percentage of queries from a client that already had the record in its cache, but whose entry has expired, so it has to send a new query.
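The minimum-TTL rule itself is a simple clamp. The following sketch shows it in isolation, with hypothetical helper names and made-up values; records whose TTL is already above the floor are left untouched.

```python
def effective_ttl(ttl, min_ttl):
    """Raise a record's TTL to the floor; larger TTLs are unchanged."""
    return max(ttl, min_ttl)


def is_still_cached(fetched_at, ttl, now, min_ttl=0):
    """True if a record fetched at `fetched_at` is still usable at `now`."""
    return now < fetched_at + effective_ttl(ttl, min_ttl)


print(effective_ttl(20, 300))    # 300
print(effective_ttl(3600, 300))  # 3600

# A record with a 20-second TTL, queried again two minutes later:
print(is_still_cached(0, 20, 120))               # False
print(is_still_cached(0, 20, 120, min_ttl=300))  # True
```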

The percentage of "extra" requests drops from 47% to 36% simply by setting the minimum TTL to 5 minutes. With a minimum TTL of 15 minutes, it drops to 29%. A minimum TTL of 1 hour brings it down to 17%. That is a big difference!

How about not changing anything on the server side, but instead setting the minimum TTL in client DNS caches (routers, local resolvers)?

The share of extra requests drops from 47% to 34% with a minimum TTL of 5 minutes, to 25% with a minimum of 15 minutes, and to 13% with a minimum of 1 hour. Perhaps the optimal value is 40 minutes.

The impact of this minimal change is huge.

What are the implications?

Of course, a service can be moved to a new cloud provider, a new server, or a new network, which requires clients to use up-to-date DNS records. A sufficiently small TTL helps to make such a transition smooth and seamless. But when moving to new infrastructure, no one expects clients to switch to the new DNS records within 1 minute, 5 minutes, or 15 minutes. Setting a minimum TTL of 40 minutes instead of 5 minutes will not prevent users from accessing the service.

However, it will significantly reduce latency and improve privacy and reliability by avoiding unnecessary requests.

Of course, the RFCs say that TTLs must be strictly honored. But the reality is that the DNS system has become too inefficient.

If you operate authoritative DNS servers, please check your TTLs. Do you really need such ridiculously low values?

Of course, there are good reasons for setting small TTLs on some DNS records. But not for the 75% of DNS traffic whose answers remain virtually unchanged.

And if for some reason you really do need low DNS TTLs, then also make sure that caching is disabled on your website. For the very same reasons.

If you have a local DNS cache, such as dnscrypt-proxy, that allows you to set a minimum TTL, use this feature. This is fine. Nothing bad will happen. Set the minimum TTL to somewhere between approximately 40 minutes (2400 seconds) and 1 hour. That is quite a reasonable range.
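For dnscrypt-proxy, the recommendation above might translate into a configuration fragment like the one below. Treat it as a sketch and check the example configuration file shipped with your version for the exact option names and defaults.

```toml
# dnscrypt-proxy.toml (fragment)

# Enable the built-in DNS cache.
cache = true

# Minimum TTL for cached entries, in seconds (40 minutes).
cache_min_ttl = 2400
```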