Where we started

To implement autotests and embed them in a pipeline, we needed an automation framework that we could flexibly adapt to our needs. Ideally, we wanted a single standard autotest engine designed for embedding autotests in a pipeline. We chose the following technologies:

- Java

- Maven

- Selenium

- Cucumber + JUnit 4

- Allure

- GitLab

Why this stack? Java is one of the most popular languages for autotests, and everyone on the team knows it. Selenium is the obvious choice. Among other things, Cucumber was supposed to increase trust in autotest results among the teams doing manual testing, since they can read the scenarios in plain language.

Single-threaded tests

To avoid reinventing the wheel, we based the framework on experience from various GitHub repositories and adapted it for ourselves. We created one repository for the main library with the core of the autotest framework. A second repository held a Gold example of autotests implemented on top of that core. Each team was to take the Gold image, adapt it to its project and develop its tests in it. We deployed everything to the bank's GitLab CI, where we configured:

- daily runs of all written autotests for each project;

- runs in the build pipeline.

At first there were few tests, and they ran in a single thread. A single-threaded run on the GitLab Windows runner suited us well: the tests put very little load on the test stand and barely used the runner's resources.

Over time, the number of autotests grew. When a full run began to take about three hours, we started thinking about running them in parallel. Other problems appeared as well:

- we could not verify that the tests were stable;

- tests that passed several times in a row on a local machine sometimes failed in CI.

Autotest setup example:

```xml
<plugins>
    <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <version>2.20</version>
        <configuration>
            <skipTests>${skipTests}</skipTests>
            <testFailureIgnore>false</testFailureIgnore>
            <argLine>
                -javaagent:"${settings.localRepository}/org/aspectj/aspectjweaver/${aspectj.version}/aspectjweaver-${aspectj.version}.jar"
                -Dcucumber.options="--tags ${TAGS} --plugin io.qameta.allure.cucumber2jvm.AllureCucumber2Jvm --plugin pretty"
            </argLine>
        </configuration>
        <dependencies>
            <dependency>
                <groupId>org.aspectj</groupId>
                <artifactId>aspectjweaver</artifactId>
                <version>${aspectj.version}</version>
            </dependency>
        </dependencies>
    </plugin>
    <plugin>
        <groupId>io.qameta.allure</groupId>
        <artifactId>allure-maven</artifactId>
        <version>2.9</version>
    </plugin>
</plugins>
```

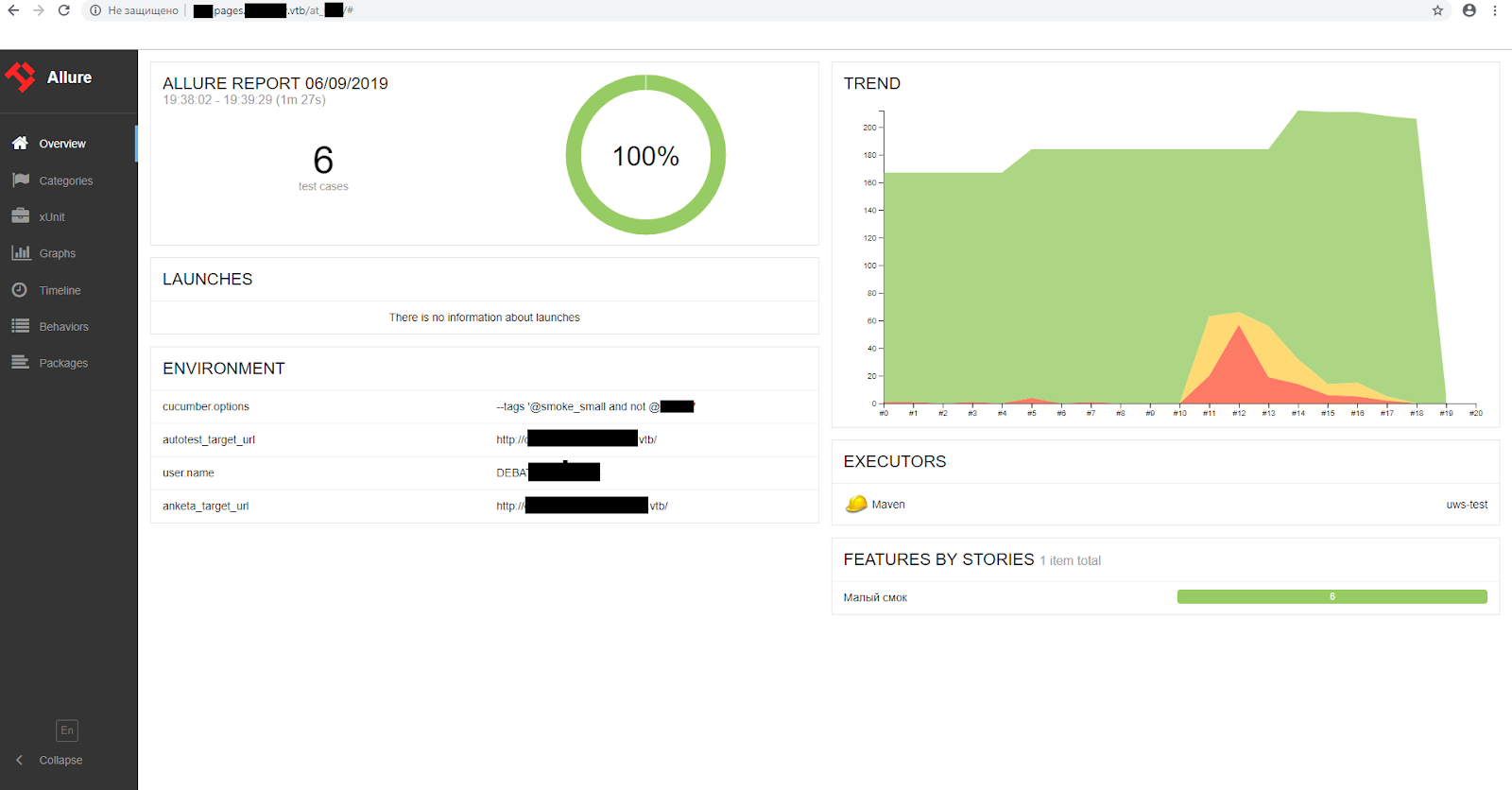

Allure Report Example

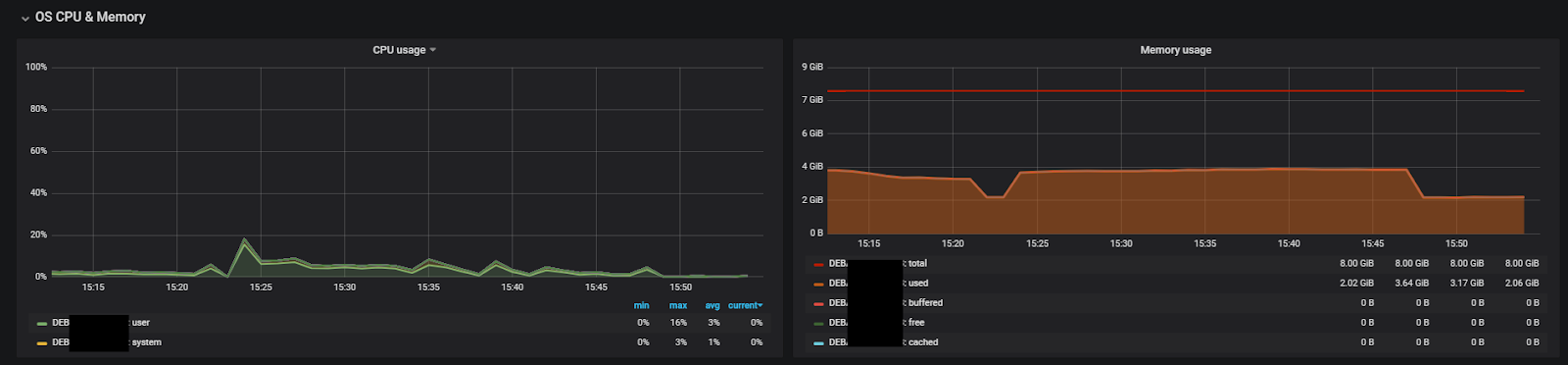

Runner load during tests (8 cores, 8 GB of RAM, 1 thread)

Pros of single-threaded tests:

- easy to configure and run;

- CI runs are practically identical to local runs;

- tests do not affect each other;

- minimal runner resource requirements.

Cons of single-threaded tests:

- very long runs;

- stabilizing tests takes a long time;

- inefficient use of runner resources: extremely low utilization.

JVM Fork Tests

We had not made the core framework thread-safe, so the most obvious way to run tests in parallel was the cucumber-jvm-parallel-plugin for Maven. The plugin is easy to configure. However, for autotests to work correctly in parallel, each one has to run in its own browser. With no alternative, we had to use Selenoid.

We set up the Selenoid server on a machine with 32 cores and 24 GB of RAM. The limit was set to 48 browsers: 1.5 browsers per core and about 400 MB of RAM each. As a result, the run time dropped from three hours to 40 minutes. Faster runs also solved the stabilization problem. We could now quickly run new autotests 20 to 30 times until we were sure they passed reliably.
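The sizing above is simple arithmetic, and the sketch below spells it out:

- 32 cores × 1.5 gives 48 browsers.
- 48 × 400 MB is about 19 GB, which leaves some headroom on a 24 GB machine.

The helper name browserLimit is hypothetical. Capping the result by both CPU and RAM (taking the smaller of the two limits) is our assumption, not a rule stated above.

```java
class SelenoidSizing {
    // Hypothetical helper. Heuristic from the text: 1.5 browsers per core, ~400 MB RAM per browser.
    // Taking the smaller of the CPU-based and RAM-based limits is an assumption of this sketch.
    static int browserLimit(int cores, double browsersPerCore, long ramMb, long mbPerBrowser) {
        int byCpu = (int) Math.floor(cores * browsersPerCore);
        int byRam = (int) (ramMb / mbPerBrowser);
        return Math.min(byCpu, byRam);
    }

    public static void main(String[] args) {
        // 24 GB treated as 24 * 1024 MB
        int limit = browserLimit(32, 1.5, 24 * 1024, 400);
        System.out.println("browser limit: " + limit);                   // browser limit: 48
        System.out.println("RAM for browsers: " + limit * 400L + " MB"); // RAM for browsers: 19200 MB
    }
}
```

On a machine with less memory, the RAM term becomes the binding one. For example, 8 GB at 400 MB per browser caps the limit at 20 browsers.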

The first drawback of this solution was high runner resource usage even with a small number of parallel threads. On 4 cores and 8 GB of RAM, the tests ran stably in no more than 6 threads. The second drawback was that the plugin generates runner classes for each scenario, no matter how many are actually run.

Important! Do not pass the tags variable in argLine, for example, like this:

```
<argLine>-Dcucumber.options="--tags ${TAGS} --plugin io.qameta.allure.cucumber2jvm.AllureCucumber2Jvm --plugin pretty"</argLine>
…
mvn -DTAGS="@smoke"
```

If you pass the tag this way, the plugin generates runners for all tests. It tries to run every test, skips each one right after launch, and creates a lot of JVM forks in the process.

The correct way is to pass the tag variable in the tags setting of the plugin, as in the example below. Other approaches we tried had problems hooking up the Allure plugin.

Run time for 6 short tests with the incorrect settings:

[INFO] Total time: 03:17 min

Run time when the tag is passed directly to mvn ... -Dcucumber.options:

[INFO] Total time: 44.467 s

Autotest setup example:

```xml
<profiles>
    <profile>
        <id>parallel</id>
        <build>
            <plugins>
                <plugin>
                    <groupId>com.github.temyers</groupId>
                    <artifactId>cucumber-jvm-parallel-plugin</artifactId>
                    <version>5.0.0</version>
                    <executions>
                        <execution>
                            <id>generateRunners</id>
                            <phase>generate-test-sources</phase>
                            <goals>
                                <goal>generateRunners</goal>
                            </goals>
                            <configuration>
                                <tags>
                                    <tag>${TAGS}</tag>
                                </tags>
                                <glue>
                                    <package>stepdefs</package>
                                </glue>
                            </configuration>
                        </execution>
                    </executions>
                </plugin>
                <plugin>
                    <groupId>org.apache.maven.plugins</groupId>
                    <artifactId>maven-surefire-plugin</artifactId>
                    <version>2.21.0</version>
                    <configuration>
                        <forkCount>12</forkCount>
                        <reuseForks>false</reuseForks>
                        <includes>
                            <include>**/*IT.class</include>
                        </includes>
                        <testFailureIgnore>false</testFailureIgnore>
                        <!--suppress UnresolvedMavenProperty -->
                        <argLine>
                            -javaagent:"${settings.localRepository}/org/aspectj/aspectjweaver/${aspectj.version}/aspectjweaver-${aspectj.version}.jar"
                            -Dcucumber.options="--plugin io.qameta.allure.cucumber2jvm.AllureCucumber2Jvm TagPFAllureReporter --plugin pretty"
                        </argLine>
                    </configuration>
                    <dependencies>
                        <dependency>
                            <groupId>org.aspectj</groupId>
                            <artifactId>aspectjweaver</artifactId>
                            <version>${aspectj.version}</version>
                        </dependency>
                    </dependencies>
                </plugin>
            </plugins>
        </build>
    </profile>
</profiles>
```

Example of an Allure report (the most unstable test, 4 reruns)

Runner load during tests (8 cores, 8 GB of RAM, 12 threads)

Pros:

- simple setup: you just need to add the plugin;

- the ability to run a large number of tests simultaneously;

- faster test stabilization, since many runs can go at once.

Cons:

- multiple OSes or containers are required;

- high resource consumption per fork;

- the plugin is deprecated and no longer supported.

How to beat instability

Test stands are not perfect, and neither are the autotests themselves. Not surprisingly, we ended up with a number of flaky tests. The Maven Surefire plugin came to the rescue, since it supports rerunning failed tests out of the box. You need to update the plugin to at least version 2.21. Then either add one line with the number of reruns to the pom file, or pass that number to Maven as an argument.

Autotest setup example:

```xml
<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-surefire-plugin</artifactId>
    <version>2.21.0</version>
    <configuration>
        …
        <rerunFailingTestsCount>2</rerunFailingTestsCount>
        …
    </configuration>
</plugin>
```

Or pass it at startup:

```shell
mvn ... -Dsurefire.rerunFailingTestsCount=2 ...
```

Alternatively, set the Maven options in a PowerShell (PS1) script:

```powershell
Set-Item Env:MAVEN_OPTS "-Dfile.encoding=UTF-8 -Dsurefire.rerunFailingTestsCount=2"
```
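To make the effect of rerunFailingTestsCount=2 concrete, here is a small model in plain Java:

- A failed test is retried up to the configured count.
- A test that fails first and then passes counts as flaky.
- A test that fails every attempt remains a failure.

This mirrors how we understand Surefire to classify reruns. RerunModel and its methods are hypothetical, not Surefire code.

```java
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.BooleanSupplier;

// Hypothetical model of rerun classification; not Surefire's implementation.
class RerunModel {
    enum Outcome { PASSED, FLAKY, FAILED }

    // Runs the test once, then up to rerunCount more times while it keeps failing.
    static Outcome runWithReruns(BooleanSupplier test, int rerunCount) {
        if (test.getAsBoolean()) {
            return Outcome.PASSED;        // green on the first attempt
        }
        for (int i = 0; i < rerunCount; i++) {
            if (test.getAsBoolean()) {
                return Outcome.FLAKY;     // red first, green on a rerun
            }
        }
        return Outcome.FAILED;            // red on every attempt
    }

    // Hypothetical test that fails its first `failures` invocations, then passes.
    static BooleanSupplier failsFirst(int failures) {
        AtomicInteger calls = new AtomicInteger();
        return () -> calls.incrementAndGet() > failures;
    }

    public static void main(String[] args) {
        System.out.println(runWithReruns(failsFirst(0), 2)); // PASSED
        System.out.println(runWithReruns(failsFirst(2), 2)); // FLAKY
        System.out.println(runWithReruns(failsFirst(3), 2)); // FAILED
    }
}
```

The last case shows the trade-off listed below. With two reruns, a test must fail three times in a row before it is reported as a failure. A defect that shows up only intermittently is therefore easy to miss.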

Pros:

- no need to spend time analyzing an unstable test every time it fails;

- it smooths over stability problems of the test stand.

Cons:

- intermittent defects can go unnoticed;

- run time increases.

Parallel Tests with the Cucumber 4 Library

The number of tests grew every day, and we again thought about speeding up the runs. We also wanted to include as many tests as possible in the application's build pipeline. The deciding factor was that generating runners for parallel runs with the Maven plugin took too long.

Cucumber 4 had already been released by then, so we decided to rewrite the core for this version. The release notes promised parallel execution at the thread level. In theory, this should:

- significantly speed up autotest runs by increasing the number of threads;

- eliminate the time spent generating runners for each autotest.

Adapting the framework for multi-threaded autotests was not that difficult. Cucumber 4 runs each individual scenario in a dedicated thread from start to finish. So we simply converted some shared static state to ThreadLocal variables.
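The conversion can be sketched with a driver holder. For illustration, assume the shared static state was a single static driver field used by all scenarios in the JVM. After the conversion, each thread lazily gets its own instance through a ThreadLocal.

Some names here are hypothetical:

- FakeDriver stands in for Selenium's WebDriver, so the example needs no dependencies.
- DriverHolder and ThreadLocalDemo are illustrative names, not part of our framework or of Cucumber.

The demo runs eight "scenarios" on a pool of four threads. Without cleanup, every thread reuses its own driver. With a quit after each scenario, every scenario gets a fresh one.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical stand-in for Selenium's WebDriver, so the sketch has no dependencies.
class FakeDriver {
    void quit() { /* a real driver would close its browser session here */ }
}

// Before: `static FakeDriver driver;` shared by every scenario in the JVM.
// After: each thread lazily gets its own driver.
class DriverHolder {
    private static final ThreadLocal<FakeDriver> DRIVER = new ThreadLocal<>();

    static FakeDriver get() {
        FakeDriver driver = DRIVER.get();
        if (driver == null) {            // no driver for this thread yet
            driver = new FakeDriver();
            DRIVER.set(driver);
        }
        return driver;
    }

    // Intended for an after-scenario hook: a pooled thread must not inherit a stale driver.
    static void quit() {
        FakeDriver driver = DRIVER.get();
        if (driver != null) {
            driver.quit();
            DRIVER.remove();
        }
    }
}

class ThreadLocalDemo {
    // Runs `scenarios` tasks on `threads` threads and counts the distinct drivers used.
    static int distinctDrivers(int threads, int scenarios, boolean quitAfterEach)
            throws InterruptedException {
        Set<FakeDriver> seen = ConcurrentHashMap.newKeySet();
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < scenarios; i++) {
            pool.execute(() -> {
                // one "scenario": all of its steps run on this thread, so they share one driver
                seen.add(DriverHolder.get());
                if (quitAfterEach) {
                    DriverHolder.quit();
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(30, TimeUnit.SECONDS);
        return seen.size();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(distinctDrivers(4, 8, false)); // 4: one driver per thread
        System.out.println(distinctDrivers(4, 8, true));  // 8: a fresh driver per scenario
    }
}
```

Removing the value at the end of a scenario matters because test runners typically reuse pooled threads. Without the removal, a new scenario can pick up the previous scenario's driver.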

When converting with IntelliJ IDEA's refactoring tools, the main thing is to check every place where the variable was compared (for example, null checks). You also need to move the Allure plugin declaration into the annotations of the JUnit runner class.
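Here is one way a comparison can go wrong after such a conversion, assuming a static field was turned into a ThreadLocal. A check still written against the field now tests the ThreadLocal container, which is never null, instead of the value for the current thread. SessionContext and SESSION_ID are hypothetical names.

```java
// Hypothetical example of a comparison that silently changes meaning after the conversion.
class SessionContext {
    // After refactoring, the field holds a ThreadLocal rather than the value itself.
    static final ThreadLocal<String> SESSION_ID = new ThreadLocal<>();

    // Wrong: compares the ThreadLocal container, which is never null,
    // so it reports a session even on a thread that never set one.
    static boolean hasSessionBuggy() {
        return SESSION_ID != null;
    }

    // Right: compares the value stored for the current thread.
    static boolean hasSession() {
        return SESSION_ID.get() != null;
    }

    public static void main(String[] args) {
        System.out.println(hasSessionBuggy()); // true
        System.out.println(hasSession());      // false
        SESSION_ID.set("abc");
        System.out.println(hasSession());      // true
        SESSION_ID.remove();
    }
}
```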

Autotest setup example:

```xml
<profile>
    <id>parallel</id>
    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-surefire-plugin</artifactId>
                <version>3.0.0-M3</version>
                <configuration>
                    <useFile>false</useFile>
                    <testFailureIgnore>false</testFailureIgnore>
                    <parallel>methods</parallel>
                    <threadCount>6</threadCount>
                    <perCoreThreadCount>true</perCoreThreadCount>
                    <argLine>
                        -javaagent:"${settings.localRepository}/org/aspectj/aspectjweaver/${aspectj.version}/aspectjweaver-${aspectj.version}.jar"
                    </argLine>
                </configuration>
                <dependencies>
                    <dependency>
                        <groupId>org.aspectj</groupId>
                        <artifactId>aspectjweaver</artifactId>
                        <version>${aspectj.version}</version>
                    </dependency>
                </dependencies>
            </plugin>
        </plugins>
    </build>
</profile>
```
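With perCoreThreadCount set to true, Surefire treats threadCount as a per-core value. The effective number of threads is therefore threadCount multiplied by the number of processors the JVM reports. The sketch below models that calculation as we understand it.

SurefireThreads and effectiveThreads are hypothetical names. Note that Runtime.availableProcessors() counts logical processors, which may differ from physical cores.

```java
// Hypothetical model of how threadCount and perCoreThreadCount combine; not Surefire code.
class SurefireThreads {
    static int effectiveThreads(int threadCount, boolean perCoreThreadCount, int cores) {
        // per-core mode scales the configured count by the processor count
        return perCoreThreadCount ? threadCount * cores : threadCount;
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("threadCount=6, perCoreThreadCount=true -> "
                + effectiveThreads(6, true, cores) + " threads on " + cores + " processors");
    }
}
```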

An example of an Allure report (the most unstable test, 5 reruns)

Runner load during tests (8 cores, 8 GB of RAM, 24 threads)

Pros:

- low resource consumption;

- native support in Cucumber: no additional tools needed;

- the ability to run more than 6 threads per processor core.

Cons:

- you need to make sure the code supports multi-threaded execution;

- the entry threshold is higher.

Allure reports in GitLab Pages

After we introduced multi-threaded runs, we began to spend much more time analyzing reports. At the time, we had to upload each report to GitLab as an artifact, then download and unpack it. This was inconvenient and slow. Anyone else who wanted to view the report on their own machine had to repeat the same steps. We wanted faster feedback, and there was a solution: GitLab Pages. This feature is built into all recent versions of GitLab. It lets you host static sites on your server and open them via a direct link.

All the Allure report screenshots were taken in GitLab Pages. The script for deploying the report to GitLab Pages is written in Windows PowerShell (run the autotests before it):

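```powershell
# $testresult, $hst, $hsttrend, $outputhst, $outputhsttrnd and $buildlocation must be set beforehand
# Create the history folder in the test results directory
New-Item -ItemType directory -Path $testresult\history | Out-Null
# Download the history files of the previously published report so trends carry over
try {Invoke-WebRequest -Uri $hst -OutFile $outputhst} Catch{echo "fail copy history"}
try {Invoke-WebRequest -Uri $hsttrend -OutFile $outputhsttrnd} Catch{echo "fail copy history trend"}
# Generate the HTML report
mvn allure:report
#mvn assembly:single -PzipAllureReport
# Copy the generated report into the public folder that GitLab Pages serves
xcopy $buildlocation\target\site\allure-maven-plugin\* $buildlocation\public /s /i /Y
```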
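The history step in the script is what keeps Allure's trend graphs across runs. Files from the previous report's history folder go into the new results' history folder before the report is generated.

For anyone who prefers to keep this step in Java, here is a sketch with java.nio.file. It makes two assumptions:

- The previous report has already been downloaded or is otherwise available locally.
- The directory layout matches what the code expects.

Unlike the script, it copies the whole history folder rather than two specific files. AllureHistory and carryOverHistory are hypothetical names.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

// Hypothetical helper mirroring the history step of the PowerShell script.
class AllureHistory {
    // Copies every file from <previousReport>/history into <allureResults>/history
    // so the next report generation can draw trends. Returns the number of files copied.
    static int carryOverHistory(Path previousReport, Path allureResults) throws IOException {
        Path source = previousReport.resolve("history");
        Path target = allureResults.resolve("history");
        Files.createDirectories(target);   // same role as New-Item in the script
        if (!Files.isDirectory(source)) {
            return 0;                      // e.g. first run: no history yet, carry on without it
        }
        int copied = 0;
        try (DirectoryStream<Path> files = Files.newDirectoryStream(source)) {
            for (Path file : files) {
                if (Files.isRegularFile(file)) {
                    Files.copy(file, target.resolve(file.getFileName()),
                            StandardCopyOption.REPLACE_EXISTING);
                    copied++;
                }
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        if (args.length < 2) {
            System.out.println("usage: AllureHistory <previous-report-dir> <allure-results-dir>");
            return;
        }
        Path previous = Paths.get(args[0]);
        Path results = Paths.get(args[1]);
        System.out.println("copied " + carryOverHistory(previous, results) + " history file(s)");
    }
}
```

As in the script, a missing previous report is not an error. The report is simply generated without history.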

What we ended up with

So, if you have been wondering whether your Cucumber autotest framework needs thread-safe code, the answer is now clear. With Cucumber 4 it is easy to implement, and it significantly increases the number of threads you can run simultaneously. With this approach, the bottleneck becomes the performance of the Selenoid machine and the test stand.

Practice has shown that running autotests in threads gives the best performance with minimal resource consumption. As the graphs show, doubling the number of threads does not make the test runs twice as fast. Nevertheless, we were able to add more than 200 autotests to the application build. Even with 5 reruns, they complete in about 24 minutes. This gives us quick feedback, so we can make changes if needed and repeat the procedure.