Goal and objective

As a reminder, in the first article we built a model whose quality satisfied us and concluded that it is not worth building neural networks right away: they bring little benefit when the data is incorrect. To avoid wasting time and effort, it is enough to analyze errors on “simple” models.

In this article, we will talk about bringing a working model into production.

The first classifier tests. From Methodius to Anna

So, after analyzing the errors, we reached acceptable quality and decided to put the model into production. We deployed the model as a web service and added telephony integration so that the service could be called during a phone call. Up to that point we had struggled with the typical difficulties of ML tasks (labeling, class imbalance), for which well-known remedies exist. Now the real fun began.

Without overthinking it, we started with the robot Methodius, which greeted customers in a robotic voice.

This is how Methodius served customers.

The first day of testing showed that people were not happy with the robot.

| Outcome | Methodius |
|---|---|
| Hung up | 19% |
| Stayed silent | 58% |

19% of customers hung up, and 58% stayed silent and did not answer Methodius. Only after seeing these numbers did we realize that, before launching the service, we should have thought about what the robot would ask, in what voice, and whether it would present itself as a robot or as an “operator”. In other words, we had to think about integrating the model into the real world of our users. This turned out to be the hardest part.

Integration. Checklist

We compiled a checklist for integrating the system with the real world. Before launching this kind of service in production, you need to think about:

- Dialogue purpose

- Bot phrases

- Amount of text / speech from the bot

- Detecting the end of the customer's utterance

- Interaction scenario

Below I explain each of the points.

The first important point is to understand what we want to get by introducing a robot. Our immediate answer was: “a clearly formulated request to technical support.” But how to ask so that the user understands what is expected of them is a different story. We brainstormed every phrase, as long as it increased the number of people who answered the bot. The main conclusions we reached about the robot's phrases:

- Bot phrases must not use the passive voice

- Bot phrases should be short

- At the end of each phrase, it should be clear what the user should do. Use guiding questions at every stage of the conversation with the bot. From our experience, there is a real difference between the phrases “I am listening to you” and “What is your question?”

- The final phrase of the conversation with the bot is important, so that the client understands what to expect next. In our case, at the end of the conversation the robot clearly said: “I will transfer your call to a specialist on this issue,” so the client understood the value of talking to the robot.
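The phrase rules above are easy to check automatically before a new script goes live. Below is a minimal sketch of such a linter, not the tool we used: the 12-word limit is an assumption, and the passive-voice check is a crude regular expression (a form of “to be” followed by a word ending in -ed) that misses irregular participles and can misfire on adjectives.

```python
import re

# Crude heuristic: a form of "to be" followed by a word ending in -ed.
# It misses irregular participles ("is taken") and may flag adjectives.
PASSIVE_RE = re.compile(r"\b(?:am|is|are|was|were|be|been|being)\s+\w+ed\b", re.IGNORECASE)
MAX_WORDS = 12  # assumed limit for a "short" phrase


def check_phrase(phrase: str, is_final: bool = False) -> list:
    """Return a list of problems found in a bot phrase (empty list means OK)."""
    problems = []
    words = phrase.split()
    if len(words) > MAX_WORDS:
        problems.append(f"too long ({len(words)} words > {MAX_WORDS})")
    if PASSIVE_RE.search(phrase):
        problems.append("possible passive voice")
    # Every phrase except the final one should end with a guiding question.
    if not is_final and not phrase.strip().endswith("?"):
        problems.append("does not end with a guiding question")
    return problems


print(check_phrase("What is your question?"))  # []
print(check_phrase("I am listening to you"))   # ['does not end with a guiding question']
print(check_phrase("Your call is being transferred", is_final=True))  # ['possible passive voice']
```

The final phrase is exempt from the question rule, since its job is to tell the client what happens next rather than to ask for input.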

Next, we decided to experiment with the robot's voice and switched to a female voice named Maria.

[Audio: example of a call with Maria]

The test result with Maria gave us hope.

| Outcome | Methodius | Maria |
|---|---|---|
| Hung up | 19% | 14% |
| Stayed silent | 58% | 27% |

More people now answered the robot: 27% stayed silent instead of 58%, but we still wanted to reduce that number. We listened to recordings from the test run and found interesting cases where people did not have time to finish, or even to start, talking. The example above shows Maria interrupting the client without waiting for the end of the answer.

Some people stayed silent deliberately: they knew it was a robot and were waiting for a human operator. We dealt with them separately. Others could not answer because too little time was allotted for the reply. We understood that interrupting is impolite and reduces customer loyalty.

We ran experiments to choose the recording duration for the client's reply. We needed the optimal duration, one that makes as many phrases as possible informative, that is, containing meaningful text that can be classified. The table shows the percentage of informative phrases for different recording durations.

| Recording duration | Methodius, % | Maria, % |
|---|---|---|
| 5 seconds | 52.4 | 56.3 |
| 7 seconds | 63.8 | 78.2 |
| 10 seconds | 84.1 | 91.4 |
| 12 seconds | 83.7 | 92.1 |
| 15 seconds | 79.2 | 90.6 |

Experiments have shown that 10 seconds is enough to formulate a request.
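The choice of 10 seconds can be reproduced from the table with a simple rule: take the shortest duration whose share of informative phrases is close to the best one. The sketch below uses the numbers from the table; the 1-percentage-point tolerance is our assumption for illustration, not a threshold stated in the experiment.

```python
# Share of informative phrases (%) per recording duration in seconds, from the table.
INFORMATIVE = {
    "Methodius": {5: 52.4, 7: 63.8, 10: 84.1, 12: 83.7, 15: 79.2},
    "Maria": {5: 56.3, 7: 78.2, 10: 91.4, 12: 92.1, 15: 90.6},
}

TOLERANCE_PP = 1.0  # assumed: accept durations within 1 percentage point of the best


def best_duration(shares: dict) -> int:
    """Duration with the highest share of informative phrases."""
    return max(shares, key=shares.get)


def shortest_good_enough(shares: dict, tolerance: float = TOLERANCE_PP) -> int:
    """Shortest duration whose share is within `tolerance` of the best one."""
    best = max(shares.values())
    return min(d for d, s in shares.items() if best - s <= tolerance)


for voice, shares in INFORMATIVE.items():
    print(voice, best_duration(shares), shortest_good_enough(shares))
# Methodius 10 10
# Maria 12 10
```

For Maria, 12 seconds is marginally better than 10 (92.1% vs 91.4%), but the difference is small, while every extra second of recording lengthens the call for everyone.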

But a time limit is only one way to end the recording of a client's reply; there are others. Detecting silence, or detecting the end of an utterance from the speaker's intonation, are more effective methods. Detecting the fading of speech is already well established in practice, and developers are now focusing on intonation. After several experiments with a fixed recording time, we decided to detect silence using Asterisk, and this alone was enough to get good results.
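In the real system the detection was done by Asterisk (its Record dialplan application, for example, accepts a number of seconds of silence to allow and a maximum duration; we do not state here which exact mechanism was configured). To show the idea itself, here is a minimal energy-based silence detector in Python. It is a sketch, not Asterisk's algorithm: the frame length, the RMS threshold and the 1.5 s end-of-reply pause are assumptions; the 3 s no-answer limit and the 10 s cap come from the text.

```python
import math
from typing import Iterable, List, Sequence, Tuple

FRAME_MS = 20            # assumed frame length (320 samples at 16 kHz)
SPEECH_RMS = 500.0       # assumed energy threshold for 16-bit PCM samples
NO_ANSWER_MS = 3_000     # silence before any speech: the customer did not answer
END_OF_REPLY_MS = 1_500  # assumed pause after speech that ends the reply
MAX_RECORD_MS = 10_000   # hard cap on the recording (10 s, as chosen above)


def rms(frame: Sequence[int]) -> float:
    """Root-mean-square energy of one frame of PCM samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame)) if frame else 0.0


def record_reply(frames: Iterable[Sequence[int]]) -> Tuple[List[Sequence[int]], str]:
    """Collect frames until the customer stops talking, never starts, or the cap is hit."""
    recorded: List[Sequence[int]] = []
    heard_speech = False
    silent_ms = 0
    for frame in frames:
        recorded.append(frame)
        if rms(frame) >= SPEECH_RMS:
            heard_speech = True
            silent_ms = 0  # any speech resets the silence counter
        else:
            silent_ms += FRAME_MS
        if not heard_speech and silent_ms >= NO_ANSWER_MS:
            return recorded, "no_answer"
        if heard_speech and silent_ms >= END_OF_REPLY_MS:
            return recorded, "end_of_reply"
        if len(recorded) * FRAME_MS >= MAX_RECORD_MS:
            return recorded, "time_limit"
    return recorded, "stream_ended"


loud, quiet = [1000] * 320, [0] * 320
_, status = record_reply([loud] * 50 + [quiet] * 100)
print(status)  # end_of_reply
```

The fixed time limit stays as a safety net, while the silence check lets a short answer end early instead of waiting out the full 10 seconds.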

Silence Detection Example

Everything seemed fine: the robot listened as long as necessary and had received a new voice and a new name, “Anna”. Another test with these improvements showed a significant drop in the number of hang-ups. The number of silent customers also decreased, but we wanted better.

| Outcome | Methodius | Maria | Anna, v1 |
|---|---|---|---|
| Hung up | 19% | 14% | 5% |
| Stayed silent | 58% | 27% | 14% |

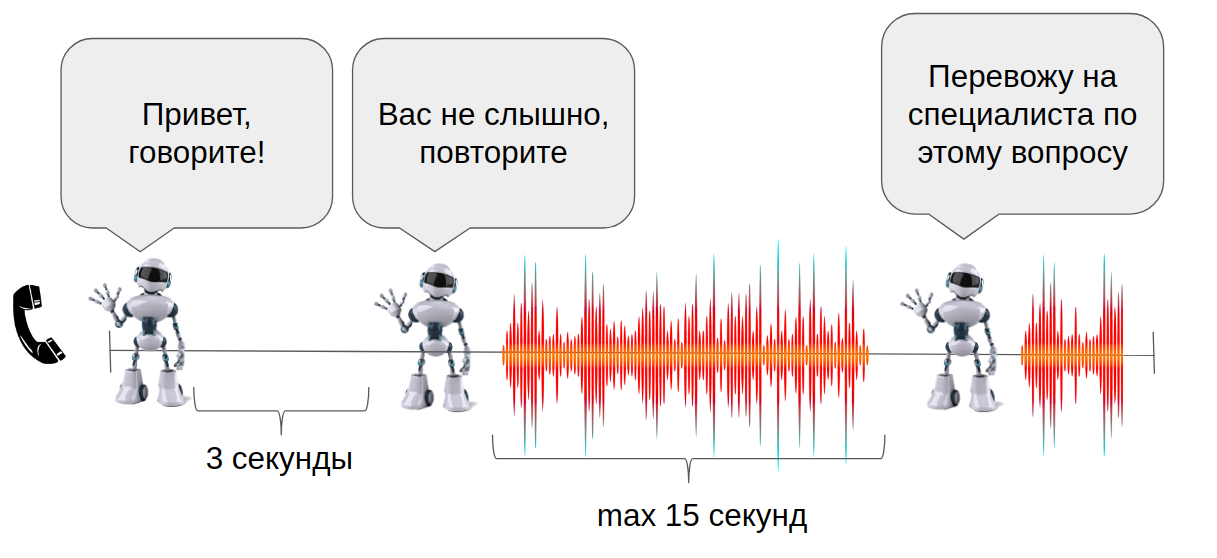

Without thinking twice, we decided to change the script of the interaction between the bot and the client. If the client stays silent and we detect three seconds of silence, Anna asks the question again. Thanks to the silence detection we had already implemented, this was easy to build. The final dialogue scenario is shown in the diagram.

This livens up the conversation and repeats the robot's question in case the user did not hear Anna's first phrase.
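The re-ask logic can be sketched as a small loop around hypothetical `say`, `listen` and `classify` hooks (our names, standing in for the speech synthesis, silence-detecting recording and topic classifier). What happens to a customer who is still silent after the second question is not described above, so sending them to a general operator queue is an assumption.

```python
from typing import Callable, Optional

QUESTION = "What is your question?"
FINAL_PHRASE = "I will transfer your call to a specialist on this issue."


def run_dialogue(
    say: Callable[[str], None],
    listen: Callable[[], Optional[str]],
    classify: Callable[[str], str],
    max_asks: int = 2,
) -> str:
    """Ask the question, repeat it if the customer stays silent, then route the call.

    listen() is a hypothetical hook: it returns the recognized reply, or None
    when silence detection saw no speech for about three seconds.
    """
    for _ in range(max_asks):
        say(QUESTION)
        reply = listen()
        if reply:
            say(FINAL_PHRASE)
            return classify(reply)  # topic used to pick the operator queue
    # Assumption: a customer still silent after the re-ask goes to a general queue.
    return "operator"


said = []
replies = iter([None, "my internet is down"])
topic = run_dialogue(said.append, lambda: next(replies),
                     lambda text: "internet" if "internet" in text else "other")
print(topic)  # internet
```

Here the first `listen()` returns silence, so the question is asked twice before the reply is classified.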

Re-ask example

| Outcome | Methodius | Maria | Anna, v1 | Anna, v2 |
|---|---|---|---|---|
| Hung up | 19% | 14% | 5% | 4% |
| Stayed silent | 58% | 27% | 14% | 6% |
| Replied after re-asking | - | - | - | 48% |
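Metrics like those in the table can be computed from a per-call log. The sketch below is a hypothetical illustration: the outcome labels and the `Call` record are our assumptions, and we assume the “replied after re-asking” share is measured over the calls where a re-ask actually happened.

```python
from collections import Counter
from typing import Dict, List, NamedTuple


class Call(NamedTuple):
    outcome: str    # assumed labels: "hung_up", "silent" or "answered"
    re_asked: bool  # whether the robot had to repeat its question


def call_metrics(calls: List[Call]) -> Dict[str, float]:
    """Percentages in the style of the table, computed from a call log."""
    total = len(calls)
    counts = Counter(call.outcome for call in calls)
    re_asked = [call for call in calls if call.re_asked]
    answered_after = sum(call.outcome == "answered" for call in re_asked)
    return {
        "hung_up": 100 * counts["hung_up"] / total,
        "silent": 100 * counts["silent"] / total,
        # Assumption: the base for this metric is the calls with a re-ask.
        "replied_after_re_ask": 100 * answered_after / len(re_asked) if re_asked else 0.0,
    }
```

For example, a toy log of four calls with one hang-up, one silent re-asked call and one re-asked call that was answered gives 25% hung up, 25% silent and 50% replies after re-asking.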

As a result, this version went into production with 4% hang-ups and only 6% silent customers. Getting there took us about 6 months: the model seemed ready and classified well, but integrating it was hard.

Conclusions

A finished model is only part of the job. It becomes useful in production once you understand your users: how and what they say, and whether they are ready to talk to a robot.

Only then will introducing the model be straightforward, and business metrics will go up.

Introducing Anna. Summary

Call classification reduced call handling time by 15 seconds, which is equivalent to 350 more calls processed per day. The time dropped because operators immediately answered the question passed to them by the robot instead of spending time listening to the client. But this is not the main benefit.

Call classification allowed operators to receive calls on specific topics. This mattered because of the problem I described in the first part of the article: the variety of topics kept new operators from quickly getting on the line, since they first had to learn the answers to all customer questions. After the system was introduced, operator training took 1 week instead of 3 months. Operators, of course, keep learning, but they can already take calls on the topics they studied in the first week.
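Topic-based routing is what lets a newly trained operator take calls after one week. A minimal sketch of the idea: each operator lists the topics they have learned, and a classified call goes to the first free operator who knows that topic. The operator names, topics and first-match policy are hypothetical; a real contact center would also queue and balance the load.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Operator:
    name: str
    topics: Set[str]  # topics this operator has already been trained on
    busy: bool = False


def route_call(topic: str, operators: List[Operator]) -> Optional[Operator]:
    """Give the call to the first free operator trained on its topic."""
    for op in operators:
        if not op.busy and topic in op.topics:
            op.busy = True
            return op
    return None  # nobody suitable is free: the call waits in the topic's queue


team = [
    Operator("newcomer", {"billing"}),                   # hypothetical: one week of training
    Operator("veteran", {"billing", "internet", "tv"}),
]
print(route_call("internet", team).name)  # veteran
print(route_call("billing", team).name)   # newcomer
```

Without the classifier, every incoming call could be about any topic, so only operators who knew all of them could be put on the line.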

See you in the next article, where I will describe another use case for voice classifiers: how the robot Anna reduced the number of transfers between technical support and the sales department.