It is remarkable how thoroughly and carefully the project was migrated: not only was the entire 32-year history preserved, but bug reports became Issues, patches became PRs, and releases and branches came along too. The "32 years ago" label next to the files raises an involuntary smile.

What else is there to do on a dull Friday night, when rain and snow drizzle unpleasantly outside and every path is mired in autumn slush? Tinker until your eyes turn red, of course! So, as an experiment and out of curiosity, I decided to build the ancient Perl on a modern x86_64 machine, using GCC 9.2.0, the latest version at the time, as the compiler. Can such old code stand the test of time?

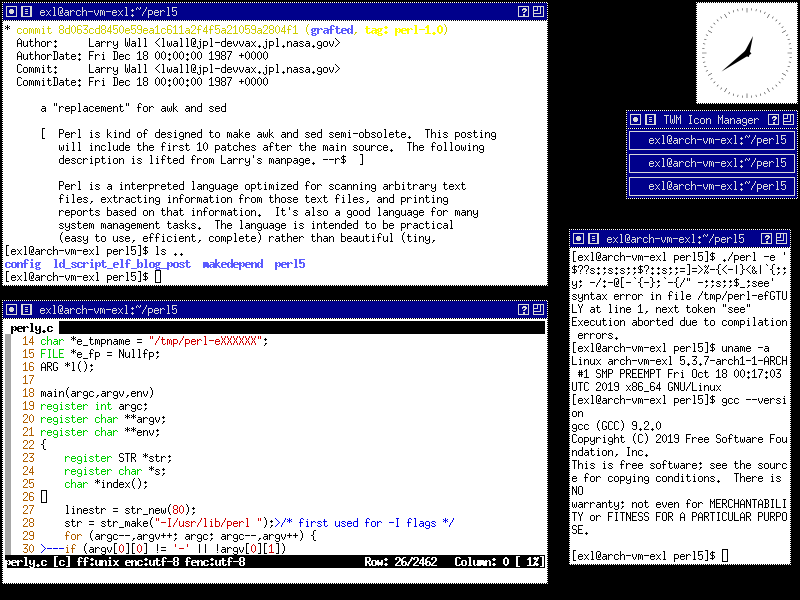

Demonstration of twm, one of the first window managers for the X Window System, on a modern Arch Linux distribution.

To keep the necromancy fully authentic, I set up a virtual machine with bare X and the twm window manager, which also dates from 1987. Who knows, maybe Larry Wall wrote his Perl under twm itself, the bleeding-edge technology of its day. The distribution is Arch Linux, simply because its repositories contain a few useful things that came in handy later on. So let's go!

Contents:

1. Preparation of the environment

2. Configuring the source code

3. Yacc grammar file errors

4. C compilation errors

5. Fixing some segmentation faults

6. To summarize

1. Preparation of the environment

First, in the virtual machine's freshly installed operating system, we install the standard toolkit of utilities and compilers needed to build and edit the source code: gcc, make, vim, git, gdb, and so on. Some of them are already present, and others come with the base-devel meta-package, which should be installed if it is not already. Once the environment is ready, we fetch a copy of the 32-year-old Perl source code!

$ git clone https://github.com/Perl/perl5/ --depth=1 -b perl-1.0

Thanks to Git's shallow clone support (--depth=1), we do not need to download the whole history to get to the very first release of the project:

    * commit 8d063cd8450e59ea1c611a2f4f5a21059a2804f1 (grafted, HEAD, tag: perl-1.0)
      Commit:     Larry Wall <lwall@jpl-devvax.jpl.nasa.gov>
      CommitDate: Fri Dec 18 00:00:00 1987 +0000

          a "replacement" for awk and sed

We download only a small amount of data, so the repository with the source code of the first version of Perl takes up just 150 KB.

In those dark and distant times there was no such elementary thing as autotools (what a blessing!), yet there is a Configure script in the root of the repository. How come? The thing is, Larry Wall is the inventor of such scripts, which made it possible to generate Makefiles for the most diverse UNIX machines of the time. According to the Wikipedia article on configure scripts, Larry Wall shipped a Configure file with some of his software, for example the rn newsreader, three years before he wrote Perl. Perl was no exception, and a script already proven on many machines was used to build it. Later, other developers picked up the idea, for example the programmers at Trolltech, who used a similar script to configure the build of their Qt framework; many people confuse it with the configure from autotools. It was the zoo of such scripts from different developers that spurred the creation of tools to generate them simply and automatically.


2. Configuring the source code

The Configure script is "old school", which is already evident from its shebang line, which contains a space:

    $ cat Configure | head -5
    #! /bin/sh
    #
    # If these # comments don't work, trim them. Don't worry about any other
    # shell scripts, Configure will trim # comments from them for you.
    #

Judging by the comment, there used to be shells whose scripts could not contain comments at all! The space in the shebang looks unusual, but it was once the norm. Most importantly, modern shell interpreters do not care whether the space is there or not.

Enough digression, let's get down to business! We start the script and see an interesting assumption, which turns out to be not entirely true:

    $ ./Configure
    (I see you are using the Korn shell. Some ksh's blow up on Configure,
    especially on exotic machines. If yours does, try the Bourne shell instead.)

    Beginning of configuration questions for perl kit.

    Checking echo to see how to suppress newlines...
    ...using -n.

    Type carriage return to continue. Your cursor should be here-->

Surprisingly, the script is interactive and contains a great deal of background information. The interaction is built on dialogs: the script analyzes the answers, adjusts its parameters, and later generates the Makefiles from them. Personally, I was curious to see whether all the shell commands were in place.

    Locating common programs...
    expr is in /bin/expr.
    sed is in /bin/sed.
    echo is in /bin/echo.
    cat is in /bin/cat.
    rm is in /bin/rm.
    mv is in /bin/mv.
    cp is in /bin/cp.
    tr is in /bin/tr.
    mkdir is in /bin/mkdir.
    sort is in /bin/sort.
    uniq is in /bin/uniq.
    grep is in /bin/grep.
    Don't worry if any of the following aren't found...
    test is in /bin/test.
    egrep is in /bin/egrep.
    I don't see Mcc out there, offhand.

Apparently this was far from guaranteed back then. I wonder what the Mcc utility, which could not be found, was responsible for? The funny thing is that this script, in the best hacker traditions of the time, is full of friendly humor. You will hardly see anything like it now:

    Is your "test" built into sh? [n] (OK to guess)
    OK
    Checking compatibility between /bin/echo and builtin echo (if any)...
    They are compatible. In fact, they may be identical.
    Your C library is in /lib/libc.a. You're normal.
    Extracting names from /lib/libc.a for later perusal...done
    Hmm... Looks kind of like a USG system, but we'll see...
    Congratulations. You aren't running Eunice.
    It's not Xenix...
    Nor is it Venix...
    Checking your sh to see if it knows about # comments...
    Your sh handles # comments correctly.
    Okay, let's see if #! works on this system...
    It does.
    Checking out how to guarantee sh startup...
    Let's see if '#!/bin/sh' works...
    Yup, it does.

I answered most of the questions with the default value or with whatever the script suggested. I was particularly pleased and surprised by the prompt for compiler and linker flags:

    Any additional cc flags? [none]
    Any additional ld flags? [none]

Here you can enter something interesting, for example -m32 to build a 32-bit executable, or a library required for linking. To the script's last question:

    Now you need to generate make dependencies by running "make depend".
    You might prefer to run it in background: "make depend > makedepend.out &"
    It can take a while, so you might not want to run it right now.

    Run make depend now? [n] y

I answered yes. According to its Wikipedia page, the ancient makedepend utility was created at the very beginning of Project Athena to make working with Makefiles easier. That project gave us the X Window System, Kerberos, and Zephyr, and influenced many other things familiar today. All this is wonderful, but where would this utility come from in a modern Linux environment? Hardly anyone has used it for a long time. Yet if you look carefully at the root of the repository, it turns out that Larry Wall wrote his own script replacement for it, which the configuration script carefully unpacked and ran.

Makedepend completed with some strange errors:

    ./makedepend: command substitution: line 82: unexpected EOF while looking for matching `''
    ./makedepend: command substitution: line 83: syntax error: unexpected end of file
    ./makedepend: command substitution: line 82: unexpected EOF while looking for matching `''
    ./makedepend: command substitution: line 83: syntax error: unexpected end of file

Perhaps these were what left the generated Makefiles slightly mangled:

    $ make
    make: *** No rule to make target '<built-in>', needed by 'arg.o'. Stop.

I definitely did not want to wade into the intricate shell spaghetti of the makedepend script, so I took a careful look at the Makefiles instead, where a strange pattern emerged:

    arg.o: arg.c
    arg.o: arg.h
    arg.o: array.h
    arg.o: <built-in>
    arg.o: cmd.h
    arg.o: <command-line>
    arg.o: config.h
    arg.o: EXTERN.h
    ...
    array.o: arg.h
    array.o: array.c
    array.o: array.h
    array.o: <built-in>
    array.o: cmd.h
    array.o: <command-line>
    array.o: config.h
    array.o: EXTERN.h
    ...

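Apparently some utility had inserted its own pseudo-file names into the output by mistake; <built-in> and <command-line> look like the markers a modern GCC preprocessor emits for predefined and command-line macros. Picking up sed as my tool, I simply deleted these lines: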

    $ sed -i '/built-in/d' Makefile
    $ sed -i '/command-line/d' Makefile

Surprisingly, the trick worked, and the Makefiles did their job as they should!


3. Yacc grammar file errors

It would be unbelievable if 32-year-old code simply compiled without any problems. Unfortunately, miracles do not happen. Studying the source tree, I came across the perl.y file, a grammar description for the yacc utility, which modern distributions have long since replaced with bison. The script at /usr/bin/yacc simply calls bison in yacc compatibility mode. That compatibility is not complete, though: processing this file produces a flood of errors that I do not know how to fix and do not really want to, because there is an alternative solution I learned about recently.

Just a year or two ago, Helio Chissini de Castro, a KDE developer, did similar work and adapted KDE 1, KDE 2, Qt 1, and Qt 2 to modern environments and compilers. I became interested in his work and downloaded the sources, but during the build I hit a similar pitfall: the incompatibility between yacc and bison, which were used to build the ancient version of the moc metacompiler. I eventually found a solution: replacing bison with byacc (Berkeley Yacc), which turned out to be compatible with old yacc grammars and was available in many Linux distributions.

Simply replacing yacc with byacc in the build system helped me then, though not for long: somewhat later, new versions of byacc broke compatibility with yacc after all, specifically the debugging facility tied to the yydebug entity. So I had to adjust the utility's grammar slightly.

So the strategy for fixing the build errors in perl.y was dictated by previous experience: install byacc, change yacc to byacc in all the Makefiles, then cut yydebug out everywhere. These steps solved all the problems with this file; the errors disappeared and the compilation continued.


4. C compilation errors

Perl's ancient code was full of horrors, such as the long-obsolete and forgotten K&R notation for function definitions:

    format(orec,fcmd)
    register struct outrec *orec;
    register FCMD *fcmd;
    {
        ...
    }

    STR *
    hfetch(tb,key)
    register HASH *tb;
    char *key;
    {
        ...
    }

    /*VARARGS1*/
    fatal(pat,a1,a2,a3,a4)
    char *pat;
    {
        fprintf(stderr,pat,a1,a2,a3,a4);
        exit(1);
    }

Similar constructs can be found, for example, in the source code of Microsoft Word 1.1a, which is also quite ancient. The first standard for the C programming language, C89, would appear only two years later. Modern compilers can handle such code, but some IDEs fail to parse these definitions and highlight them as syntax errors; Qt Creator, for example, was guilty of this before its code parsing was moved to the libclang library.

GCC 9.2.0, spewing a huge number of warnings, set about compiling the ancient code of the first version of Perl. The walls of warnings were so long that getting to an actual error meant scrolling up through several pages of output. To my surprise, most of the compilation errors were typical ones, mostly related to preprocessor defines that served as build flags.

GCC 9.2.0 and the GDB 8.3.1 debugger at work in the twm window manager and the xterm terminal emulator.

Under STDSTDIO, Larry Wall experimented with some ancient, non-standard C library implementation, and under DEBUGGING lay debugging code involving the notorious yydebug mentioned above. Both flags were enabled by default. By turning them off in perl.h and adding a few forgotten defines, I significantly reduced the number of errors.

Another type of error came from redefining functions that are now standardized in the standard library and the POSIX layer. The project has its own malloc(), setenv(), and other entities, which created conflicts.

A couple of places used static functions without prior declarations. Over time, compilers have become stricter about this and turned the warning into an error. And finally, a couple of forgotten header includes, as always.

To my surprise, the patch for the 32-year-old code turned out to be so tiny that it can be fully quoted here:

    diff --git a/malloc.c b/malloc.c
    index 17c3b27..a1dfe9c 100644
    --- a/malloc.c
    +++ b/malloc.c
    @@ -79,6 +79,9 @@ static u_int nmalloc[NBUCKETS];
     #include <stdio.h>
     #endif

    +static findbucket(union overhead *freep, int srchlen);
    +static morecore(register bucket);
    +
     #ifdef debug
     #define ASSERT(p) if (!(p)) botch("p"); else
     static
    diff --git a/perl.h b/perl.h
    index 3ccff10..e98ded5 100644
    --- a/perl.h
    +++ b/perl.h
    @@ -6,16 +6,16 @@
      *
      */

    -#define DEBUGGING
    -#define STDSTDIO /* eventually should be in config.h */
    +//#define DEBUGGING
    +//#define STDSTDIO /* eventually should be in config.h */

     #define VOIDUSED 1
     #include "config.h"

    -#ifndef BCOPY
    -# define bcopy(s1,s2,l) memcpy(s2,s1,l);
    -# define bzero(s,l) memset(s,0,l);
    -#endif
    +//#ifndef BCOPY
    +//# define bcopy(s1,s2,l) memcpy(s2,s1,l);
    +//# define bzero(s,l) memset(s,0,l);
    +//#endif

     #include <stdio.h>
     #include <ctype.h>
    @@ -183,11 +183,11 @@ double atof();
     long time();
     struct tm *gmtime(), *localtime();

    -#ifdef CHARSPRINTF
    - char *sprintf();
    -#else
    - int sprintf();
    -#endif
    +//#ifdef CHARSPRINTF
    +// char *sprintf();
    +//#else
    +// int sprintf();
    +//#endif

     #ifdef EUNICE
     #define UNLINK(f) while (unlink(f) >= 0)
    diff --git a/perl.y b/perl.y
    index 16f8a9a..1ab769f 100644
    --- a/perl.y
    +++ b/perl.y
    @@ -7,6 +7,7 @@
      */

     %{
    +#include <stdlib.h>
     #include "handy.h"
     #include "EXTERN.h"
     #include "search.h"
    diff --git a/perly.c b/perly.c
    index bc32318..fe945eb 100644
    --- a/perly.c
    +++ b/perly.c
    @@ -246,12 +246,14 @@ yylex()
         static bool firstline = TRUE;

       retry:
    +#ifdef DEBUGGING
     #ifdef YYDEBUG
         if (yydebug)
             if (index(s,'\n'))
                 fprintf(stderr,"Tokener at %s",s);
             else
                 fprintf(stderr,"Tokener at %s\n",s);
    +#endif
     #endif
         switch (*s) {
         default:
    diff --git a/stab.c b/stab.c
    index b9ef533..9757cfe 100644
    --- a/stab.c
    +++ b/stab.c
    @@ -7,6 +7,7 @@
      */

     #include <signal.h>
    +#include <errno.h>
     #include "handy.h"
     #include "EXTERN.h"
     #include "search.h"
    diff --git a/util.h b/util.h
    index 4f92eeb..95cb9bf 100644
    --- a/util.h
    +++ b/util.h
    @@ -28,7 +28,7 @@ void prexit();
     char *get_a_line();
     char *savestr();
     int makedir();
    -void setenv();
    +//void setenv();
     int envix();
     void notincl();
     char *getval();

A great result for 32-year-old code! The undefined reference to `crypt' linker error was fixed by adding -lcrypt to the Makefile, which links in the libcrypt library, after which I finally got the desired Perl interpreter executable:

    $ file perl
    perl: ELF 64-bit LSB pie executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=fd952ceae424613568530b3a2ca88ebd6477e0ae, for GNU/Linux 3.2.0, not stripped


5. Fixing some segmentation faults

After an almost hassle-free compilation, luck turned its back on me. Immediately after launching the freshly built Perl interpreter, I got some strange errors and a segmentation fault at the end:

    $ ./perl -e 'print "Hello World!\n";'
    Corrupt malloc ptr 0x2db36040 at 0x2db36000
    Corrupt malloc ptr 0x2db36880 at 0x2db36800
    Corrupt malloc ptr 0x2db36080 at 0x2db36040
    Corrupt malloc ptr 0x2db37020 at 0x2db37000
    Segmentation fault (core dumped)

Grepping the source for the phrase Corrupt malloc, I found that instead of the system malloc(), a custom allocator from 1982 was being called. Interestingly, one of the string literals in its source mentions Berkeley, and a comment next to it mentions Caltech. Collaboration between these universities was evidently very strong back then. In short, I commented out this hacker allocator and rebuilt the source. The memory corruption errors disappeared, but the segmentation fault remained. So that was not the cause, and it was time to bring out the debugger.

Running the program under gdb, I found that the crash happens in the call to the libc function mktemp(), which generates a temporary file name:

    $ gdb --args ./perl -e 'print "Hello, World!\n";'
    (gdb) r
    Starting program: /home/exl/perl5/perl -e print\ \"Hello\ World\!\\n\"\;

    Program received signal SIGSEGV, Segmentation fault.
    0x00007ffff7cd20c7 in __gen_tempname () from /usr/lib/libc.so.6
    (gdb) bt
    #0 0x00007ffff7cd20c7 in __gen_tempname () from /usr/lib/libc.so.6
    #1 0x00007ffff7d71577 in mktemp () from /usr/lib/libc.so.6
    #2 0x000055555556bb08 in main ()

By the way, the linker had complained about this function earlier. Not the compiler but the linker, which surprised me:

    /usr/bin/ld: perl.o: in function `main':
    perl.c:(.text+0x978c): warning: the use of `mktemp' is dangerous, better use `mkstemp' or `mkdtemp'

The first thought, which probably occurred to you too, was to replace the unsafe mktemp() with mkstemp(), and so I did. The linker warning disappeared, but the segmentation fault stayed in the same place, only now inside mkstemp().

So now the piece of code around this function needed a very careful look. There I found a rather strange thing, shown in this snippet:

    char *e_tmpname = "/tmp/perl-eXXXXXX";

    int main(void)
    {
        mktemp(e_tmpname);
        e_fp = f_open(e_tmpname, "w");
        ...
    }

It turns out that mktemp() tries to modify the template literal in place, and the literal lives in the .rodata section, so the attempt is obviously doomed. Or was this acceptable 32 years ago, common in code, and did it even somehow work?

Of course, replacing char *e_tmpname with char e_tmpname[] fixed this segmentation fault, and I finally got what I had spent the whole evening on:

    $ ./perl -e 'print "Hello, World!\n";'
    Hello, World!
    $ ./perl -e '$a = 5; $b = 6.3; $c = $a+$b; print $c."\n";'
    11.3000000000000007
    $ ./perl -v
    $Header: perly.c,v 1.0 87/12/18 15:53:31 root Exp $
    Patch level: 0

We have checked execution from the command line, but what about running a file? I downloaded the first "Hello World" for the Perl programming language that I found on the Internet:

    ################# test.pl
    #!/usr/bin/perl
    #
    # The traditional first program.

    # Strict and warnings are recommended.
    use strict;
    use warnings;

    # Print a message.
    print "Hello, World!\n";

Then I tried to run it, but alas, another segmentation fault awaited me, this time in a completely different place:

    $ gdb --args ./perl test.pl
    (gdb) r
    Starting program: /home/exl/perl5/perl test.pl

    Program received signal SIGSEGV, Segmentation fault.
    0x00007ffff7d1da75 in __strcpy_sse2_unaligned () from /usr/lib/libc.so.6
    (gdb) bt
    #0 0x00007ffff7d1da75 in __strcpy_sse2_unaligned () from /usr/lib/libc.so.6
    #1 0x00005555555629ea in yyerror ()
    #2 0x0000555555568dd6 in yyparse ()
    #3 0x000055555556bd4f in main ()

The following interesting spot turned up in the yyerror() function; I quote the original snippet:

    // perl.y
    char *tokename[] = {
    "256",
    "word",
    "append",
    ...

    // perl.c
    yyerror(s)
    char *s;
    {
        char tmpbuf[128];
        char *tname = tmpbuf;

        if (yychar > 256) {
            tname = tokename[yychar-256]; // ???
            if (strEQ(tname,"word"))
                strcpy(tname,tokenbuf); // Oops!
            else if (strEQ(tname,"register"))
                sprintf(tname,"$%s",tokenbuf); // Oops!
            ...

Again, the situation resembles the one described above: data in the .rodata section is being modified once more. Maybe it is just a copy-paste typo, and tmpbuf was meant instead of tname? Or is there really some hidden meaning behind it? In any case, replacing char *tokename[] with char tokename[][32] removes the segmentation fault, and Perl tells us the following:

    $ ./perl test.pl
    syntax error in file test.pl at line 7, next token "strict"
    Execution aborted due to compilation errors.

It turns out that it does not like all that newfangled use strict stuff, and that is what it is trying to tell us! If you delete or comment out these lines in the file, the program runs:

    $ ./perl test.pl
    Hello, World!


6. To summarize

In the end, I achieved my goal: the ancient code from 1987 not only compiles but also runs in a modern Linux environment. Undoubtedly, a large pile of assorted segmentation faults still remains, possibly related to pointer size on a 64-bit architecture. All of this could be cleaned up over a few evenings with a debugger at the ready, but that is a tedious and not very pleasant task. After all, this experiment was planned as entertainment for a boring evening, not as a full-fledged project to be carried through to the end. Is there any practical benefit from all this? Maybe someday some digital archaeologist will stumble upon this article and find it useful. But in the real world, in my opinion, even the experience gained from such research is not particularly valuable.

If anyone is interested, I am publishing a set of two patches. The first fixes the compilation errors, and the second fixes some of the segmentation faults.

P.S. I hasten to disappoint fans of destructive one-liners: they do not work here. Perhaps this version of Perl is too old for such entertainment.

P.P.S. All the best, and have a nice weekend. Thanks to kawaii_neko for a small fix.

Update 28-Oct-2019: A LINUX.ORG.RU forum user with the nickname utf8nowhere posted some quite interesting links in a comment on this article. The information in them not only clarifies the situation with mutable string literals but even covers the mktemp() problem described above! Let me quote these sources, which describe various incompatibilities between non-standardized K&R C and GNU C:

Incompatibilities of GCC

There are several noteworthy incompatibilities between GNU C and K&R (non-ISO) versions of C.

GCC normally makes string constants read-only. If several identical-looking string constants are used, GCC stores only one copy of the string.

One consequence is that you cannot call mktemp with a string constant argument. The function mktemp always alters the string its argument points to.

Another consequence is that sscanf does not work on some systems when passed a string constant as its format control string or input. This is because sscanf incorrectly tries to write into the string constant. Likewise fscanf and scanf.

The best solution to these problems is to change the program to use char-array variables with initialization strings for these purposes instead of string constants. But if this is not possible, you can use the -fwritable-strings flag, which directs GCC to handle string constants the same way most C compilers do.

Source: Using the GNU Compiler Collection (GCC 3.3), official manual.

The -fwritable-strings compiler flag was deprecated in GCC 3.4 and permanently removed in GCC 4.0.

ANSI C rationale | String literals

String literals are specified to be unmodifiable. This specification allows implementations to share copies of strings with identical text, to place string literals in read-only memory, and perform certain optimizations. However, string literals do not have the type array of const char, in order to avoid the problems of pointer type checking, particularly with library functions, since assigning a pointer to const char to a plain pointer to char is not valid. Those members of the Committee who insisted that string literals should be modifiable were content to have this practice designated a common extension (see F.5.5).

Existing code which modifies string literals can be made strictly conforming by replacing the string literal with an initialized static character array. For instance,

    char *p, *make_temp(char *str);
    /* ... */
    p = make_temp("tempXXX");
                /* make_temp overwrites the literal */
                /* with a unique name */

can be changed to:

    char *p, *make_temp(char *str);
    /* ... */
    {
        static char template[ ] = "tempXXX";
        p = make_temp( template );
    }

Source: Rationale for American National Standard for Information Systems, Programming Language C.

The user VarfolomeyKote4ka proposed an interesting dirty hack that bypasses the segmentation faults caused by modifying data in the .rodata section: turning it into a .rwdata section. Not long ago, a very interesting article appeared online, "From .rodata to .rwdata - introduction to memory mapping and LD scripts" by the programmer guye1296, which explains how to do this trick. To make the result easier to obtain, the article's author prepared a rather sizable script for the standard ld linker, rwdata.ld. It is enough to download this script, put it in the root of the Perl source directory, set the LDFLAGS variable to LDFLAGS = -T rwdata.ld, and rebuild the project. As a result, we get the following:

    $ make clean && make -j1
    $ mv perl perl_rodata
    $ curl -LOJ https://raw.githubusercontent.com/guye1296/ld_script_elf_blog_post/master/rwdata.ld
    $ sed -i 's/LDFLAGS =/LDFLAGS = -T rwdata.ld/' Makefile
    $ make clean && make -j1
    $ mv perl perl_rwdata
    $ objdump -s -j .rodata perl_rodata | grep tmp -2
     19da0 21233f5e 7e3d2d25 30313233 34353637 !#?^~=-%01234567
     19db0 38392e2b 262a2829 2c5c2f5b 7c002400 89.+&*(),\/[|.$.
     19dc0 73746465 7272002f 746d702f 7065726c stderr./tmp/perl
     19dd0 2d655858 58585858 00323536 00617070 -eXXXXXX.256.app
     19de0 656e6400 6c6f6f70 63746c00 66756e63 end.loopctl.func
    $ objdump -s -j .rwdata perl_rodata | grep tmp -2
    objdump: section '.rwdata' mentioned in a -j option, but not found in any input file
    $ objdump -s -j .rwdata perl_rwdata | grep tmp -2
     41d9c0 21233f5e 7e3d2d25 30313233 34353637 !#?^~=-%01234567
     41d9d0 38392e2b 262a2829 2c5c2f5b 7c002400 89.+&*(),\/[|.$.
     41d9e0 73746465 7272002f 746d702f 7065726c stderr./tmp/perl
     41d9f0 2d655858 58585858 00323536 00617070 -eXXXXXX.256.app
     41da00 656e6400 6c6f6f70 63746c00 66756e63 end.loopctl.func
    $ objdump -s -j .rodata perl_rwdata | grep tmp -2
    objdump: section '.rodata' mentioned in a -j option, but not found in any input file
    $ ./perl_rodata -e 'print "Hello, World!\n";'
    Segmentation fault (core dumped)
    $ ./perl_rwdata -e 'print "Hello, World!\n";'
    Hello, World!

It turns out that thanks to this hack, almost all the changes from the second patch can simply be dropped! Although, of course, bringing the code into a form that does not violate the standards is still preferable.
