Pavel Selivanov, Southbridge Solution Architect and Slurm Lecturer, made a presentation at DevOpsConf 2019. This report is part of Kubernetes in-depth Slur Mega in-depth course.

Slurm Basic: An introduction to Kubernetes takes place in Moscow on November 18-20.

Slurm Mega: We look under the hood of Kubernetes - Moscow, November 22-24.

Slurm Online: Both Kubernetes courses are always available.

Under the cut - the transcript of the report.

Good afternoon, colleagues and sympathizers. Today I will talk about security.

I see that there are many security guards in the hall today. I apologize in advance to you if I will not use the terms from the security world in the very way that is customary for you.

It so happened that about six months ago I got into the hands of one public cluster of Kubernetes. Public - means that there is an nth number of namespaces, in these namespace there are users isolated in their namespace. All these users belong to different companies. Well, it was supposed that this cluster should be used as a CDN. That is, they give you a cluster, they give the user there, you go there in your namespace, deploy your fronts.

They tried to sell such a service to my previous company. And I was asked to poke a cluster on the subject - is this solution suitable or not.

I came to this cluster. I was given limited rights, limited namespace. There, the guys understood what security was. They read what Kubernetes had Role-based access control (RBAC) - and they twisted it so that I could not run pods separately from deployment. I don’t remember the task that I was trying to solve by running under without deployment, but I really wanted to run just under. I decided for luck to see what rights I have in the cluster, what I can, what I can’t, what they screwed up there. At the same time I’ll tell you that they are configured incorrectly in RBAC.

It so happened that two minutes later I got an admin to their cluster, looked at all the neighboring namespaces, saw the production fronts of companies that had already bought the service and got stuck there. I barely stopped myself, so as not to come to someone in the front and not put any obscene word on the main page.

I will tell you with examples how I did this and how to protect myself from this.

But first, introduce myself. My name is Pavel Selivanov. I am an architect at Southbridge. I understand Kubernetes, DevOps and all sorts of fancy stuff. Southbridge engineers and I are building all this, and I advise.

In addition to our core business, we recently launched projects called Slory. We are trying to bring our ability to work with Kubernetes to the masses, to teach other people how to work with K8s too.

What I will talk about today. The topic of the report is obvious - about the security of the Kubernetes cluster. But I want to say right away that this topic is very big - and therefore I want to immediately stipulate what I will not tell about for sure. I will not talk about hackneyed terms that are already a hundred times over-grinded on the Internet. Any RBAC and certificates.

I will talk about how it hurts me and my colleagues from security in the Kubernetes cluster. We see these problems both with providers who provide Kubernetes clusters and with customers who come to us. And even with clients who come to us from other consulting admin companies. That is, the scale of the tragedy is very large in fact.

Literally three points, which I will talk about today:

- User rights vs pod rights. User rights and hearth rights are not the same thing.

- Collect cluster information. I will show that from the cluster you can collect all the information that you need without having special rights in this cluster.

- DoS attack on the cluster. If we cannot collect information, we can put the cluster in any case. I will talk about DoS attacks on cluster controls.

Another common thing that I’ll mention is where I tested it all, and I can definitely say that it all works.

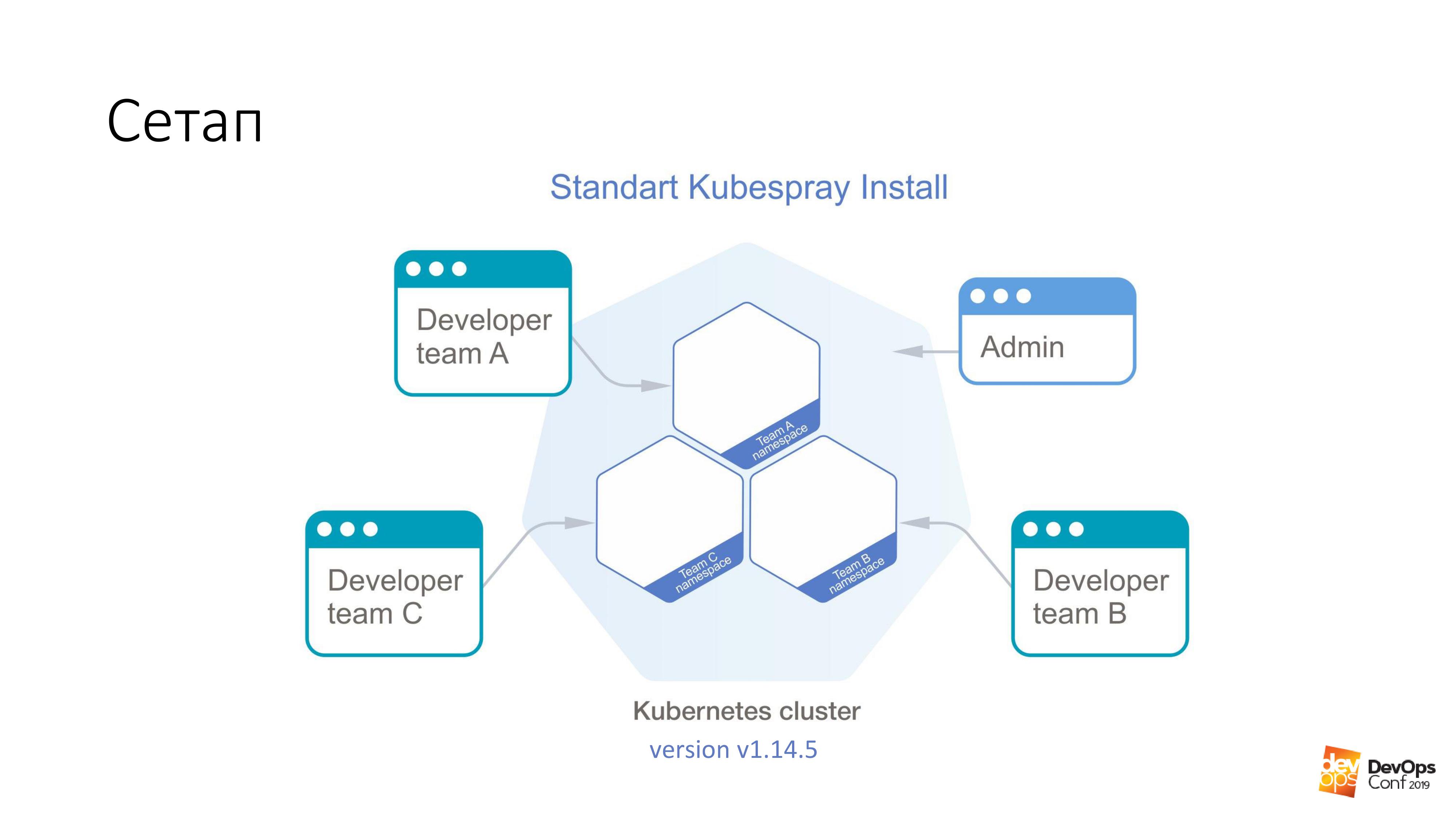

As a basis, we take the installation of a Kubernetes cluster using Kubespray. If someone does not know, this is actually a set of roles for Ansible. We are constantly using it in our work. The good thing is that you can roll anywhere - you can roll on pieces of iron, and somewhere in the cloud. One installation method is suitable in principle for everything.

In this cluster, I will have Kubernetes v1.14.5. The entire cluster of Cuba, which we will consider, is divided into namespaces, each namespace belongs to a separate team, members of this team have access to each namespace. They cannot go to different namespaces, only to their own. But there is some admin account that has rights to the entire cluster.

I promised that the first thing we will have is getting admin rights to the cluster. We need a specially prepared pod that will break the Kubernetes cluster. All we need to do is apply it to the Kubernetes cluster.

kubectl apply -f pod.yaml

This pod will arrive at one of the masters of the Kubernetes cluster. And after that the cluster will happily return a file called admin.conf to us. In Cuba, all admin certificates are stored in this file, and at the same time, the cluster API is configured. This is how easy it is to get admin access, I think, to 98% of Kubernetes clusters.

I repeat, this pod was made by one developer in your cluster who has access to deploy his proposals to one small namespace, he is all clamped by RBAC. He had no rights. Nevertheless, the certificate returned.

And now about the specially prepared hearth. Run on any image. For example, take debian: jessie.

We have such a thing:

tolerations: - effect: NoSchedule operator: Exists nodeSelector: node-role.kubernetes.io/master: ""

What is toleration? The masters in the Kubernetes cluster are usually marked with a thing called taint ("infection" in English). And the essence of this "infection" - she says that pods cannot be assigned to master nodes. But no one bothers to indicate in any way that he is tolerant of the "infection". The Toleration section just says that if NoSchedule stands on some node, then our under such infection is tolerant - and no problems.

Further, we say that our under is not only tolerant, but also wants to specifically fall on the master. Because the masters are the most delicious that we need - all the certificates. Therefore, we say nodeSelector - and we have a standard label on the wizards, which allows us to select exactly those nodes that are wizards from all the nodes of the cluster.

With such two sections, he’ll definitely come to the master. And he will be allowed to live there.

But just coming to the master is not enough for us. It will not give us anything. Therefore, further we have these two things:

hostNetwork: true hostPID: true

We indicate that our under, which we are launching, will live in the kernel namespace, in the network namespace and in the PID namespace. As soon as it starts on the wizard, it will be able to see all the real, live interfaces of this node, listen to all the traffic and see the PID of all processes.

Next, it’s small. Take etcd and read what you want.

The most interesting is this Kubernetes feature, which is present there by default.

volumeMounts: - mountPath: /host name: host volumes: - hostPath: path: / type: Directory name: host

And its essence is that we can say in the hearth that we run, even without rights to this cluster, that we want to create a volume of type hostPath. It means to take the path from the host on which we will start - and take it as volume. And then call it name: host. All this hostPath we mount inside the hearth. In this example, to the / host directory.

I repeat once again. We told the pod to come to the master, get hostNetwork and hostPID there - and mount the entire root of the master inside this pod.

You understand that in debian we have bash running, and this bash works under our root. That is, we just got the root for the master, while not having any rights in the Kubernetes cluster.

Then the whole task is to go into the sub directory / host / etc / kubernetes / pki, if I am not mistaken, pick up all the master certificates of the cluster there and, accordingly, become the cluster admin.

If you look this way, these are some of the most dangerous rights in pods - regardless of what rights a user has:

If I have rights to run under in some cluster namespace, then this sub has these rights by default. I can run privileged pods, and this is generally all rights, practically root on the node.

My favorite is Root user. And Kubernetes has such an option Run As Non-Root. This is a type of hacker protection. Do you know what the “Moldavian virus" is? If you are a hacker and come to my Kubernetes cluster, then we, poor administrators, ask: “Please indicate in your pods with which you will hack my cluster, run as non-root. And it so happens that you start the process in your hearth under the root, and it will be very easy for you to hack me. Please protect yourself from yourself. ”

Host path volume - in my opinion, the fastest way to get the desired result from the Kubernetes cluster.

But what to do with all this?

Thoughts that should come to any normal administrator who encounters Kubernetes: “Yeah, I told you, Kubernetes does not work. There are holes in it. And the whole cube is bullshit. " In fact, there is such a thing as documentation, and if you look there, then there is a Pod Security Policy section.

This is such a yaml-object - we can create it in the Kubernetes cluster - which controls the security aspects in the description of the hearths. That is, in fact, it controls those rights to use all kinds of hostNetwork, hostPID, certain types of volume, which are in the pods at startup. With Pod Security Policy, all of this can be described.

The most interesting thing in the Pod Security Policy is that in the Kubernetes cluster, all PSP installers are not simply not described in any way, they are simply turned off by default. Pod Security Policy is enabled using the admission plugin.

Okay, let's end up with a cluster of Pod Security Policy, let's say that we have some kind of service pods in the namespace, which only admins have access to. Let's say in all other pods they have limited rights. Because most likely, developers do not need to run privileged pods in your cluster.

And everything seems to be fine with us. And our Kubernetes cluster cannot be hacked in two minutes.

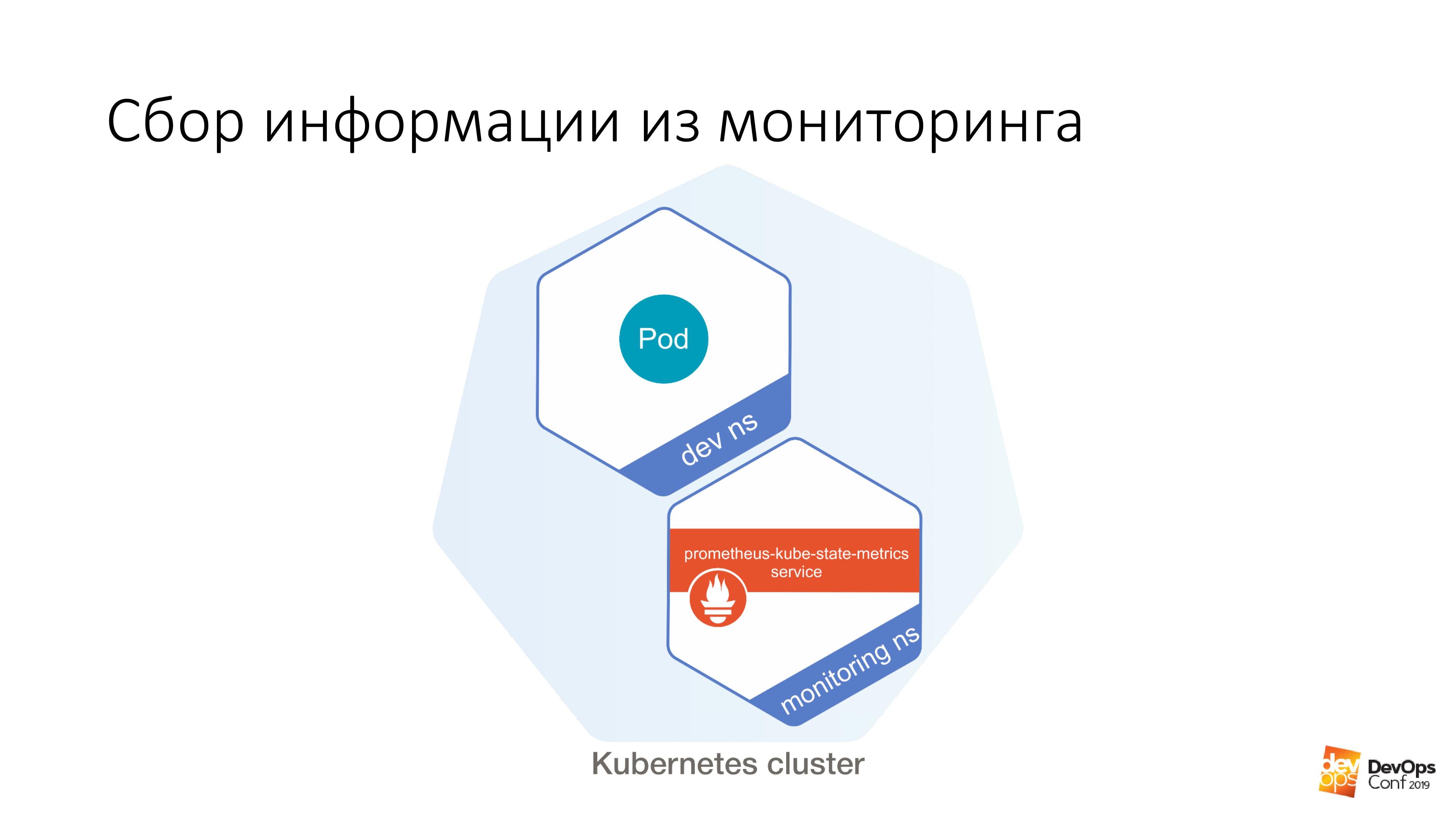

There is a problem. Most likely, if you have a Kubernetes cluster, then monitoring is installed in your cluster. I even presume to predict that if your cluster has monitoring, then it is called Prometheus.

What I will tell you now will be valid both for the Prometheus operator and for the Prometheus delivered in its pure form. The question is that if I can’t get an admin so quickly into the cluster, it means that I need to look more. And I can search using your monitoring.

Probably, everyone read the same articles on Habré, and monitoring is in monitoring. Helm chart is called approximately the same for everyone. I assume that if you do helm install stable / prometheus, then you will get approximately the same names. And even most likely I will not have to guess the DNS name in your cluster. Because it is standard.

Further we have a certain dev ns, in it it is possible to launch a certain under. And further from this hearth it is very easy to do like this:

$ curl http://prometheus-kube-state-metrics.monitoring

prometheus-kube-state-metrics is one of the prometheus exporters that collects metrics from the Kubernetes API itself. There is a lot of data that is running in your cluster, what it is, what problems you have with it.

As a simple example:

kube_pod_container_info {namespace = "kube-system", pod = "kube-apiserver-k8s-1", container = "kube-apiserver", image =

"gcr.io/google-containers/kube-apiserver:v1.14.5"

, image_id = "docker-pullable: //gcr.io/google-containers/kube- apiserver @ sha256: e29561119a52adad9edc72bfe0e7fcab308501313b09bf99df4a96 38ee634989", container_id = "docker: // 7cbe7b1f8ff2f2f2f2f2ff2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2f2fe2f2f

Having made a simple curl request from an unprivileged file, you can get such information. If you do not know which version of Kubernetes you are running in, then it will easily tell you.

And the most interesting is that besides the fact that you turn to kube-state-metrics, you can just as directly turn to Prometheus itself. You can collect metrics from there. You can even build metrics from there. Even theoretically, you can build such a request from a cluster in Prometheus, which simply turns it off. And your monitoring generally ceases to work from the cluster.

And here the question already arises whether any external monitoring monitors your monitoring. I just got the opportunity to act in the Kubernetes cluster without any consequences for myself. You won’t even know that I’m acting there, since there’s no monitoring anymore.

Just like with PSP, it feels like the problem is that all these trendy technologies - Kubernetes, Prometheus - they just don't work and are full of holes. Not really.

There is such a thing - Network Policy .

If you are a normal admin, then most likely about Network Policy you know that this is another yaml, which in the cluster is already dofig. And some network policies are definitely not needed. And even if you read what Network Policy is, what is the Kubernetes yaml-firewall, it allows you to restrict access rights between namespaces, between pods, then you certainly decided that the yaml-type firewall in Kubernetes is on the next abstractions ... No, not . This is definitely not necessary.

Even if your security specialists weren’t told that using your Kubernetes you can build a firewall very easily and simply, and it’s very granular. If they still don’t know this and don’t pull you: “Well, give, give ...” In any case, you need Network Policy to block access to some service places that you can pull from your cluster without any authorization.

As in the example that I cited, you can pull the kube state metrics from any namespace in the Kubernetes cluster without having any rights to it. Network policies have closed access from all other namespaces to the namespace monitoring and, as it were, everything: no access, no problems. In all the charts that exist, both the standard prometeus, and that prometeus that is in the operator, there simply in the values of the helm there is an option to simply enable network policies for them. You just need to turn it on and they will work.

There is really one problem here. Being a normal bearded admin, you most likely decided that network policies are not needed. And after reading all sorts of articles on resources such as Habr, you decided that flannel especially with host-gateway mode is the best thing you can choose.

What to do?

You can try re-installing the network solution that is in your Kubernetes cluster, try replacing it with something more functional. On the same Calico, for example. But I want to say right away that the task of changing the network solution in the Kubernetes working cluster is quite nontrivial. I solved it twice (both times, theoretically, though), but we even showed how to do this on the Slurms. For our students, we showed how to change the network solution in the Kubernetes cluster. In principle, you can try to make sure that there is no downtime on the production cluster. But you probably won’t succeed.

And the problem is actually solved very simply. There are certificates in the cluster, and you know that your certificates will go bad in a year. Well, and usually a normal solution with certificates in the cluster - why are we going to steam, we’ll raise a new cluster next to it, let it go rotten in the old one, and we’ll redo everything. True, when it goes bad, everything will lie down in our day, but then a new cluster.

When you will raise a new cluster, at the same time insert Calico instead of flannel.

What to do if you have certificates issued for a hundred years and you are not going to re-cluster the cluster? There is such a thing Kube-RBAC-Proxy. This is a very cool development, it allows you to embed yourself as a sidecar container to any hearth in the Kubernetes cluster. And she actually adds authorization through Kubernetes RBAC to this pod.

There is one problem. Previously, in the operator's prometheus, this Kube-RBAC-Proxy solution was built-in. But then he was gone. Now, modern versions rely on the fact that you have a network policy and close using them. And so you have to rewrite the chart a bit. In fact, if you go to this repository , there are examples of how to use it as sidecars, and you will have to rewrite the charts minimally.

There is another small problem. Not only Prometheus gives its metrics to anyone who gets it. We have all the components of the Kubernetes cluster, too, they can give their metrics.

But as I said, if you can’t get access to the cluster and collect information, then you can at least do harm.

So I’ll quickly show you two ways you can spoil your Kubernetes cluster.

You will laugh when I tell you, these are two cases from real life.

The first way. Running out of resources.

We launch one more special under. He will have such a section.

resources: requests: cpu: 4 memory: 4Gi

As you know, requests - this is the amount of CPU and memory that is reserved on the host for specific pods with requests. If we have a four-core host in the Kubernetes cluster, and four CPUs come there with requests, then no more pods with requests will be able to come to this host.

If I run this under, then I will make a command:

$ kubectl scale special-pod --replicas=...

Then no one else will be able to deploy to the Kubernetes cluster. Because in all nodes the requests will end. And so I stop your Kubernetes cluster. If I do this in the evening, then I can stop deployment for quite some time.

If we look again at the Kubernetes documentation, then we will see such a thing called the Limit Range. It sets resources for cluster objects. You can write a Limit Range object in yaml, apply it to certain namespaces - and further in this namespace you can say that you have resources for the default, maximum and minimum pods.

With the help of such a thing, we can limit users in specific product namespace teams in the ability to indicate all kinds of nasty things on their pods. But unfortunately, even if you tell the user that it is impossible to run pods with requests of more than one CPU, there is such a wonderful scale command, well, or through dashboard they can do scale.

And from here comes the number two method. We launch 11 111 111 111 111 hearths. That is eleven billion. This is not because I came up with such a number, but because I saw it myself.

Real story. Late in the evening I was about to leave the office. I look, a group of developers is sitting in the corner and doing something frantically with laptops. I go up to the guys and ask: “What happened to you?”

A little earlier, at nine in the evening, one of the developers was going home. And he decided: "I’ll skip my application to one now." I clicked on a little, and the Internet a little dull. He once again clicked on the unit, he pressed on the unit, clicked Enter. Poked on everything that he could. Then the Internet came to life - and everything began to scale to this date.

True, this story did not occur on Kubernetes, at that time it was Nomad. It ended with the fact that after an hour of our attempts to stop Nomad from stubborn attempts to stick together, Nomad replied that he would not stop sticking and would not do anything else. "I'm tired, I'm leaving." And curled up.

I naturally tried to do the same on Kubernetes. The eleven billion pods of Kubernetes were not pleased, he said: “I can’t. Exceeds the internal mouthguards. " But 1,000,000,000 hearths could.

In response to one billion, the Cube did not go inside. He really started to scale. The further the process went, the more time it took him to create new hearths. But still the process was going on. The only problem is that if I can run pods indefinitely in my namespace, then even without requests and limits I can start up so many pods with some tasks that with these tasks the nodes will start to add up from memory, from the CPU. When I run so many hearths, the information from them should go to the repository, that is, etcd. And when too much information arrives there, the storehouse begins to give out too slowly - and at Kubernetes dull things begin.

And one more problem ... As you know, the control elements of Kubernetes are not just one central thing, but several components. There, in particular, there is a controller manager, scheduler, and so on. All these guys will start doing unnecessary stupid work at the same time, which over time will start to take more and more time. The controller manager will create new pods. Scheduler will try to find them a new node. New nodes in your cluster will most likely end soon. The Kubernetes cluster will start to work more slowly and slowly.

But I decided to go even further. As you know, in Kubernetes there is such a thing called service. Well, and by default in your clusters, most likely, the service works using IP tables.

If you run one billion hearths, for example, and then use script to force Kubernetis to create new services:

for i in {1..1111111}; do kubectl expose deployment test --port 80 \ --overrides="{\"apiVersion\": \"v1\", \"metadata\": {\"name\": \"nginx$i\"}}"; done

On all nodes of the cluster, approximately new iptables rules will be generated approximately simultaneously. Moreover, for each service, one billion iptables rules will be generated.

I checked this whole thing on several thousand, up to a dozen. And the problem is that already at this threshold ssh on a node is quite problematic to do. Because the packets, passing such a number of chains, begin to not feel very good.

And all this is also solved with the help of Kubernetes. There is such a Resource quota object. Sets the number of available resources and objects for the namespace in the cluster. We can create a yaml object in each namespace of the Kubernetes cluster. Using this object, we can say that we have allocated a certain number of requests, limits for this namespace, and then we can say that in this namespace it is possible to create 10 services and 10 pods. And a single developer can at least squeeze in the evenings. Kubernetes will tell him: “You cannot stick your pods to such an amount because it exceeds the resource quota.” Everything, the problem is solved. The documentation is here .

One problematic point arises in connection with this. You feel how difficult it becomes to create a namespace in Kubernetes. To create it, we need to consider a bunch of things.

Resource quota + Limit Range + RBAC

• Create a namespace

• Create inside limitrange

• Create inside resourcequota

• Create a serviceaccount for CI

• Create a rolebinding for CI and users

• Optionally run the necessary service pods

Therefore, taking this opportunity, I would like to share my developments. There is such a thing, called the SDK operator. This is a way in the Kubernetes cluster to write operators for it. You can write statements using Ansible.

First, it was written in Ansible, and then I looked that there was an SDK operator and rewrote the Ansible role in the operator. This operator allows you to create an object in the Kubernetes cluster called a team. yaml . , - .

. ?

The first one. Pod Security Policy — . , , - .

Network Policy — - . , .

LimitRange/ResourceQuota — . , , . , .

. , warlocks , .

, , . , ResourceQuota, Pod Security Policy . .

Thanks to all.