Tesseract is an open source optical character recognition engine (OCR) engine that is the most popular and high-quality OCR library.

OCR uses neural networks to search and recognize text in images.

Tesseract searches for patterns in pixels, letters, words, and sentences, and uses a two-step approach called adaptive recognition. It takes one pass through the data for character recognition, then a second pass to fill in any letters that he was not sure of with letters that most likely correspond to the given word or sentence context.

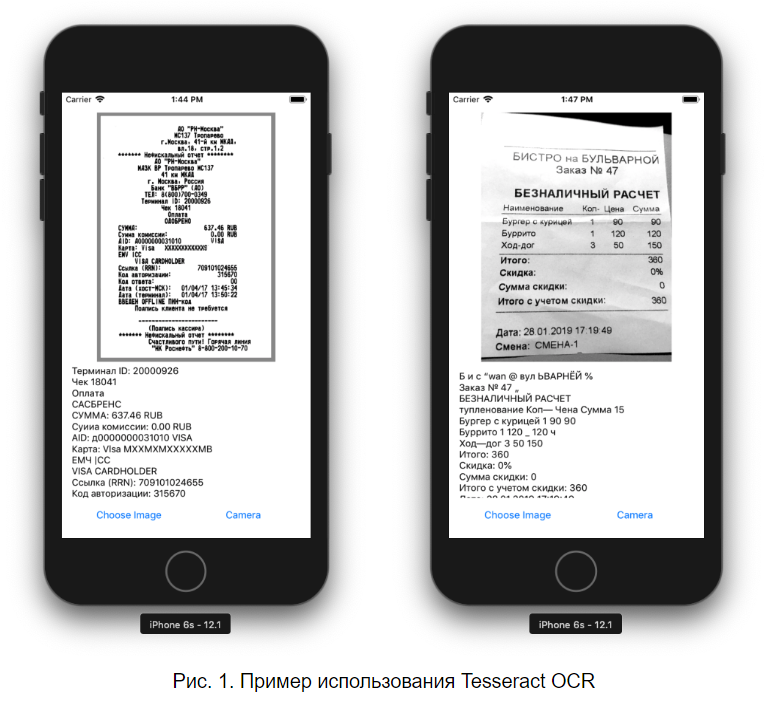

One of the projects was to recognize checks from photographs.

The recognition tool used was Tesseract OCR . The advantages of this library include trained language models (> 192), different types of recognition (image as a word, block of text, vertical text), easy setup. Since Tesseract OCR is written in C ++, a third-party wrapper with github was used.

The differences between the versions are different trained models (version 4 has greater accuracy, so we used it).

We need data files for text recognition, each language has its own file. You can download the data here .

The better the quality of the original image (size, contrast, lighting matter), the better the recognition result.

An image processing method was also found for its further recognition by using the OpenCV library. Since OpenCV is written in C ++, and there is no written wrapper for our solution, it was decided to write our own wrapper for this library with the necessary image processing functions. The main difficulty is the selection of values for the filter for the correct image processing. It is also possible to find the contours of checks / text, but not fully understood. The result is better (5-10%).

Options:

language - the language of the text from the image, you can select several by listing them through "+";

pageSegmentationMode - type of text location in the picture;

charBlacklist - characters to be ignored ignoring characters.

Using only Tesseract gave an accuracy of ~ 70% with a perfect image, with poor lighting / picture quality the accuracy was ~ 30%.

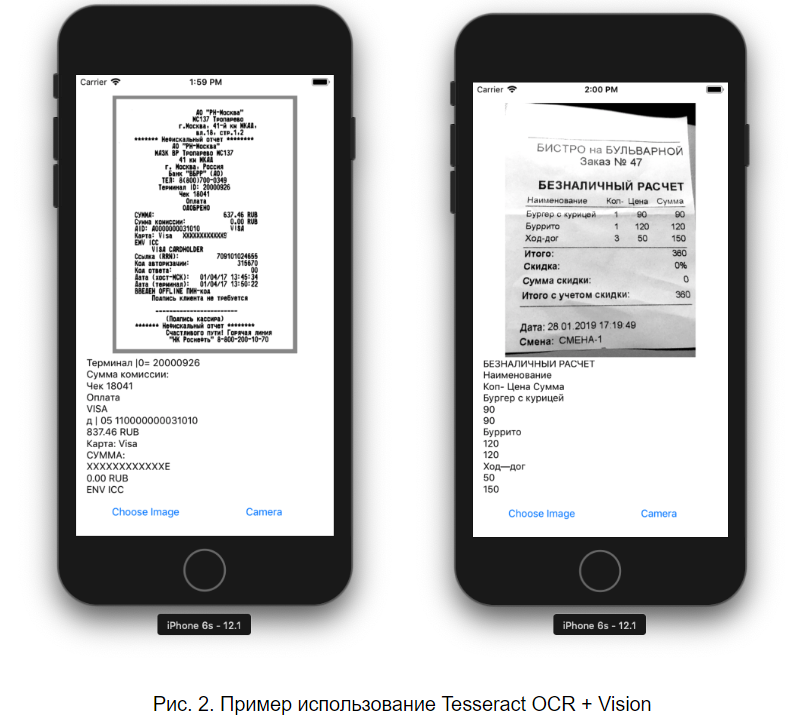

Vision + Tesseract OCR

Since the result was unsatisfactory, it was decided to use the library from Apple - Vision. We used Vision to find blocks of text, further divide the image into separate blocks and recognize them. The result was better by ~ 5%, but errors appeared due to repeated blocks.

The disadvantages of this solution were:

- Speed of work. The speed of operation has decreased> 4 times (there may be a variant of spreading)

- Some blocks of text were recognized more than 1 time

- The text is recognized from right to left, which is why the text on the right side of the check was recognized earlier than the text on the left.

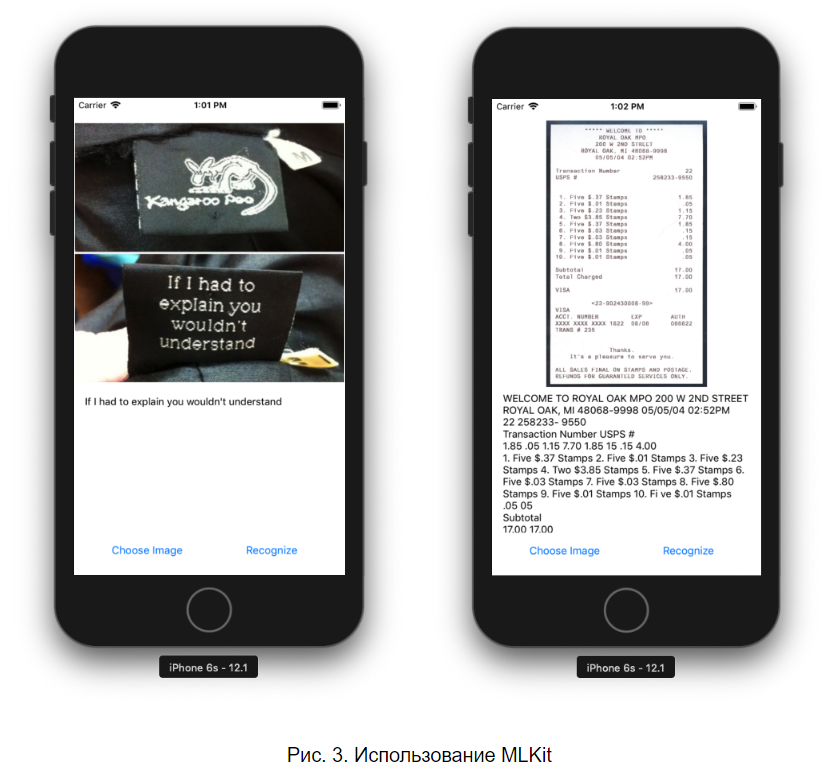

MLKit

Another text detection method is Google’s MLKit, deployed on Firebase. This method showed the best results (~ 90%), but the main drawback of this method is the support of only Latin characters and the difficult processing of divided text in one line (name - on the left, price - on the right).

In the end, we can say that recognizing text in images is a doable task, but there are some difficulties. The main problem is the quality (size, illumination, contrast) of the image, which can be solved by filtering the image. When recognizing text using Vision or MLKit, there were problems with the wrong order of text recognition, processing of split text.

Recognized text can be manually corrected and suitable for use; in most cases, when recognizing text from checks, the total amount is recognized well and does not need to be adjusted.