A few years ago, we identified flood forecasting as a unique opportunity to improve people's lives, and began exploring how Google's infrastructure and machine learning expertise could help in this area. Last year, we launched our flood forecasting pilot in the Patna region of India, and since then we have expanded our forecast coverage as part of our broader AI for Social Good efforts. In this article, we discuss some of the technology and methodology behind this work.

Flood model

A critical step in developing an accurate flood forecasting system is building flood (inundation) models, which take either a measurement or a forecast of a river's water level as input and simulate how the water behaves across the floodplain.

Three-dimensional visualization of a hydraulic model simulating various river states

This allows us to translate current or future river conditions into highly accurate spatial risk maps that tell us which areas will be flooded and which will remain safe. Flood models depend on four main components, each with its own challenges and innovations:

Real-time water level measurement

To run these models operationally, we need to know what is happening on the ground in real time, so we rely on partnerships with the relevant government agencies to receive accurate and timely information. Our first government partner was India's Central Water Commission (CWC), which measures water levels hourly at more than a thousand stream gauges across India, aggregates this data, and produces forecasts based on upstream measurements. The CWC provides these real-time river measurements and forecasts, which we then use as inputs to our models.

CWC staff measuring water level and discharge near Lucknow

Creating an elevation map

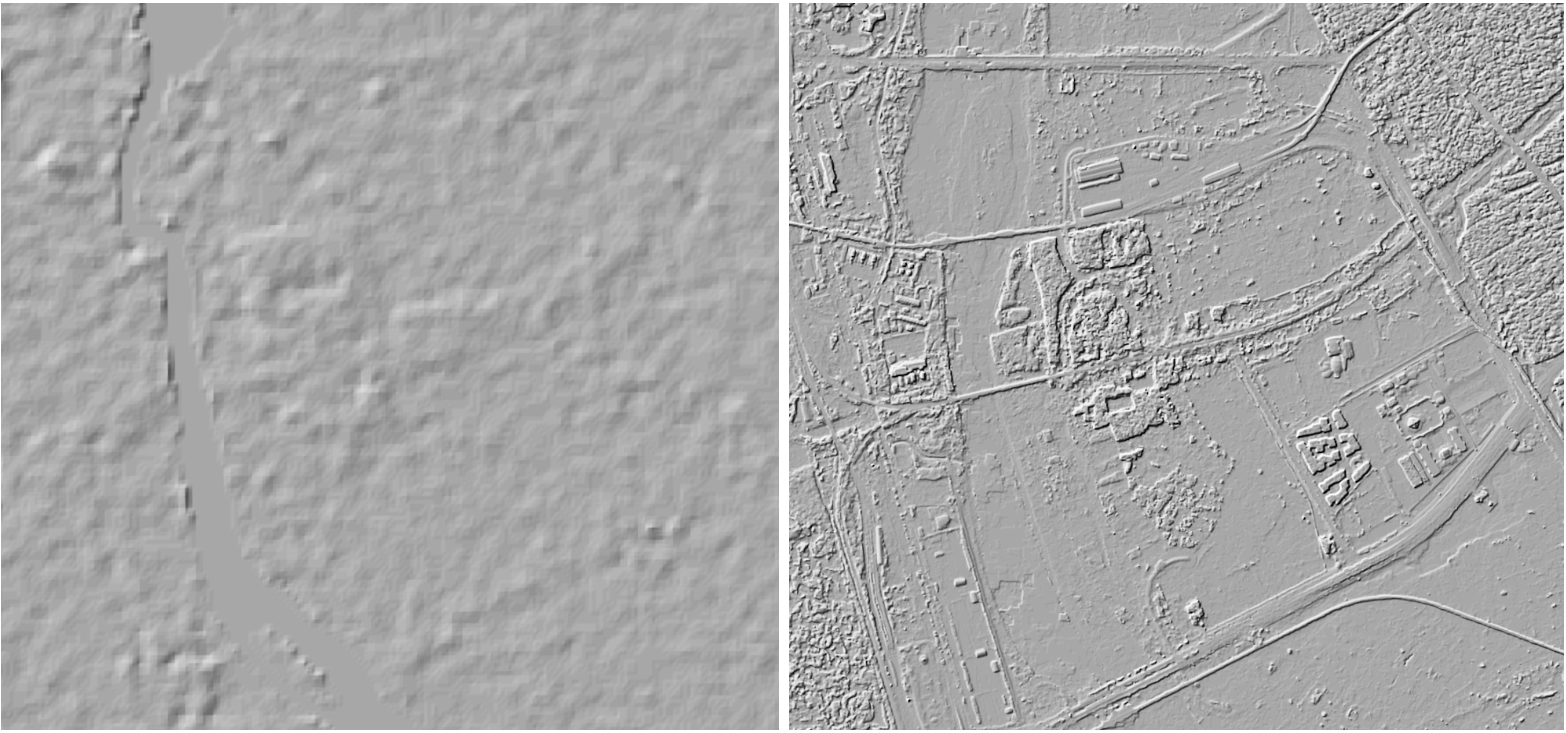

Once we know how much water is in the river, it is critical to give the model a good map of the terrain. High-resolution digital elevation models (DEMs) are extremely useful for a wide range of applications in the earth sciences, but they are still unavailable for most of the world, which is a particular problem for flood forecasting. Meter-scale terrain features can make a critical difference to the resulting flooding (embankments are an especially important example), yet publicly available DEMs have resolutions of tens of meters. To address this, we developed a new methodology that produces high-resolution DEMs from entirely standard optical imagery.
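To make the resolution argument concrete, here is a minimal sketch (not part of the pipeline described in this article) that block-averages a hypothetical 1 m cross-section containing a 2 m-wide, 3 m-high embankment down to 30 m cells, roughly mimicking a coarse public DEM. The profile values and the `block_average` helper are illustrative assumptions; real coarse DEMs are not produced by simple averaging.

```python
def block_average(profile, factor):
    """Downsample a 1-D elevation profile by averaging non-overlapping blocks."""
    return [sum(profile[i:i + factor]) / factor
            for i in range(0, len(profile) - factor + 1, factor)]


# Hypothetical 1 m-resolution cross-section: flat floodplain at 50 m
# elevation with a 2 m-wide embankment rising 3 m above it.
fine = [50.0] * 60
fine[30] = fine[31] = 53.0

coarse = block_average(fine, 30)            # 30 m cells, like a coarse public DEM
print(max(fine) - min(fine))                # 3.0 m of relief at 1 m resolution
print(round(max(coarse) - min(coarse), 2))  # 0.2 m of relief at 30 m resolution
```

In the coarse profile the embankment survives only as a 0.2 m bump, so a simulated river at 51 m would overtop it there, while in the 1 m profile the same river would be held back.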

We start with the large and varied collection of satellite imagery used in Google Maps. By correlating and aligning the images in large batches, we simultaneously correct the satellite camera models (for orientation errors, etc.) and estimate a coarse terrain elevation. We then use the corrected camera models to create a depth map for each image. To build the elevation map, we optimally fuse the depth maps at each location. Finally, we remove objects such as trees and bridges so that they do not block water flow in the simulations. This can be done manually or by training convolutional neural networks to identify where terrain elevations need to be interpolated. The result is a DEM with a resolution of roughly 1 m, which can be used to run hydraulic models.
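The article says only that the depth maps are "optimally" fused at each location and does not describe how. As an illustrative stand-in, the sketch below fuses co-registered elevation estimates with a per-cell median, which tolerates occasional gross outliers (for example, from a badly matched image) better than a plain mean. The function name `fuse_depth_maps`, the list-of-lists grid layout, and the use of NaN for unobserved cells are assumptions.

```python
import math
from statistics import median


def fuse_depth_maps(maps):
    """Fuse co-registered elevation estimates with a per-cell median.

    `maps` is a list of equally sized 2-D grids (lists of lists); NaN marks
    cells that a given image could not observe. Cells observed by no image
    stay NaN in the output.
    """
    rows, cols = len(maps[0]), len(maps[0][0])
    fused = []
    for r in range(rows):
        row = []
        for c in range(cols):
            samples = [m[r][c] for m in maps if not math.isnan(m[r][c])]
            row.append(median(samples) if samples else math.nan)
        fused.append(row)
    return fused


nan = math.nan
a = [[10.0, 12.0, nan]]
b = [[10.2, 30.0, 14.0]]   # 30.0 is a gross outlier
c = [[9.9, 12.1, 14.5]]
print(fuse_depth_maps([a, b, c]))  # [[10.0, 12.1, 14.25]]
```

The outlier in the middle cell is simply outvoted; a least-squares or confidence-weighted fusion would be a natural refinement.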

A 30 m-resolution DEM of a section of the Yamuna River compared with a 1 m-resolution DEM of the same area generated by Google
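Given an elevation map and a river water level, the crudest possible flood map is a static "connected bathtub" model: a cell floods if it lies below the water level and is connected to the river through other such cells. The sketch below only illustrates why the DEM matters. It is not the hydraulic model described in the next section, which simulates how water actually moves over time, and `flood_extent`, the grid format, and the toy DEM are hypothetical.

```python
from collections import deque


def flood_extent(dem, water_level, sources):
    """Return a boolean grid of cells flooded by a static water surface.

    A cell floods only if its elevation is strictly below `water_level`
    AND it is 4-connected to one of the `sources` (e.g. river channel cells)
    through other flooded cells. Breadth-first flood fill.
    """
    rows, cols = len(dem), len(dem[0])
    flooded = [[False] * cols for _ in range(rows)]
    queue = deque()
    for r, c in sources:
        if dem[r][c] < water_level and not flooded[r][c]:
            flooded[r][c] = True
            queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and not flooded[nr][nc] and dem[nr][nc] < water_level):
                flooded[nr][nc] = True
                queue.append((nr, nc))
    return flooded


# Toy DEM (metres): a 5 m ridge in column 2 separates the river side
# (left) from a low-lying area (right).
dem = [
    [1.0, 1.0, 5.0, 1.0],
    [1.0, 2.0, 5.0, 1.0],
    [1.0, 1.0, 5.0, 1.0],
]
for row in flood_extent(dem, water_level=3.0, sources=[(1, 0)]):
    print("".join("~" if wet else "." for wet in row))
# ~~..
# ~~..
# ~~..
```

The cells to the right of the ridge stay dry even though they are lower than the water level, because the ridge cuts them off from the river; a DEM too coarse to resolve such a ridge would flood them.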

Hydraulic modeling

With all of these inputs in hand (river measurements, forecasts and an elevation map), we can start the modeling itself, which can be divided into two main components. The first and most substantial is a physics-based hydraulic model that updates the location and velocity of the water over time based on an approximate computation of the laws of physics. Specifically, we have implemented a solver for the two-dimensional shallow-water (Saint-Venant) equations. These models are sufficiently accurate when run at high resolution with accurate inputs, but their computational cost is a challenge: it scales with the cube of the resolution, so doubling the resolution requires roughly 8 times as much computation time. And since we are committed to the high resolution that accurate forecasts require, the computational cost of this model can become prohibitive, even for Google!
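The article does not say which numerical scheme the solver uses. Assuming an explicit scheme whose time step is limited by the Courant-Friedrichs-Lewy (CFL) condition, which is common for shallow-water solvers, the cubic scaling follows from counting cells and time steps for a domain of size $L_x \times L_y$, grid spacing $\Delta x$, simulated duration $T$, Courant number $C$, gravitational acceleration $g$, water depth $h$ and flow velocity $\mathbf{u}$:

$$
\begin{aligned}
N_{\text{cells}} &= \frac{L_x L_y}{\Delta x^{2}}, \qquad
\Delta t \le C\,\frac{\Delta x}{\max_{\text{cells}}\left(\lvert\mathbf{u}\rvert + \sqrt{g h}\right)}
\;\Rightarrow\; N_{\text{steps}} = \frac{T}{\Delta t} \propto \frac{1}{\Delta x}, \\
\text{cost} &\propto N_{\text{cells}}\, N_{\text{steps}} \propto \frac{1}{\Delta x^{3}}, \qquad
\frac{\text{cost}(\Delta x/2)}{\text{cost}(\Delta x)} = 2^{3} = 8.
\end{aligned}
$$

Halving the grid spacing quadruples the number of cells and also halves the largest stable time step, which together give the factor of 8.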

To address this, we built a unique implementation of our hydraulic model optimized for Tensor Processing Units (TPUs). Although TPUs were optimized for neural networks rather than for solving differential equations, their highly parallel architecture makes performance per TPU core about 85 times higher than per CPU core. Further efficiency gains come from using machine learning to replace some of the physics-based algorithms and from extending data-driven discretization to two-dimensional hydraulic models, which lets us support even larger grids.

Simulation of flooding in Goalpara on a TPU

As mentioned above, the hydraulic model is only one component of our flood forecasts. We have repeatedly encountered areas where our hydraulic models were not accurate enough, whether because of inaccuracies in the DEM, breached embankments, or unexpected sources of water. Our goal is to find effective ways to reduce these errors. To this end, we added a predictive flood model based on historical measurements. Since 2014, the European Space Agency has operated the Sentinel-1 satellite constellation, which carries C-band synthetic-aperture radar (SAR) instruments. SAR imagery is excellent for detecting flooding and can be acquired regardless of cloud cover and weather. Using this valuable data set, we correlate historical water level measurements with historical floods, which lets us identify consistent corrections to our hydraulic model. Based on the outputs of both components, we can estimate which discrepancies are caused by genuine changes in ground conditions and which are caused by model inaccuracies.
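The article does not describe the predictive model's internals. As a hypothetical illustration of correlating historical water levels with historical floods, the sketch below fits, for a single map cell, the gauge level above which that cell has been observed wet (for example, in SAR scenes), choosing the threshold that minimizes misclassifications. `fit_threshold` and the sample observations are invented for illustration.

```python
def fit_threshold(observations):
    """Fit the gauge level at or above which one map cell is predicted wet.

    `observations` is a list of (gauge_level_m, observed_wet) pairs for a
    single cell, e.g. gauge readings at image acquisition times paired with
    a wet/dry classification of that cell. Returns (threshold, errors) for
    the candidate threshold with the fewest misclassifications; ties go to
    the lowest level, and float("inf") means "never observed to flood".
    """
    candidates = sorted({level for level, _ in observations})
    candidates.append(float("inf"))
    best, best_errors = None, None
    for t in candidates:
        errors = sum((level >= t) != wet for level, wet in observations)
        if best_errors is None or errors < best_errors:
            best, best_errors = t, errors
    return best, best_errors


obs = [(48.0, False), (49.5, False), (49.8, True), (50.2, True), (51.0, True)]
print(fit_threshold(obs))  # (49.8, 0)
```

If the hydraulic model only flooded this cell once the gauge reached, say, 51.5 m, a gap that persisted across many events would suggest a systematic modeling error (such as a DEM artifact) that a consistent correction could address.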

Flood warnings across Google's interfaces

Future plans

We still have a lot to do to fully realize the benefits of our flood models. First and foremost, we are working to expand the coverage of our operational systems, both in India and in other countries. We also want to provide more information in real time, including forecast flood depth, timing information and more. In addition, we are researching how best to convey this information to individuals as clearly as possible and encourage them to take protective measures.

Although flood models are good tools for improving the spatial resolution (and therefore the accuracy and reliability) of existing flood forecasts, many of the government agencies and international organizations we have worked with are concerned about areas that have no access to effective flood forecasts, or whose forecasts do not provide enough lead time for an effective response. In parallel with our work on the flood model, we are conducting basic research on improved hydrological models, which we hope will allow governments not only to produce spatially more accurate forecasts but also to gain more time to prepare.

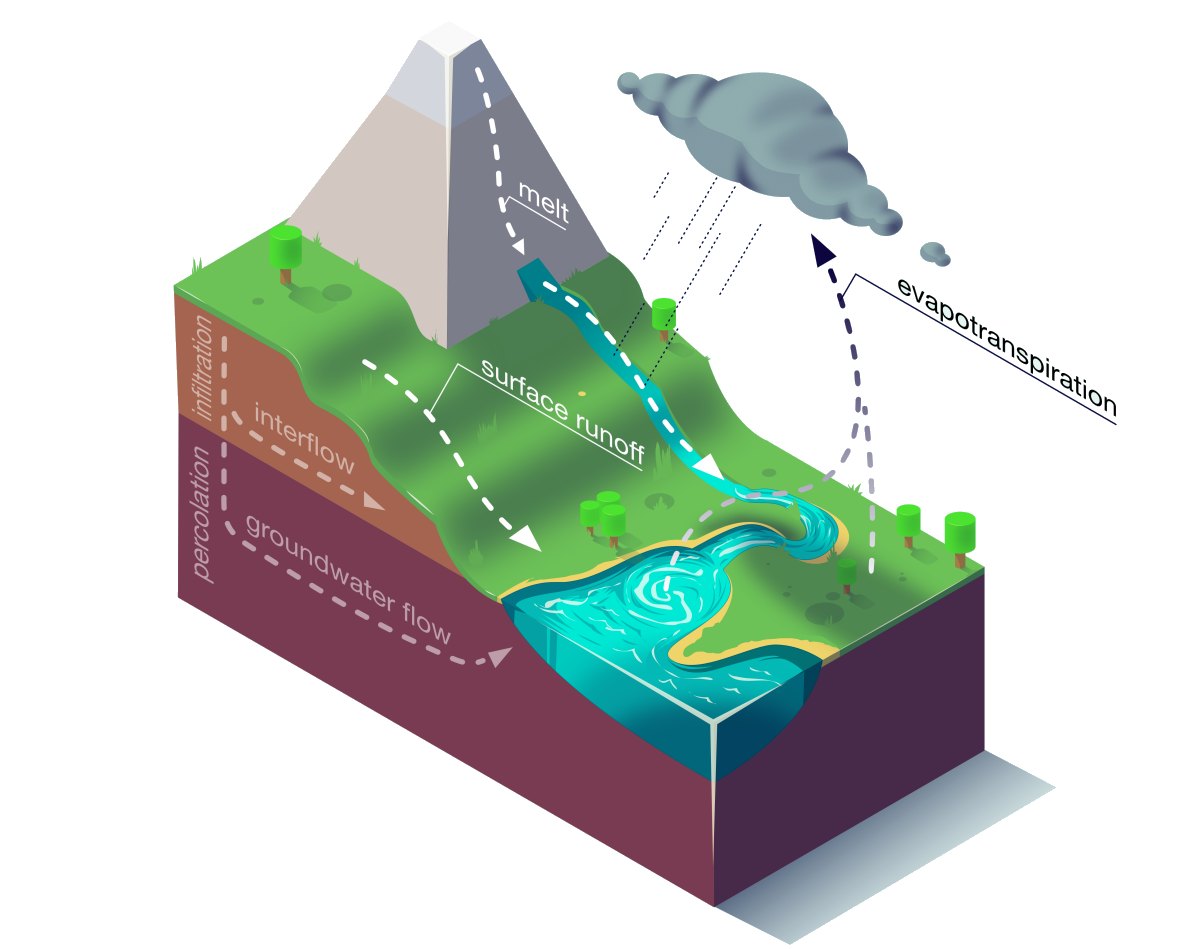

Hydrological models take inputs such as precipitation, solar radiation and soil moisture, and produce a forecast of river discharge (among other quantities) several days into the future. These models are traditionally built from a combination of conceptual models that approximate key processes such as snowmelt, surface runoff, evapotranspiration and more.
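To make "conceptual model" concrete, here is a deliberately tiny sketch that chains two textbook components: a degree-day snowmelt rule and a single linear reservoir for runoff. It omits evapotranspiration, soil moisture and radiation, and its parameter names and default values (`k`, `ddf`, `t_melt`) are hypothetical; real conceptual models combine many more such stores, each with parameters that must be calibrated per basin.

```python
def simulate_discharge(precip_mm, temp_c, k=0.2, ddf=3.0, t_melt=0.0):
    """Toy conceptual rainfall-runoff model with hypothetical parameters.

    - Snow: precipitation on days colder than `t_melt` (deg C) accumulates
      as snow; on warmer days the pack melts at ddf * (T - t_melt) mm/day
      (degree-day rule), limited by the snow available.
    - Runoff: rain plus melt fills a single linear reservoir that releases
      a fraction `k` of its storage each day (Q = k * S).
    Returns the daily outflow in mm/day.
    """
    snow, storage, outflow = 0.0, 0.0, []
    for p, t in zip(precip_mm, temp_c):
        if t < t_melt:
            snow += p                       # accumulate snowpack
            liquid = 0.0
        else:
            melt = min(snow, ddf * (t - t_melt))
            snow -= melt
            liquid = p + melt               # rain plus snowmelt
        storage += liquid
        q = k * storage                     # linear reservoir release
        storage -= q
        outflow.append(q)
    return outflow


print(simulate_discharge([10, 0, 0, 5], [-5, 2, 4, 3], k=0.5))
# [0.0, 3.0, 3.5, 4.25]
```

The snowfall on day one produces no discharge until warmer days melt it, which is the kind of delayed response these process models are designed to capture.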

Key processes in a hydrological model

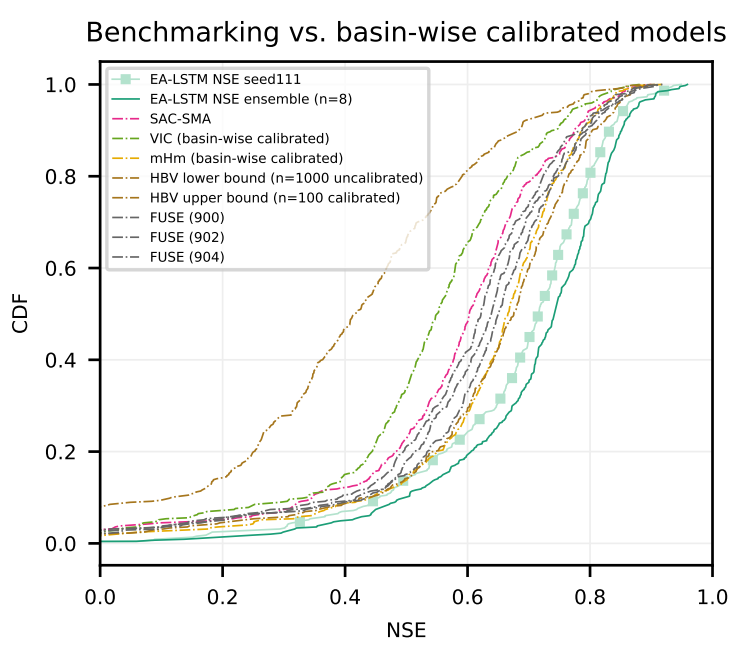

These models also usually require extensive manual calibration and tend to perform poorly in data-scarce regions. We are studying how multi-task learning can address both of these problems and make hydrological models both more scalable and more accurate. In a research collaboration with Sepp Hochreiter's group at the Institute for Machine Learning at Johannes Kepler University Linz on machine-learning-based hydrological models, Kratzert et al. showed that long short-term memory (LSTM) networks outperform all of the classical hydrological models they were benchmarked against.

Distribution of Nash-Sutcliffe efficiency (NSE) scores across US river basins for various models. The EA-LSTM consistently outperforms a wide range of commonly used models.
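For reference, the Nash-Sutcliffe efficiency used in this comparison is $\mathrm{NSE} = 1 - \sum_t (Q_{\text{obs},t} - Q_{\text{sim},t})^2 / \sum_t (Q_{\text{obs},t} - \bar{Q}_{\text{obs}})^2$, where $\bar{Q}_{\text{obs}}$ is the mean observed discharge. A value of 1 is a perfect fit, 0 means the simulation is no better than always predicting the observed mean, and negative values are worse than that. A minimal implementation (the function name is our own):

```python
def nash_sutcliffe(observed, simulated):
    """Nash-Sutcliffe efficiency of a simulated series against observations.

    NSE = 1 - (sum of squared errors) / (sum of squared deviations of the
    observations from their mean).
    """
    if len(observed) != len(simulated) or not observed:
        raise ValueError("need two non-empty series of equal length")
    mean_obs = sum(observed) / len(observed)
    sse = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    spread = sum((o - mean_obs) ** 2 for o in observed)
    if spread == 0:
        raise ValueError("observed series is constant; NSE is undefined")
    return 1.0 - sse / spread


print(nash_sutcliffe([1, 2, 3, 4], [1, 2, 3, 5]))  # 0.8
```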

Although this work is still at the basic research stage, we believe it is an important first step, and we hope it can already be useful to other researchers and hydrologists. It is an incredible privilege to be part of the large ecosystem of researchers, governments and non-governmental organizations working to reduce the harm caused by floods. We are excited about the potential impact of this kind of research and look forward to seeing where it leads.