From Beginner to Style Icons: how we made awards in 2GIS

Every day, 2GIS users help us keep our data accurate: they report new companies, add traffic events, upload photos and write reviews. Previously, we could only thank them in words or run a giveaway. But words are forgotten over time, and not everyone wins a gift. So we decided to make sure that everyone who cares about 2GIS can see their contribution to the product and our gratitude for it.

That is how awards appeared: virtual medals that we grant for various kinds of tasks, such as uploading photos to cafe cards, writing reviews of theaters, specifying the working hours of organizations and so on. Users see the awards they have earned in their personal 2GIS profile and on the “My 2GIS” tab in the mobile application. There we also show how much is left until the next achievement.

To implement this feature, we learned to process a stream of events with a volume of 500 thousand records per hour (at times up to 50 thousand records per second) and to analyze data from several services. We also added a little metaprogramming to simplify configuration when developing new awards.

Together with Rapter, we'll tell you what is under the hood of the awards.

Concept

To appreciate the complexity of the feature, you need to know how the technical problem was stated. After that we will look at the implementation idea and the general layout of the system components. That is what this section covers.

Requirements in brief

Requirements are rather boring, so we will not go through every nuance and will concentrate on the most important points:

- awards are issued only to authorized users;

- progress on an award should be updated as quickly as possible;

- an award is the result of a user performing a set of actions in the product: uploading a photo, writing a review, finding directions, etc., so there are many source systems.

Architectural idea

The implementation idea is not very complicated. It can be expressed in a few points:

- an award consists of tasks, whose results are combined according to a formula specified when the award is configured;

- a task reacts to incoming events about user actions, filters them and records the change in progress in the form of counters;

- external events are generated by master systems (photo, review and data-correction services, etc.) or by auxiliary services that transform or filter existing event streams;

- events are processed asynchronously, and processing can be stopped at any time if necessary;

- the user sees the current state of their awards;

- everything else is details.

Key Entities

The diagram below shows the main entities of the domain and their relationships:

Two zones are distinguished in the diagram:

- Scheme - the zone that describes the structure of awards and the rules for granting them;

- Data - the zone that holds the awards of specific users and the data related to their current state.

The entities in the diagram:

- Achieve - information about an award that can be earned. Includes meta-information and a description of how to combine the results of tasks (a strategy).

- Objective - a task whose conditions must be met in order to advance toward the award.

- UserAchieve - the current state of an award for a particular user.

- UserObjective - the current state of an award task for a particular user.

- User - information about the user, needed for notifications and for knowing the user's current status (deleted and banned users do not need awards).

- ProcessingLog - a log of accruals for tasks. Contains information about how a specific action affected the progress of a task.

- Event - the minimum necessary information about an event that affected the progress of a user's tasks.

Service structure

Now let us look at the main components of the service and their dependencies:

- Events Bus - the event bus that delivers the events used to advance tasks. We use Apache Kafka.

- Master and Slave DBs - the main data store. In our case, a PostgreSQL cluster.

- ConsumingWorkers - handlers of bus events. Their main job is to read events from a specific source (photos, reviews, etc.), apply them to user tasks and save the result.

- AchievesWorker - recalculates progress on user awards according to the state of the tasks.

- NotificationWorkers - a set of handlers that schedule and send notifications about earned awards, announcements of new possible achievements, etc.

- Public API - a public REST interface for the web and mobile applications.

- Private API - a REST interface for the admin panel, which helps with incident investigation and service support. It is available to developers and support teams.

Each component is isolated in terms of logic and area of responsibility, which avoids unnecessary integrations and deadlocks when modifying data. Below we consider only the part of the scheme that processes events and converts them into awards.

Event handling

Content

Awards are primarily a data aggregation service. Each master system generates several types of events. As a rule, each type of event is closely tied to the state of the content, that is, to its status model. For example, a photo can be under moderation, deleted, blocked, hidden or active. All of these are different events, and they are handled by a separate worker that specializes in a particular source. At the moment, the service interacts with the following sources (master systems):

- Photo - generates events related to operations that users perform on photos.

- Reviews - events related to operations on user reviews.

- Datafeedback - events related to data corrections. A correction is a change in information about an object on the map, whether it is a company or a monument.

- Check - events related to the 2GIS Check application.

- BSS - analytics events generated by 2GIS applications, for example opening a company card or driving a route with the navigator.

Events generated by a master system arrive in the Kafka topic in the order in which the statuses changed. This makes it possible not only to move a user's award progress forward, but also to roll it back. For example, if a photo was in the “active” status and then for some reason became “blocked”, the progress on the award should decrease. Award progress is an interpretation of internal objects called content counters.

Counters may differ for different data. For photo events they are the number of approved photos, the number under moderation and the number of blocked ones, while for card-opening events it is enough to count the cards opened by the user. Based on the current values of the content counters, the answers to the following questions are determined for a particular user within a particular award:

- Has the award been started?

- What is the progress?

- Is the award fully completed?
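To illustrate how counters can answer these three questions and still allow a rollback, here is a minimal sketch. The class `PhotoCounters`, its methods and the idea that an event carries both the previous and the new status are assumptions made for the example, not the service's actual code.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class PhotoCounters:
    """Hypothetical per-user, per-task counters for photo events."""
    by_status: Counter = field(default_factory=Counter)

    def apply(self, old_status, new_status):
        # A status change moves one photo from the old bucket to the new one,
        # so progress can go down as well as up.
        if old_status is not None:
            self.by_status[old_status] -= 1
        self.by_status[new_status] += 1

    def started(self):
        return self.by_status["active"] > 0

    def progress(self, goal):
        # Only approved ("active") photos count; progress is capped at the goal.
        return min(self.by_status["active"], goal)

    def completed(self, goal):
        return self.by_status["active"] >= goal


counters = PhotoCounters()
counters.apply(None, "moderation")        # photo 1 uploaded
counters.apply("moderation", "active")    # photo 1 approved
counters.apply(None, "moderation")        # photo 2 uploaded
counters.apply("moderation", "active")    # photo 2 approved
counters.apply("active", "blocked")       # photo 2 blocked later
print(counters.progress(goal=3))          # 1
```

Keeping one bucket per status, rather than a single number, is what makes the decrease possible: blocking a photo simply moves it out of the bucket that feeds the progress.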

Filters and Rules

The task counters of a particular award change only when an event arrives that has the right content type and carries the data required for the award.

To let through only the content that is suitable for the award, we run each event through a series of filters and rules.

A filter is a restriction imposed on content. It only answers the question: “Does the new event satisfy this condition or not?”

A rule is a special filter whose purpose is to answer: “If an event satisfies the condition, how should the counters change?” A rule includes the algorithm for changing the counters. Each award contains only one rule.

The implementation of filters and rules lives in the project code, while the description of which filters and which rule belong to a particular award is stored in the database in JSON format. We did not arrive at this design right away. Initially, filters and rules could not be configured through the database: an award was fully described in the code, and only its identifier was stored in a table. That design had a number of significant drawbacks:

- Supporting multiple environments was a problem. If you want to roll out one list of awards to the test environment and another to production, the project code has to know about the environment, or you need a configuration file with the list of awards. Meanwhile, the databases cannot be used for this, even though a separate one already exists for each environment.

- Only a developer could configure filtering. Since everything was described in the code, only a person who knew the project and the programming language could make changes. We wanted it to be possible simply through the Private API or the database.

- Inspection was inconvenient. There are many awards, and sometimes you need to see which filters they use. Reading the code every time is rather tedious.

When the application starts, we match the filters loaded from the database with their implementations by name and attach them to the corresponding award. Filter description example:

[ { "name":"SourceFilter", "config":{ "sources":["reviews"] } }, { "name": "ReviewsLengthFilter", "config": { "allowed_length": 100 } } ]

In this case, we accept only reviews (as specified by the first object in the filter array) whose text contains more than 100 characters (the second filter in the list).
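Matching filters by name at start-up can be sketched roughly as follows. The registry `FILTERS`, the `register` decorator, the `accepts` method and the event fields `source` and `text` are assumptions for the example; only the filter names and their configuration keys come from the description above.

```python
import json

FILTERS = {}  # filter name -> implementing class


def register(cls):
    """Class decorator: make a filter discoverable by its class name."""
    FILTERS[cls.__name__] = cls
    return cls


@register
class SourceFilter:
    def __init__(self, sources):
        self.sources = set(sources)

    def accepts(self, event):
        return event["source"] in self.sources


@register
class ReviewsLengthFilter:
    def __init__(self, allowed_length):
        self.allowed_length = allowed_length

    def accepts(self, event):
        # Assumed semantics: strictly more than `allowed_length` characters.
        return len(event.get("text", "")) > self.allowed_length


def build_filters(description):
    """Instantiate filters from the JSON stored with an award."""
    return [FILTERS[item["name"]](**item["config"])
            for item in json.loads(description)]


DESCRIPTION = """[
  {"name": "SourceFilter", "config": {"sources": ["reviews"]}},
  {"name": "ReviewsLengthFilter", "config": {"allowed_length": 100}}
]"""

filters = build_filters(DESCRIPTION)
event = {"source": "reviews", "text": "x" * 150}
print(all(f.accepts(event) for f in filters))  # True
```

With a registry like this, adding a filter to an award becomes a data change, while adding a new kind of filter still requires code.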

Example rule description:

{"name": "ReviewUniqueByObjectRule","config":{}}

This rule changes the counters only when the user has written a review of an object, and only one review per object is taken into account.
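A rule of this kind might look like the sketch below. The in-memory `seen` set, the `apply` signature and the event fields are assumptions; a real service would need to keep this state in durable storage rather than in process memory.

```python
class ReviewUniqueByObjectRule:
    """Count at most one review per object (hypothetical implementation)."""

    def __init__(self):
        self.seen = set()  # (user_id, object_id) pairs already counted

    def apply(self, event, counters):
        key = (event["user_id"], event["object_id"])
        if key in self.seen:
            return False  # a second review of the same object changes nothing
        self.seen.add(key)
        counters["reviews"] = counters.get("reviews", 0) + 1
        return True


rule = ReviewUniqueByObjectRule()
review_counters = {}
rule.apply({"user_id": 1, "object_id": "cafe-1"}, review_counters)
rule.apply({"user_id": 1, "object_id": "cafe-1"}, review_counters)  # ignored
rule.apply({"user_id": 1, "object_id": "cafe-2"}, review_counters)
print(review_counters)  # {'reviews': 2}
```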

BSS

Working with the stream of BSS events deserves a separate discussion. There are at least three reasons for this:

- Analytics events cannot be rolled back because they have no status model. This is logical: driving with the navigator or building a route cannot be undone. The action either happened or it did not.

- Volumes. Let me remind you that the total audience of 2GIS is 50+ million users per month. Together they make more than 1.5 billion search queries, as well as many other actions: launching the application, viewing an object's card, etc. At the peak, the number of events can reach 50,000 per second. We must pass all of this through filters in order to grant awards to users.

- Analytics events have their own peculiarities: several formats and a wide variety of types.

All of this strongly affected how data from the BSS topic is processed: if we want progress to update in near real time, the processing time must be correspondingly small.

To smooth out these differences, we created a separate service that prepares such events. It can work with the whole variety of message formats coming from analytics. In essence, it reads the entire BSS event stream and takes from it only the events that are needed for Awards. This filtering service significantly reduces the load on the Awards BSS-stream handler (after filtering, the flow is ≈300 events per second). It also emits events in a single format, which offsets the drawbacks inherited from the history of internal analytics.
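The idea of such a pre-filter can be shown with a short sketch. The two raw formats, the field names, the event types in `NEEDED_TYPES` and the decision to drop anonymous events at this stage are all invented for illustration; the actual analytics formats are not described here.

```python
NEEDED_TYPES = {"card_open", "route_finished"}  # hypothetical event types


def normalize(raw):
    """Map two made-up analytics formats onto one shape, or return None."""
    if "eventType" in raw:                      # hypothetical "new" format
        event_type, user = raw["eventType"], raw.get("userId")
    elif "type" in raw:                         # hypothetical "legacy" format
        event_type, user = raw["type"], raw.get("uid")
    else:
        return None
    if event_type not in NEEDED_TYPES or user is None:
        return None                             # not needed, or anonymous user
    return {"source": "bss", "type": event_type, "user_id": user}


def prefilter(stream):
    """Lazily yield only the normalized events the awards service needs."""
    for raw in stream:
        event = normalize(raw)
        if event is not None:
            yield event


raw_stream = [
    {"eventType": "card_open", "userId": 7},
    {"type": "app_start", "uid": 7},            # dropped: type not needed
    {"type": "route_finished", "uid": None},    # dropped: anonymous user
    {"type": "route_finished", "uid": 9},
]
print(list(prefilter(raw_stream)))
```

Because the filter is a generator, it never holds the stream in memory, which matters when the input rate is orders of magnitude higher than the output rate.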

Awards

So, we have figured out how to handle events and calculate progress on tasks. Now it is time to look at how awards are issued to users.

The first question that arises is: why move award issuing to a separate worker? Can't progress be recalculated while processing each event? The answer: it is possible, but not worth it.

There are several reasons for moving award issuing to a separate process:

- If the recalculation is moved into each ConsumingWorker, we get a race condition when updating award progress: each handler will try to update the progress based on the task state it knows, while the others are actively changing that state.

- Each ConsumingWorker processes events from Kafka in batches within a transaction. Adding an insert into the user awards table would cause extra locks at the database level, which would slow down the other handlers.

- Issuing awards involves sending notifications, which would only slow down the processing of the event stream, and that is undesirable.

That settles why a separate AchievesWorker (the award-issuing handler) exists. Now we need to deal with two important parts of the processing:

- An award has a set of tasks, and the tasks have a set of counters. How do we determine how far the award has been completed, and how do we express this in code?

Example: you need to write 3 reviews or upload 3 photos. The user has 1 review and 2 photos. What is the progress of the award? Answer: 3, because the user will naturally assume that 3 items in total are needed.

- We have a separate handler for issuing awards. Recalculating several dozen awards for every authorized user each time, that is, several tens of millions of awards, is unlikely to be fast. How can the handler learn which users have changed progress, and on which tasks, since the last run?

We will consider each part separately.

Flow of progress

To better understand how task progress can be transformed into award progress, let us divide the awards into categories and look at the transformation for each.

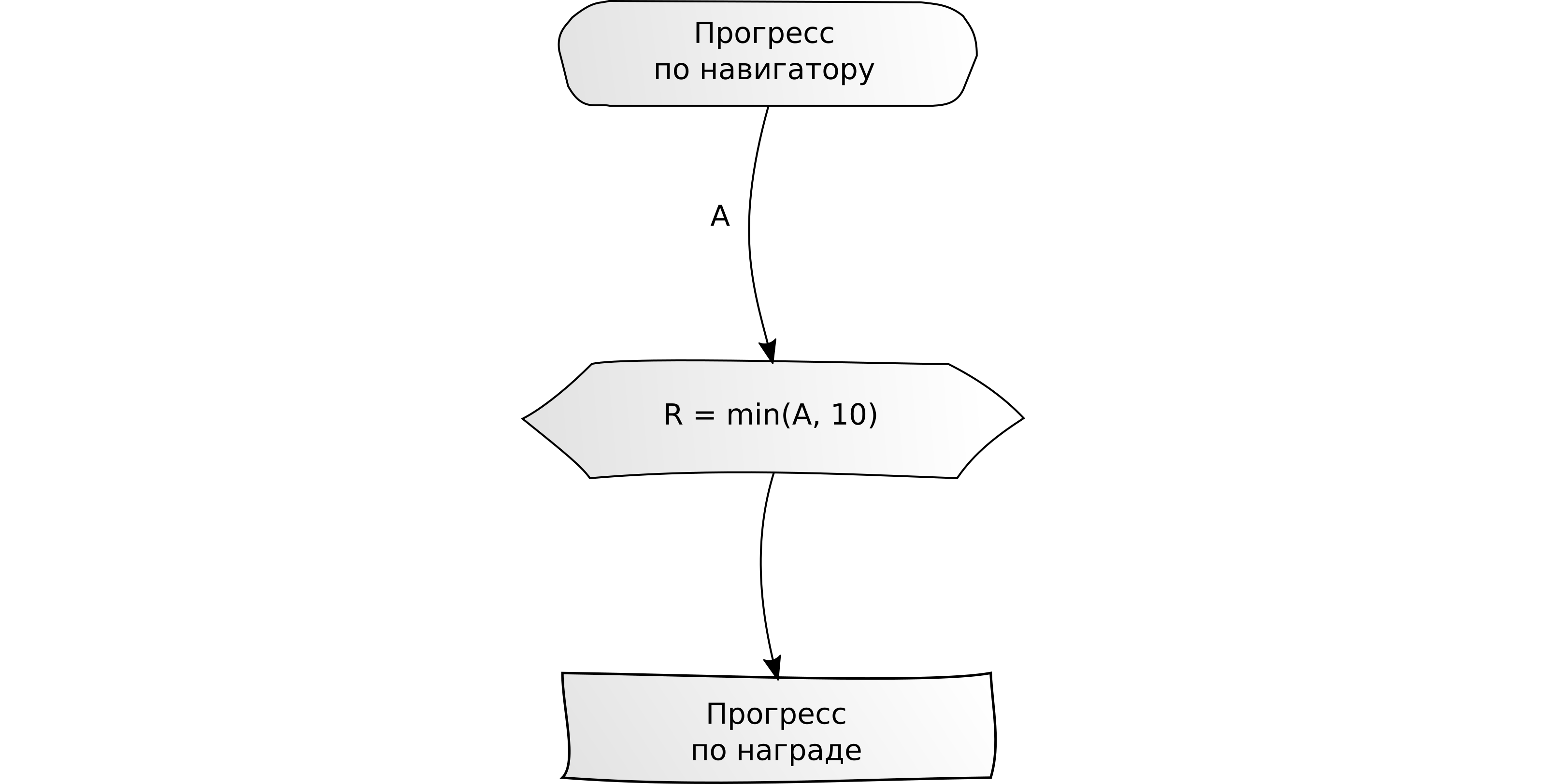

“Complete one task for X units.” Example: drive 10 km with the navigator.

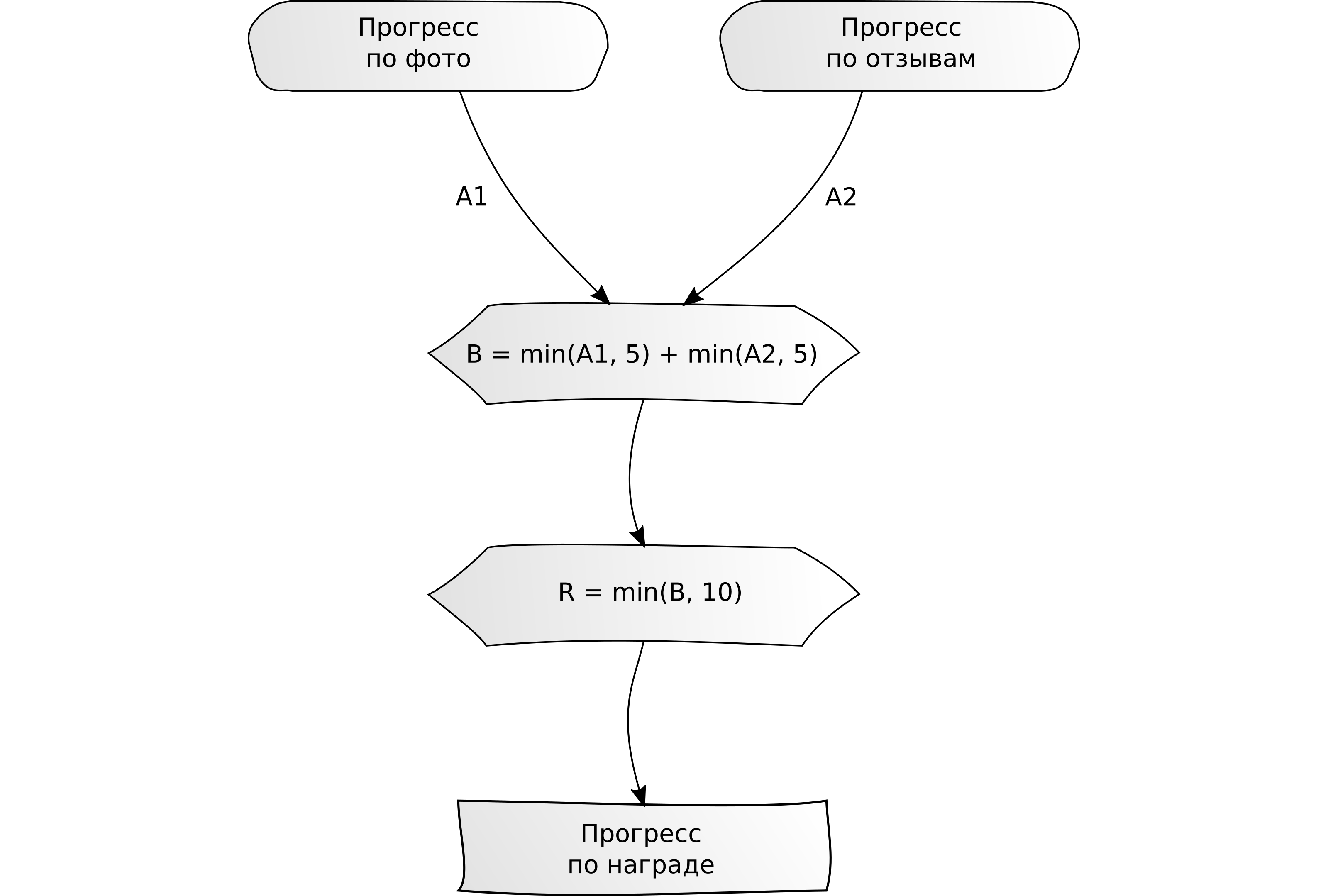

"Complete several tasks for X units each." Example: upload 5 photos and write 5 reviews in cards - only 10 pieces of content.

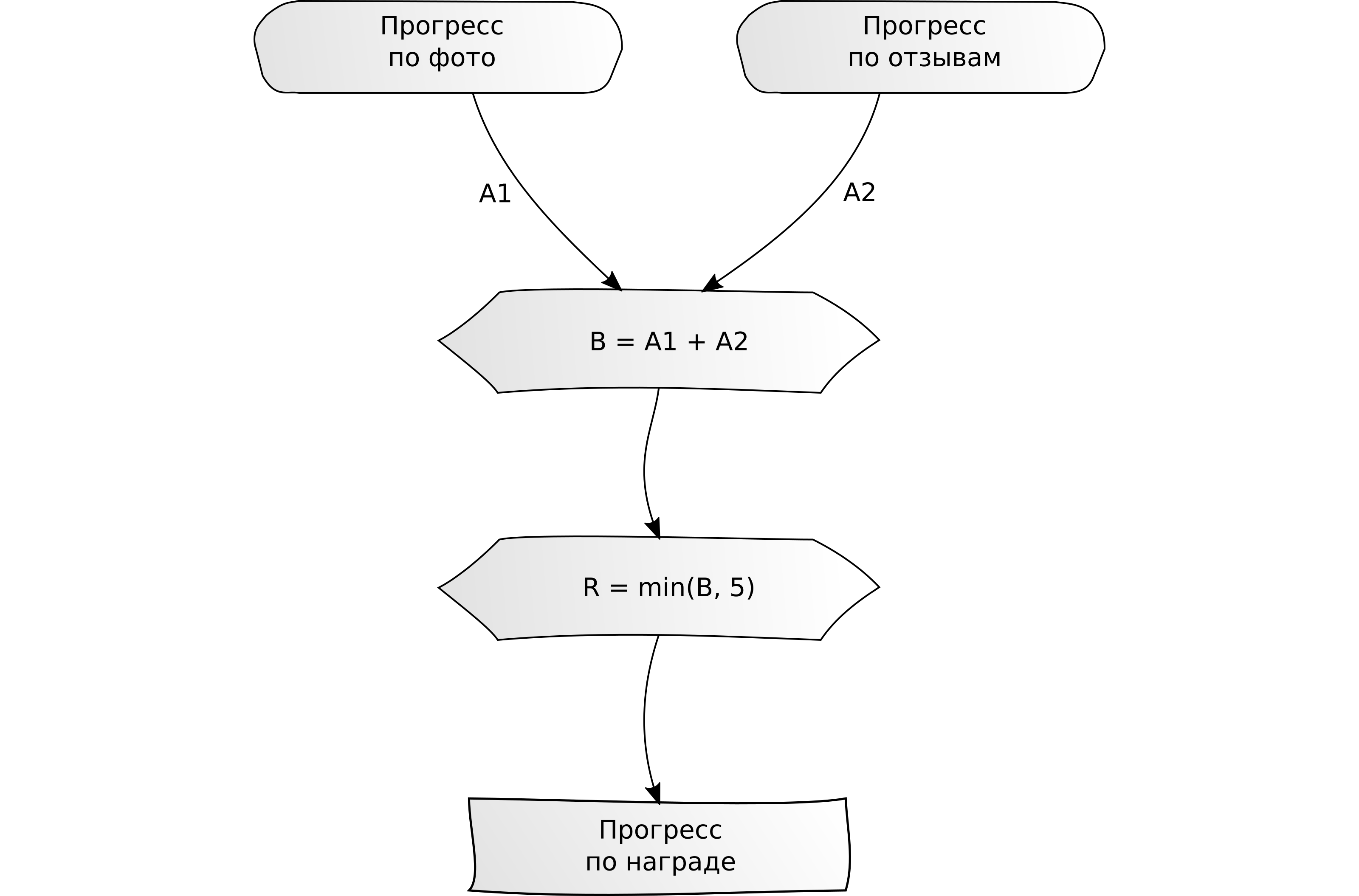

"Complete several tasks for X units in total." Example: write 5 reviews or upload 5 photos.

“Complete several tasks grouped by type.” Example: upload 5 units of content (photos or reviews) and drive 10 km on the navigator.

In theory, there could be more complex nested combinations. In practice, however, it is impossible to explain to a user in two or three sentences a complex logical combination that must be satisfied to receive the award. So in most cases these options are enough.

We called the conversion method a strategy and tried to make it more or less universal by working out a formal description in the form of a JSON object. We could, of course, have written it as a formula, but then we would have had to use something like eval or describe a grammar and implement it, which is clearly overcomplicated. Storing the strategy in the source code for each award is not very convenient either: the description of the award would be split (part in the database, part in the code), and it would also prevent assembling awards from ready-made components in the future without involving developers.

The strategy is represented as a tree, where each node:

- Either refers to the current progress of a task or is a group of other nodes.

- May have an upper bound, which in effect means applying min().

- May have a normalization factor, used for simple conversions that multiply the result by a number. It came in handy for converting meters to kilometers.

One operation, sum, is enough to describe the examples above. Sum works well for showing the user progress as a single number, but other operations can be used if desired.

Here is an example strategy description for the last category:

{ "goal": 15, "operation": "sum", "strategy": [ { "goal": 5, "operation": "sum", "strategy": [ { "objective_id": "photo" }, { "objective_id": "reviews" } ] }, { "goal": 10, "operation": "sum", "strategy": [ { "objective_id": "navi", "normalization_factor": 0.001 } ] } ] }
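A recursive evaluation of such a tree could look like the sketch below. The function `evaluate`, the `OPERATIONS` table and the order of steps (normalize first, then cap with `goal`) are assumptions; the JSON itself is the example from the text.

```python
import json

STRATEGY = json.loads("""
{ "goal": 15, "operation": "sum", "strategy": [
    { "goal": 5, "operation": "sum", "strategy": [
        { "objective_id": "photo" }, { "objective_id": "reviews" } ] },
    { "goal": 10, "operation": "sum", "strategy": [
        { "objective_id": "navi", "normalization_factor": 0.001 } ] } ] }
""")

OPERATIONS = {"sum": sum}  # other operations could be registered here


def evaluate(node, progress):
    """Return the progress of a strategy node given per-task progress values."""
    if "objective_id" in node:
        value = progress.get(node["objective_id"], 0)
    else:
        children = [evaluate(child, progress) for child in node["strategy"]]
        value = OPERATIONS[node["operation"]](children)
    value *= node.get("normalization_factor", 1)
    if "goal" in node:
        value = min(value, node["goal"])  # the upper bound of the node
    return value


# 4 photos, 3 reviews and 2500 m driven: min(4 + 3, 5) + min(2.5, 10)
print(evaluate(STRATEGY, {"photo": 4, "reviews": 3, "navi": 2500}))  # 7.5
```

The cap on the inner group keeps extra photos from compensating for missing kilometers: the content group contributes at most 5 of the 15 units.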

Required Updates

Several handlers continuously analyze user events and apply changes to task progress. Regularly scanning all users for every award would mean analyzing several tens of millions of awards, which is not very encouraging given that the real updates number in the thousands. How can we learn about just those thousands without spending CPU on millions?

The idea of how to recalculate progress only for the awards that have actually changed came fairly quickly. It is based on vector clocks.

Before describing it, a reminder of the entities involved:

- UserObjective - the user's progress on a task of an award.

- UserAchieve - the user's progress on an award.

The implementation looks like this:

- We add a version field to UserObjective and UserAchieve and create a sequence in PostgreSQL.

- Each update of a UserObjective entity changes its version. The value is taken from the sequence (it is shared by all records).

- The version of a UserAchieve is defined as the maximum of the versions of the associated UserObjective records.

- On each processing cycle, AchievesWorker looks for UserObjective records for which there is no UserAchieve or for which UserAchieve.version < UserObjective.version. This is done with a single query to the database.
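The steps above can be tried out in a small self-contained sketch. It uses an in-memory SQLite database and a Python iterator instead of PostgreSQL and its sequence, and the table layout, column names and the query are assumptions rather than the production schema.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE user_objective (user_id INT, achieve_id INT, objective_id TEXT,
                             progress INT, version INT,
                             PRIMARY KEY (user_id, achieve_id, objective_id));
CREATE TABLE user_achieve (user_id INT, achieve_id INT, version INT,
                           PRIMARY KEY (user_id, achieve_id));
""")

version_seq = iter(range(1, 10**9))  # stand-in for a PostgreSQL sequence


def touch_objective(user_id, achieve_id, objective_id, progress):
    """Save task progress and stamp the row with the next global version."""
    db.execute("INSERT OR REPLACE INTO user_objective VALUES (?, ?, ?, ?, ?)",
               (user_id, achieve_id, objective_id, progress, next(version_seq)))


STALE = """
SELECT o.user_id, o.achieve_id, MAX(o.version) AS version
FROM user_objective o
LEFT JOIN user_achieve a
       ON a.user_id = o.user_id AND a.achieve_id = o.achieve_id
WHERE a.version IS NULL OR a.version < o.version
GROUP BY o.user_id, o.achieve_id
ORDER BY o.user_id, o.achieve_id
"""


def recalculate_stale():
    """Find awards whose tasks changed and bring their version up to date."""
    stale = db.execute(STALE).fetchall()
    for user_id, achieve_id, version in stale:
        # Recalculating award progress with the strategy would happen here.
        db.execute("INSERT OR REPLACE INTO user_achieve VALUES (?, ?, ?)",
                   (user_id, achieve_id, version))
    return stale


touch_objective(1, 100, "photo", 1)
touch_objective(2, 100, "photo", 3)
print(recalculate_stale())  # both awards are new, so both are returned
print(recalculate_stale())  # [] because nothing changed since the last cycle
touch_objective(1, 100, "reviews", 1)
print(recalculate_stale())  # only user 1's award is returned
```

In this sketch the cost of a cycle depends on how well the join and the version comparison are indexed, not on the number of awards that actually changed.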

It is worth noting right away that the solution has limits on the number of rows in the award and task tables, as well as on the frequency of task progress changes. Still, with a couple of tens of millions of awards and fewer than a thousand updates per minute, it is quite possible to live with it. Some day we will tell separately how we optimized award issuing for the “2GIS Agents” contest.

Conclusions

Although the article turned out to be quite long, many nuances remained behind the scenes, since they cannot be covered briefly.

What we have learned from building Awards:

- The “divide and conquer” principle played into our hands here. Having a separate event handler for each source helps us scale when necessary. The handlers are isolated by data and overlap only in small areas. Moving the award logic out reduces overhead in the event handlers.

- If you need to digest a lot of data and processing is fairly expensive, think right away about how to filter out what is definitely not needed. Our experience with filtering the BSS stream is an example.

- Once again we were convinced that integrating services through a common event bus is very convenient and avoids unnecessary load on other services. If the Awards service received data from the Photo, Reviews and other services through HTTP requests, several services would have to be prepared for additional load.

- A bit of metaprogramming can help keep the configuration data consistent and separate environments as needed. Storing filters, rules and strategies in the database simplified the development and release of new awards.
